- Optimizing BDLS For HANA Large Scale Instances (HL...


former_member32


10-05-2021

3:47 PM

Introduction:

The purpose of this blog post is to give an overview of how we handled the BDLS process during refreshes of HANA Large Scale Instances. I will try to share as much information as possible (all of it is already publicly available, though perhaps not in a single place) and show how we used it to build a solution.

The Motivation:

All SAP Basis consultants have had to deal with the BDLS process at some point in their careers, but it becomes a challenge and a pain when the size of the DB runs to several TBs. The process then needs many hours or even days to run.

We faced similar challenges with refreshes of HANA Large Scale Instances (HLIs): BDLS was eating up most of the time and left little or no buffer for unforeseen issues. Our existing process had some level of optimization, but the runtime was still huge. There was therefore a need to find a better optimized solution.

The Challenge:

Current Process:

We used a manual BDLS process for the HLI landscape instead of the standard BDLS (which is only suitable for smaller systems, for example those under 1 TB).

This manual run involved running BDLS at two levels:

- SAP: All tables for a conversion are run except for the 20 pre-identified large tables

- SQL: Parallel execution of SQL statements on the splits of those 20 large tables as "UPDATE TOP" commands. This step took the most time

Limitations:

Some of the limitations of the current process were:

- The effort to generate the SQL statements was huge and complex. Multiple things had to be done manually to get the required SQL statements

- The time taken for the process was huge. Even assuming all SQL statements run in parallel, it takes a minimum of 25 hours for the smallest of the HLIs

- The generated update statements for a specific table cannot be run in parallel, i.e. the second update statement for a table cannot start until the first one for that table completes

- We handle the conversion of a single logical system name (LSN); if multiple LSNs had to be converted, the runtime would multiply by the number of conversions

- Overall timing can go up to ~96 hours, i.e. around 4 days

The Concepts:

I went through various blog posts and SAP notes to see what could be done, and one blog post written in 2012 was exactly what I was looking for. You can read that blog post here.

Although that blog post relates to Oracle, it gave great insights into how to tackle this problem. It suggested using the Create Table As Select (CTAS) method instead of running UPDATE queries on the existing tables (which is what our SQL statements and the standard BDLS run do).

But Why CTAS?:

- CTAS is also the approach used on other DBs like Oracle to reduce the overall BDLS runtime, using “decode” or “case” statements for the conversion

- It works because SELECT queries run faster than UPDATE queries

- It has the potential to reduce the actual conversion runtime of a table by > 90% and the overall runtime of BDLS as a step by more than 60-70% (considering the additional activities to be performed)

The author of that blog post had used "decode" or "case" statements to build a process. HANA has no DECODE function, so I went with the MAP function, which does the same job on HANA DB.

MVP of the solution: HANA's MAP Function

The MAP function replaces one value with another during a query. If you run a SELECT or an INSERT with MAP in it, along the lines of "if you find LOST, replace it with FOUND", the result of that query has FOUND everywhere the field on which MAP was used contained LOST.

Syntax: MAP(<FIELDNAME>, '<ORIGINAL>', '<REPLACEMENT>', <FIELDNAME>) "<FIELDNAME>"

- The first <FIELDNAME> tells the function which field to work on.

- <ORIGINAL> and <REPLACEMENT> are self-explanatory.

- The second <FIELDNAME> is the default: where the ORIGINAL value is not found, the field value is left as is, i.e. unchanged. We only want the source LSN to change; every other LSN must keep its value.

- The third and last <FIELDNAME> (in double quotes) is a column alias: it keeps the field's own name in the output instead of a name derived from the MAP expression.
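To make the role of the default argument concrete, here is a small Python emulation of the MAP semantics described above. It is only an illustration, not HANA code: `hana_map` is a hypothetical helper, and the "no match and no default gives NULL" behaviour reflects my understanding of the HANA documentation, so please verify it on your release.

```python
def hana_map(value, *args):
    """Emulate MAP(expr, search1, result1, ..., [default]) in plain Python.

    Hypothetical helper for illustration only; None stands in for SQL NULL.
    """
    # An odd number of trailing arguments means the last one is the default.
    if len(args) % 2:
        pairs, default = args[:-1], args[-1]
    else:
        pairs, default = args, None
    # Walk the (search, result) pairs and return the first match.
    for search, result in zip(pairs[0::2], pairs[1::2]):
        if value == search:
            return result
    return default


# With the field itself as default, only the source LSN is changed.
for logsys in ("SRCCLNT500", "OTHERLSN"):
    print(logsys, "->", hana_map(logsys, "SRCCLNT500", "ITWORKED", logsys))
```

Leaving out the default in this emulation turns every non-matching value into `None`, which is why the second <FIELDNAME> matters in the real query.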

Example:

A simple select query:

select * from T000

Result:

MANDT,MTEXT,ORT01,MWAER,ADRNR,CCCATEGORY,CCCORACTIV,CCNOCLIIND,CCCOPYLOCK,CCNOCASCAD,CCSOFTLOCK,CCORIGCONT,CCIMAILDIS,CCTEMPLOCK,CHANGEUSER,CHANGEDATE,LOGSYS

"500","LSN DESCRIPTION","Waldorf","EUR","","C","1","","","","","","","","DDIC","20211107","SRCCLNT500"

Query with MAP:

select MANDT,MTEXT,ORT01,MWAER,ADRNR,CCCATEGORY,CCCORACTIV,CCNOCLIIND,CCCOPYLOCK,CCNOCASCAD,CCSOFTLOCK,CCORIGCONT,CCIMAILDIS,CCTEMPLOCK,CHANGEUSER,CHANGEDATE,MAP(LOGSYS, 'SRCCLNT500', 'ITWORKED',LOGSYS) "LOGSYS" from T000

Result:

MANDT,MTEXT,ORT01,MWAER,ADRNR,CCCATEGORY,CCCORACTIV,CCNOCLIIND,CCCOPYLOCK,CCNOCASCAD,CCSOFTLOCK,CCORIGCONT,CCIMAILDIS,CCTEMPLOCK,CHANGEUSER,CHANGEDATE,LOGSYS

"500","LSN DESCRIPTION","Waldorf","EUR","","C","1","","","","","","","","DDIC","20211107","ITWORKED"

We can use the MAP function in a CTAS query as shown in the following example:

CREATE TABLE T000_COPY AS (SELECT MANDT,MTEXT,ORT01,MWAER,ADRNR,CCCATEGORY,CCCORACTIV,CCNOCLIIND,CCCOPYLOCK,CCNOCASCAD,CCSOFTLOCK,CCORIGCONT,CCIMAILDIS,CCTEMPLOCK,CHANGEUSER,CHANGEDATE,MAP(LOGSYS, 'SRCCLNT500', 'ITWORKED',LOGSYS) "LOGSYS" from T000)

A new table is thus created from the existing table, but with the LSN value changed to ITWORKED. The only thing left is to rename the _COPY table to the original table name.

I chose the above examples to showcase two things: first, how the MAP function works, and second, how MAP is used in a conversion. We need the whole list of fields to get the right result if we want to create a copy of an existing table. If we shorten the query to just the field in question, such as LOGSYS, the output contains only that field and a full copy of the table cannot be created.
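Since the full field list has to be spelled out for every table, generating the statement is easier than typing it. Below is a minimal Python sketch of how such a CTAS statement could be assembled. The function name `build_ctas`, its parameters and the `_COPY` suffix default are my own illustration here, not the exact script used in the project.

```python
def build_ctas(table, fields, lsn_fields, source_lsn, target_lsn, suffix="_COPY"):
    """Build a CTAS statement that wraps the BDLS-relevant fields in MAP.

    Hypothetical helper: 'fields' is the full ordered field list of the table,
    'lsn_fields' the subset that holds logical system names.
    """
    select_list = []
    for field in fields:
        if field in lsn_fields:
            # Replace the source LSN, keep every other value, keep the column name.
            select_list.append(
                f"MAP({field}, '{source_lsn}', '{target_lsn}', {field}) \"{field}\""
            )
        else:
            select_list.append(field)
    columns = ", ".join(select_list)
    return f"CREATE TABLE {table}{suffix} AS (SELECT {columns} FROM {table})"


# Shortened field list for readability; a real run needs every field of the table.
print(build_ctas("T000", ["MANDT", "MTEXT", "LOGSYS"], {"LOGSYS"},
                 "SRCCLNT500", "ITWORKED"))
```

The sketch does no escaping of names or values, so it is only suitable for trusted input such as field lists read from the system itself.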

So some of the most important questions now are:

- How to get the tables in real time for each system individually?

- How to get the fields in each table to build these queries?

- What about tables that are partitioned?

- What about indexes on tables?

Brief answers to the above questions are as below:

Q: How to get the tables in real time for each system individually?

Ans: We can use the same logic that SAP uses for its BDLS run, i.e. querying table TBDBDLSALL for BDLS-relevant tables. So I created an SQL query with multiple JOINs to find the largest tables in the system and then identify which of them are relevant for BDLS conversion (based on which tables match the ones in TBDBDLSALL).

I then ran sorting logic on the shortlisted tables to get the tables that have records with a particular LSN in BDLS-relevant fields like LOGSYS, AWSYS, LOGSYS_EXT etc., which can be found from tables like TBDBDLSUKEY & TBDBDLSUARG.

Q: How to get the fields in each table to build these queries?

Ans: I used R3ldctl to generate STR files for each table in the output (i.e. the tables that result from the first answer's logic). I then used the fields listed there to generate my query, with the MAP function applied to the BDLS-relevant fields.

Q: What about tables that are partitioned?

Ans: We use the first answer's output to check which tables are partitioned and generate separate CREATE & INSERT queries for them. Partitioned tables are converted in two steps instead of the single CREATE TABLE used for non-partitioned tables, so that we benefit from the parallelism the partitions allow. We therefore CREATE an empty table with the same number of partitions as the source and then run INSERTs in parallel, one per partition. This is shown for VBAP below in the conclusion.
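The two-step handling of partitioned tables can be sketched in Python as below. Please treat the SQL it generates as assumptions to verify on your own HANA release: it assumes that `CREATE TABLE ... LIKE ... WITH NO DATA` keeps the partitioning of the source table and that a `PARTITION (n)` restriction in the FROM clause reads a single source partition. The helper name `build_partitioned_conversion` and the `_COPY` suffix are hypothetical.

```python
def build_partitioned_conversion(table, select_list, partition_count, suffix="_COPY"):
    """Return (create_statement, insert_statements) for a partitioned table.

    Hypothetical helper: 'select_list' is the full column list in table order,
    with MAP already applied to the BDLS-relevant fields.
    """
    target = f"{table}{suffix}"
    # Step 1: empty copy, assumed to inherit the source partitioning.
    create = f"CREATE TABLE {target} LIKE {table} WITH NO DATA"
    # Step 2: one INSERT per source partition; these can be run in parallel.
    inserts = [
        f"INSERT INTO {target} SELECT {select_list} FROM {table} PARTITION ({number})"
        for number in range(1, partition_count + 1)
    ]
    return create, inserts


# Shortened column list for readability; a real run needs every field of VBAP.
columns = "MANDT, VBELN, POSNR, MAP(LOGSYS, 'SRCCLNT500', 'TGTCLNT500', LOGSYS) \"LOGSYS\""
create, inserts = build_partitioned_conversion("VBAP", columns, 2)
print(create)
for statement in inserts:
    print(statement)
```

Each INSERT is independent of the others, which is what allows one session per partition.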

Q: What about indexes on tables?

Ans: A table can have multiple indexes. These indexes need to exist on the converted table once the steps (CTAS, renaming the original table to a backup and then renaming the converted table to the original name) are completed. So the indexes need to be dropped from the original table and recreated later on the newly converted table with their original names. Dropping the indexes from the original table also reduces its size on disk and reduces the rename time.

I used the stored procedure get_object_definition in the script to extract the DDL statements for all indexes on a table and then used them to recreate the indexes later.
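As a rough sketch of this index handling, the Python helper below generates the statements involved: a lookup of index names in the INDEXES system view, one GET_OBJECT_DEFINITION call per index to capture its DDL, and the matching DROP INDEX statements. The helper name, the schema `SAPSR3` and the index names are hypothetical examples, and whether a given index (for example one backing the primary key) can be dropped this way should be checked on your system.

```python
def index_statements(schema, table, index_names):
    """Return (lookup_query, extract_calls, drop_statements) for a table's indexes.

    Hypothetical helper; names are quoted because SAP index names contain '~'.
    """
    # Query to list the indexes of the table from the system view.
    lookup = (
        "SELECT INDEX_NAME FROM INDEXES "
        f"WHERE SCHEMA_NAME = '{schema}' AND TABLE_NAME = '{table}'"
    )
    # One call per index to capture its DDL before it is dropped.
    extract = [
        f"CALL GET_OBJECT_DEFINITION('{schema}', '{name}')" for name in index_names
    ]
    drop = [f'DROP INDEX "{schema}"."{name}"' for name in index_names]
    return lookup, extract, drop


lookup, extract, drop = index_statements("SAPSR3", "VBAP", ["VBAP~Z01", "VBAP~Z02"])
print(lookup)
print("\n".join(extract + drop))
```

The DDL returned by the extract calls has to be saved before the drops are executed, since it is the only source for recreating the indexes afterwards.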

The Solution:

The solution uses HANA's built-in CTAS capability along with the R3ldctl tool to complete the process of convert, drop, rename & rebuild:

- Convert the selected tables using CTAS in HANA and store it as a temporary table

- Drop the indexes on the original table

- Rename the original table to a backup table and rename converted temp table to original table

- Rebuild the dropped indexes on the newly converted and renamed table

We can drop the original tables after validation to save additional disk usage.
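The drop, rename and rebuild steps listed above have to run in a fixed order for each table, which the following Python sketch makes explicit. It assumes the conversion (CTAS) has already produced the temporary table and that the index DDL was captured beforehand; the function name and the `_COPY` / `_BKP` suffixes are hypothetical.

```python
def swap_statements(table, index_names, index_ddl,
                    copy_suffix="_COPY", backup_suffix="_BKP"):
    """Return the ordered statements that swap a converted copy into place.

    Hypothetical helper: 'index_ddl' holds the CREATE INDEX statements that
    were extracted from the original table before this sequence starts.
    """
    # 1. Drop the indexes on the original table.
    steps = [f'DROP INDEX "{name}"' for name in index_names]
    # 2. Keep the original as a backup and move the converted copy into place.
    steps.append(f"RENAME TABLE {table} TO {table}{backup_suffix}")
    steps.append(f"RENAME TABLE {table}{copy_suffix} TO {table}")
    # 3. Recreate the indexes, now on the converted table, with their old names.
    steps.extend(index_ddl)
    return steps


ddl = ['CREATE INDEX "VBAP~Z01" ON VBAP (MANDT, MATNR)']  # illustrative DDL only
for statement in swap_statements("VBAP", ["VBAP~Z01"], ddl):
    print(statement)
```

Dropping the backup table is deliberately left out of the sequence, since it should only happen after validation.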

For Non-Partitioned tables the process can be depicted as below:

For Partitioned tables the process can be depicted as below. It is important to note that, as mentioned before, the tables are created empty and the data is then inserted into all partitions in parallel.

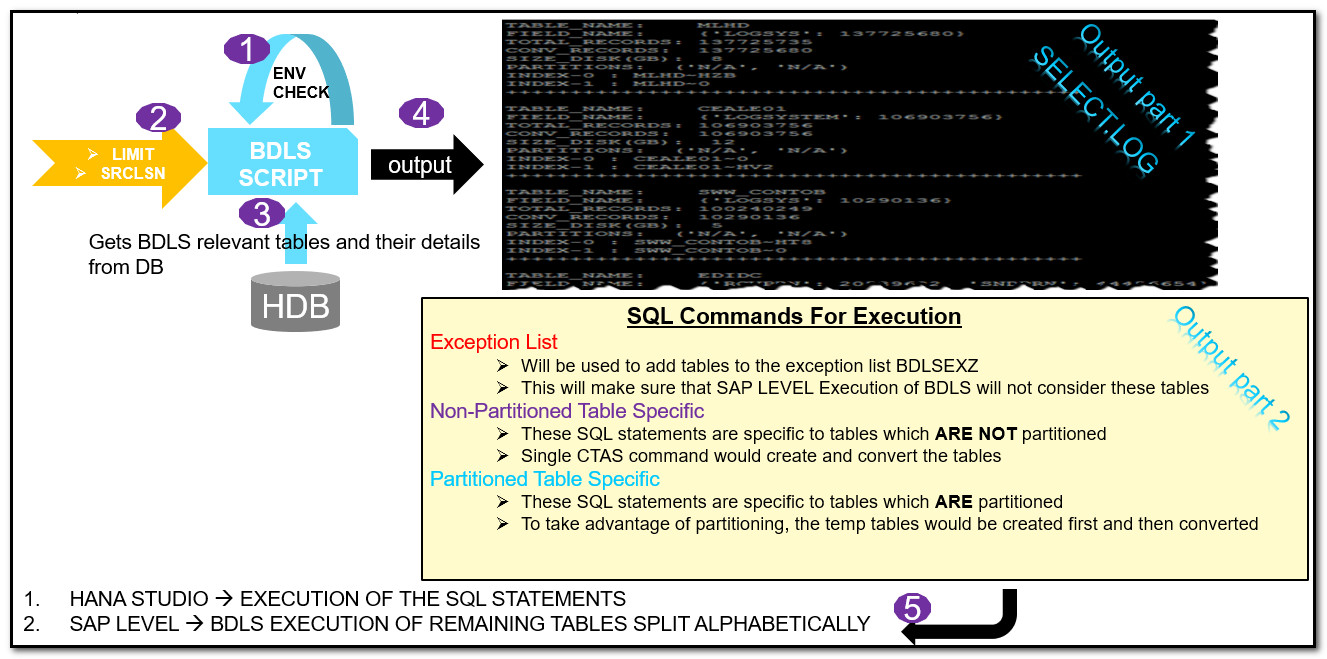

After scripting all the logic the overall flow of the BDLS process was as below:

The script took 3 inputs, namely:

- Limit = the number of records a table must have to be included in the selection

- Source LSN = used to find the relevant tables containing this LSN, with their record counts checked against the limit input

- Target LSN = once we are fine with the selection, we give the target LSN to generate the scripts

Once the script finishes (in around 20 minutes for a system with 25+ TB), we have:

- List of tables

- Total records in the table

- Relevant records in the table for the source LSN

- All indexes for relevant tables

- Different sql commands for partitioned and non-partitioned tables
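The selection part of the script can be pictured with a small Python sketch like the one below. The input structure (a list of dictionaries with `table`, `total` and `relevant` keys), the function name and the numbers in the example are made up for illustration; in the real script these values came from queries against the system.

```python
def select_tables(table_stats, limit):
    """Pick tables for SQL-level conversion.

    Hypothetical helper: keeps tables with at least 'limit' records that also
    contain records for the source LSN, largest tables first.
    """
    chosen = [
        row for row in table_stats
        if row["total"] >= limit and row["relevant"] > 0
    ]
    return sorted(chosen, key=lambda row: row["total"], reverse=True)


# Made-up numbers, for illustration only.
stats = [
    {"table": "EDIDC", "total": 400_000_000, "relevant": 390_000_000},
    {"table": "VBAP", "total": 1_000_000_000, "relevant": 950_000_000},
    {"table": "ZSMALL", "total": 10_000, "relevant": 10_000},
    {"table": "ZNOLSN", "total": 900_000_000, "relevant": 0},
]
for row in select_tables(stats, limit=100_000_000):
    print(row["table"], row["total"], row["relevant"])
```

Because the selection is separate from the statement generation, it can be repeated with different limits until the list of tables looks right.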

Now we can put all the tables selected for special handling in the exception table BDLSEXZ and run the SQL queries via HANA Studio or hdbsql. In parallel, we run the SAP-level BDLS as multiple runs split alphabetically from A* to Z*.
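Running the per-table SQL in parallel can also be scripted. The Python sketch below shows one way to do it with a thread pool: each table's statements stay in their own script and run in order, while several tables run side by side. It is an assumption-laden outline, not the tooling we used: `run_script` is a placeholder for whatever actually executes a script (for example a function that calls hdbsql), and the file names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def run_in_parallel(scripts, run_script, max_workers=8):
    """Run one script per table, at most 'max_workers' tables at a time.

    'run_script' is a caller-supplied function taking a script name and
    returning its result (hypothetical placeholder for the real executor).
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() keeps the results in the same order as the input scripts.
        results = list(pool.map(run_script, scripts))
    return dict(zip(scripts, results))


def fake_runner(script_name):
    # Stand-in for the real execution; it only reports what it was given.
    return f"done: {script_name}"


print(run_in_parallel(["VBAP.sql", "EDIDC.sql", "VBRK.sql"], fake_runner, max_workers=2))
```

Keeping all statements of one table in a single script preserves their order, so the parallelism is only across tables.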

The Conclusion:

This process gave us the following advantages:

- CTAS, thanks to the inherent advantage of SELECT queries being faster than UPDATEs, made the overall conversion very fast

- Partitioning on the tables is used to achieve in-table parallelism in the convert phase for those tables

- SAP-level execution time is reduced significantly because 1) all major tables are converted using the script and 2) the remaining tables are run in alphabetical splits

- The script's two-part output lets us run the selection part multiple times until we get the desired result, before generating the relevant SQL commands

- The script can be modified to convert multiple LSNs in a single go. Since all replacements happen in the same pass over the table, no additional time is required to convert multiple LSNs

- The scope for further automation is quite high, with a basic target of reducing human intervention to almost zero
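The multi-LSN point follows from MAP accepting several search/replacement pairs in one call, so one pass over the table can cover all of them. A minimal Python sketch of generating such an expression is shown below; the function name and the LSN values are hypothetical.

```python
def build_multi_map(field, lsn_pairs):
    """Build one MAP expression that converts several LSNs in a single pass.

    Hypothetical helper: 'lsn_pairs' is a list of (source, target) tuples.
    """
    pairs = ", ".join(f"'{source}', '{target}'" for source, target in lsn_pairs)
    # The field itself is the default, so unlisted LSNs stay unchanged.
    return f'MAP({field}, {pairs}, {field}) "{field}"'


print(build_multi_map("LOGSYS", [("SRCCLNT500", "TGTCLNT500"),
                                 ("SRCCLNT600", "TGTCLNT600")]))
```

The resulting expression simply replaces the single-pair MAP in the select list of the CTAS or INSERT statement.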

Each person normally ran around 7-8 tables in parallel, and with two people working together around 16 tables were converted in parallel. Of these, a snapshot of the timing for VBAP is shown below (it was the longest-running table).

It had two partitions of 500 million+ records each, and the INSERTs were run in parallel, one per partition. This gave a runtime of ~1 hr 13 mins for the LSN conversion and ~47 mins for the rest of the statements, such as the renames and index recreation. The total runtime was therefore ~2 hours for VBAP, where more than a billion records were converted. Note that in those 2 hours many other large tables, like EDIDC, VBRK etc., were also converted in parallel.

VBAP Run

Total runtime: Another point to note is that these results were consistent across multiple runs and include the total time for the complete BDLS, i.e. SAP level plus SQL level, including the script run time for preparation.

So, all in all, the solution was designed and implemented by combining the MAP function, SQL JOINs, R3ldctl, the logic for finding BDLS-relevant tables and, finally, Python to code all of this.

I hope this blog post has provided some valuable insights into how BDLS on HANA can be tackled with existing tools to get astonishing results, and has shown how the MAP function works and how it can be leveraged to change LSNs without the need for an UPDATE query. The process and features built were specific to our use case, where I included some additional functionality in order to get the most flexibility out of the solution with limited manual intervention.

Readers of this blog post can definitely use the concepts explained here to enhance BDLS conversions on their own HANA systems, even if they are not large scale instances. For example, they can use a fixed set of tables with pre-built MAP queries to handle them at SQL level and, in parallel, run BDLS at SAP level with those tables excluded.

Thank you for spending your time reading this. I would welcome any suggestions, feedback or questions related to the blog post in the comments section.

Cheers,

Vishesh Sood
