
This is part 3 of a series discussing distributed scenarios for SAP Cloud Integration (CPI).

- Part 1: How to crash your iflows and watch them failover beautifully (DR for CPI with Azure FrontDoor)

- Part 2: Second round of crashing iFlows in CPI and failing over with Azure – even simpler (DR for CPI with Azure Traffic Manager)

- Part 3: Black Friday will take your CPI instance offline unless… (HA for CPI)

Dear community,

Processing millions of messages with iFlows per day can already be a challenge. But getting hit by this order of magnitude within a short time frame (3..2..1..aaand 8.00am Black Friday discounts are LIVE!!!) with a default CPI setup will likely be devastating, even though there is no rubble or actual smoke involved. Well, maybe just smoke coming out of the ears of those poor admins fighting the masses to get the integration layer functional again 😉

CPI is part of Integration Suite and is nowadays called “SAP Cloud Integration”. Nevertheless, the term CPI is widely used and is a much more distinctive acronym than CI. Therefore, I keep using it here.

CPI can scale by adding additional worker nodes to your runtime. This is usually done via Support Ticket (autoscaling within one deployment is part of the roadmap). Nevertheless, this is capped by a maximum number of nodes that can be added to your tenant. What if that is not enough?

Today we will investigate orchestrating multiple CPI tenants on Azure to scale beyond the worker node limit and put you in control to add instances on the fly as you see fit. We will shed light on the challenges of asynchronous messaging and processing each message only once, synching artifacts across tenants, and sharing config to avoid redundancy.

I am building on my last posts, which covered redundancy and failover strategy for SAP CPI in the CloudFoundry (CF) environment using Azure FrontDoor and Traffic Manager. Also, I’d like to reference Santhosh’s post on his take on running multiple CPI instances.

Fig.1 Collection of consumers threatening your integration layer with a stampede of purchases

Let’s get you out of that mess.

The moving parts to make it happen

As mentioned in the intro, there are several angles to consider when using multiple CPI instances in parallel for scalability and high availability. In my other posts we assumed that only one CPI instance is active and serves as primary. The secondary only becomes active if the primary is no longer reachable. The traffic gets re-routed and always flows through one instance only. That approach is often referred to as an active-passive setup.

That is fundamentally different from the active-active setup we are discussing in this post today. We need to solve the following challenges to succeed.

Present messages to CPI tenant pool in a way to process exactly once

With multiple flows we either need to be able to distribute messages reliably and potentially restart on failure, or equip the receiver to deal with multiple identical messages coming in. Many SAP targets cannot deal with redundant messages. Therefore, we should tackle this at the CPI tenant level.

We distinguish between three types of triggers for our iFlows.

- Asynchronous messages with receive acknowledgement only

- Synchronous messages with a blocked thread until fully processed by the target receiver

- Timer-based flows.

Fig.2 CPI asynch processing with Azure Service Bus

To achieve resilient asynchronous messaging, we need a message bus to supply the messages (in order if required!) and keep them until acknowledged. The bus ensures that only one CPI instance processes the message in question. There are various options on the market, like RabbitMQ or the BTP-native solution SAP Event Mesh, to implement such an approach. In our case all components are deployed on Azure, and we are looking for a managed solution. Therefore, it makes sense to use Azure Service Bus. It has built-in request throttling, so we can react to unexpectedly steep request increases and protect downstream services, for instance until we can bring another CPI tenant online.

CPI offers an AMQP sender adapter. With that we can honour the described required properties regarding speed, throughput, scalability, and reliable messaging when pulling from the service bus.
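To make the "keep until acknowledged" and "only one instance processes the message" ideas more tangible, here is a minimal in-memory sketch of peek-lock delivery with duplicate detection. It is a toy model and not Azure Service Bus or the CPI AMQP adapter: `ToyQueue`, `lock_seconds` and the method names are made up for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Message:
    message_id: str
    body: str
    locked_until: float = 0.0  # epoch seconds; 0 means "not locked"


@dataclass
class ToyQueue:
    """In-memory stand-in for a broker queue with peek-lock semantics (hypothetical)."""
    lock_seconds: float = 30.0
    _messages: list = field(default_factory=list)
    _seen_ids: set = field(default_factory=set)

    def send(self, message_id: str, body: str) -> bool:
        # Duplicate detection: ignore a message whose ID was already accepted.
        if message_id in self._seen_ids:
            return False
        self._seen_ids.add(message_id)
        self._messages.append(Message(message_id, body))
        return True

    def receive(self, now: Optional[float] = None) -> Optional[Message]:
        # Hand out the oldest message that is not locked by another consumer.
        now = time.time() if now is None else now
        for msg in self._messages:
            if msg.locked_until <= now:
                msg.locked_until = now + self.lock_seconds
                return msg
        return None

    def complete(self, msg: Message) -> None:
        # Acknowledge: only now does the message leave the queue.
        self._messages.remove(msg)
```

While one consumer holds the lock, a second consumer calling `receive` gets nothing for that message. If the first consumer crashes before calling `complete`, the lock expires and the message is handed out again. That redelivery is why the flow behind the queue should still tolerate seeing a message twice.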

Find more info on how to configure your service bus with HA features and how to brace against outages in the Azure docs.

Fig.3 CPI synch processing with Traffic Manager

With synchronous messages life becomes a looooot easier. We can directly hit the endpoint. For effective routing and high availability, we need to distribute messages cleverly though. In our case we applied Azure Traffic Manager, which offers round-robin, priority, performance, and geo-based algorithms. If all our CPI instances run in the same BTP region, it makes most sense to apply performance-based routing to always choose the quickest route and cater for bottlenecks on the individual instance.
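As a rough illustration of the difference between round-robin and performance-based selection, consider the simplified sketch below. It is not how Traffic Manager works internally (it answers DNS queries and keeps its own latency data). The endpoint names, latency numbers and function names are assumptions for the example.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def round_robin(endpoints: List[str]) -> Iterator[str]:
    # Hands out endpoints in turn, regardless of how busy they are.
    return cycle(endpoints)


def fastest_healthy(latency_ms: Dict[str, float], healthy: Dict[str, bool]) -> str:
    # Performance-style choice: lowest latency among endpoints that pass the health probe.
    candidates = {ep: ms for ep, ms in latency_ms.items() if healthy.get(ep, False)}
    if not candidates:
        raise RuntimeError("no healthy CPI endpoint available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    rr = round_robin(["cpi-a", "cpi-b"])
    print([next(rr) for _ in range(3)])
    print(fastest_healthy({"cpi-a": 40.0, "cpi-b": 25.0}, {"cpi-a": True, "cpi-b": True}))
```

The health flag matters as much as the latency: an endpoint that responds quickly but fails its probe must never be selected.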

To apply request throttling as we did for the asynch calls, we need to introduce an API Management component. You can choose from SAP-native options in SAP's Integration Suite or Azure API Management, for instance. This step is optional but increases the robustness of your setup by giving downstream services time to recover, or by giving you time to onboard an additional CPI tenant.

Because Traffic Manager acts on the DNS level, we need a custom domain setup on CF. Alternatively, you can apply Azure FrontDoor to avoid that requirement. To learn more about the various load balancing components, have a look at this Azure docs entry. I personally also found this blog useful.
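Request throttling is commonly implemented with something like a token bucket. The following is a generic sketch of that technique under my own assumptions, not the policy engine of SAP API Management or Azure API Management. The class and parameter names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    rate_per_second: float   # how fast the bucket refills
    capacity: float          # maximum burst size
    tokens: float = 0.0
    last_refill: float = 0.0  # timestamp of the previous call, in seconds

    def allow(self, now: float) -> bool:
        # Refill according to elapsed time, capped at the bucket capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_second)
        self.last_refill = now
        # Spend one token per request; reject when the bucket is empty.
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

A rejected call would typically be answered with HTTP 429 so that the sender can retry later, which buys the downstream systems the breathing room described above.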

About time we look at the last trigger type. Timely, isn’t it? All right, all right, I’ll stop in time 😉

Fig.4 CPI timer-based processing with LogicApps

For this trigger type we can apply the same mechanism as for synchronous messages to distribute tasks. In addition to that we need a process that can be scheduled. In the Azure ecosystem LogicApps are a good fit for the task. On the BTP side you can have a look at the BTP Automation Pilot for an SAP-native approach to kick off the trigger via Azure Traffic Manager.

Ok, so now we established how to call the multi-instance integration flows. How about their shared artifacts like credentials for downstream services? And finally: how do you keep the iFlows in synch across the multiple CPI tenants?

Synch artifacts across CPI tenant pool

In many places iFlows and associated artifacts are moved manually by export/import from the Dev to Prod instance. That bears tremendous risk when you run multiple CPI tenants in production. We need to apply an automated approach to overcome this. SAP offers built-in transporting with the BTP Transport Management Service (TMS). There is also CTS+ and MTAR download. Check the note 265197 for more details.

The high availability setup in its simplest form would have one CPI dev tenant and at least two productive instances, and would serve them with updates only via transporting to ensure a consistent state.

Having more than one dev instance would create a “split brain” problem, because you cannot merge via the transporting and would potentially mess with version numbers etc. Furthermore, dev tenants usually don’t require the same magnitude of processing capability that made us apply this high availability concept to our prod instances in the first place.
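A small consistency check can back up the "updates only via transporting" rule. The sketch below compares artifact versions per tenant against a reference and reports any drift. It assumes you have already collected the versions (for example from the CPI public OData API). The function name, tenant names and version strings are hypothetical.

```python
from typing import Dict, List, Tuple


def find_drift(
    reference: Dict[str, str],
    tenants: Dict[str, Dict[str, str]],
) -> List[Tuple[str, str, str, str]]:
    """Return (tenant, artifact, expected_version, actual_version) for every mismatch."""
    drift = []
    for tenant, artifacts in sorted(tenants.items()):
        for artifact_id, expected in sorted(reference.items()):
            actual = artifacts.get(artifact_id, "<missing>")
            if actual != expected:
                drift.append((tenant, artifact_id, expected, actual))
    return drift


if __name__ == "__main__":
    reference = {"OrderFlow": "1.0.3", "InvoiceFlow": "2.1.0"}
    tenants = {
        "prod-1": {"OrderFlow": "1.0.3", "InvoiceFlow": "2.1.0"},
        "prod-2": {"OrderFlow": "1.0.2"},
    }
    for row in find_drift(reference, tenants):
        print(row)
```

An empty result means all productive tenants run the same artifact versions. Anything else is a signal to re-run the transport rather than to fix a tenant by hand.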

Multiple dev instances in different BTP Azure regions for redundancy and disaster recovery purposes are a different story though. Check out my earlier posts for that.

Fig.5 Synch artifacts across CPI tenants with TMS

Considering high availability for the transport component, you might favour a true DevOps approach and global services like GitHub Actions over a single instance of TMS on BTP. GitHub Actions, Azure DevOps Pipelines or Jenkins, however, need to integrate with the CPI public APIs and cannot be triggered from the CPI Admin UI. Have a look at one of my older posts on DevOps practices with Azure Pipelines for CPI for reference.

For the credential “sharing” across tenants we apply the same notion of abstracting the component as before to distribute the content.

Fig.6 Event-driven CPI credential sharing via KeyVault

For a proper setup you want to maintain and update the credentials for CPI in a central, resilient, and highly available place. Azure KeyVault is a great fit for that task as it is replicated across Azure regions by design and integrates natively with Azure EventGrid. That allows you to push out credential changes automatically. Finally, you integrate with the CPI Security Content API. LogicApps are a straightforward option to perform the HTTP calls. Find the template on the GitHub repos.
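For readers who prefer code over a LogicApp designer, the fan-out step could look roughly like the sketch below. It only builds the HTTP requests and does not send them. It assumes the secret is stored as a user credential and that the Security Content OData API accepts a PUT on `/api/v1/UserCredentials('<name>')` with the payload shown. Please verify path, payload and authentication against the official API reference. The host names, tokens and function name are placeholders.

```python
import json
from typing import Dict, List
from urllib.request import Request


def build_credential_updates(
    tenants: Dict[str, Dict[str, str]],  # host -> {"bearer": ..., "csrf": ...} (placeholders)
    name: str,
    user: str,
    password: str,
) -> List[Request]:
    """Prepare one PUT request per CPI tenant; nothing is sent here."""
    requests = []
    for host, tokens in tenants.items():
        # Assumed endpoint shape of the Security Content OData API.
        url = f"https://{host}/api/v1/UserCredentials('{name}')"
        payload = {"Name": name, "Kind": "default", "User": user, "Password": password}
        requests.append(
            Request(
                url,
                data=json.dumps(payload).encode("utf-8"),
                method="PUT",
                headers={
                    "Content-Type": "application/json",
                    "Authorization": f"Bearer {tokens['bearer']}",
                    "X-CSRF-Token": tokens["csrf"],
                },
            )
        )
    return requests
```

Each prepared request could then be sent with `urllib.request.urlopen`. Collecting the per-tenant results makes it easy to spot a tenant that missed the update.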

Fig.7 Screenshot of LogicApp pushing the new shared secret to registered CPI tenants (config scrapped for a “smaller” picture)

So, now every time you create a new version of your sharable secret (let’s say: “sap with azure rocks”) it gets pushed out to all configured CPI tenants. I provided an iFlow including a groovy script to read the secret “CPI-AUTH-SHARED”.

Et voilà, we get the confirmation that the secret has reached its destination.

Fig.8 Postman call verifying “pushed” secret on CPI tenants

For a BTP-native option you can have a look at the Credential Store Service. Again, the BTP service would be a single point of failure that would require explicit redundancy, and it can only serve pull-based scenarios.

Anyhow, formalizing and automating the propagation of CPI artifacts is key to achieving reliable and consistent updates.

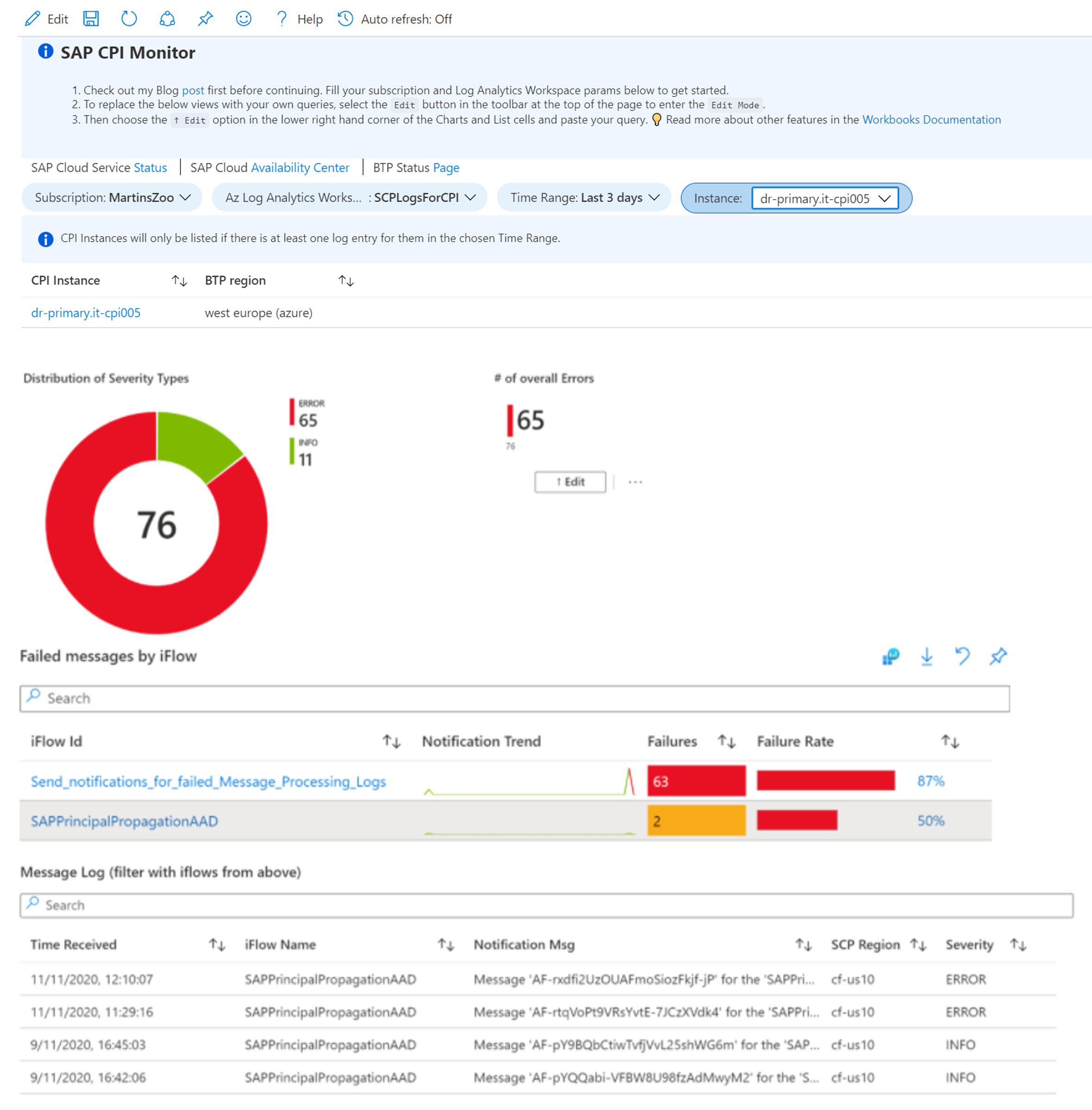

Streamline monitoring in one central place

From time to time CPI messages fail. How do we monitor and troubleshoot this effectively across our multitude of CPI prod tenants? To this day CPI monitoring works only on a tenant-by-tenant basis. So, we need to extract the logs and consolidate them in one central place ourselves. There are some popular solutions out there like Dynatrace or Splunk. In our case I created an Azure Workbook to apply Azure Monitor to the problem. All my components in this example run on Azure after all 😉
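The consolidation idea itself is simple and can be sketched independently of the monitoring tool. The example below builds a query for failed messages against the `MessageProcessingLogs` OData entity and merges the per-tenant results into one list. It assumes the log entries were already fetched and their timestamps normalised to ISO strings. The host names and function names are placeholders.

```python
from typing import Dict, Iterable, List
from urllib.parse import quote


def failed_logs_url(tenant_host: str) -> str:
    # OData filter for failed messages on a single tenant.
    odata_filter = "Status eq 'FAILED'"
    return (
        f"https://{tenant_host}/api/v1/MessageProcessingLogs"
        f"?$filter={quote(odata_filter)}&$format=json"
    )


def merge_failed(per_tenant: Dict[str, Iterable[dict]]) -> List[dict]:
    # Tag every entry with its tenant, then sort newest first across all tenants.
    merged = [
        dict(entry, Tenant=tenant)
        for tenant, entries in per_tenant.items()
        for entry in entries
    ]
    return sorted(merged, key=lambda e: e["LogEnd"], reverse=True)
```

Keeping the tenant name on every row is the important bit: once the logs sit in one place, that column is the only way to tell which instance actually failed.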

Fig.9 Govern CPI logs in a central place

Find a detailed description of the workbook in my earlier post.

Fig.10 Azure Workbook with consolidated monitoring capability for multiple CPI tenants

Thoughts on production readiness

There is an extensive list on the production readiness of this setup in the first post already. In addition to that, the mentioned managed Azure services have geo-redundancy built in. They are used to power services like Azure Portal, Dynamics365, Bing Search, XBox and the like.

Customers are already running multiple CPI instances for scalability reasons today. The provided guidance simply gives a structure to the various aspects of distributed processing with Integration Suite (specifically SAP Cloud Integration) discussed within the community.

Final Words

That was quite the ride! This post concludes the trilogy of running multiple CPI instances. During the first two posts we covered disaster recovery (DR) across BTP and Azure regions and applied different load balancing mechanisms (FrontDoor and Traffic Manager). Today’s write-up explained the difference between DR and high availability concepts for CPI and shed light on the synchronization, redundancy and race-condition problems that come with high availability.

The key to solving all these challenges is decoupling the components, because CPI has no concept for this. We saw SAP BTP native options to approach this as well as Microsoft managed ones on Azure.

Find the mentioned artifacts on my GitHub repos.

You are now fully equipped to survive the stampede of discount-hungry consumers hitting your integration infrastructure 😊 Do you agree?

#Kudos to iinside for all the interesting conversations around this.

As always, feel free to leave comments or ask lots of follow-up questions.

Best Regards

Martin

- SAP Managed Tags:

- SAP Integration Suite,

- Cloud Integration,

- SAP BTP, Cloud Foundry runtime and environment
