Scheduling Python code on Cloud Foundry


06-14-2021

11:33 AM

Do you have Python code that needs to run on a regular schedule? There are several options. Deploying it on Cloud Foundry in the SAP Business Technology Platform can be a good fit, particularly if the code does not do any heavy calculations. Other options are Kyma (for scalability and granular control) or SAP Data Intelligence, if your code is part of a broader data enrichment process.

This blog starts with a very simple example to schedule a Python file on Cloud Foundry, just to introduce the most important steps. That concept is then extended to schedule a Python file, which applies a trained Machine Learning model in SAP HANA.

Creating this blog was a team effort, together with sapalex and lesterc.lobo. Thanks for inspiration and support go to dimlyras and remi.astier.

Run Python file locally

Run the same Python file on Cloud Foundry

Schedule that Python file on Cloud Foundry

Schedule Python file to trigger SAP HANA Machine Learning

We would like to schedule a Python file, not a Jupyter Notebook. Hence use your preferred local Python IDE or editor to run this simple file, helloworld.py.

The only specialty of this code is that it is already able to detect, whether it is executed locally or in Cloud Foundry. This will be important later, for example when your code may need to securely access logon credentials for SAP HANA.

Now deploy this file on Cloud Foundry. If you haven't worked with Cloud Foundry yet, the "Getting started" guide is a great resource. The free trial of the SAP Business Technology Platform is sufficient for the scheduling.

To run and schedule the file in Cloud Foundry, some further configuration is required. In the space of your Cloud Foundry Environment:

Now prepare the app to be pushed to Cloud Foundry. In your local folder, in which the file helloworld.py is saved, create a file called runtime.txt that contains just this single line, which specifies the Python runtime environment Cloud Foundry should use. The Python buildpack release notes list the versions that are currently supported by Cloud Foundry.

Create another file called manifest.yml, with the following content. It specifies for example the name of the application, the memory limit for the app and it binds our new two services to the app.

Now push the app to Cloud Foundry. You require the Cloud Foundry Command Line Interface (CLI). In a command prompt, navigate to the folder that contains your local files (helloworld.py, manifest.yml, runtime.txt).

Login to Cloud Foundry, if you haven't yet.

Then push the app to Cloud Foundry. The --task flag ensures for instance that the app is pushed and staged, but not started. Without this flag Cloud Foundry would believe that the app crashed after the Python code has executed and terminated. It would therefore constantly restart the app, which we don't want in this case. In case your CLI doesn't recognise this flag, please ensure your CLI is at least on version 7.

Once pushed, the app is showing as "down".

Now run the application once as a task, from the command line. You can chose your own name for this task, "taskfromcli" has no specific meaning.

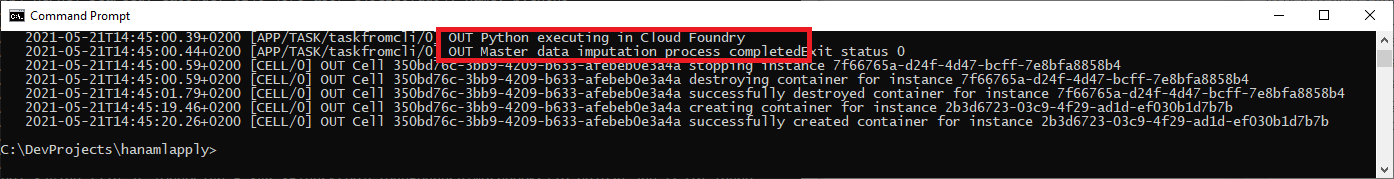

Confirm that the code ran as expected, by looking into the application log, which should include the hello statement.

And indeed, the code correctly identified that it is was running in Cloud Foundry. After outputting the code, the task ended and the container was destroyed.

So far we triggered the task manually in Cloud Foundry through the Command Line Interface. Now lets create a schedule for it. In the SAP Business Technology Platform, find the instance of the Job Scheduling Service, that was created earlier. Open its dashboard.

On the "Tasks" menu create a new task as shown on the screenshot.

Open the newly created task and in the "Schedules" tab you can create the schedule we are after. In the screenshot the application is set to run every hour. In case you specify a certain time, note that: "SAP Job Scheduling service runs jobs in the UTC time zone".

,

Click into the new schedule and on the left hand the "Run Log" shows the history of all runs.

You can also access the logs through the CLI as before.

With the understanding, how Python code can be scheduled in Cloud Foundry, let's put this to good use. Imagine you have some master data in SAP HANA, with missing values in one column. You want to use SAP HANA's Automated Predictive Library (APL) to estimate those missing values. All calculations will be done in SAP HANA. Cloud Foundry is just the trigger.

In this blog I will shorten the Machine Learning workflow to the most important steps. For this scenario:

Should you want to implement these steps, please check with your SAP Account Executive whether these steps are allowed by your SAP HANA license.

Some prerequisites:

Begin by uploading this data about the prices of used vehicles to SAP HANA. Our model will predict the vehicle type, which is not known for all vehicles. This dataset was compiled by scraping offers on eBay and shared as "Ebay Used Car Sales Data” on Kaggle".

In your local Python environment load the data into a pandas DataFrame and carry out a few transformations. Here I am using Jupyter Notebooks, but any Python environment should be fine.

Load that data into a SAP HANA table. The userkey is pointing to SAP HANA credentials that have been added to the hdbuserstore.

Before training the Machine Learning model, have a quick check of the data.

The are 371.528 entries in the table. For 37.869 the vehicle type is not known. Our model will estimate those values.

Focus on the cars for which the vehicle types are known. What are the different types and how often do they occur?

Limousines are most frequent, followed by "kleinwagen", which is German for a smaller vehicle.

Go ahead and train the Machine Learning model. This step will take a while, that's definitely a chance for a coffee, or rather lunch. On my environment it took about 20 minutes.

Apply the trained model on the test data and evaluate the model quality with a confusion matrix.

Save the trained model in SAP HANA, where the scheduled process can pick it up later.

To trigger the application of the trained model, follow the same steps as before when the helloworld app was deployed. You require 4 files all together. Place these in a separate local folder on your local environment.

File 1 of 4: main.py

This file uses the same logic of the earlier helloworld.py to identify whether it is executed locally or in Cloud Foundry. Depending on the environment it is retrieving the SAP HANA logon credentials either from the local hdbuserstore or from a user-defined variable in Cloud Foundry. Other authentication methods are possible, ie pbkdf2, ldap, saml, jwt, saplogon, kerberos, sessioncookie, and X509, but here we use the password.

The trained machine learning model is then used to predict the missing values. The strongest predictions are saved in the VEHICLTETYPE_ESTIMATED table in SAP HANA. Again, no data ever leaves SAP HANA.

Run the file locally and you should see an update that the process completed successfully.

Before pushing this application to Cloud Foundry, you must create the remaining 3 files in the same folder.

File 2 of 4: manifest.yml

No surprises here, along the same lines as before.

File 3 of 4: runtime.txt

Exactly as before.

File 4 of 4: requirements.txt

This file is new. It instructs the Python environment in Cloud Foundry to install the hana_ml library. When running your own project, additional libraries might need to be added to this file. Please see the Installation Guide for package dependencies.

From your local command line push the app to Cloud Foundry.

Before running the app, store the SAP HANA logon credentials in a user-defined variable in Cloud Foundry. The name of the variable (here "HANACRED") has to match with the name used in the above main.py. Port 443 is the port of SAP HANA Cloud. If you are using the CLI on Windows, the following syntax should work. If you are on a Mac, you might need to put double quotes around the JSON parameter.

Hint for Linux: A customer reported, that setting the HANACRED variable on Linux, requires single quotes around the JSON content. The same might apply for macOS. Thanks for the feedback!

From here, it's exactly the same steps as before. Run the task from the CLI if you like.

Check the log.

And indeed, the task completed successfully. The SAP HANA logon credentials were used to apply the trained model, to predict the missing data and to save the strongest predictions into the target table.

Back in the SAP Business Technology Platform, go to the Job Scheduling's Service's Dashboard and create a task for the hanamlapply app.

Create a schedule, lean back and the model is applied automatically!

If your Python code is running for longer than 30 minutes, you can adjust the Timeout in the Job Scheduling Service Dashboard accordingly.

Happy scheduling!

This blog starts with a very simple example to schedule a Python file on Cloud Foundry, just to introduce the most important steps. That concept is then extended to schedule a Python file, which applies a trained Machine Learning model in SAP HANA.

Creating this blog was a team effort, together with sapalex and lesterc.lobo. Thanks for inspiration and support go to dimlyras and remi.astier.

Table of contents

Run Python file locally

Run the same Python file on Cloud Foundry

Schedule that Python file on Cloud Foundry

Schedule Python file to trigger SAP HANA Machine Learning

Run Python file locally

We would like to schedule a Python file, not a Jupyter Notebook. Hence use your preferred local Python IDE or editor to run this simple file, helloworld.py.

```python
import os
import sys

outputstring = 'Hello world'

if os.getenv('VCAP_APPLICATION'):
    # File is executed in Cloud Foundry
    outputstring += ' from Python in Cloud Foundry'
else:
    # File is executed locally
    outputstring += ' from local Python'

# Print the output
sys.stdout.write(outputstring)
```

The only special feature of this code is that it already detects whether it is executed locally or in Cloud Foundry: it checks for the VCAP_APPLICATION environment variable, which Cloud Foundry sets in the app container. This will be important later, for example when your code needs to securely access logon credentials for SAP HANA.
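To check the environment detection without deploying anything, one option is to move it into a function that takes the environment mapping as a parameter. The sketch below is not the author's code; the function name build_greeting is hypothetical, and it relies only on the VCAP_APPLICATION check shown above.

```python
import os
import sys
from typing import Mapping


def build_greeting(env: Mapping[str, str]) -> str:
    """Return the greeting, depending on whether VCAP_APPLICATION is set."""
    greeting = 'Hello world'
    if env.get('VCAP_APPLICATION'):
        # Cloud Foundry sets VCAP_APPLICATION in the app or task container
        return greeting + ' from Python in Cloud Foundry'
    # Not set: assume a local run
    return greeting + ' from local Python'


if __name__ == '__main__':
    # os.environ is passed in, so tests can supply a plain dict instead
    sys.stdout.write(build_greeting(os.environ) + '\n')
```

Passing the environment in, rather than calling os.getenv inside the function, lets you test both branches on your own machine with a plain dictionary.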

Run the same Python file on Cloud Foundry

Now deploy this file on Cloud Foundry. If you haven't worked with Cloud Foundry yet, the "Getting started" guide is a great resource. The free trial of the SAP Business Technology Platform is sufficient for the scheduling.

To run and schedule the file in Cloud Foundry, some further configuration is required. In the space of your Cloud Foundry Environment:

- Create an instance of the Job Scheduler service as explained by carlos.roggan. I chose the same name for the service as he did: jobschedulerinstance

- Then create an instance of the Authorization & Trust Management (XSUAA) service, again as explained by Carlos. I used the same name as he did: xsuaaforsimplejobs. Once you have created that instance, you can come back to this blog. You do not need to create the Node.js application to continue here.

Now prepare the app to be pushed to Cloud Foundry. In your local folder, in which the file helloworld.py is saved, create a file called runtime.txt that contains just this single line, which specifies the Python runtime environment Cloud Foundry should use. The Python buildpack release notes list the versions that are currently supported by Cloud Foundry.

```
python-3.10.9
```

Create another file called manifest.yml with the following content. It specifies, for example, the name of the application and its memory limit, and it binds our two new services to the app.

```yaml
---
applications:
- name: helloworld
  memory: 512M
  command: python helloworld.py
  services:
  - xsuaaforsimplejobs
  - jobschedulerinstance
```

Now push the app to Cloud Foundry. You need the Cloud Foundry Command Line Interface (CLI) for this. In a command prompt, navigate to the folder that contains your local files (helloworld.py, manifest.yml, runtime.txt).

Log in to Cloud Foundry if you haven't already.

```
cf login
```

Then push the app to Cloud Foundry. The --task flag ensures that the app is pushed and staged, but not started. Without this flag, Cloud Foundry would consider the app crashed once the Python code has run and terminated, and it would keep restarting the app, which we don't want here. If your CLI doesn't recognize this flag, make sure your CLI is at least version 7.

```
cf push --task
```

Once pushed, the app is showing as "down".

Now run the application once as a task from the command line. You can choose your own name for this task; "taskfromcli" has no specific meaning.

```
cf run-task helloworld --command "python helloworld.py" --name taskfromcli
```
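If you would rather start the task from another Python script than type the command, a thin wrapper around the cf CLI is enough. This is a sketch assuming the cf CLI (version 7 or later) is on your PATH and you are already logged in; run_task_command and run_task are hypothetical helper names, and the arguments are exactly those of the command above.

```python
import shlex
import subprocess
from typing import List


def run_task_command(app: str, command: str, task_name: str) -> List[str]:
    """Build the argument list for `cf run-task`, mirroring the command above."""
    return ['cf', 'run-task', app, '--command', command, '--name', task_name]


def run_task(app: str, command: str, task_name: str) -> None:
    """Start the task via the cf CLI; raises CalledProcessError if cf fails."""
    subprocess.run(run_task_command(app, command, task_name), check=True)


if __name__ == '__main__':
    args = run_task_command('helloworld', 'python helloworld.py', 'taskfromcli')
    # Show the equivalent shell command; call run_task(...) to actually start it
    print(shlex.join(args))
```

Passing a list to subprocess.run, rather than one command string, avoids shell quoting issues with the space in "python helloworld.py".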

Confirm that the code ran as expected by looking at the application log, which should include the hello statement.

```
cf logs helloworld --recent
```

And indeed, the code correctly identified that it was running in Cloud Foundry. After writing its output, the task ended and the container was destroyed.
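The helloworld app is bound to two services through manifest.yml. Inside the container, Cloud Foundry describes bound services in the VCAP_SERVICES environment variable: a JSON object that maps each service label to a list of instances, each of which has a name. As a sketch, you could add a check like the following to the task to confirm both bindings. missing_bindings is a hypothetical helper; it matches on instance names, so it does not depend on the service labels. Run locally, it reports both services as missing, because VCAP_SERVICES is not set there.

```python
import json
import os
from typing import Iterable, List, Mapping


def missing_bindings(env: Mapping[str, str], expected: Iterable[str]) -> List[str]:
    """Return the expected service instance names that are not bound to the app."""
    # VCAP_SERVICES: {"<service label>": [{"name": "<instance name>", ...}, ...], ...}
    services = json.loads(env.get('VCAP_SERVICES', '{}'))
    bound = {instance.get('name') for instances in services.values() for instance in instances}
    return [name for name in expected if name not in bound]


if __name__ == '__main__':
    missing = missing_bindings(os.environ, ['xsuaaforsimplejobs', 'jobschedulerinstance'])
    print('Missing service bindings:', ', '.join(missing) if missing else 'none')
```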

Schedule that Python file on Cloud Foundry

So far we triggered the task manually through the Cloud Foundry Command Line Interface. Now let's create a schedule for it. In the SAP Business Technology Platform, find the Job Scheduling service instance that you created earlier and open its dashboard.

In the "Tasks" menu, create a new task as shown in the screenshot.

Open the newly created task; in the "Schedules" tab you can create the schedule we are after. In the screenshot, the application is set to run every hour. If you specify a particular time, note that "SAP Job Scheduling service runs jobs in the UTC time zone".
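Because the service works in UTC, a local start time has to be converted before you enter it. A minimal sketch using the standard zoneinfo module (Python 3.9 or later, with time zone data available on the system); the time zone Europe/Berlin and the dates are only examples, and to_utc is a hypothetical helper name.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def to_utc(local_time: datetime, tz_name: str) -> datetime:
    """Attach the IANA time zone tz_name to a naive local time and convert it to UTC."""
    return local_time.replace(tzinfo=ZoneInfo(tz_name)).astimezone(timezone.utc)


# Example: a run at 08:00 Berlin time on 14 June 2021 (summer time, UTC+2)
run_at = to_utc(datetime(2021, 6, 14, 8, 0), 'Europe/Berlin')
print(run_at.strftime('%Y-%m-%d %H:%M UTC'))  # 2021-06-14 06:00 UTC
```

The offset changes with daylight saving time, so a schedule fixed in UTC runs one hour earlier or later in local time after the switch (in January, 08:00 in Berlin is 07:00 UTC).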


Click into the new schedule; on the left-hand side, the "Run Log" shows the history of all runs.

You can also access the logs through the CLI as before.

```
cf logs helloworld --recent
```

Schedule Python file to trigger SAP HANA Machine Learning

Now that you know how Python code can be scheduled in Cloud Foundry, let's put this to good use. Imagine you have some master data in SAP HANA with missing values in one column. You want to use the Automated Predictive Library (APL) of SAP HANA to estimate those missing values. All calculations are done in SAP HANA; Cloud Foundry is just the trigger.

In this blog I will shorten the Machine Learning workflow to the most important steps. For this scenario:

- A Data Scientist / IT Expert will use Python to manually trigger the training of the Machine Learning model in SAP HANA and save the model in SAP HANA.

- Cloud Foundry then applies the model through a scheduled task and writes the predictions into a SAP HANA table.

Should you want to implement these steps, please check with your SAP Account Executive whether they are covered by your SAP HANA license.

Some prerequisites:

- You have access to an SAP HANA environment that has the Automated Predictive Library (APL) installed. This can be a productive SAP HANA Cloud environment; the SAP HANA Cloud trial does not include the embedded SAP HANA machine learning.

- Your logon credentials to SAP HANA are stored in your local hdbuserstore (see "Connect with secure password").

- You have the hana_ml library installed in your local Python environment.
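A quick way to confirm the Python part of these prerequisites is to check that the modules can be found in your local environment. This sketch uses only the standard library; missing_modules is a hypothetical helper, and it does not check the hdbuserstore entry or whether APL is installed in SAP HANA.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == '__main__':
    # hana_ml is the import name of the hana-ml package
    missing = missing_modules(['hana_ml', 'pandas'])
    print('Missing modules:', ', '.join(missing) if missing else 'none')
```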

Train the Machine Learning model

Begin by uploading this data about the prices of used vehicles to SAP HANA. Our model will predict the vehicle type, which is not known for all vehicles. The dataset was compiled by scraping offers on eBay and shared on Kaggle as "Ebay Used Car Sales Data".

In your local Python environment load the data into a pandas DataFrame and carry out a few transformations. Here I am using Jupyter Notebooks, but any Python environment should be fine.

```python
import hana_ml
import pandas as pd

df_data = pd.read_csv('autos.csv', encoding='Windows-1252')

# Column names to upper case
df_data.columns = map(str.upper, df_data.columns)

# Simplify by dropping a few columns
df_data = df_data.drop(['NOTREPAIREDDAMAGE', 'NAME', 'DATECRAWLED', 'SELLER',
                        'OFFERTYPE', 'ABTEST', 'BRAND', 'DATECREATED',
                        'NROFPICTURES', 'POSTALCODE', 'LASTSEEN',
                        'MONTHOFREGISTRATION'],
                       axis=1)

# Rename a few columns
df_data = df_data.rename(index=str, columns={'YEAROFREGISTRATION': 'YEAR',
                                             'POWERPS': 'HP'})

# Add ID column
df_data.insert(0, 'CAR_ID', df_data.reset_index().index)
```

Load that data into an SAP HANA table. The userkey points to SAP HANA credentials that have been added to the hdbuserstore.

```python
import hana_ml
import hana_ml.dataframe as dataframe

conn = dataframe.ConnectionContext(userkey='HANACRED_LOCAL', encrypt=True, sslValidateCertificate=False)

df_remote = dataframe.create_dataframe_from_pandas(connection_context=conn,
                                                   pandas_df=df_data,
                                                   table_name='USEDCARPRICES',
                                                   force=True,
                                                   replace=False)
```

Before training the Machine Learning model, have a quick check of the data.

```python
import hana_ml.dataframe as dataframe

conn = dataframe.ConnectionContext(userkey='HANACRED_LOCAL', encrypt=True, sslValidateCertificate=False)
df_remote = conn.table("USEDCARPRICES")
df_remote.describe().collect()
```

There are 371,528 entries in the table. For 37,869 of them the vehicle type is not known; our model will estimate those values.
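To put these two figures in proportion, the arithmetic below uses only the numbers reported above.

```python
total_rows = 371_528    # entries in USEDCARPRICES
missing_type = 37_869   # entries without a vehicle type

known_type = total_rows - missing_type
missing_share = missing_type / total_rows

print(f'{known_type:,} rows with a known vehicle type')  # 333,659 rows with a known vehicle type
print(f'{missing_share:.1%} of rows need an estimate')   # 10.2% of rows need an estimate
```

So roughly one row in ten needs an estimate, and the 333,659 rows with a known type are the ones that the following steps split into training and test data.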

Focus on the cars for which the vehicle types are known. What are the different types and how often do they occur?

```python
top_n = 10
# Keep only the cars with a known vehicle type (this filtered DataFrame is also used for training)
df_remote = df_remote.filter("VEHICLETYPE IS NOT NULL")
df_remote_col_frequency = df_remote.agg([('count', 'VEHICLETYPE', 'COUNT')], group_by='VEHICLETYPE')
df_col_frequency = df_remote_col_frequency.sort('COUNT', desc=True).head(top_n).collect()
df_col_frequency
```

Limousines ("limousine" is German for a sedan) are most frequent, followed by "kleinwagen", which is German for a small car.
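The filter, agg and sort calls above are translated into SQL and executed in SAP HANA; only the top rows are transferred to Python by collect(). For comparison, this sketch shows the same logic on an in-memory list with collections.Counter. The sample values are made up and do not reflect the real counts; top_frequencies is a hypothetical helper.

```python
from collections import Counter
from typing import Iterable, List, Optional, Tuple


def top_frequencies(values: Iterable[Optional[str]], top_n: int = 10) -> List[Tuple[str, int]]:
    """Count non-null values and return the top_n most frequent, highest first."""
    # Equivalent of: filter IS NOT NULL, group by, count, sort descending, head(top_n)
    counts = Counter(value for value in values if value is not None)
    return counts.most_common(top_n)


sample = ['limousine', 'kleinwagen', None, 'limousine', 'kombi', 'kleinwagen', 'limousine']
print(top_frequencies(sample, top_n=2))  # [('limousine', 3), ('kleinwagen', 2)]
```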

Go ahead and train the Machine Learning model. This step takes a while, so it's definitely a chance for a coffee, or rather lunch. In my environment it took about 20 minutes.

```python
# Split the data into train and test
from hana_ml.algorithms.pal import partition

df_remote_train, df_remote_test, df_remote_ignore = partition.train_test_val_split(
    random_seed=1972,
    data=df_remote,
    training_percentage=0.7,
    testing_percentage=0.3,
    validation_percentage=0)

# Parameterise the Machine Learning model
from hana_ml.algorithms.apl.gradient_boosting_classification import GradientBoostingClassifier

gbapl_model = GradientBoostingClassifier()
col_target = 'VEHICLETYPE'
col_id = 'CAR_ID'
col_predictors = df_remote_train.columns
col_predictors.remove(col_target)
col_predictors.remove(col_id)
gbapl_model.set_params(other_train_apl_aliases={'APL/VariableAutoSelection': 'true',
                                                'APL/Interactions': 'true',
                                                'APL/InteractionsMaxKept': 10})

# Train the Machine Learning model
gbapl_model.fit(data=df_remote_train,
                key=col_id,
                features=col_predictors,
                label=col_target)
```

Apply the trained model on the test data and evaluate the model quality with a confusion matrix.

```python
# Apply the model on the test data and keep only the strongest predictions
df_remote_predict = gbapl_model.predict(df_remote_test)
df_remote_predict = df_remote_predict.filter('PROBABILITY > 0.9')

# Confusion Matrix
from hana_ml.algorithms.pal.metrics import confusion_matrix

df_remote__confusion_matrix = confusion_matrix(df_remote_predict, col_id, label_true='TRUE_LABEL', label_pred='PREDICTED')
# confusion_matrix returns two DataFrames: [0] the confusion matrix, [1] the classification report
df_remote__confusion_matrix[1].collect()
```
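Filtering on PROBABILITY > 0.9 before building the matrix means it only evaluates the confident predictions, which are exactly the ones the scheduled job will later save. The sketch below illustrates this counting logic in plain Python on a few made-up (true label, predicted label, probability) tuples; it is not how hana_ml computes the matrix in SAP HANA, and the helper names confusion_counts and accuracy are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# (true label, predicted label, probability), like TRUE_LABEL, PREDICTED, PROBABILITY
Prediction = Tuple[str, str, float]


def confusion_counts(rows: Iterable[Prediction], min_probability: float = 0.9) -> Dict[Tuple[str, str], int]:
    """Count (true, predicted) pairs, keeping only predictions above min_probability."""
    return dict(Counter((true, pred) for true, pred, prob in rows if prob > min_probability))


def accuracy(counts: Dict[Tuple[str, str], int]) -> float:
    """Share of counted predictions where the predicted label equals the true label."""
    total = sum(counts.values())
    correct = sum(n for (true, pred), n in counts.items() if true == pred)
    return correct / total if total else 0.0


rows = [('limousine', 'limousine', 0.95), ('kombi', 'limousine', 0.92),
        ('kombi', 'kombi', 0.97), ('bus', 'kombi', 0.55)]
counts = confusion_counts(rows)  # the 0.55 prediction is dropped by the filter
print(counts)
print(accuracy(counts))
```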

Save the trained model in SAP HANA, where the scheduled process can pick it up later.

```python
from hana_ml.model_storage import ModelStorage

model_storage = ModelStorage(connection_context=conn)
gbapl_model.name = 'Master data Vehicle type'
model_storage.save_model(model=gbapl_model, if_exists='replace')
```

Schedule the application of the Machine Learning model

To trigger the application of the trained model, follow the same steps as for the deployment of the helloworld app. You need four files altogether; place them in a separate folder in your local environment.

File 1 of 4: main.py

This file uses the same logic as the earlier helloworld.py to identify whether it is executed locally or in Cloud Foundry. Depending on the environment, it retrieves the SAP HANA logon credentials either from the local hdbuserstore or from a user-provided environment variable in Cloud Foundry. Other authentication methods are possible, such as pbkdf2, ldap, saml, jwt, saplogon, kerberos, sessioncookie and X509, but here we use user and password.

The trained machine learning model is then used to predict the missing values. The strongest predictions (probability above 0.9) are saved in the VEHICLTETYPE_ESTIMATED table in SAP HANA. Again, no data ever leaves SAP HANA.

```python
import os
import sys
import json
import hana_ml
import hana_ml.dataframe as dataframe
from hana_ml.model_storage import ModelStorage

hana_encrypt = 'true'
hana_sslcertificate = 'false'

if os.getenv('VCAP_APPLICATION'):
    # File is executed in Cloud Foundry
    sys.stdout.write('Python executing in Cloud Foundry')

    # Get SAP HANA logon credentials from the user-provided variable in Cloud Foundry
    hana_credentials_env = os.getenv('HANACRED')
    hana_credentials = json.loads(hana_credentials_env)
    hana_address = hana_credentials['address']
    hana_port = hana_credentials['port']
    hana_user = hana_credentials['user']
    hana_password = hana_credentials['password']

    # Instantiate connection object
    conn = dataframe.ConnectionContext(address=hana_address,
                                       port=hana_port,
                                       user=hana_user,
                                       password=hana_password,
                                       encrypt=hana_encrypt,
                                       sslValidateCertificate=hana_sslcertificate)
else:
    # File is executed locally
    sys.stdout.write('Python executing locally')

    # Get SAP HANA logon credentials from the local client's secure user store
    conn = dataframe.ConnectionContext(userkey='HANACRED_LOCAL',
                                       encrypt=hana_encrypt,
                                       sslValidateCertificate=hana_sslcertificate)

# Load the trained model
model_storage = ModelStorage(connection_context=conn)
gbapl_model = model_storage.load_model(name='Master data Vehicle type', version=1)

# Apply the model on rows for which vehicle type is not known
df_remote = conn.table("USEDCARPRICES")
df_remote = df_remote.filter("VEHICLETYPE IS NULL")
df_remote_predict = gbapl_model.predict(df_remote)

# Save the predictions with probability > 0.9 to table
df_remote_predict = df_remote_predict.filter('PROBABILITY > 0.9')
df_remote_predict.save('VEHICLTETYPE_ESTIMATED')

# Print success message
sys.stdout.write('\nMaster data imputation process completed')
```

Run the file locally and you should see a message that the process completed successfully.
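In main.py, a HANACRED variable that is not set surfaces as a TypeError from json.loads(None), and a missing entry as a KeyError. If you prefer clearer error messages in the task log, one option is a small validating helper like the following sketch. read_hana_credentials is a hypothetical name; converting the port to int is an assumption, in case it was stored as a string.

```python
import json
from typing import Any, Dict, Mapping

# Keys that main.py reads from the HANACRED JSON
REQUIRED_KEYS = ('address', 'port', 'user', 'password')


def read_hana_credentials(env: Mapping[str, str], var_name: str = 'HANACRED') -> Dict[str, Any]:
    """Parse the JSON credentials stored in env[var_name] and validate the keys."""
    raw = env.get(var_name)
    if raw is None:
        raise KeyError(f'Environment variable {var_name} is not set')
    credentials = json.loads(raw)
    missing = [key for key in REQUIRED_KEYS if key not in credentials]
    if missing:
        raise ValueError(f'{var_name} is missing the keys: {", ".join(missing)}')
    # Assumption: accept the port as a number or as a numeric string
    credentials['port'] = int(credentials['port'])
    return credentials


# In main.py, this could replace the json.loads(os.getenv('HANACRED')) lines:
# hana_credentials = read_hana_credentials(os.environ)
```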

Before pushing this application to Cloud Foundry, you must create the remaining 3 files in the same folder.

File 2 of 4: manifest.yml

No surprises here, along the same lines as before.

```yaml
---
applications:
- name: hanamlapply
  memory: 512M
  command: python main.py
  services:
  - xsuaaforsimplejobs
  - jobschedulerinstance
```

File 3 of 4: runtime.txt

Exactly as before.

```
python-3.10.9
```

File 4 of 4: requirements.txt

This file is new. It instructs the Python environment in Cloud Foundry to install the hana_ml library. When running your own project, additional libraries might need to be added to this file. Please see the Installation Guide for package dependencies.

```
hana-ml==2.8.21042100
```

From your local command line, push the app to Cloud Foundry.

```
cf push --task
```

Before running the app, store the SAP HANA logon credentials in a user-provided environment variable in Cloud Foundry. The name of the variable (here "HANACRED") has to match the name used in main.py above. Port 443 is the SQL port of SAP HANA Cloud. If you are using the CLI on Windows, the following syntax should work. If you are on a Mac, you might need to put double quotes around the JSON parameter.

```
cf set-env hanamlapply HANACRED {\"address\":\"REPLACEWITHYOURHANASERVER\",\"port\":443,\"user\":\"REPLACEWITHYOURUSER\",\"password\":\"REPLACEWITHYOURPASSWORD\"}
```

Hint for Linux: A customer reported that setting the HANACRED variable on Linux requires single quotes around the JSON content. The same might apply to macOS. Thanks for the feedback!
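Rather than escaping the JSON by hand for bash or zsh, you can let Python build the command line: json.dumps produces the JSON, and shlex.join quotes each argument for a POSIX shell, wrapping the JSON in single quotes. This is a sketch for Linux and macOS shells only (the Windows command prompt has different quoting rules); set_env_command is a hypothetical helper, and the placeholder values are the ones from above.

```python
import json
import shlex


def set_env_command(app: str, var_name: str, credentials: dict) -> str:
    """Build a `cf set-env` command line for POSIX shells such as bash or zsh."""
    value = json.dumps(credentials, separators=(',', ':'))
    # shlex.join quotes every argument as needed; the JSON ends up in single quotes
    return shlex.join(['cf', 'set-env', app, var_name, value])


placeholder_credentials = {
    'address': 'REPLACEWITHYOURHANASERVER',
    'port': 443,
    'user': 'REPLACEWITHYOURUSER',
    'password': 'REPLACEWITHYOURPASSWORD',
}
cmd = set_env_command('hanamlapply', 'HANACRED', placeholder_credentials)
print(cmd)
```

shlex also handles a password that itself contains a single quote, which is easy to get wrong when quoting by hand.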

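```
cf set-env hanamlapply HANACRED '{"address":"REPLACEWITHYOURHANASERVER","port":443,"user":"REPLACEWITHYOURUSER","password":"REPLACEWITHYOURPASSWORD"}'
```

Inside single quotes the shell passes backslashes through unchanged, so the inner double quotes are written without the \ escapes used on Windows.

From here, the steps are exactly the same as before. Run the task from the CLI if you like.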

```
cf run-task hanamlapply --command "python main.py" --name taskfromcli
```

Check the log.

```
cf logs hanamlapply --recent
```

And indeed, the task completed successfully. The SAP HANA logon credentials were used to apply the trained model, to predict the missing data and to save the strongest predictions into the target table.

Back in the SAP Business Technology Platform, go to the Job Scheduling service's dashboard and create a task for the hanamlapply app.

Create a schedule, lean back and the model is applied automatically!

If your Python code runs for longer than 30 minutes, adjust the timeout in the Job Scheduling service dashboard accordingly.

Happy scheduling!
