- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Text mining at SAP HANA Cloud with Python

Technology Blogs by SAP

Learn how to extend and personalize SAP applications. Follow the SAP technology blog for insights into SAP BTP, ABAP, SAP Analytics Cloud, SAP HANA, and more.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Advisor

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

04-26-2021

8:08 PM

The SAP HANA Cloud product team recently announced the availability of out of the box Text mining capabilities, which you can explore with a Jupyter notebook via the latest Python machine learning client for SAP HANA

Why you should care about that?

If you are developing in SAP HANA Cloud, good news: you don't have to move your data in/out of SAP HANA Cloud to operate Text Mining techniques.

Would you like to enhance your advance analytics reports with insights stemmed from text? e.g.

Which is the most frequent spare-part mentioned on High criticality issues reported from Field engineers to the manufacturing plant?

What are the top 3 operations taking place each month from the Maintenance department?

Good news. All you need is one connection to a SAP HANA Cloud instance, consolidate your results in a Calculation View and your BI engineers can enhance their report in SAP Analytics Cloud (SAC).

Last but not least you are able to classify an input document with respect to sets of categories.

This blog will be a series of 2 blog posts, starting with this one to get the very basics of the new functionalities offered. We will use a Jupyter notebook and a sample text with a tiny dataset having 3 columns.

- Document ID as a unique id per row

- Free text mocking an S/4 Plant Maintenance ticket notification.

- If you are not familiar with this S/4 Module, a notification is how a maintenance department is getting a request to check at abnormal or exceptional situation(s) in technical objects of a plant. The ticket among many other fields, includes a free text field with a description of the problem.

- Priority (High or Low) of a ticket.

The second blog post would be to see how we can make use of another SAP BTP Component (Cloud Foundry or Kyma Runtime) to build an application on top of these functions to make them available to our business users.

Setup your Jupyter notebook

1.Create a Jupyter notebook and import hana_ml library:

#SAP LIBRARIES

import hana_ml

import hana_ml.dataframe as dataframe

import hana_ml.text.tm as tmh

###EXTERNAL LIBRARIES

import pandas as pd

##SETTINGS

pd.options.display.max_columns = None

pd.options.display.max_rows = None

pd.set_option('display.max_colwidth', 100)2.Create 2 csv files at the same directory.

1st file , call it sample-text.csv and add the text below and save it.

ID,NOTIFICATION_TEXT,PRIORITY

DOC1000,repair damaged pipe,HIGH

DOC2000,replace main valve,HIGH

DOC3000,repair oil leak,HIGH

DOC4000,paint doors,LOW

DOC5000,clean filters,LOW

DOC6000,maintain A/C filters,LOW

2nd file , call it new-text.csv and add the text below and save it. (note that we are not including a 3rd Column with Priority. This dataset would be to showcase the text classification function.)

ID,NOTIFICATION_TEXT

DOC7000,repair main valve

DOC8000,clean debris

Import CSV files to SAP HANA Cloud

table_name = "TM_SAMPLE_TEXT"

file_name = "sample-text.csv"

df_data = pd.read_csv(file_name,sep=',',encoding= 'unicode_escape')

dataframe.create_dataframe_from_pandas(connection_context = conn,

pandas_df = df_data,

table_name = table_name,

force = True,

replace = True)

df_remote = conn.table("TM_SAMPLE_TEXT")

df_remote.collect()If all worked alright, it should look like this:

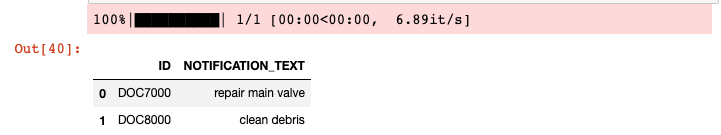

Repeat for the second CSV file:

table_name = "TM_NEW_TEXT"

file_name = "new-text.csv"

df_data = pd.read_csv(file_name,sep=',',encoding= 'unicode_escape')

dataframe.create_dataframe_from_pandas(connection_context = conn,

pandas_df = df_data,

table_name = table_name,

force = True,

replace = True)

df_remote_new = conn.table("TM_NEW_TEXT")

df_remote_new.collect()

Tip 1: Avoid collecting all data as you see above by calling .collect() on the dataframe in real projects. Remember that if you do that you collect all the data at the Jupyter notebook! Instead you should use df_remote.head(5).collect() to check your data and keep the heavy lifting on SAP HANA Cloud.

Now you are holding all the information is this df_remote object. Perform a Term Frequency analysis by simply passing the df_remote object as parameter to the tf_analysis function:

tfidf= tmh.tf_analysis(df_remote)

tfidf[0].head(5).collect()

tfidf[1].head(5).collect()

tfidf[2].head(5).collect()The object returned is a tuple of hana_ml dataframes and you may receive each one by simply adding an index from 0 to 2 if you are interested to check the TF/IDF values calculated etc.

However, once you have the tfidf object initiated, it can be used as input to other text mining functions. Let's see an example:

get_related_doc

This function returns the top-ranked related documents for a query document based on Term Frequency - Inverse Document Frequency(TF-IDF) result or reference data.

Get a sample query document from the new dataset. At this case we select just the first one with text "repair main valve". We pass to get_related_doc this unseen document and the tfidf object.

#get one line from the unseen documents

new_text = conn.sql('SELECT "NOTIFICATION_TEXT" FROM ({}) LIMIT 1 '.format(df_remote_new.select_statement))

display(new_text.collect())

#run the get_related_doc

get_related_doc_rs = tmh.get_related_doc(new_text, tfidf)

#display the list of top N related documents with their mathcing score

display(get_related_doc_rs.collect())

#join the result table wit the initial table to have text and scores for ambiguity

_result = get_related_doc_rs.alias('A').join(

df_remote.alias('B'),

"A.ID = B.ID"

).sort("SCORE",desc="TRUE").deselect(["ID","PRIORITY"]).collect()

display(_result)Tip 2 : Please note the latest addition to the hana_ml data frame where you can deselect a column. Never again having to select all the columns one by one and skipping the ones we don't need!

The results look like below:

The function has returned three documents, with the top one being "replace main valve" whereas the new document was "repair main valve".

get_suggested_term

This function returns the top-ranked terms that match an initial substring based on Term Frequency - Inverse Document Frequency(TF-IDF) result or reference data.

Let's hack our way to retrieve one term from a document. What we do below is retrieving just the the leftmost three characters. The full string is "repair main valve" so we expect to get just "rep" by adding an index of 3.

_index = 3

sub_term = conn.sql('SELECT LEFT(NOTIFICATION_TEXT,{}) as "SUB_STRING_" \

FROM ({}) LIMIT 1 '.format(_index,df_remote_new.select_statement))

display(sub_term.collect())Let's make use of the get_suggested_term functions to get some results.

_result = tmh.get_suggested_term(sub_term,df_remote)

_result.collect()The results are two other terms "repair" and "replace" with the first one coming on top.

text_classification

Last but not least, the text classification function. This is where we will need both the dataframes we created at the beginning of the blog. This is based on k-nearest neighbors algorithm used for classification. The default k is 1, so the new document will be assigned to the class of the nearest neighbor. Just pass the two dataframes to the text_classification function.

res = tmh.text_classification(df_remote_new, df_remote)

display(res[0].collect())

display(res[1].collect())The returned object consists of two dataframes which can be retrieved by passing the correct index.

The first one has the new documents assigned with a category and the latter one the matching distance and the matched document.

Let's operate some basic sql using hana_ml join operators to see what was the actual text between the unseen documents and the matched ones.

res[1].alias('A').join(

df_remote.alias('B'),

"A.TRAIN_ID = B.ID"

).select(

("TEST_ID","NEW_DOC_ID"),

("NOTIFICATION_TEXT","MATCHED_NOTIFICATION_TEXT"),

("TRAIN_ID","MATCHED_ID"),

("PRIORITY","MATCHED_PRIORITY"),

("DISTANCE")

).alias('C').join(

df_remote_new.alias("D"),

"C.NEW_DOC_ID = D.ID"

).select(

"NEW_DOC_ID",

"MATCHED_ID",

("NOTIFICATION_TEXT","NEW_NOTIFICATION_TEXT"),

"MATCHED_NOTIFICATION_TEXT",

"DISTANCE",

"MATCHED_PRIORITY"

).collect()

Outro

From a developer's point of view is alleviating to know that once you have connected to a SAP HANA Cloud instance with your Jupyter you have scalable and secure access to functions that can handle any data type.

From a business point of view, it is easier than ever forming a business hypotheses that requires data preparation, exploration and visualisation capabilities and get back a report on the spot. (fail fast or make impact faster)

Upgrade to latest SAP HANA Cloud and start formulating business use-cases and improve significantly your traditional analytic approaches by combining all sorts of data (and types).

Thanks for reading!

Alex

- SAP Managed Tags:

- SAP HANA Cloud,

- Python

Labels:

5 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,661 -

Business Trends

88 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

65 -

Expert

1 -

Expert Insights

178 -

Expert Insights

280 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

Kafka

1 -

Life at SAP

784 -

Life at SAP

11 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,577 -

Product Updates

330 -

Replication Flow

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,886 -

Technology Updates

408 -

Workload Fluctuations

1

Related Content

- New Machine Learning features in SAP HANA Cloud in Technology Blogs by SAP

- SAP HANA Cloud Vector Engine: Quick FAQ Reference in Technology Blogs by SAP

- explore the business continuity recovery sap solutions on AWS DRS in Technology Blogs by Members

- Harnessing the Power of SAP HANA Cloud Vector Engine for Context-Aware LLM Architecture in Technology Blogs by SAP

- ML- Linear Regression definition , implementation scenarios in HANA in Technology Blogs by Members

Top kudoed authors

| User | Count |

|---|---|

| 13 | |

| 10 | |

| 10 | |

| 9 | |

| 7 | |

| 6 | |

| 5 | |

| 5 | |

| 5 | |

| 4 |