- SAP Data Intelligence(3.1-2010) : CSV as Target us...


former_member37

Explorer


02-19-2021

9:45 AM

Introduction:

SAP Data Intelligence provides a platform to orchestrate and integrate data.

It offers a wide range of functionality, from simply moving a file to real-time replication of data from multiple sources to multiple targets.

I have been working with SAP Data Intelligence for quite some time now and have worked on multiple aspects of it.

This blog post focuses on one capability of the ABAP SLT Connector operator: using a custom Python operator, we are going to write CSV files with headers.

Background:

In one of our customer projects, we had to replicate data from an ECC source system to Azure Data Lake Storage Gen2 (ADLS Gen2) via SLT. The customer's requirement was to have the real-time replicated data in the data lake in CSV format.

Currently, SAP Data Intelligence has no direct provision for generating CSV files with header information; the only option is to write your own code in one of the languages it supports (Python, Golang, JavaScript).

We chose to build this custom code in Python 3.

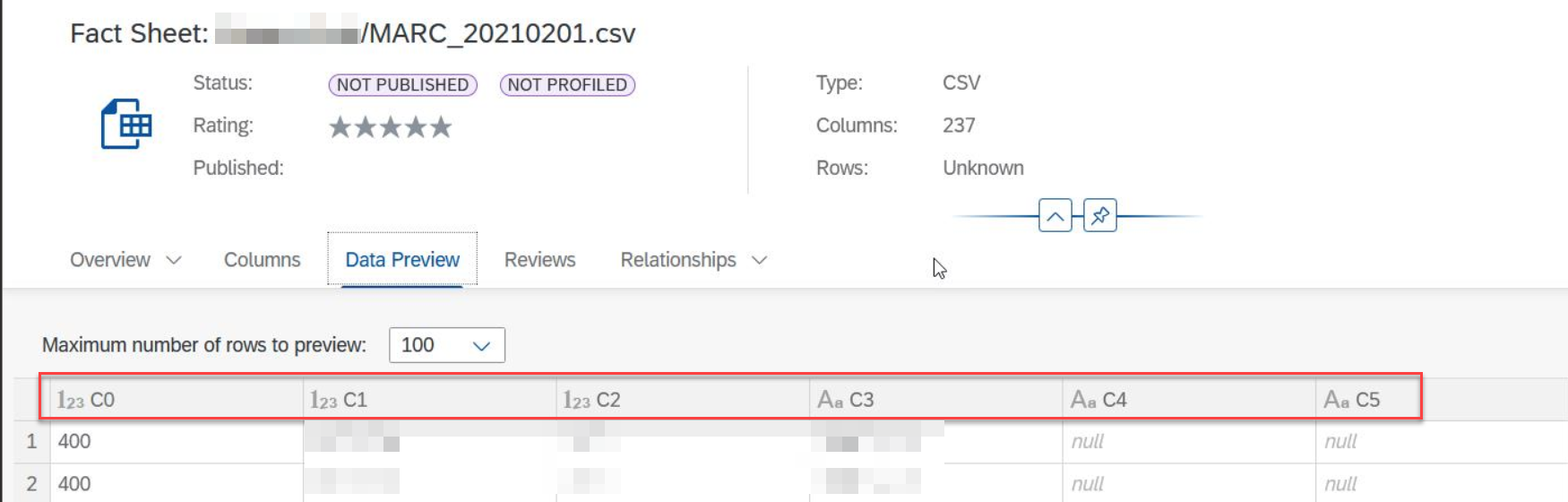

CSV Output with Default SLT Connector Operator

As the screenshot shows, the SLT Connector operator in SAP Data Intelligence generates CSV files without the actual column names by default. Every CSV file gets generic column names such as C0, C1, and so on.

Note: We also tried the JSON format, since it carries metadata such as column names and data types out of the box. However, working with JSON is expensive from a performance point of view when dealing with high-volume tables (more than 50 million records) during the initial load.

ABAP SLT Connector:

The SLT Connector operator establishes a connection between SAP Landscape Transformation Replication Server (SLT) and SAP Data Intelligence.

The SLT Connector comes in different versions. Until recently, SAP Data Intelligence offered versions V0 and V1, whose output type is abap.

The more recent V2 version of the SLT Connector provides a message output.

SLT Connector V2 makes it possible to extract the body and the attributes of the input data separately.

The current SLT Connector operator can generate files in the following formats:

- CSV

- XML

- JSON

Custom Python Code:

As explained earlier, the default output does not carry the actual column names. We therefore wrote custom Python code that adds the headers to the CSV files generated in the target, using the message output of SLT Connector V2.

In the SLT Connector V2 operator, the message output has two sections:

- Attributes

- Data

In this Python code we extract the body and the attributes of the input message separately. The attributes contain the metadata, including the column names and data types.

The attributes information looks something like this.
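The original screenshot of the attributes is not reproduced here. As a rough illustration only, the snippet below uses a hypothetical attributes dictionary. The only structure taken from this post is the `ABAP` key holding a `Fields` list whose entries have a `Name`; the field names themselves are invented placeholders, and a real message carries more metadata per field.

```python
# Hypothetical example of the attributes of an SLT Connector V2 message.
# Only the path attributes['ABAP']['Fields'][i]['Name'] comes from this post;
# the field names below are placeholders chosen for illustration.
sample_attributes = {
    "ABAP": {
        "Fields": [
            {"Name": "MANDT"},  # real entries also carry data type details
            {"Name": "MATNR"},
            {"Name": "MAKTX"},
        ]
    }
}


def column_names(attributes):
    """Return the column names listed in the ABAP metadata, in order."""
    return [field["Name"] for field in attributes["ABAP"]["Fields"]]


print(column_names(sample_attributes))
```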

We extracted the column names from the metadata using the code snippet below and added them as a header row to the body before writing the CSV file to the target.

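```python
from io import StringIO

import pandas as pd


def on_input(inData):
    # Body: one portion of header-less CSV data from the SLT Connector
    data = StringIO(inData.body)
    # Attributes: metadata of the portion, including the ABAP field list
    attr = inData.attributes
    ABAPKEY = attr['ABAP']

    # Collect the column names from the metadata
    col = []
    for columnname in ABAPKEY['Fields']:
        col.append(columnname['Name'])

    # Parse the body with the real column names, then write it back with a header
    df = pd.read_csv(data, index_col=False, names=col)
    df_csv = df.to_csv(index=False, header=True)
    api.send("output", api.Message(attributes={}, body=df_csv))


# `api` is provided by the operator runtime
api.set_port_callback("input1", on_input)
```

CSV Output with Customized SLT Connector Operator

As a result, the CSV file now has the actual column names from the source table as its header.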
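To try the header logic outside a pipeline, where the `api` object is not available, it can be pulled into a plain function. The following is a minimal sketch rather than the code used in the project: `add_header` and the sample values are hypothetical, and it writes the header line with the standard `csv` module instead of round-tripping through pandas. That leaves the body untouched, whereas pandas infers column types by default and would, for example, read `0042` as the number `42` unless told to treat everything as strings.

```python
import csv
from io import StringIO


def add_header(body, attributes):
    """Prepend the ABAP column names to a header-less CSV string."""
    names = [field["Name"] for field in attributes["ABAP"]["Fields"]]
    header = StringIO()
    # csv.writer quotes a name only if it contains a delimiter or a quote
    csv.writer(header, lineterminator="\n").writerow(names)
    return header.getvalue() + body


# Hypothetical sample data for a local check
attrs = {"ABAP": {"Fields": [{"Name": "MATNR"}, {"Name": "MAKTX"}]}}
portion = "000000000000000042,Bolt\n000000000000000043,Nut\n"
result = add_header(portion, attrs)
print(result)
```

Inside the operator, the callback would then only need to call such a function and send the returned string to the output port.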

Enhancements:

- The code snippet works well for the Replication transfer mode. For the Initial Load transfer mode, a few tweaks are required, because the two additional columns Table_Name and IUUC_Operation are not present during the initial load.

- The files generated in the target follow the portion size coming from SLT, which sometimes results in a huge number of small files (for example, 1-2 MB each). With a few enhancements in the code we can combine the portions and generate files of the size we require.
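For the first point, one possible tweak is to drop the two replication-only column names whenever the body has fewer columns than the metadata lists. This is a sketch under assumptions: it supposes that the metadata still names `Table_Name` and `IUUC_Operation` during the initial load while the body omits them, and the helper `names_for_body` is hypothetical. It is worth checking the actual attributes of an initial-load message before relying on it.

```python
import csv
from io import StringIO

# Columns that, per this post, are only present in Replication mode
REPLICATION_ONLY = {"TABLE_NAME", "IUUC_OPERATION"}


def names_for_body(names, body):
    """Pick the column names that match the width of the first CSV row."""
    first_row = next(csv.reader(StringIO(body)), [])
    if not first_row or len(first_row) == len(names):
        return list(names)
    # Assumption: a narrower body means the two extra columns are missing
    trimmed = [n for n in names if n.upper() not in REPLICATION_ONLY]
    if len(first_row) != len(trimmed):
        raise ValueError("column count does not match the metadata")
    return trimmed


all_names = ["MATNR", "MAKTX", "Table_Name", "IUUC_Operation"]
print(names_for_body(all_names, "42,Bolt,MARA,I\n"))  # replication-style portion
print(names_for_body(all_names, "42,Bolt\n"))         # initial-load-style portion
```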

Note: For the enhanced file-sizing scenario mentioned above, please see this blog post by one of my colleagues:

https://blogs.sap.com/2021/02/19/sap-data-intelligence-slt-replication-to-azure-data-lake-with-file-...
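The linked post covers the full scenario. Purely to illustrate the idea of combining portions, here is a minimal sketch of a buffer that collects CSV portions and releases them as one file body once a size threshold is reached. The class `PortionBuffer`, its parameters, and the threshold are hypothetical and not taken from either post; how the end of a load is detected, so that the last partial buffer can be flushed, is left out.

```python
class PortionBuffer:
    """Collect CSV portions and release them once a size threshold is reached."""

    def __init__(self, header, max_bytes):
        self.header = header        # header line, including the trailing newline
        self.max_bytes = max_bytes  # hypothetical target size of one file
        self.parts = []
        self.size = 0

    def add(self, body):
        """Store one portion; return the content of a full file, or None."""
        self.parts.append(body)
        self.size += len(body.encode("utf-8"))
        if self.size >= self.max_bytes:
            return self.flush()
        return None

    def flush(self):
        """Return the header plus all buffered portions and reset the buffer."""
        if not self.parts:
            return None
        content = self.header + "".join(self.parts)
        self.parts, self.size = [], 0
        return content


buf = PortionBuffer("MATNR,MAKTX\n", max_bytes=20)
print(buf.add("42,Bolt\n"))             # below the threshold, nothing released
print(buf.add("43,Nut\n44,Washer\n"))   # threshold reached, combined content
```

Because the header is written once per released file rather than once per portion, each combined file still starts with a single header row.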

Summary:

We started with the challenges of the default SLT Connector operator offered by SAP Data Intelligence, explained the limitations of its earlier versions, and then looked at the latest version of the operator and how to use it to achieve our goal.

I hope I’ve shown you how easy it is to create custom code in SAP Data Intelligence using the base operator.

If you would like to understand in more detail how the process was carried out, or have ideas for the next blog post, please feel free to reach out to me in the comments section. Stay tuned!

For more information on SAP Data Intelligence, please see:

Exchange Knowledge: SAP Community | Q&A | Blogs

