- Loading the data from Microsoft Azure into SAP HAN...


former_member54


10-13-2020

8:38 AM

Introduction:

This blog post walks through the steps required to load data from a file stored in an Azure Blob container into the SAP HANA Cloud data lake. I will use DB Explorer to load the data from a .csv file in Azure Blob storage into the data lake.

Steps to load the data:

There are two prerequisites:

- SAP HANA Cloud account setup with Data lake

- Microsoft Azure Storage Account with container

- The first step is to create a database user and grant it the access needed to load the data. Go to DB Explorer and open the SQL console. Run the commands below to create the user USER1 and grant it the required permissions.

CREATE USER USER1 PASSWORD Tutorial123 NO FORCE_FIRST_PASSWORD_CHANGE SET USERGROUP DEFAULT;

GRANT HANA_SYSRDL#CG_ADMIN_ROLE to USER1;

GRANT CREATE SCHEMA to USER1 WITH ADMIN OPTION;

GRANT CATALOG READ TO USER1 WITH ADMIN OPTION;

- In the left pane of DB Explorer, right-click the database that was already added for the DBADMIN user and choose the option "Add database with different user".

- Create a schema for the virtual table. Open a SQL console connected to the database as USER1 by clicking the SQL option in the top left corner.

CREATE SCHEMA TUTORIAL_HDL_VIRTUAL_TABLES;

- Create a physical table in the data lake using the remote execute call. Its columns should match the structure of the file. SYSRDL#CG is the default schema for the data lake. DDL operations can be performed through the procedure call shown below.

CALL SYSRDL#CG.REMOTE_EXECUTE('

BEGIN

CREATE TABLE HIRE_VEHICLE_TRIP (

"hvfhs_license_num" VARCHAR(255),

"dispatching_base_num" VARCHAR(255),

"pickup_datetime" VARCHAR(255),

"dropoff_datetime" VARCHAR(255),

"PULocationID" VARCHAR(255),

"DOLocationID" VARCHAR(255),

"SR_Flag" VARCHAR(255));

END');

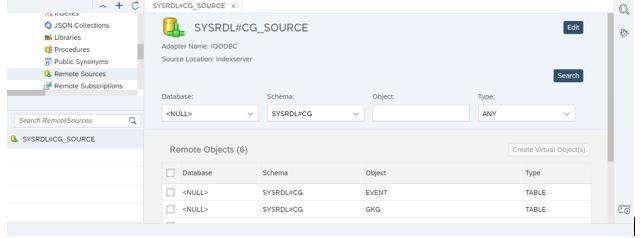

- Open the remote source SYSRDL#CG_SOURCE from the catalog and select the schema SYSRDL#CG to view all the tables in the data lake. This remote source lets you execute DML operations through a virtual table.

- Select the table HIRE_VEHICLE_TRIP by checking its checkbox and click "Create Virtual Object". Specify a name for the virtual object and choose the schema TUTORIAL_HDL_VIRTUAL_TABLES.

- Verify that the virtual table has been created in the schema TUTORIAL_HDL_VIRTUAL_TABLES. Check the tables in the catalog and you should see the virtual table. Also confirm that the table is empty before the load.

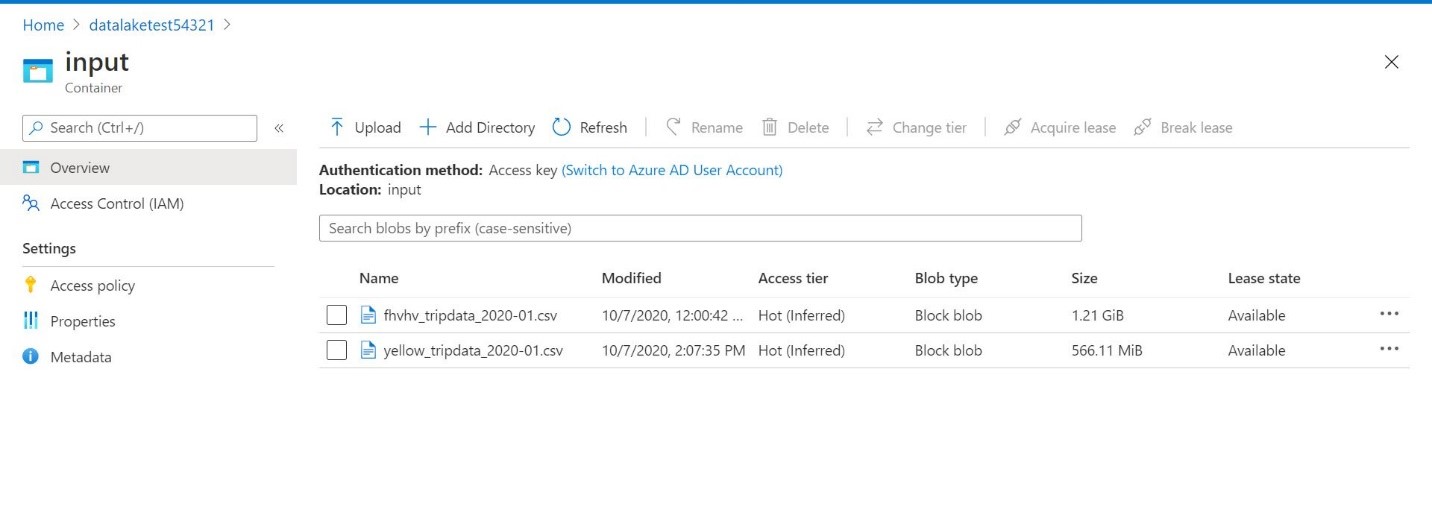

- Now let’s go to Azure to upload the file. Open your storage account in the Azure Portal and click Containers.

- Upload the CSV file to the blob container using the upload option or the Azure CLI. I used the dataset below for this blog post, but you can use your own dataset: https://www1.nyc.gov/site/tlc/about/tlc-trip-record-data.page

- Switch to DB Explorer and verify that the SQL console is still connected as USER1. Load the data from the CSV file in the blob container into the data lake physical table by executing the remote call below. Replace <azure_connection_string> with the connection string of your own Azure storage account, which you can find under Settings -> Access Keys in the storage account.

CALL SYSRDL#CG.REMOTE_EXECUTE('

BEGIN

SET TEMPORARY OPTION CONVERSION_ERROR=''Off'';

LOAD TABLE HIRE_VEHICLE_TRIP(

"hvfhs_license_num",

"dispatching_base_num",

"pickup_datetime",

"dropoff_datetime",

"PULocationID",

"DOLocationID",

"SR_Flag" )

USING FILE ''BB://input/fhvhv_tripdata_2020-01.csv''

STRIP RTRIM

FORMAT CSV

CONNECTION_STRING ''<azure_connection_string>''

ESCAPES OFF

QUOTES OFF;

END');
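The doubled single quotes in the call above are needed because the whole inner block is passed to REMOTE_EXECUTE as one SQL string literal. As a minimal sketch, the escaping can be generated rather than typed by hand. The helper name `wrap_remote_execute` is hypothetical and not part of any SAP tooling; it only builds a string and does not execute anything.

```python
def wrap_remote_execute(inner_sql: str) -> str:
    """Wrap data lake SQL in a SYSRDL#CG.REMOTE_EXECUTE call.

    Hypothetical helper: it only builds the statement text.
    """
    # Inside a SQL string literal, a single quote is written as two single quotes.
    escaped = inner_sql.replace("'", "''")
    return f"CALL SYSRDL#CG.REMOTE_EXECUTE('\n{escaped}\n');"


if __name__ == "__main__":
    inner = "BEGIN\nSET TEMPORARY OPTION CONVERSION_ERROR='Off';\nEND"
    print(wrap_remote_execute(inner))
```

Writing the inner statement with ordinary single quotes and escaping it in one place makes a mismatched quote less likely when the statement is edited later.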

The load took 115109 ms (about 115 seconds) for approximately 20 million records from a CSV file of 1.2 GB.
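For a rough sense of throughput, the timing can be converted to rows and megabytes per second. This is back-of-the-envelope arithmetic only: it assumes exactly 20 million rows and treats 1.2 GB as 1,200 MB, neither of which is stated precisely.

```python
def throughput(rows: int, size_mb: float, elapsed_ms: float):
    """Return (rows per second, MB per second) for a single load."""
    seconds = elapsed_ms / 1000.0
    return rows / seconds, size_mb / seconds


if __name__ == "__main__":
    # Assumed inputs: 20 million rows, 1,200 MB, 115109 ms elapsed.
    rows_per_s, mb_per_s = throughput(20_000_000, 1200.0, 115_109)
    print(f"{rows_per_s:,.0f} rows/s, {mb_per_s:.1f} MB/s")
```

With the numbers quoted, this works out to roughly 174,000 rows per second and a little over 10 MB per second.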

If the delimiter is a comma, you do not need to specify the DELIMITED BY option. My file is comma-separated, so I did not specify it explicitly.


Otherwise, if the delimiter is some other character, add the DELIMITED BY option after the USING FILE line. For example, for a tab-delimited file:

DELIMITED BY ''\x09''

- Verify the loaded data from virtual table
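One way to script this check is a row count against the virtual table. The sketch below works with any Python DB-API connection. For SAP HANA Cloud the connection would typically come from the `hdbcli` driver, which is an assumption here and not something the steps above use. The virtual table name `HIRE_VEHICLE_TRIP_VT` is a placeholder for whatever name you chose when creating the virtual object.

```python
def count_rows(conn, schema: str, table: str) -> int:
    """Return the number of rows in schema.table using a DB-API connection."""
    cur = conn.cursor()
    try:
        # Identifiers are double-quoted; pass only trusted names here.
        cur.execute(f'SELECT COUNT(*) FROM "{schema}"."{table}"')
        return cur.fetchone()[0]
    finally:
        cur.close()


# Example usage (assumes hdbcli is installed; endpoint and password are placeholders):
# from hdbcli import dbapi
# conn = dbapi.connect(address="<hana_cloud_endpoint>", port=443,
#                      user="USER1", password="<password>", encrypt=True)
# print(count_rows(conn, "TUTORIAL_HDL_VIRTUAL_TABLES", "HIRE_VEHICLE_TRIP_VT"))
```

Comparing the result with the number of data rows in the CSV file gives a quick sanity check that the load was complete.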

Conclusion:

SAP HANA Cloud, data lake can easily ingest data from multiple sources, such as Amazon S3 and Microsoft Azure, using the LOAD TABLE command. The command is versatile enough to load different file formats. You can explore this further in the documentation linked below.

https://help.sap.com/viewer/80694cc56cd047f9b92cb4a7b7171bc2/cloud/en-US

https://help.sap.com/viewer/9f153559aeb5471d8aff9384cdc500db/cloud/en-US
