UPLOADING A FILE FROM PO TO AWS S3 WITHOUT SIZE RESTRICTIONS

One of the many challenges we face in an on-premise to cloud migration is the difference in approach to integration. While cloud solutions offer great flexibility in making messages as granular as needed, on-premise solutions are bound by restricting factors such as bandwidth, memory and message size. As a basic premise of SAP PO development, our aim as developers is to optimize the many factors of an integration requirement, message size being a very important one.

This document describes one of the many solutions we can adopt to transfer a file from PO to an AWS S3 bucket without having to worry about the size of the file. AWS provides a set of APIs for uploading files into an S3 bucket. Size is rarely a constraint on the AWS side (a single PUT accepts objects of up to 5 GB, and a multipart upload objects of up to 5 TB); the restriction is prominent in PO. You can find details of the AWS S3 API here - https://docs.aws.amazon.com/AmazonS3/latest/API/Welcome.html

Amazon gives us three options for uploading files into S3 via its APIs:

Single Chunk Upload - https://docs.aws.amazon.com/AmazonS3/latest/API/sig-v4-header-based-auth.html

Multi Chunk Upload - https://docs.aws.amazon.com/AmazonS3/latest/API/sigv4-streaming.html

Multi Part Upload - https://docs.aws.amazon.com/AmazonS3/latest/dev/mpuoverview.html

We had a file size restriction of ~900 KB, which wasn’t very promising. For files within that limit, Amazon’s Single Chunk Upload was the best option. This is covered extensively in Rajesh PS’s blog -

https://blogs.sap.com/2019/05/31/integrating-amazon-simple-storage-service-amazon-s3-and-sap-ecc-v6....

Multi-chunk upload was not feasible for PO since it requires an HTTP connection to be open until all the chunks have been transferred. That leaves us with the Multipart Upload option. I will attempt to cover how I transferred a huge file using multipart upload. The basic design is as follows:

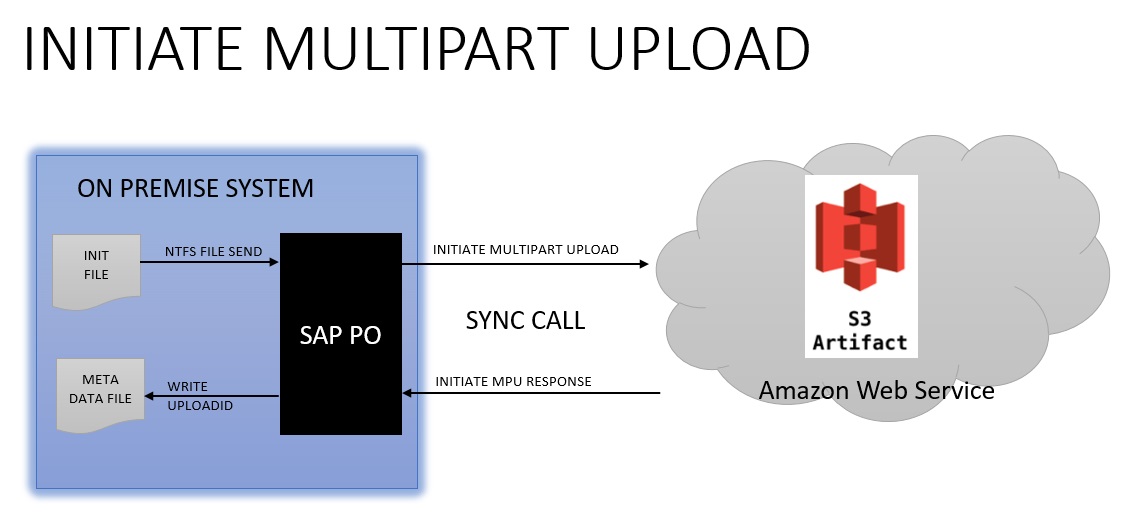

Pic 1: AWS Multipart Upload

AWS MULTIPART UPLOAD APIs

- Multipart Upload starts with a call to the Initiate Multipart Upload API, to which AWS responds with an UploadId. The smaller files (parts) are then sent one by one, each with the UploadId and a part number. AWS allows the parts to be sent in parallel, but we would need to know each part’s sequence number beforehand, which is not possible in this design. AWS returns an ETag for each part uploaded. After all the parts have been uploaded, you call the Complete Multipart Upload API with all the part numbers and their ETags. This enables AWS to ‘stitch’ the small files back into one large file based on the details sent. I had to develop the AWS signature calculation from scratch: https://github.com/pnathan01/AWSSAPMultipartUploadLibrary. I also took inspiration from Rajesh’s blog for single chunk upload (link in the last section).
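To make the three calls concrete, here is a minimal Java sketch of the HTTP method and request target each step uses, following the S3 REST API conventions (`?uploads`, `?partNumber=N&uploadId=ID`, `?uploadId=ID`). The class name `MultipartRequests` is hypothetical and not part of the author's library; host and bucket addressing, headers and signing are left out, and the object key is assumed to be URI-safe already.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/** HTTP method and request target for each step of an S3 multipart upload (sketch). */
final class MultipartRequests {

    private MultipartRequests() {}

    /** Step 1, Initiate Multipart Upload: the response body carries the UploadId. */
    static String initiate(String objectKey) {
        return "POST /" + objectKey + "?uploads";
    }

    /** Step 2, Upload Part: the ETag response header identifies the stored part. */
    static String uploadPart(String objectKey, int partNumber, String uploadId) {
        if (partNumber < 1 || partNumber > 10_000) {
            throw new IllegalArgumentException("part numbers must be between 1 and 10,000");
        }
        return "PUT /" + objectKey + "?partNumber=" + partNumber + "&uploadId=" + encode(uploadId);
    }

    /** Step 3, Complete Multipart Upload: the body lists every PartNumber/ETag pair. */
    static String complete(String objectKey, String uploadId) {
        return "POST /" + objectKey + "?uploadId=" + encode(uploadId);
    }

    // Form-style encoding with '+' turned into %20. The canonical query string
    // used for SigV4 signing has its own, stricter encoding rules.
    private static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8).replace("+", "%20");
    }
}
```

For example, `uploadPart("big/file.txt", 2, "abc.123")` yields `PUT /big/file.txt?partNumber=2&uploadId=abc.123`.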

The following diagrams represent each of the 3 types of calls made to the S3 API.

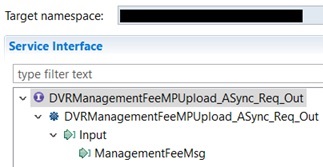

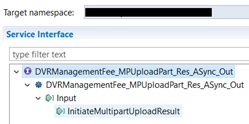

Pic 2: Initiate Multipart Upload

Pic 3: Upload Part

Pic 4: Complete Multipart Upload

IMPLEMENTATION IN PO

As you can see, all of the above communications are Async-Sync bridges. I have created 4 ICOs: 1 for the request, with routing to the different APIs, and 3 for the response part. All 3 response ICOs write into the metadata file.

Please note that I have not accounted for any data conversion, format checks or mapping of the data in the file. Technically these are pass-through scenarios (except Complete Multipart Upload), but we still have to use mappings to calculate the AWS API signatures.

The following configuration will need to be done beforehand:

- Implement certificates, if any

- Enable large message handling. This is needed because AWS requires every part of a multipart upload, except the last one, to be at least 5 MB.
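The 5 MB rule translates into simple arithmetic. The following Java sketch (hypothetical class `PartPlan`) computes how many parts a file needs and how large each one is, using the S3 multipart limits: at least 5 MiB per part except the last, at most 5 GiB per part, and at most 10,000 parts. The author splits by record count rather than by bytes, so treat this only as a way to sanity-check a chosen split size.

```java
/** Splits a file size into S3 multipart-upload parts of a fixed size (sketch). */
final class PartPlan {

    static final long MIN_PART_BYTES = 5L * 1024 * 1024;        // 5 MiB, all parts but the last
    static final long MAX_PART_BYTES = 5L * 1024 * 1024 * 1024; // 5 GiB
    static final int MAX_PARTS = 10_000;

    private PartPlan() {}

    /** Number of parts when every part except the last is exactly partBytes long. */
    static int partCount(long fileBytes, long partBytes) {
        if (partBytes < MIN_PART_BYTES || partBytes > MAX_PART_BYTES) {
            throw new IllegalArgumentException("part size must be between 5 MiB and 5 GiB");
        }
        if (fileBytes <= 0) {
            throw new IllegalArgumentException("file size must be positive");
        }
        long parts = (fileBytes + partBytes - 1) / partBytes; // ceiling division
        if (parts > MAX_PARTS) {
            throw new IllegalArgumentException(parts + " parts exceed the limit; use a larger part size");
        }
        return (int) parts;
    }

    /** Size of part n (1-based); only the last part may be smaller than partBytes. */
    static long sizeOfPart(long fileBytes, long partBytes, int n) {
        int count = partCount(fileBytes, partBytes);
        if (n < 1 || n > count) {
            throw new IllegalArgumentException("no part " + n + " in a " + count + "-part upload");
        }
        return n < count ? partBytes : fileBytes - (count - 1L) * partBytes;
    }
}
```

For a 12 MiB file with 5 MiB parts, `partCount` returns 3 and the last part is 2 MiB, which S3 accepts because it is the final part.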

Create ESR Objects

We have 2 different software components for the source and target systems, so this scenario goes from Software Component 1 to Software Component 2. We also use a common structure to write metadata such as UploadIDs and ETags into the metadata file.

1. Data Types

- Request Data Types in both software components.

- Response Data Type in Software Component 1

- Request Data Type in Software Component 2. The CompleteMultipartUpload data type is not used in a pass-through mapping, because it carries actual data that has to be sent.

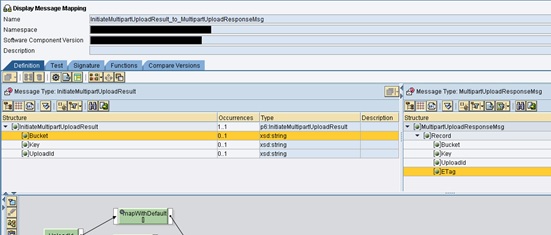

- Response Data Type in Software Component 2. The InitiateMultipartUploadResult data type is used to capture the response of both Initiate MPU and Upload Part because the UploadID and ETags are part of the header.
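In the S3 API, the Initiate Multipart Upload response returns the UploadId in an `InitiateMultipartUploadResult` XML body, while Upload Part returns the ETag as a response header. Since namespaces caused trouble in this scenario, one option is to read that body namespace-agnostically. The Java sketch below (hypothetical class `InitiateResult`, using only the JDK DOM parser) extracts the `UploadId` whether or not the body declares the S3 namespace. It is an illustration, not the author's mapping.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/** Reads the UploadId from an InitiateMultipartUploadResult response body (sketch). */
final class InitiateResult {

    private InitiateResult() {}

    static String uploadId(String responseXml) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Namespace awareness is required for getElementsByTagNameNS to work.
            factory.setNamespaceAware(true);
            doc = factory.newDocumentBuilder().parse(new InputSource(new StringReader(responseXml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("response is not well-formed XML", e);
        }
        // "*" matches any namespace, so the lookup works with or without
        // xmlns="http://s3.amazonaws.com/doc/2006-03-01/" on the root element.
        NodeList ids = doc.getElementsByTagNameNS("*", "UploadId");
        if (ids.getLength() == 0) {
            throw new IllegalArgumentException("no UploadId element in response");
        }
        return ids.item(0).getTextContent().trim();
    }
}
```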

2. Message Types

- Request Message Type in Software Component 1

- Response Message Type in Software Component 1

- Request Message Type in Software Component 2

- Response Message Type in Software Component 2 - I changed the namespace of these structures because I didn’t want to develop code or an adapter module to handle it separately. As with the data type, this message type is shared by the Initiate MPU and UploadPart API response structures.

3. Service Interfaces

- One outbound service for all requests from Software Component 1

- One inbound service for all responses from Software Component 2

- 3 Synchronous inbound services to send the request messages

- 3 outbound asynchronous services to receive the responses – You will notice that we have separate services for Initiate MPU and UploadPart even though their structure is the same. This is because their mappings are different.

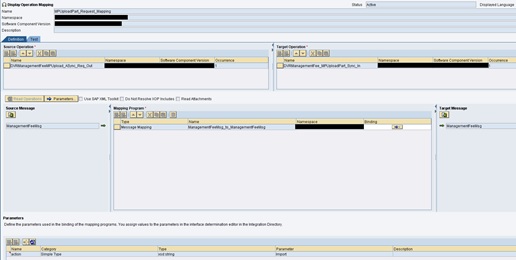

4. Mapping Programs – I’ve added a parameter called action because I reuse the same library code for both single chunk and multipart upload, and the distinction is needed to calculate the AWS signature for the payload. The mappings themselves are pretty straightforward; the signature calculation is handled entirely in the library. Code for AWS Signature Calculation: https://github.com/pnathan01/AWSSAPMultipartUploadLibrary

- Request Mapping – Initiate Multipart Upload.

- Request Mapping – Upload Part

- Request Mapping – Complete Multipart Upload – Conversion and Formatting

- Response Mapping - Initiate Multipart Upload – Java code to handle empty message

- Response Mapping – Upload Part – Java code to handle empty message received.

- Response Mapping – Complete Multipart Upload. The response is namespace-agnostic, causing issues in PI.

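The Complete Multipart Upload request mapping essentially turns the collected part numbers and ETags into the XML body S3 expects. A minimal Java sketch of that conversion follows (hypothetical class `CompleteMpuBody`; element names follow the S3 `CompleteMultipartUpload` request syntax). Sorting by part number matters because S3 requires the parts to be listed in ascending order, in case the ETags were recorded out of order.

```java
import java.util.Map;
import java.util.TreeMap;

/** Builds the XML body for a Complete Multipart Upload request (sketch). */
final class CompleteMpuBody {

    private CompleteMpuBody() {}

    static String build(Map<Integer, String> etagsByPartNumber) {
        if (etagsByPartNumber.isEmpty()) {
            throw new IllegalArgumentException("at least one part is required");
        }
        StringBuilder xml = new StringBuilder(
                "<CompleteMultipartUpload xmlns=\"http://s3.amazonaws.com/doc/2006-03-01/\">");
        // TreeMap iterates in ascending key order, which S3 requires for the part list.
        for (Map.Entry<Integer, String> part : new TreeMap<>(etagsByPartNumber).entrySet()) {
            xml.append("<Part><ETag>").append(escape(part.getValue())).append("</ETag>")
               .append("<PartNumber>").append(part.getKey()).append("</PartNumber></Part>");
        }
        return xml.append("</CompleteMultipartUpload>").toString();
    }

    // ETags usually arrive wrapped in double quotes, which may stay as they are
    // in element content; XML markup characters must still be escaped.
    private static String escape(String value) {
        return value.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
    }
}
```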

5. Operation Mappings

- Request OM – Initiate Multipart Upload

- Request OM – Upload Part

- Request OM – Complete Multipart Upload

- Response OM - Initiate Multipart Upload

- Response OM – Upload Part

- Response OM – Complete Multipart Upload

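Because the signature calculation is the one non-trivial part of the mappings, here is a minimal Java sketch of the two Signature Version 4 building blocks every request needs: the hex SHA-256 hash of the payload (sent as `x-amz-content-sha256` and used in the canonical request) and the HMAC-SHA256 chain that derives the signing key. It uses only the JDK (`MessageDigest`, `javax.crypto.Mac`). The class name `SigV4` is hypothetical; this is not the code from the author's library, and building the canonical request and string-to-sign is left out.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Signature Version 4 building blocks: payload hash and signing-key derivation (sketch). */
final class SigV4 {

    private SigV4() {}

    /** Lower-case hex SHA-256 of the payload (value of the x-amz-content-sha256 header). */
    static String payloadHash(byte[] payload) {
        try {
            return hex(MessageDigest.getInstance("SHA-256").digest(payload));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }

    /** kSigning = HMAC(HMAC(HMAC(HMAC("AWS4" + secret, date), region), service), "aws4_request"). */
    static byte[] signingKey(String secretKey, String yyyymmdd, String region, String service) {
        byte[] kDate = hmac(("AWS4" + secretKey).getBytes(StandardCharsets.UTF_8), yyyymmdd);
        byte[] kRegion = hmac(kDate, region);
        byte[] kService = hmac(kRegion, service);
        return hmac(kService, "aws4_request");
    }

    /** Final signature: hex HMAC-SHA256 of the string-to-sign, keyed with the signing key. */
    static String signature(byte[] signingKey, String stringToSign) {
        return hex(hmac(signingKey, stringToSign));
    }

    private static byte[] hmac(byte[] key, String data) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(data.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HmacSHA256 not available", e);
        }
    }

    private static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder(bytes.length * 2);
        for (byte b : bytes) {
            sb.append(String.format("%02x", b)); // negative bytes are formatted as unsigned
        }
        return sb.toString();
    }
}
```

For S3 the service name passed to `signingKey` is `s3`. A part upload and a Complete Multipart Upload differ only in the payload being hashed, which is presumably the kind of distinction the mappings' action parameter drives.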

Create ID Objects

We will create the following ID objects:

- 1 ICO for splitting the files

- 1 ICO for sending out the request part of the Async-Sync bridge

- 3 ICOs for receiving the response

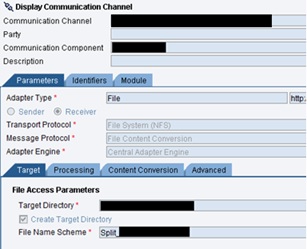

- File Split Scenario

- Sender Channel – The file is split based on the number of records, because AWS MPU requires all parts except the last one to be at least 5 MB.

Here I call 2 scripts, before and after message processing. The first one creates a file named <FILE_NAME>_00000000-000000-000.txt. This is the first file to be processed, and it triggers the Initiate Multipart Upload. The second script creates the last file, named <FILE_NAME>_99991231-235959-000.txt, along with Temp_Metadata.txt. The last file triggers the Complete Multipart Upload. The metadata file holds all the UploadIDs and ETags for logging, audit and monitoring purposes. I haven’t provided the script code because it is simple and depends on your OS.

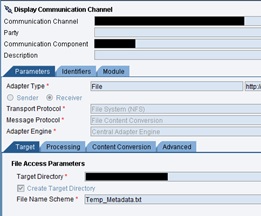

- Receiver Channel

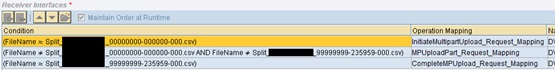

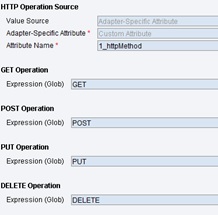
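The marker file names work because they sort before and after any real timestamp. The Java sketch below (hypothetical class `SplitFileNames`) shows that ordering. It assumes the split files carry a timestamp in the same `yyyyMMdd-HHmmss-SSS` format and that the files are processed in name order; the author creates the marker files with OS scripts, which are not reproduced here.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Names for the marker files and the split parts of one upload (sketch). */
final class SplitFileNames {

    // Same layout as the marker suffixes: yyyyMMdd-HHmmss-SSS
    private static final DateTimeFormatter STAMP = DateTimeFormatter.ofPattern("yyyyMMdd-HHmmss-SSS");

    private SplitFileNames() {}

    /** Created before splitting; triggers Initiate Multipart Upload. */
    static String first(String base) {
        return base + "_00000000-000000-000.txt";
    }

    /** Created after splitting; triggers Complete Multipart Upload. */
    static String last(String base) {
        return base + "_99991231-235959-000.txt";
    }

    /** A split part stamped with its creation time (assumed naming). */
    static String part(String base, LocalDateTime createdAt) {
        return base + "_" + STAMP.format(createdAt) + ".txt";
    }
}
```

Since all names share the same prefix and suffix length, plain lexicographic sorting places the initiate marker first, the parts in time order, and the complete marker last.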

- Request Send Scenario

- ICO

- Sender Channel

- Receiver Channels

- Channel 1 – Request to Initiate Multipart Upload

- Channel 2 – Request to Upload Part

- Channel 3 – Request to Complete Multipart Upload

- Response Receive Scenario – Initiate Multipart Upload

- ICO

- Sender Channel

- Receiver Channel

- Response Receive Scenario – Upload Part

- ICO

- Sender Channel

- Receiver Channel

- Response Receive Scenario – Complete Multipart Upload

- ICO

- Sender Channel

- Receiver Channel

Unit Test

I have provided only the skeleton and the basic information needed to implement this requirement. Because the request part routes to multiple interfaces, I found it difficult to implement EOIO (Exactly Once In Order) quality of service in this scenario. Hence I had to introduce a mandatory delay of 600 seconds. This was calculated based on the time it took to upload one part of the file, which was 6-7 minutes.

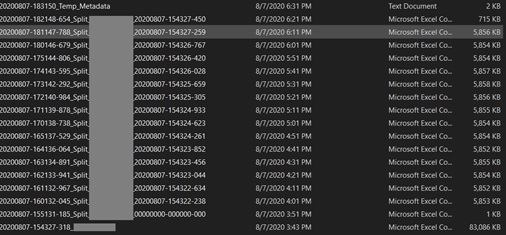

Also, after the Complete Multipart Upload response is written, the metadata file is archived. This is a sample file to be uploaded.

A file of this size is split into multiple files of size slightly larger than 5 MB.

The metadata file contains the following data. This is written into the file by the 3 response mappings.

Conclusion

This way, we don’t have to worry about the size of the files uploaded into AWS S3. But there are a few points to consider:

- SAP PO has no provision for greater than or less than in the condition editor. This prevented us from routing the file to single chunk or multipart upload based on its size.

- AWS has no central repository for the structures it uses. Having one would have let us simply download and use them; instead, we spent quite some time resolving namespace issues.

- A Java mapping could eliminate the need for separate mappings to resolve namespace issues. A Java mapping would also make EOIO quality of service possible for a file sender with multiple receivers.

- The processing time of this scenario grows steeply with the number of parts the file is divided into. This could be optimized by handling files differently based on threshold values. For example, files of 5 MB or less could be uploaded in a single chunk, and larger files using multipart upload.

- The absence of EOIO means that only one file can be present in the folder at a time. With multiple files, multiple metadata files are created, and the process cannot reliably write each ETag back to the correct metadata file.

- Cleanup APIs also need to be implemented in case a file upload fails. A fresh attempt starts with a new UploadID, so the parts of the failed upload remain stored in S3. AWS provides APIs to list all in-progress multipart uploads and to abort the incomplete ones. This way, we can ensure that the solution stays clean.
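The cleanup described in the last point can be sketched in Java as follows. The hypothetical class `StaleUploads` reads a `ListMultipartUploadsResult` response (returned by the ListMultipartUploads API, `GET /?uploads` on the bucket) and produces an AbortMultipartUpload request (`DELETE /key?uploadId=ID`) for every upload initiated before a cutoff. It ignores pagination (`IsTruncated`) and assumes keys and UploadIds are already URL-safe.

```java
import java.io.StringReader;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

/** Turns a ListMultipartUploads response into abort requests for stale uploads (sketch). */
final class StaleUploads {

    /** Request that lists in-progress multipart uploads in the bucket. */
    static final String LIST_REQUEST = "GET /?uploads";

    private StaleUploads() {}

    static List<String> abortRequests(String listXml, Instant cutoff) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            doc = factory.newDocumentBuilder().parse(new InputSource(new StringReader(listXml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("response is not well-formed XML", e);
        }
        List<String> requests = new ArrayList<>();
        NodeList uploads = doc.getElementsByTagNameNS("*", "Upload");
        for (int i = 0; i < uploads.getLength(); i++) {
            Element upload = (Element) uploads.item(i);
            // Initiated is an ISO-8601 UTC timestamp such as 2020-01-01T00:00:00.000Z.
            Instant initiated = Instant.parse(text(upload, "Initiated"));
            if (initiated.isBefore(cutoff)) {
                requests.add("DELETE /" + text(upload, "Key") + "?uploadId=" + text(upload, "UploadId"));
            }
        }
        return requests;
    }

    private static String text(Element parent, String localName) {
        return parent.getElementsByTagNameNS("*", localName).item(0).getTextContent().trim();
    }
}
```

As an alternative, an S3 bucket lifecycle rule with `AbortIncompleteMultipartUpload` can remove incomplete uploads automatically a set number of days after they were initiated.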

Feel free to suggest any improvements or share feedback; this was built under a time crunch, and there can be many more ways to optimize it.

Lastly, I’ve listed the links/people I referred to during the development of this requirement.

- https://blogs.sap.com/2019/07/18/idoc-rest-async-sync-scenario-approach-with-aleaud/

- https://blogs.sap.com/2019/05/31/integrating-amazon-simple-storage-service-amazon-s3-and-sap-ecc-v6....

- https://docs.aws.amazon.com/AmazonS3/latest/dev/mpuoverview.html

- https://github.com/pnathan01/AWSSAPMultipartUploadLibrary

- https://wiki.scn.sap.com/wiki/display/XI/Where+to+get+the+libraries+for+XI+development

- https://launchpad.support.sap.com/#/notes/0001763011

- Robbie Cooray from AWS – He was my go-to person for valuable inputs and great ideas. Thanks, Robbie!
