Identify problems in Windows Failover cluster envi...

Technology Blogs by SAP

09-01-2020, 12:09 PM

This blog gives you some hints for identifying possible network- or storage-related problems in Windows Failover clusters. If applications "hang" in a cluster, the root cause is hard to identify once the cluster has initiated multiple failovers.

In this example, we use a Windows Failover cluster with a clustered ASCS instance and a clustered ERS instance. The procedure described in this blog is based on an S/4 installation, but it is equally valid for NetWeaver-based installations with the old, non-clustered ERS instance.

Let's assume we have an issue with the shared disk used by the ASCS instance, or a network-related issue.

As a result, the process "msg_server.exe" (the SAP Message Server) turns "yellow" in SAP MMC.

The SAP start service (sapstartsrv) has detected this behavior: while the other three processes are green (responding), the message server is yellow (started, but not responding).

The SAP cluster resource DLL (saprc.dll) periodically asks sapstartsrv about the health of these four processes. If one of them is yellow, the cluster waits 60 seconds before it initiates a failover.

You can adjust this waiting time with the parameter "Acceptable Yellow Time" in the properties of the SAP instance resource, and increase it if needed.

If the hang is not resolved within the "Acceptable Yellow Time", the cluster fails over the ASCS cluster group.
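The waiting logic described above can be modeled in a few lines. This is a minimal Python sketch under our own assumptions, not the actual saprc.dll implementation: we assume the HA state is polled at intervals and that a failover is triggered once the instance has been yellow without interruption for at least the Acceptable Yellow Time (60 seconds in this example). The function name `failover_time` and the sample format are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical model of the "Acceptable Yellow Time" check; not saprc.dll code.
# Each sample is (seconds_since_start, ha_state) as reported by one poll.
Sample = Tuple[float, str]


def failover_time(samples: Sequence[Sample],
                  acceptable_yellow_time: float = 60.0) -> Optional[float]:
    """Return the poll time at which a failover would be triggered, or None.

    Assumption: a failover is triggered as soon as the state has been
    continuously yellow for at least acceptable_yellow_time seconds.
    Any non-yellow sample resets the timer (red/stopped states are out of
    scope for this sketch).
    """
    yellow_since: Optional[float] = None
    for t, state in samples:
        if state == "yellow":
            if yellow_since is None:
                yellow_since = t  # start of the current yellow period
            if t - yellow_since >= acceptable_yellow_time:
                return t
        else:
            yellow_since = None  # the process recovered in time
    return None


if __name__ == "__main__":
    polls = [(0, "green"), (10, "yellow"), (40, "yellow"), (70, "yellow")]
    print(failover_time(polls))       # prints 70: yellow for 60 s at t=70
    print(failover_time(polls, 300))  # prints None: a longer yellow time avoids the failover
```

The second call shows why raising the Acceptable Yellow Time helps with processes that are only temporarily unresponsive.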

You can find the reason for this failover in the cluster log of the node where the instance failed:

```text
ERR [RES] SAP Resource <SAP BF3 00 Instance>: Error ntha-197 SAPBF3_00 is not alive. HA state: yellow. HA relevant apps: 3 of 4 [ msg_server:8292=yellow enq_server:6252=green gwrd:660=green ]
ERR [RES] SAP Resource <SAP BF3 00 Instance>: Error ntha-310 SAP BF3 00 Instance cluster resource is dead, instance: SAPBF3_00 has timed out after: 60 sec, current state is not online: HA state: yellow. HA relevant apps: 3 of 4 [ msg_server:8292=yellow enq_server:6252=green gwrd:660=green ]
```

However, these entries only show the symptom, not the root cause of the hang.
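After several failovers, the cluster log can be long, and it helps to extract the per-process states from these error lines automatically. The following Python sketch assumes the `HA relevant apps: ... [ name:pid=state ... ]` format shown in the two log lines above; the function names `parse_ha_apps` and `hanging_processes` are hypothetical, and other saprc.dll versions may log in a different format.

```python
import re
from typing import Dict, Tuple

# Matches the bracketed list, e.g. "[ msg_server:8292=yellow enq_server:6252=green ]".
_APPS_RE = re.compile(r"HA relevant apps: \d+ of \d+ \[([^\]]*)\]")
# Matches one "name:pid=state" entry inside that list.
_ENTRY_RE = re.compile(r"(\w+):(\d+)=(\w+)")


def parse_ha_apps(line: str) -> Dict[str, Tuple[int, str]]:
    """Map process name -> (PID, HA state) for one cluster log line."""
    match = _APPS_RE.search(line)
    if match is None:
        return {}
    return {name: (int(pid), state)
            for name, pid, state in _ENTRY_RE.findall(match.group(1))}


def hanging_processes(line: str) -> Dict[str, int]:
    """Return name -> PID of every listed process that is not green."""
    return {name: pid for name, (pid, state) in parse_ha_apps(line).items()
            if state != "green"}


if __name__ == "__main__":
    log_line = ("Error ntha-197 SAPBF3_00 is not alive. HA state: yellow. "
                "HA relevant apps: 3 of 4 [ msg_server:8292=yellow "
                "enq_server:6252=green gwrd:660=green ]")
    print(hanging_processes(log_line))  # {'msg_server': 8292}
```

The PID makes it easier to match the entry with the process that was running on that node at the time.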

We need more information from the hanging executable, in our example msg_server.exe. A hanging application can no longer write to its trace file, write events to the Windows application log, or do anything else useful. It might hang in a TCP/IP socket call, an I/O operation (open file, write file …) or any other OS function call.

If you use saprc.dll version 4.x or higher, you can create dumps of the SAP processes that reveal the root cause of the hang.

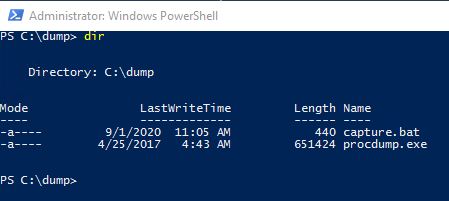

Prepare a folder called "c:\dump" on every cluster node and copy the tool "procdump.exe" into it. You can download this tool from www.sysinternals.com. Then create a small batch script called "capture.bat".

The capture.bat file should include these lines:

```bat
@echo off
REM Start a new log file for this capture run
echo Start capturing dumps: > c:\dump\capture.log
echo. >> c:\dump\capture.log
REM Write a full memory dump (-ma) of each SAP process to c:\dump,
REM overwrite an existing dump file (-o), accept the Sysinternals EULA silently
c:\dump\procdump.exe -ma -o -accepteula msg_server.exe c:\dump >> c:\dump\capture.log
c:\dump\procdump.exe -ma -o -accepteula gwrd.exe c:\dump >> c:\dump\capture.log
c:\dump\procdump.exe -ma -o -accepteula enq_server.exe c:\dump >> c:\dump\capture.log
echo. >> c:\dump\capture.log
echo End capturing dumps. >> c:\dump\capture.log
```

The script runs procdump, creates a full dump of each relevant SAP process (three in this example) and writes a small log file. If your ASCS instance runs additional processes, adjust the process names accordingly.

If you use the old enqueue server (enserver.exe), replace the new enqueue server executable (enq_server.exe) with enserver.exe in the corresponding line.
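If you maintain this script on several nodes or switch between the old and new enqueue server, you could generate capture.bat instead of editing it by hand. The following Python sketch is only an illustration under our own assumptions: the folder c:\dump and the procdump options are taken from the script above, while the function name `build_capture_script` and its `old_enqueue` switch are hypothetical.

```python
from typing import Iterable, List

DUMP_DIR = r"c:\dump"  # folder prepared on every cluster node
LOG_FILE = DUMP_DIR + r"\capture.log"


def build_capture_script(processes: Iterable[str],
                         old_enqueue: bool = False) -> str:
    """Return the text of capture.bat for the given executables.

    With old_enqueue=True, enq_server.exe (new enqueue server) is replaced
    by enserver.exe (old enqueue server).
    """
    lines: List[str] = [
        "@echo off",
        f"echo Start capturing dumps: > {LOG_FILE}",
        f"echo. >> {LOG_FILE}",
    ]
    for exe in processes:
        if old_enqueue and exe.lower() == "enq_server.exe":
            exe = "enserver.exe"
        # -ma: full memory dump, -o: overwrite an existing dump file
        lines.append(f"{DUMP_DIR}\\procdump.exe -ma -o -accepteula "
                     f"{exe} {DUMP_DIR} >> {LOG_FILE}")
    lines += [f"echo. >> {LOG_FILE}", f"echo End capturing dumps. >> {LOG_FILE}"]
    # Use Windows line endings, as is usual for batch files.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    script = build_capture_script(
        ["msg_server.exe", "gwrd.exe", "enq_server.exe"], old_enqueue=True)
    print(script)
```

Write the returned text to c:\dump\capture.bat on every cluster node so that the script is identical everywhere.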

Now configure saprc.dll to start this script when a hang is recognized as a failure.

If the problem occurs again, the script is executed on the node where the failure happens. The SAP processes listed in the script are dumped, and then the cluster continues to fail over the cluster group.

Send the dumps to SAP for further analysis. They explain why the process stopped responding.

More hints:

Some customers had issues with the SAP gateway process: in certain situations, for reasons not yet known, it took a long time to start.

There are two ways to handle this:

- Increase AcceptableYellowTime to a high value, for example 5 minutes (300 seconds), as described above.

- Configure the gateway process (gwrd.exe) as a so-called "not HA relevant" application for saprc.dll. saprc.dll then no longer checks the health of gwrd.exe, and if gwrd.exe is yellow in SAP MMC, the cluster does not initiate a failover.
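The effect of declaring gwrd.exe as not HA relevant can be illustrated with a small Python sketch. As a simplification of our own, it assumes that the instance takes the worst state of its HA-relevant processes and that processes in the not-HA-relevant set are simply skipped; the function `instance_ha_state` is hypothetical and does not mirror saprc.dll internals.

```python
from typing import Iterable, Mapping

# Assumed severity order for this sketch: the worst relevant state wins.
_SEVERITY = {"green": 0, "yellow": 1, "red": 2}


def instance_ha_state(process_states: Mapping[str, str],
                      not_ha_relevant: Iterable[str] = ()) -> str:
    """Aggregate per-process states into one instance state.

    Processes listed in not_ha_relevant are not checked at all.
    """
    ignored = set(not_ha_relevant)
    relevant = [state for name, state in process_states.items()
                if name not in ignored]
    if not relevant:
        return "green"
    return max(relevant, key=lambda state: _SEVERITY[state])


if __name__ == "__main__":
    states = {"msg_server": "green", "enq_server": "green", "gwrd": "yellow"}
    print(instance_ha_state(states))            # prints yellow
    print(instance_ha_state(states, ["gwrd"]))  # prints green: gwrd is ignored
```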

Apart from the process dumps, check the dev_ms, dev_enq* and dev_rd trace files, which could also explain the problem.

If the cluster initiates multiple failovers, these trace files might be overwritten when the SAP instance is started again, and this information is lost. If you use saprc.dll version 4.0.0.11 or higher, saprc.dll creates backups of these trace files for later inspection.

The cluster log then shows entries like these:

```text
INFO [RES] SAP Resource <SAP BF3 00 Instance>: saved away c:\usr\sap\bf3\ascs00\work\dev_ms to: c:\usr\sap\bf3\ascs00\work\dev_ms_terminated.txt
INFO [RES] SAP Resource <SAP BF3 00 Instance>: saved away c:\usr\sap\bf3\ascs00\work\dev_enq_server to: c:\usr\sap\bf3\ascs00\work\dev_enq_server_terminated.txt
INFO [RES] SAP Resource <SAP BF3 00 Instance>: saved away c:\usr\sap\bf3\ascs00\work\dev_rd to: c:\usr\sap\bf3\ascs00\work\dev_rd_terminated.txt
```

You can find the saved files in the \work folder of the ASCS instance.
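To collect these backups quickly, for example before sending them to SAP together with the process dumps, you could parse the "saved away" entries from the cluster log or list the *_terminated.txt files in the work folder. The Python sketch below does both; it assumes the file naming shown in the log lines above and paths without spaces, and the function names are hypothetical.

```python
import re
from pathlib import Path
from typing import Dict, List

# "saved away <original trace file> to: <backup file>" (paths without spaces)
_SAVED_RE = re.compile(r"saved away (\S+) to: (\S+)")


def saved_traces(cluster_log: str) -> Dict[str, str]:
    """Map each original trace file to its backup, as logged by saprc.dll."""
    return dict(_SAVED_RE.findall(cluster_log))


def terminated_files(work_dir: str) -> List[Path]:
    """List the *_terminated.txt backups in an instance work folder, sorted by name."""
    return sorted(Path(work_dir).glob("*_terminated.txt"))


if __name__ == "__main__":
    line = (r"INFO [RES] SAP Resource <SAP BF3 00 Instance>: saved away "
            r"c:\usr\sap\bf3\ascs00\work\dev_ms to: "
            r"c:\usr\sap\bf3\ascs00\work\dev_ms_terminated.txt")
    for original, backup in saved_traces(line).items():
        print(original, "->", backup)
```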
