
05-06-2020

4:04 PM

Using the SAP HANA Cloud Athena API adapter to access S3 data

In this blog post we will explore how to access CSV data stored in Amazon S3 from SAP HANA Cloud.

This can be a handy approach when customers want to consume S3 data directly in HANA Cloud.

Prerequisites

You should have admin access to the AWS console, along with the Access Key ID and the secret access key for the AWS user.

You should have access to SAP Cloud Platform and an SAP HANA Cloud instance.

Log in to the AWS console and perform the steps below.

1. Edit your AWS user and add the following two policies to it:

- AWSQuicksightAthenaAccess

- AmazonAthenaFullAccess

2. Create an S3 bucket in AWS and create two folders in it, one for uploading the CSV data file and the other for storing query results.

3. Create an AWS Athena service and configure it to consume data from the S3 bucket.

First create an Athena service and then click the “set up a query results location in Amazon S3” link.

Set the query results location to the S3 folder you created for query results.

Click on the workgroup and add the query results location there as well.

Create an Athena database and a table based on the CSV file. Use the create table (from S3 bucket data) wizard in the query editor.

The wizard will ask for the database name, table name, S3 location, data format, column names and data types. Once the wizard has created the table, you can query it from the query editor.
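For reference, the wizard produces a Hive-style DDL statement that could also be run by hand in the Athena query editor. The sketch below assumes a hypothetical database `s3demo`, a table `table1`, three made-up columns, a header row in the CSV file, and a placeholder bucket path. None of these come from the original setup, so adjust them to match your file.

```sql
-- Hypothetical names throughout: s3demo, table1, the columns, and the S3 path.
-- The Athena query editor runs one statement at a time.
CREATE DATABASE IF NOT EXISTS s3demo;

CREATE EXTERNAL TABLE IF NOT EXISTS s3demo.table1 (
  id     INT,
  name   STRING,
  amount DOUBLE
)
ROW FORMAT DELIMITED
  FIELDS TERMINATED BY ','
STORED AS TEXTFILE
-- LOCATION points at the folder that holds the CSV file, not at the file itself.
LOCATION 's3://<bucket_name>/<data_folder>/'
-- Assumption: the first line of the CSV file is a header row.
TBLPROPERTIES ('skip.header.line.count' = '1');

-- Quick check that Athena can read the file.
SELECT * FROM s3demo.table1 LIMIT 10;
```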

Now go to SAP Cloud Platform and click SAP HANA Cloud.

Open the SAP HANA database explorer from the Open in option under the SAP HANA service information. Note that you need the CREATE REMOTE SOURCE system privilege. If your user does not have this privilege, ask your administrator to grant it.

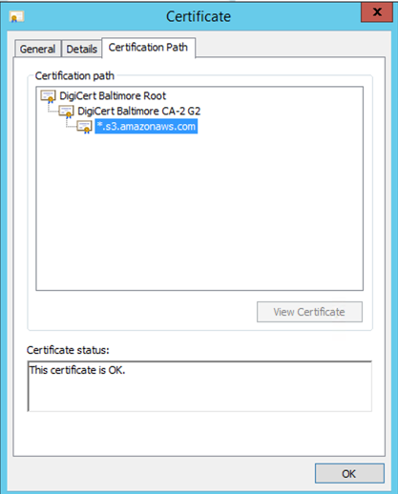
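If you are the administrator, the privilege can be granted with a statement along these lines. `MY_USER` is a hypothetical user name. The two extra grants are an assumption on my part: the certificate and PSE statements used later in this post typically need the CERTIFICATE ADMIN and TRUST ADMIN system privileges, so check what your user already has before running them.

```sql
-- Run as a user who may grant system privileges (for example DBADMIN).
-- MY_USER is a hypothetical user name.
GRANT CREATE REMOTE SOURCE TO MY_USER;

-- Assumption: needed for CREATE CERTIFICATE and for creating/altering a PSE.
GRANT CERTIFICATE ADMIN TO MY_USER;
GRANT TRUST ADMIN TO MY_USER;
```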

The Athena adapter needs both Athena and S3 certificates for TLS/SSL. Download the required root certificates from the following sites:

https://aws.amazon.com/ (Amazon Root CA 1)

https://<bucket_name>.s3.amazonaws.com (Baltimore CyberTrust Root)

I would suggest downloading all the certificates from these two sites.

https://aws.amazon.com has five certificates.

https://hscbucket.s3.amazonaws.com has three certificates.

Once the certificates are downloaded, run the following commands to create a PSE, add the certificates to it, and set its purpose.

CREATE PSE PSE1;

CREATE CERTIFICATE FROM '

-----BEGIN CERTIFICATE-----

MIIF+DCCBOCgAwIBAgIQA7LS7ojPMbWMYHYrCWXTaDANBgkqhkiG9w0BAQsFA

## lines removed ##

MlnNeJZsTV8qxACwZEO9v+mLjAc5gGRK/T4jZTMwUF2O1QJcvSzuW5B9y4w=

-----END CERTIFICATE-----

' COMMENT 'AWS1CERT';

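Repeat the CREATE CERTIFICATE FROM command above for all eight certificates, giving each one a distinct comment so that you can look it up afterwards.

Add the certificates to the PSE created above. Look up each certificate ID by its comment, then add it to the PSE: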

SELECT CERTIFICATE_ID FROM CERTIFICATES WHERE COMMENT = '<comment>';

ALTER PSE PSE1 ADD CERTIFICATE <certificate_id>;

Set the purpose of the PSE to remote source using the SQL below:

SET PSE PSE1 PURPOSE REMOTE SOURCE;

Here is a screenshot of my certificate store once the above exercise is done.
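The same check can be done in SQL. This is a minimal sketch that assumes the `PSES` and `PSE_CERTIFICATES` system views are visible to your user; the second query should list one row for each certificate you added to `PSE1`.

```sql
-- Confirm the PSE exists and carries the expected purpose.
SELECT NAME, PURPOSE
  FROM PSES
 WHERE NAME = 'PSE1';

-- List the certificates that were added to the PSE.
SELECT PSE_NAME, CERTIFICATE_ID
  FROM PSE_CERTIFICATES
 WHERE PSE_NAME = 'PSE1';
```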

Now you can create a new remote source by right-clicking Remote Sources in the database explorer. You will need the Access Key ID and the secret access key for the AWS user, and you need to choose Athena as the adapter.
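To confirm that the remote source was saved, you could query the `REMOTE_SOURCES` system view from a SQL console. This is only a quick check; the name returned is whatever you entered in the dialog.

```sql
-- The new remote source should appear here with the Athena adapter.
SELECT REMOTE_SOURCE_NAME, ADAPTER_NAME
  FROM REMOTE_SOURCES;
```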

You will be able to see the databases and tables you created in Athena. Check the box next to the table name and then create a virtual object.

Now you will be able to see table1 in schema DBADMIN.

You can now display the data from the S3 bucket in HANA Cloud.
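From a SQL console, the virtual table can be inspected and queried like any other table. The sketch below assumes the virtual object was created as `table1` (lowercase, hence the quoted identifier) in schema `DBADMIN`; if you kept a different name or case in the dialog, adjust the identifier accordingly.

```sql
-- Confirm the virtual table and the remote object it points to.
SELECT SCHEMA_NAME, TABLE_NAME, REMOTE_SOURCE_NAME, REMOTE_OBJECT_NAME
  FROM VIRTUAL_TABLES
 WHERE SCHEMA_NAME = 'DBADMIN';

-- Preview the S3 data through the virtual table.
-- Assumption: the identifier is lowercase and therefore needs quoting.
SELECT * FROM "DBADMIN"."table1" LIMIT 10;
```

Each query against the virtual table is executed by Athena against the S3 file, so the results location configured earlier will receive a new result file for every run.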

Conclusion

In this blog post, we created an S3 bucket and loaded a CSV file into it. We then created an Athena table on top of it.

Thereafter, we created a remote source using the SAP HANA Cloud Athena API adapter and created a table in SAP HANA Cloud that accesses the data through AWS Athena. With the right configuration on AWS and the right SSL certificates in SAP HANA Cloud, it is quite simple to consume S3 data in SAP HANA Cloud.

- SAP Managed Tags:

- SAP HANA Cloud,

- SAP HANA smart data integration
