
What should the landscape architecture of SAP Analytics Cloud look like and how does it compare with traditional on-premise landscapes? How should I manage the life-cycle of content in SAP Analytics Cloud? I address these and many other common life-cycle management questions in my article.

I first look at typical on-premise landscapes and then compare them with the Cloud: what’s the same and what’s different. The final architecture is determined by many factors, and I present the most important things you need to know, including summaries of:

- Public and Private hosted editions

- SAP Analytics Cloud Test Service and its Preview option

- Quarterly Update Release and Fast Track Update Release cycles

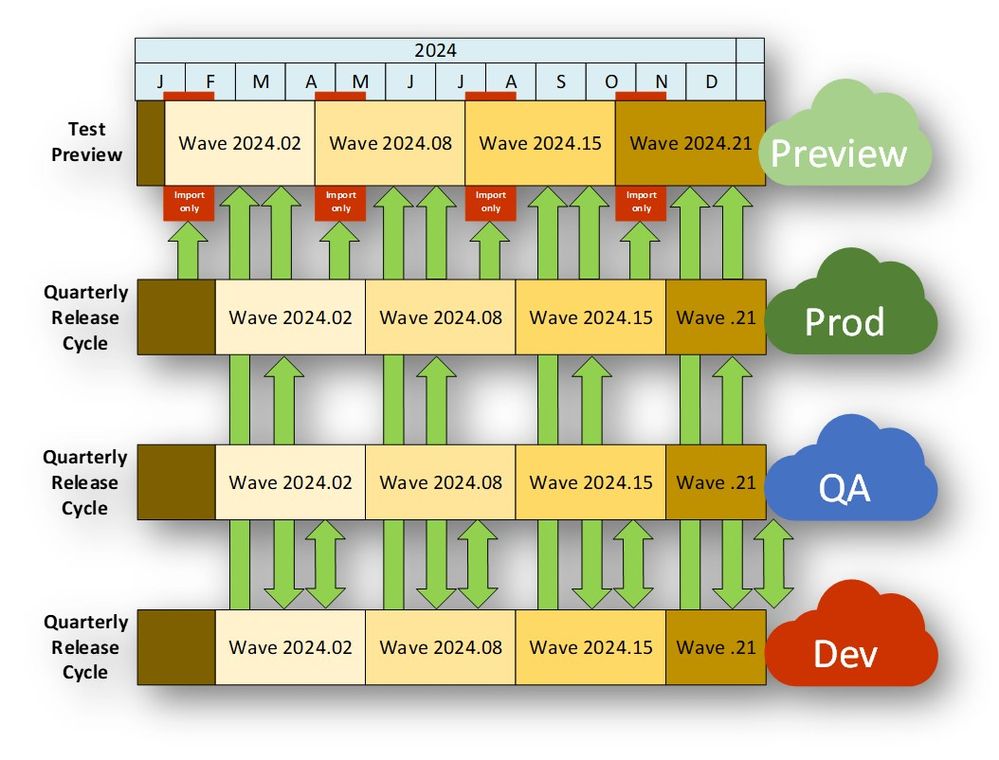

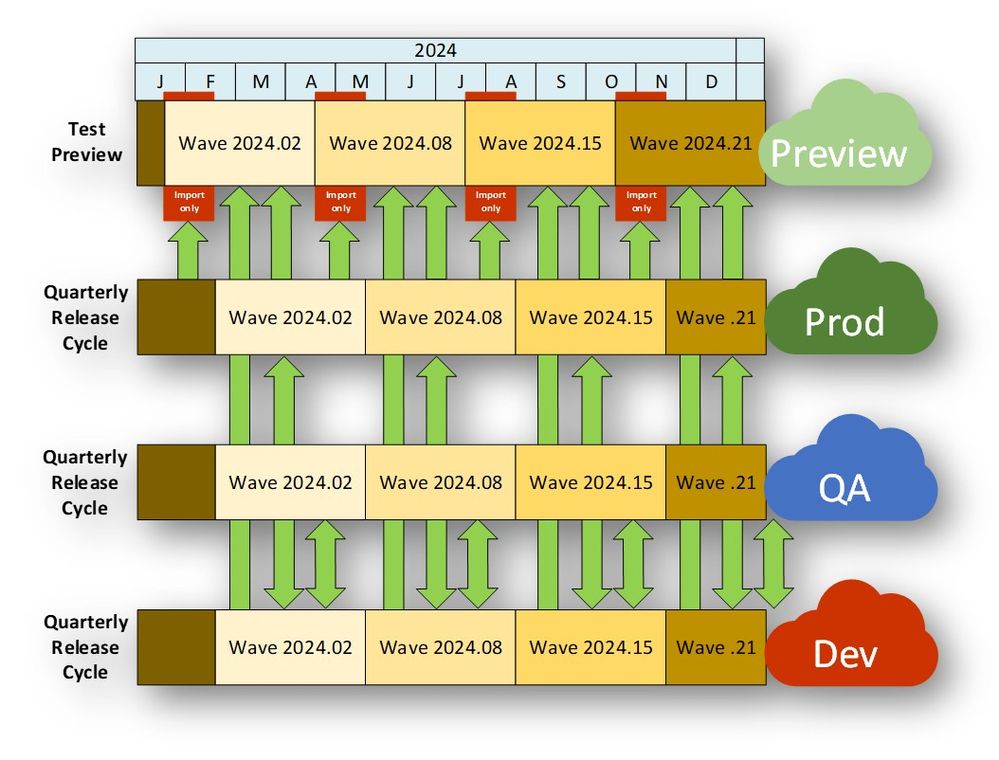

I explain where each fits into the overall life-cycle, allowing you to determine the right architecture for your organisation. This includes explaining how and when you can transport objects between the different Services, for example here between the Preview Service and the regular Quarterly Release Cycle (QRC):

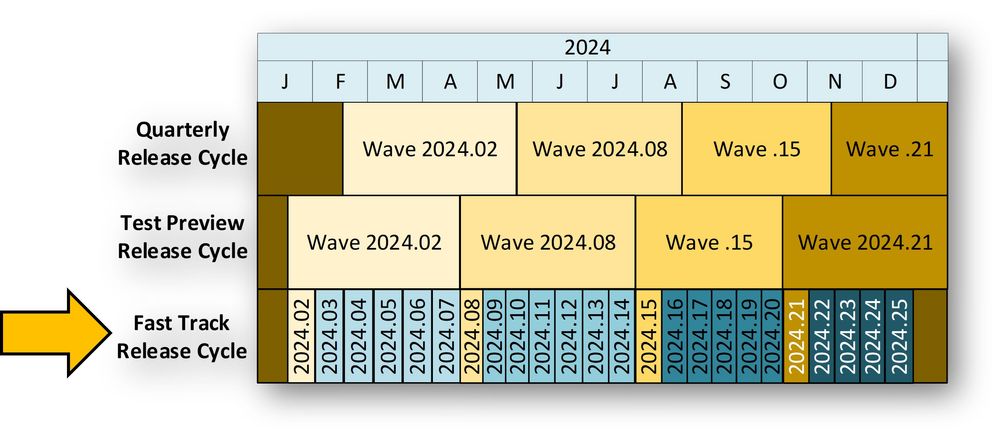

and here between the Quarterly Release Cycle and the 'Fast Track' (the 'Fast Track' is not provisioned by default).

I also present why multiple environments are needed at all and what can be achieved, albeit in a limited fashion, within a single environment when managing the life-cycle of content. I present the typical landscape options chosen by most other customers and the most common path of adoption, giving you some guidance on what the next step on your SAP Analytics Cloud journey might be.

Content Namespaces are also an important concept to understand, and I present some recommendations on how to manage them to avoid known issues and reduce the risk for your projects. I also present the options for transporting objects around the landscape, and I finish with a Best Practice Summary. The article is available below and also in other formats:

| Format | Details |
|---|---|
| Latest Article | Version 1.2.4 - January 2024 |
| Microsoft PowerPoint | Preview Slides - version 1.2.4 |
| Microsoft PowerPoint | Download Slides - version 1.2.4 |
| Video mp4 | Preview 1h 16mins - version 1.1 |
| Video mp4 | Download 1h 16mins - version 1.1 |


Landscape Architecture: On-premise v Cloud

On-premise landscapes

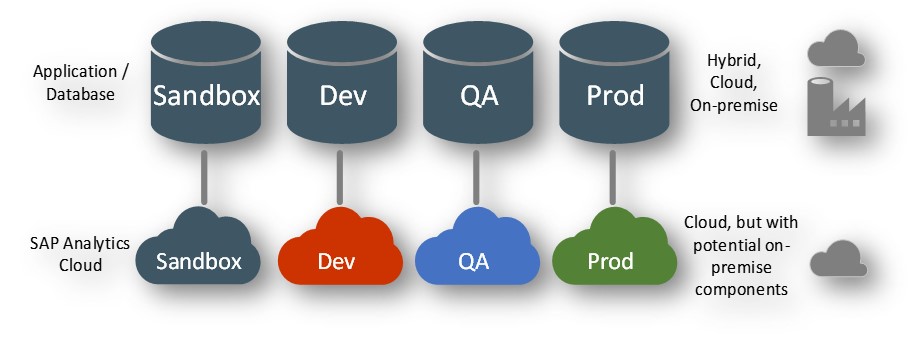

‘Traditional’ on-premise landscape environments typically consist of:

- Two primary tiers

- One for the application/database, e.g. SAP BW

- One for the Business Intelligence or Planning system, e.g. SAP BusinessObjects BI Suite

- Both primary tiers hosted on-premise

- Each environment (Sandbox, Dev, QA, Prod) has a unique role with regard to the content life-cycle

- So, what changes when working with a Cloud Service?

What’s the same

- Typically still need two primary tiers

- Though some use cases can be fulfilled solely with SAP Analytics Cloud

- Still need multiple environments (Sandbox, Dev, QA, Prod)

- The role these environments provide remains valid, albeit now with a more obvious associated cost

What’s different

- The SAP Analytics Cloud services are updated on a scheduled basis

- May have a desire for fewer Cloud environments to reduce Cloud Service fees

- There could be cloud-to-on-premise dependencies

- These impact:

- life-cycle management activities

- on-premise software update cycles

Landscape architecture

Many factors determine the landscape, including:

- Choice of Public and Private hosted editions

- Any use of SAP Analytics Cloud Test Service and its Preview option

- Pros/Cons of Quarterly Release and Fast Track Update Release cycles

- Options and limitations to promote content between the environments

- Maturity of lifecycle management and change control practices

Public and Private Editions

- Public Edition

- Internal SAP HANA database instance is shared, with a schema per customer

- Database performance could be impacted by other customer activity

- Private Edition

- Still has some shared components but the core SAP HANA database instance is dedicated

- Dedicated database instance reduces the impact of other customers’ database activity

- Typically chosen for larger enterprise deployments

- Public Editions are the more common choice in general

- but not exclusively, since Private Editions have increased protection of database performance from other customers’ services

- Both ‘Public’ and ‘Private’ Editions are Public Cloud Services

- There is no Private Cloud Service available, even for the ‘Private’ Edition

- The ‘Private’ Edition is called private only because the SAP HANA database instance is dedicated

Bring Your Own Key

- Customer Controlled Encryption Keys (CCEK) are only available for Private (not Public) Services

- Includes Private Test Services (see later)

- CCEK requires a Data Custodian Key Management Service (DCKMS) tenant for each SAP Analytics Cloud Private Service

- See KBA 3117993: How to use the "Bring Your Own Key (BYOK)" feature

Private Editions – a little more about sizing and performance

- Analytic Models connecting to ‘live’ data sources require minimal hardware resources on SAP Analytics Cloud

- Most of the load is on the data source

- Analytic Models using acquired data use the underlying SAP HANA database, mostly for read access, so there is a load but it is not too heavy

- Likely to need more CPU than memory

- Planning Models using acquired data can require significant resources for both CPU and Memory

- Planning models are more highly dependent upon the model design

- Number of exception aggregations, number of dimensions, scope of the users’ security context when performing data entry

- Physical size of a model isn’t necessarily the determining factor for performance!

- Other factors: number of concurrent users, size and shape of the data, number of schedules etc.

Applicable for all environments

- Public and Private editions are applicable to all environments

- There are no restrictions on what type of edition can be used for any particular life-cycle purpose

- However, performance load testing is only permitted against Private editions

- Penetration testing is positively encouraged against all editions

- For both load and penetration testing, follow SAP Note 2249479 to ensure compliance with contractual agreements, and so we don’t block you thinking it’s a denial-of-service attack!

Test Services

- Provide a unique opportunity, designed with testing in mind

- Include named user Planning Professional licenses and an SAP Digital Boardroom license

- Planning Professional includes the Planning Standard license, and Planning Standard includes the Business Intelligence named user license

- However, there are no Analytics Hub users, nor any concurrent Business Intelligence licenses available with this service

- Typically all Beta features, if requested and granted, can be enabled on Test Services

- Cannot be used productively

- Do not adhere to standard SLAs

- This means you cannot log a Priority 1 support incident with SAP

- It does not mean a Test Service cannot connect to a productive data source; it can

- A Test Service is not required for testing as such; a regular non-Test Service can be used for all non-Production life-cycle use cases

- Test Services are available for both Public and Private editions

- However, Public Test editions have restrictions with the Test Preview option described later

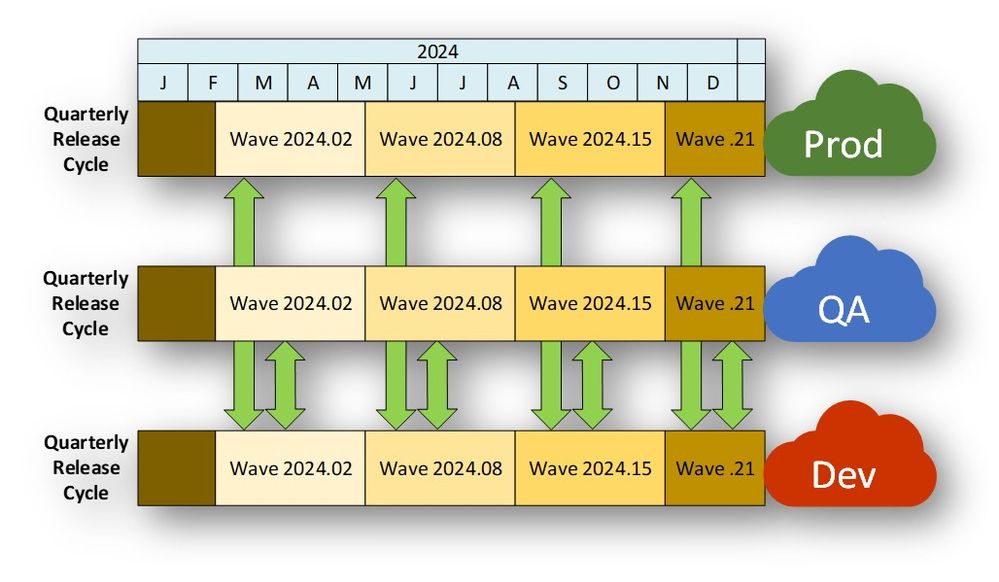

Quarterly Release Cycle (QRC)

Primary Update Release Cycle

- There is one primary update release cycle, the ‘Quarterly Release Cycle’ (QRC)

- For official dates please see KBA 2888562 and Product Updates for other related links

- If you purchased SAP Analytics Cloud, then your service will be on this update release cycle by default

- It means your SAP Analytics Cloud Service is updated:

- on a scheduled basis, and you cannot elect for it to be ‘not updated’

- once a quarter, so 4 times a year

About the wave version number

- For 2023, the Q1 QRC wave version is 2023.02, the Q2 wave version is 2023.08, etc.

- The wave version number is a combination of the year, version and patch number

- e.g. version 2023.02.21 is version 2 of the year 2023, with patch 21

- Waves and patches are always cumulative (they include all the features of everything before it)
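As a quick illustration of how the numbering reads, here is a minimal Python sketch that splits a wave version string into year, version and patch and compares two versions. `WaveVersion` is a hypothetical helper, not part of any SAP API, and it assumes the `YYYY.VV[.PP]` format described above, treating a missing patch as 0.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class WaveVersion:
    """Hypothetical helper for a wave version such as 2023.02.21.

    order=True compares fields in declaration order: year, version, patch.
    """
    year: int
    version: int
    patch: int = 0

    @classmethod
    def parse(cls, text: str) -> "WaveVersion":
        parts = text.strip().split(".")
        if len(parts) not in (2, 3) or not all(p.isdigit() for p in parts):
            raise ValueError(f"not a wave version: {text!r}")
        return cls(*(int(p) for p in parts))


if __name__ == "__main__":
    v = WaveVersion.parse("2023.02.21")
    print(v.year, v.version, v.patch)  # 2023 2 21
    # Later waves sort after earlier ones; being cumulative, they include them.
    print(WaveVersion.parse("2023.08") > v)  # True
```

Because the dataclass compares its fields in order, comparing or sorting parsed versions follows the same order in which waves and patches are released.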

Life-cycle use of QRC in overall landscape

- Life-cycle management needs the ability to transport objects between Dev, QA and Prod environments at all times

- The ability to transport content is critical to running any productive system

- There should be no ‘blackout’ period during which transporting objects is not possible

- Thus, it makes perfect sense for Dev, QA and Prod to be on the same Release Cycle

- Content can always be transported between all environments, but most typically between Dev and QA, and Dev and Production (as shown in the diagram)

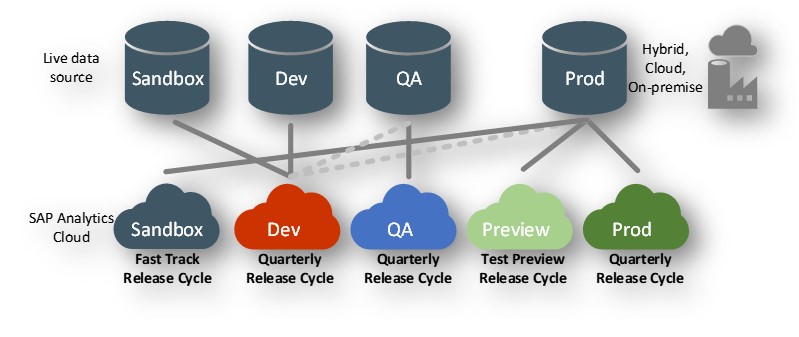

Test Preview Quarterly Update Release Cycle

Test Preview

- Only available with a Private Edition Test Service

- i.e. not available for a Public Edition Test Service

- This ‘Test Preview’ service receives the Quarterly Update Release, but ~1 month earlier

- It’s a regular Private Edition Test Service, but the update release cycle is the ‘Quarterly Preview’

- Provides a unique opportunity to validate the new version with productive data and productive content

- Also enables central IT to test new features and get a ‘heads-up’ for the upcoming version

- For more details please refer to SAP KBA 2652439 and the YouTube video

- It is expected that ‘Test Preview’ connects to a Productive data source, even though it is classed as a Test Service. This is necessary to validate the new version with existing Productive content, both data source and SAP Analytics Cloud content (models, stories etc.)

- Like a regular Test Service, a ‘Test Preview’ service cannot be used for productive purposes; unlike a regular Test Service, it cannot be used for development purposes either

Life-cycle use of ‘Test Preview’ in overall landscape

- ‘Test Preview’ introduces a new environment into the landscape, almost unique to the cloud

- It is neither development, QA, pre-prod nor production

- Preview should never be used for development purposes; its role is purely to validate new software with existing productive content

- Since it is updated ~1 month ahead, during that month you cannot transport content from it into another environment until those other environments are updated to the same version

- i.e. you can only import into it (not export from it) during the month overlap

- Typically you transport content from Production into Preview, but not exclusively

- Dev content would also be transported into it at times

- For 2 months of each quarter, it remains aligned with the Quarterly Release Cycle allowing you to easily transport content between all environments

- Remember, from a license contract point of view, Test Preview cannot be used for development or productive purposes

Fast Track Update Release Cycle

Fast Track

- Updates are made ~ every 2 weeks, so about 26 times a year

- Not provided by default and needs to be ordered specially

- (your SAP representative needs to request that a ‘DevOps’ ticket is raised prior to the order)

- Up to 8 wave versions ahead of the Quarterly Release Cycle

- As the version is considerably ahead, it’s tricky to transport content in and out of it

- Transport of content from Fast Track to others requires you to ‘hold’ the content until the target is updated to the same version

- However, the content must be from the same or the previous version, so the ‘export’ needs to be performed within a small time window, otherwise it is ‘blocked’! (1) (3)

- Transport of content into Fast Track from other Services is very limited: only a few times a year

- ‘Older’ content can be transported into Fast Track, but only if it comes from the Quarterly Release version, otherwise it is ‘blocked’! (2) (3)

(1) Technically, if content is transported via the ‘Content Network’ then all earlier versions (not just the current or previous) can be imported, but this is not supported.

(2) Technically, if content is transported via the ‘Content Network’ then non-QRC versions can be imported, but this is not supported.

(3) If content has been exported ‘manually’ (via Menu-Deployment-Export) then it is not even technically possible to import it. Additionally, this manual method is required if the source and target services are hosted in a mixture of SAP and non-SAP data centres. If the source and target are all non-SAP (or all SAP) data centres then the ‘Content Network’ can be used to transport content, even across geographic regions. The manual method is legacy and its support could be withdrawn in the future.

Life-cycle use of ‘Fast Track’ in overall landscape

- Perfect for validating and testing new features that will come to the Quarterly Release later

- Occasionally Beta features can be made available in non-test Services, allowing organisations to provide early feedback and SAP to resolve issues ahead of general availability

- Suitable for Sandbox testing only

- Explicitly not suitable for productive use or for developing any content

- Do not rely upon the ability to transport content into or out of Fast Track

- For the Fast Track Update Release cycle:

- Occasionally the update schedule changes in an ad-hoc fashion to cater for platform updates or other unplanned events

- Rarely, but not unheard of, an entire update is skipped, so the next update jumps by two versions. It’s possible that the window of opportunity for transporting content is closed for some quarters

Update Release Cycles

- All services, public and private editions, are updated at the same time when they are hosted in the same data centre

- Data centres are hosted all around the globe. Each has a schedule that will vary slightly from others

- Quarterly Release Cycle schedule dates are published in SAP Note 2888562

- Exact dates vary slightly by region and data centre host

- SAP, Amazon Web Services, Alibaba Cloud (China) and Microsoft Azure

- Some fluidity in the schedule is necessary for operational reasons so the update schedule for Fast Track is not published

- Data centres are complex and updates are occasionally delayed to ensure service levels and maintenance windows are not breached. Delays can be caused by urgent service updates to the underlying infrastructure

- It's important to ensure all your SAP Analytics Cloud Services are hosted in the same Data Centre to ensure version consistency with regard to life-cycle management

Why multiple environments

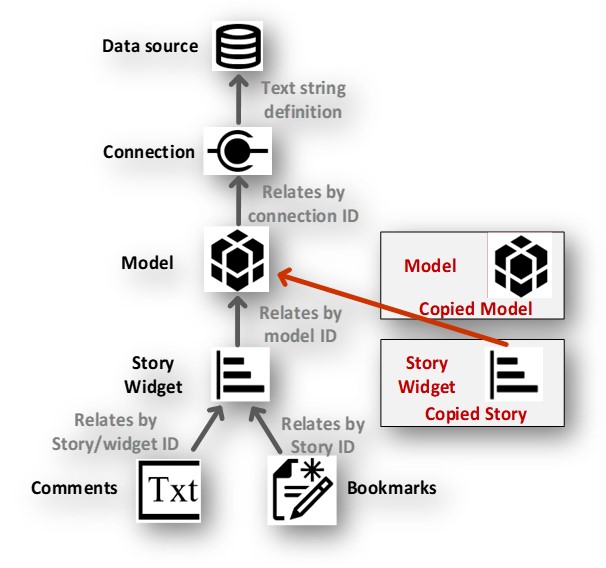

Objects relate to other objects by ID

- Relationship of objects inside SAP Analytics Cloud is performed by identifiers

- It is therefore not possible to manage the life-cycle of different objects independently of each other within the same service

- For example, taking a copy of a story means that the copy will:

- still point to the original model (red line in diagram)

- not point to a copy of any model taken

- lose dependencies on all comments or bookmarks associated with the original

- Other dependencies include SAP Digital Boardroom and discussions, but there are many more

- have a new ‘ID’ itself so end-user browser-based bookmarks will still point to the original story

- To re-point a copied story to a copied model requires considerable manual effort and is not appropriate for life-cycle management change control

- Multiple environments are thus mandatory for proper change and version control
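The effect of copying can be sketched in a few lines of Python. The `Model`, `Story` and `save_as` names below are hypothetical and only mimic the behaviour described above (a copy gets a new ID but keeps its original model reference); they are not how SAP Analytics Cloud stores objects internally.

```python
import uuid
from dataclasses import dataclass, field, replace


def new_id() -> str:
    return uuid.uuid4().hex


@dataclass
class Model:
    name: str
    id: str = field(default_factory=new_id)


@dataclass
class Story:
    name: str
    model_id: str  # the dependency is stored as the model's ID
    id: str = field(default_factory=new_id)


def save_as(story: Story, new_name: str) -> Story:
    """Copy a story: it gets a new ID, but its model reference is copied as-is."""
    return replace(story, name=new_name, id=new_id())


if __name__ == "__main__":
    model = Model("Finance")
    model_copy = Model("Finance (copy)")
    story = Story("Finance Overview", model_id=model.id)
    comments = {story.id: ["Check Q3 variance"]}  # comments keyed by story ID

    story_copy = save_as(story, "Finance Overview (copy)")
    print(story_copy.model_id == model.id)       # True: still the original model
    print(story_copy.model_id == model_copy.id)  # False: not the copied model
    print(comments.get(story_copy.id, []))       # []: comments stay with the original
```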

What life-cycle management can be achieved within a single Service

Some life-cycle aspects can be achieved within a single Service

- Although objects dependent upon models (like Stories or Applications) cannot be independently managed within one ‘system’, some life-cycle aspects can be managed within one SAP Analytics Cloud Service

- This typically applies to ‘live’ data sources

- such as Data Warehouse Cloud, HANA, BW, BPC, S/4, BI Platform etc.

- this tends to be less applicable to ‘acquired’ data models, but it is still possible

- For example, when a new version of the data source (or copied SAC model) has been created, that new version can be validated in the same SAP Analytics Cloud Service

- For ‘live’ data sources, the step of creating a new Model is simple, quick and easy

- Creating a new Story with new, often simple, widgets can help validate ‘changed’ aspects of the data source (or copied SAC model)

- It will thus help validate changes made to the next data source version

- ‘live’ data sources are typically hosted in other cloud services or on-premise systems

- It would not validate the existing SAP Analytics Cloud content within that same Service, but it does provide a level of validation and an opportunity to re-develop or adjust the model/data source design

- Thus, albeit in a limited way, for the data source at least, two environments within a single SAP Analytics Cloud service can be supported

Simplifying the landscape

- Some organisations have 4 systems in their on-premise landscape; however, mirroring this setup is typically undesirable in the Cloud, where costs are more obvious

- The option to validate data source changes within one SAP Analytics Cloud service is available

- Means 4 data source systems can be managed with 3 SAP Analytics Cloud Services

- Sandbox could be removed for this purpose - still may need it for another!

- See Fast Track Services


- Development validates new data source (and SAC model) changes by creating new story widgets that are soon destroyed

- Once data source changes have been validated, the changes can then be made to the data source that supports all the existing content dependent upon it

- This enables the development and validation of SAP Analytics Cloud content independently of the ‘next’ data source version that may be in development

Typical landscape options chosen

- Arrows indicate most common path of adoption

- First choice is typically Option 1

- Useful for initial evaluation

- Next is to add a Dev environment, Option 2

- Ideal for developing new content and starting to use the transport services available

- ‘Test Preview’ is often followed, Option 4

- Most customers find a need to validate new upcoming features with productive content

- Sandbox options are typical for customers wishing to try out features well ahead of general availability. This reduces the risk to the project by validating features ahead of time

- Update Release Cycles are as shown

- Sandbox and Preview are not on QRC

- Dev, QA and Prod are on QRC

- Non-productive environments only need a handful of users, perhaps 10 users or fewer

- Will need at least as many developers/testers, but doesn’t necessarily need to be a significant investment

- Due to license terms on Test Services, Preview has a minimum number of users: 20 for Public Editions and 50 for Private Editions

- A minimum of 25 users is required for the Scheduling Publications feature (see blog for more details and an exception for Partners)

- Typically, only the ‘Test Preview’ Service is using a ‘Test Service’, all others are non-Test Services including Sandbox, Dev, QA

- Private editions are recommended for large enterprise deployments, commonly for production

Mixing Public and Private Editions

- Public and Private Services can be mixed

- There is no restriction except:

- a Test Preview Service must be a Private Edition

- The options shown here are just examples, there are many more supported combinations and permutations

Mixing regular Productive and Test Services

- A regular (non-Test) ‘Productive’ SAP Analytics Cloud Service can be used for all environments with one exception:

- ‘Test Preview’ must be a ‘Private Test’ Service (option C)

- A Test Service can be used for all environments except ‘Prod’, otherwise any mixture is possible

- A Test Service must never contain personal data for contractual reasons

- If ‘Dev’ or ‘QA’ needs personal data, you’ll need a Production Service (option C)

- The options shown here are just examples, there are many more supported combinations and permutations
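The landscape rules stated in this article can be written down as a simple checklist. The following Python sketch is one hypothetical way to do that: the `Service` fields and the data-centre labels are assumptions, and it only checks the rules quoted here (Dev, QA and Prod on the QRC; Test Preview as a Private Edition Test Service; no Test Service for Prod; Fast Track for Sandbox only; a single data centre), not licensing minimums or personal-data terms.

```python
from dataclasses import dataclass
from enum import Enum


class Cycle(Enum):
    QRC = "Quarterly Release Cycle"
    PREVIEW = "Quarterly Preview"
    FAST_TRACK = "Fast Track"


@dataclass(frozen=True)
class Service:
    role: str           # "Sandbox", "Dev", "QA", "Prod" or "Preview"
    edition: str        # "Public" or "Private"
    test_service: bool  # True if licensed as a Test Service
    cycle: Cycle
    data_centre: str    # hypothetical label, e.g. "dc-a"


def check_landscape(services: list[Service]) -> list[str]:
    """Return violations of the rules quoted in this article (not exhaustive)."""
    problems = []
    for s in services:
        if s.role in ("Dev", "QA", "Prod") and s.cycle is not Cycle.QRC:
            problems.append(f"{s.role}: Dev, QA and Prod should all be on the QRC")
        if s.cycle is Cycle.PREVIEW and not (s.edition == "Private" and s.test_service):
            problems.append(f"{s.role}: Test Preview must be a Private Edition Test Service")
        if s.role == "Prod" and s.test_service:
            problems.append("Prod: a Test Service cannot be used for Prod")
        if s.cycle is Cycle.FAST_TRACK and s.role != "Sandbox":
            problems.append(f"{s.role}: Fast Track is suitable for Sandbox only")
    if len({s.data_centre for s in services}) > 1:
        problems.append("Services are hosted in more than one data centre")
    return problems


if __name__ == "__main__":
    landscape = [
        Service("Dev", "Public", False, Cycle.QRC, "dc-a"),
        Service("QA", "Public", False, Cycle.QRC, "dc-a"),
        Service("Prod", "Private", False, Cycle.QRC, "dc-a"),
        Service("Preview", "Public", True, Cycle.PREVIEW, "dc-a"),
    ]
    print(check_landscape(landscape))
    # ['Preview: Test Preview must be a Private Edition Test Service']
```

Keeping the rules in one function makes it easy to re-check a proposed landscape whenever an environment is added or swapped between Public, Private and Test Services.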

Typical Landscape connectivity (for live sources)

- It's very important to validate content against production size and quality data

- Production data sources are accessed by Prod, but also Preview and occasionally Sandbox

- Preview requires access to Prod data source to validate content with the upcoming wave version

- Even though Preview is a ‘Test’ service, it can still connect to Prod data

- Use a copy of Production data for QA purposes where possible

- The user who changes the connection needs access to both the current and the new data source at the same time (hence the dotted line from Dev to QA and Prod)

- Switch the connection before transporting new model versions

- Since there is no concept of ‘connection mappings’ or ‘dynamic connection switching’

- Switch the connection back after creating the transport unit

- You only need to do this once, and whilst it is presented here as changing the connection in the source, it’s often done in the target

- Please see the next section on ‘respecting the id’ and in particular best practices for managing live connections


Respect the ID and Best Practices for updating objects

Different objects, same name

Updating objects is performed by ID, not by name

- When objects are transported from one environment to another, upon updating the target, objects are matched on the ID

- If the IDs match, the object can be updated

- If there is no match by ID, the object will be new

- Can lead to multiple objects with the same name in the target as shown in this workflow

- It is impossible to create two new objects with the same ID, even across different environments

- An object, when transported from one environment to another, maintains its ID (and its Content Namespace of where it was first created)
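To show why matching by ID (rather than by name) produces duplicates, here is a minimal Python sketch of an import that behaves as described above. `Obj`, `import_unit` and the IDs `p-1` and `d-7` are hypothetical and purely illustrative; real object IDs look different.

```python
from dataclasses import dataclass


@dataclass
class Obj:
    id: str
    name: str


def import_unit(target: dict[str, Obj], unit: list[Obj]) -> tuple[list[str], list[str]]:
    """Import objects into a target Service, matching on ID only (never on name).

    Returns the names of updated objects and the names of newly created ones.
    """
    updated, created = [], []
    for obj in unit:
        # An ID match means update; no ID match means a brand-new object,
        # even if an object with the same name already exists.
        (updated if obj.id in target else created).append(obj.name)
        target[obj.id] = obj
    return updated, created


if __name__ == "__main__":
    prod = {"p-1": Obj("p-1", "Finance")}          # created directly in Prod
    dev_unit = [Obj("d-7", "Finance")]             # same name, created separately in Dev
    print(import_unit(prod, dev_unit))             # ([], ['Finance'])
    print(sorted(o.name for o in prod.values()))   # ['Finance', 'Finance']
```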

Best Practices for updating objects

- In order to update an object created in Production with a new version in Dev, it must first be transported to Dev (Step 2)

- The object can then be updated to the new version (Step 3) before being transported back to Prod (Step 4)

- Typically it should go to QA first for testing!

- Since the ‘ID’ has been respected, the object is matched by ID and is correctly updated

- There is no duplicated object name in the target

- All dependencies are also respected
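The round trip in these steps can be sketched the same way. In this hypothetical Python model each Service is just a dictionary keyed by object ID, and `transport` copies objects while keeping their IDs; it illustrates the principle only and is not an SAP API.

```python
import copy
from dataclasses import dataclass


@dataclass
class Obj:
    id: str
    name: str
    version: int = 1


def transport(source: dict[str, Obj], target: dict[str, Obj], ids: list[str]) -> None:
    """Copy the selected objects into the target Service, keeping their IDs."""
    for obj_id in ids:
        # deepcopy: each Service holds its own copy, as separate Services would
        target[obj_id] = copy.deepcopy(source[obj_id])


if __name__ == "__main__":
    prod = {"p-1": Obj("p-1", "Finance")}  # Step 1: object originally created in Prod
    dev, qa = {}, {}
    transport(prod, dev, ["p-1"])          # Step 2: Prod -> Dev
    dev["p-1"].version = 2                 # Step 3: new version built in Dev
    transport(dev, qa, ["p-1"])            # test in QA first
    transport(qa, prod, ["p-1"])           # Step 4: promote to Prod (here via QA)
    print(len(prod), prod["p-1"].version)  # 1 2
```

Because the ID never changes, the final import updates the existing Prod object instead of adding a second "Finance".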

Best Practices

- Only create objects once and then transport them to other environments

- Avoid ‘copying’ objects or ‘save as’ as this creates a new object with a new ID

- Create objects with a name that doesn’t refer to its current environment

- i.e. avoid “Test Finance”, instead use “Finance”

- Applicable for all object types: folders, stories, teams, roles, models etc

Special notes for live connections

There are no exceptions and this includes live connection objects

- Create the connection object once across the entire landscape, just like any other object (step 1)

- Transport the live connection from the source environment to all other environments, just like all other objects

- This ensures the connection id is the same and consistent across the entire landscape (step 2)

- Update the connection details, within each environment so the connection points to the relevant data source for that environment (step 3)

- Never promote the connection again (since doing so would overwrite the change you just made)

- Then everything is easy:

- As you promote models around the landscape, the models and stories will connect to the data source for the environment they are stored in
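A minimal Python sketch of this pattern, assuming hypothetical `Connection`, `Model` and `Environment` classes and made-up host names: the model only stores a connection ID, so once every environment holds a connection with that same ID (steps 1 and 2) and each copy is edited locally (step 3), the same transported model resolves to a different data source in each environment.

```python
import copy
from dataclasses import dataclass


@dataclass
class Connection:
    id: str
    name: str
    host: str  # environment-specific detail, edited locally in step 3


@dataclass
class Model:
    id: str
    connection_id: str  # the model refers to its connection by ID only


class Environment:
    def __init__(self, name: str):
        self.name = name
        self.connections: dict[str, Connection] = {}
        self.models: dict[str, Model] = {}

    def resolve_host(self, model_id: str) -> str:
        """Return the data source host this model would query in this environment."""
        model = self.models[model_id]
        return self.connections[model.connection_id].host


if __name__ == "__main__":
    dev, prod = Environment("Dev"), Environment("Prod")
    # Step 1: create the connection once, in Dev.
    dev.connections["c-1"] = Connection("c-1", "BW", "bw-dev.example.com")
    # Step 2: transport it, so Prod holds a connection with the same ID.
    prod.connections["c-1"] = copy.deepcopy(dev.connections["c-1"])
    # Step 3: repoint Prod's copy locally; never transport the connection again.
    prod.connections["c-1"].host = "bw-prod.example.com"
    # Models are transported as usual and follow the local connection.
    dev.models["m-1"] = Model("m-1", connection_id="c-1")
    prod.models["m-1"] = copy.deepcopy(dev.models["m-1"])
    print(dev.resolve_host("m-1"), prod.resolve_host("m-1"))
    # bw-dev.example.com bw-prod.example.com
```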


- Custom Groups created in stories are also stored inside stories; however, the Custom Group definitions are associated with the connection id, not the story!

- This means, if Custom Groups are developed in Dev and transported to Prod, those Custom Groups will only work in Prod if the connection id is the same as Dev

- Previously you didn’t have to follow this best practice for managing connections, since it was easy enough to just change the connection a model uses; now that we have Custom Groups, it becomes necessary
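The Custom Group behaviour can be sketched as a lookup keyed by connection ID. The `Story` class and `active_custom_groups` function below are hypothetical and simply restate the rule above in Python; they are not the product's internal data model.

```python
from dataclasses import dataclass, field


@dataclass
class Story:
    name: str
    # Custom Groups travel inside the story, but are keyed by connection ID.
    custom_groups: dict[str, list[str]] = field(default_factory=dict)


def active_custom_groups(story: Story, connection_id: str) -> list[str]:
    """Custom Groups that apply when the story's model uses connection_id."""
    return story.custom_groups.get(connection_id, [])


if __name__ == "__main__":
    # Built in Dev, where the model uses connection "c-1".
    story = Story("Sales", custom_groups={"c-1": ["EMEA", "APJ"]})
    # Prod uses the transported connection (same ID): the groups work.
    print(active_custom_groups(story, "c-1"))  # ['EMEA', 'APJ']
    # Prod uses a separately created connection (new ID): the groups are not found.
    print(active_custom_groups(story, "c-9"))  # []
```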

Content Namespaces and Best Practices

Changing the default

- Each SAP Analytics Cloud Service has a Content Namespace

- Its default format is simple [Character].[Character]

- Default examples include: t.2, t.0, t.O

- You can change the Content Namespace to almost anything you like

- e.g. ‘MyDevelopment’

- but it's best to keep it to 3 characters to reduce payload size of metadata objects

- When any content is created, it keeps the Content Namespace that Service had at the time of its creation

- Like an object’s ID, you cannot change the Content Namespace of an object

- This also means, that when content is transported, it keeps that Content Namespace with it

- As shown above, the model and story, when transported into QA, maintain the Content Namespace of Dev

- It's likely any one Service will contain content with different Content Namespaces

- For example, Samples or Business Content imported from SAP or Partners will have different Content Namespaces
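A small Python sketch of this behaviour, under stated assumptions: the `Content` and `Service` classes and the generated IDs are hypothetical, `t.2` and `t.0` are the default-style values quoted above, and `t.9` is a made-up later value. The namespace is stamped at creation, travels with the object on transport, and does not change when the Service's own namespace changes later.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Content:
    id: str
    name: str
    namespace: str  # fixed at creation time, like the ID


class Service:
    _ids = itertools.count(1)  # hypothetical ID generator shared by all Services

    def __init__(self, name: str, namespace: str):
        self.name = name
        self.namespace = namespace
        self.content: dict[str, Content] = {}

    def create(self, name: str) -> Content:
        obj = Content(f"obj-{next(Service._ids)}", name, self.namespace)
        self.content[obj.id] = obj
        return obj

    def import_content(self, obj: Content) -> None:
        # Transport keeps both the ID and the original namespace.
        self.content[obj.id] = obj


if __name__ == "__main__":
    dev, qa = Service("Dev", "t.2"), Service("QA", "t.0")
    model = dev.create("Finance")
    qa.import_content(model)   # transported: keeps Dev's namespace
    qa.create("QA checklist")  # created in QA: gets QA's namespace
    print(sorted(c.namespace for c in qa.content.values()))  # ['t.0', 't.2']
    dev.namespace = "t.9"      # changing the Service's namespace later...
    print(model.namespace, dev.create("Overview").namespace)  # t.2 t.9
```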

Pros and cons of consistent values

- Benefit of different Content Namespaces across environments:

- You can identify in which environment the object was originally created

- Though the namespace is hidden from end-users, you can see the namespace in logs and browser consoles


- Benefits of the same and consistent Content Namespace across all environments:

- Easier coding of Service APIs (REST, URL, SCIM)

- These APIs often refer to or include the Content Namespace, so a consistent value means slightly easier coding

- Reduces project risk, typically when using APIs

- There are occasional issues when multiple Content Namespaces are used; whilst SAP will fix these, it might be better to avoid any surprises by using a consistent value


- Known issues when changing Content Namespaces

- Teams with Custom Identity Provider

- Once changed, new objects will have new Content Namespaces and this includes ‘Teams’

- This also means the SCIM API used by Custom Identity Providers, if utilising the ‘Team’ attribute mapping, could fail when Teams are using a mixture of Content Namespace values (KBA 2901506). A workaround is possible, but it is better to avoid the situation until a full solution is available


Best Practices

- Do set the Content Namespace to be the same and consistent value across all environments

- Change the Content Namespace as one of the first things you do when setting up your new Service

- Do this before setting up any custom Identity Provider

- Do this before creating any Teams

- Set the value with a small number of characters and keep the format the same (e.g. T.0)

- Lengthy values (e.g. MyWonderfulDevelopmentSystem) cause extra payload in communications and if the number of objects referred to in a call is large, this could have a performance impact

- No need for a lengthy name, just keep it short and simple!

- Do NOT change the Content Namespace if you already have a working productive landscape

- Especially if you are using a Custom Identity Provider and mapping user attributes to Teams

- “Don’t fix something that ain’t broke”
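To give a feel for the payload point about lengthy values, here is a back-of-the-envelope calculation in Python. It rests on a loudly hypothetical assumption: that each object reference in a call carries one uncompressed UTF-8 copy of the Content Namespace. The real wire format is not described in this article, and the 10,000 references are an arbitrary example.

```python
def extra_namespace_bytes(namespace: str, baseline: str, references: int) -> int:
    """Extra bytes versus a short baseline namespace.

    Hypothetical assumption: each object reference carries one uncompressed
    UTF-8 copy of the namespace.
    """
    per_reference = len(namespace.encode("utf-8")) - len(baseline.encode("utf-8"))
    return per_reference * references


if __name__ == "__main__":
    # 28 characters versus 3 characters, over 10,000 references in one call
    print(extra_namespace_bytes("MyWonderfulDevelopmentSystem", "T.0", 10_000))  # 250000
```

Even under this simplified assumption the overhead grows linearly with the number of objects referenced, which is why a short value such as T.0 is the safer default.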

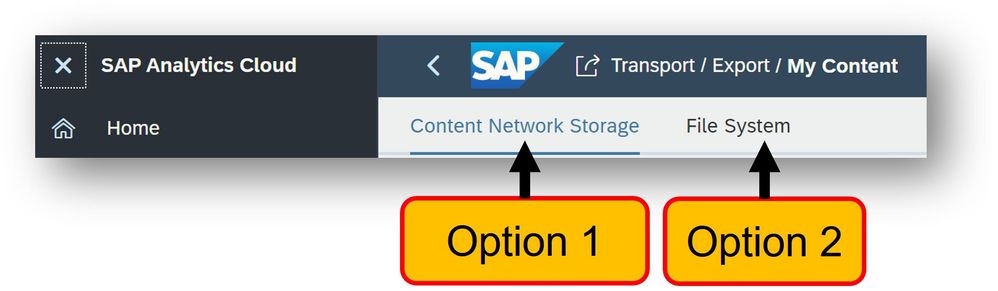

Transporting Objects, Best Practice and Recommendations

There are 2 options for transporting content:

Option 1: Content Network (recommended)

- ‘Unit’ files are hosted in the Cloud

- Can be organised into folders, including folder security options

- Processing occurs in the background

- No need for any manual download/upload

- though this is possible and still needed if transporting content across different data centres

- Unlike the (legacy) manual deployment option, the Content Network:

- Supports a greater number of object types

- Will only show Units that can be imported into the Service

- Units created by newer versions of the Service will not be shown (i.e. Units created on Fast Track Release Cycle, but the current Service has yet to be updated to that version)

- Older units that are not supported can still be imported, but a warning message is shown

- Units are fully supported when their version is the same as the Service’s, the previous version, or the previous Quarterly Release
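The listing rules above can be expressed as a small classification function. This Python sketch is hypothetical and makes several assumptions the article does not spell out: patch numbers are ignored, "the previous version" means the wave immediately before the Service's wave in a caller-supplied wave list, "the previous Quarterly Release" means the latest QRC wave before the Service's wave, and only the Content Network is modelled (manually exported units behave differently, as noted in the Fast Track section).

```python
def parse_wave(text: str) -> tuple[int, int]:
    """Return (year, wave) from 'YYYY.WW' or 'YYYY.WW.PP'; the patch is ignored."""
    parts = text.split(".")
    if len(parts) not in (2, 3):
        raise ValueError(f"not a wave version: {text!r}")
    return int(parts[0]), int(parts[1])


def classify_unit(unit_version: str, service_version: str,
                  wave_sequence: list[str], qrc_waves: list[str]) -> str:
    """Classify a Content Network unit for a target Service (sketch only).

    'hidden'    - created by a newer version, so not shown
    'supported' - same wave, the previous wave, or the previous QRC wave
    'warning'   - older and unsupported, importable with a warning
    """
    unit = parse_wave(unit_version)
    target = parse_wave(service_version)
    if unit > target:
        return "hidden"
    if unit == target:
        return "supported"
    earlier = sorted(w for w in map(parse_wave, wave_sequence) if w < target)
    if earlier and unit == earlier[-1]:
        return "supported"
    earlier_qrc = sorted(w for w in map(parse_wave, qrc_waves) if w < target)
    if earlier_qrc and unit == earlier_qrc[-1]:
        return "supported"
    return "warning"


if __name__ == "__main__":
    waves = [f"2023.{n:02d}" for n in range(1, 27)]  # hypothetical: ~26 waves a year
    qrc = ["2023.02", "2023.08"]                     # QRC waves quoted in this article
    for unit in ["2023.08.3", "2023.07", "2023.02", "2023.05", "2023.09"]:
        print(unit, classify_unit(unit, "2023.08", waves, qrc))
    # 2023.08.3 supported
    # 2023.07 supported
    # 2023.02 supported
    # 2023.05 warning
    # 2023.09 hidden
```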

Option 2: (legacy) ‘Deployment-Export/Import’

- Requires manual management of ‘Unit’ files: downloading from source and uploading to the target

- Has limitations on file size

- This option will be deprecated in the near future

Best Practice Summary

Key takeaways

- Use multiple Services to life-cycle manage content

- Do keep Dev, QA and Prod on the same update release cycle

- Always validate content against production quantity and quality of data

- Use the Test Preview to validate new wave versions with existing Production content

- Use Fast Track to reduce risk to the project especially when success is dependent upon upcoming features

- Respect the ID of objects and create content once, then transport it to other environments

- Change the Content Namespace only at initial setup and set all Services to the same value

- Use the Content Network to transport objects between Services

Frequently Asked Questions

Question: What is the best practice to manage the life-cycle of content with just one SAP Analytics Cloud Service?

- Answer: SAP Analytics Cloud has been designed so that content life-cycle management requires multiple SAP Analytics Cloud services. It means a single SAP Analytics Cloud Service cannot manage the life-cycle of content on its own

- The service provides various tools by which content can be transported from one SAP Analytics Cloud Service to another and these tools are constantly being developed and improved

- It is explicitly recommended not to attempt to manage the content life-cycle (of SAP Analytics Cloud content) within a single SAP Analytics Cloud Service

Question: Can I use Test Preview for ‘Dev’ and a regular productive service for ‘Prod’?

- Answer: No

- A Test Preview agreement states you cannot use a Test Preview for development or productive purposes

- Additionally, the schedule of the wave updates is not suited to support the life-cycle of content during the time the two release cycles are not aligned. It's critical, for good life-cycle management, to have the ability to transport content between Dev and Prod the whole time and not to have any black-out periods that could cause operational issues

Question: Is there a private cloud service available for SAP Analytics Cloud?

- Answer: No

- Both the ‘Public’ and ‘Private’ Editions of SAP Analytics Cloud are public cloud services

- The term ‘Private’ is used to distinguish when the SAP HANA database is dedicated or not. A ‘Private’ edition is actually a public cloud service, just with a dedicated SAP HANA database, also hosted on a public cloud service

Your feedback is most welcome. Before posting a question, please read the article carefully and take note of any replies I’ve made to others. Please hit the ‘like’ button on this article or comments to indicate its usefulness. Many thanks

Matthew Shaw @MattShaw_on_BI
