- Technology Blogs by SAP

- Handling Large Data with Content Enricher and ODat...

11-08-2019

2:36 PM

In this blog post, you will learn how to better model a Content Enricher scenario in SAP Cloud Integration. Almost every full-scale integration has one or more Enrich steps. Very often, the adapter connected to the Content Enricher pulls in all the available data from the backend system, which can cause crashes and performance degradation. The efficiency of the integration therefore depends greatly on how carefully the Enricher step is modeled, especially when large data is involved.

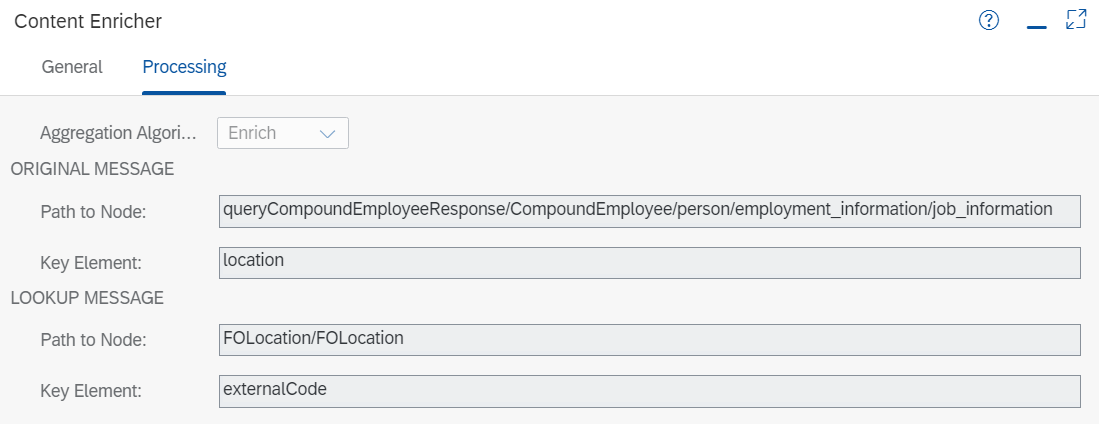

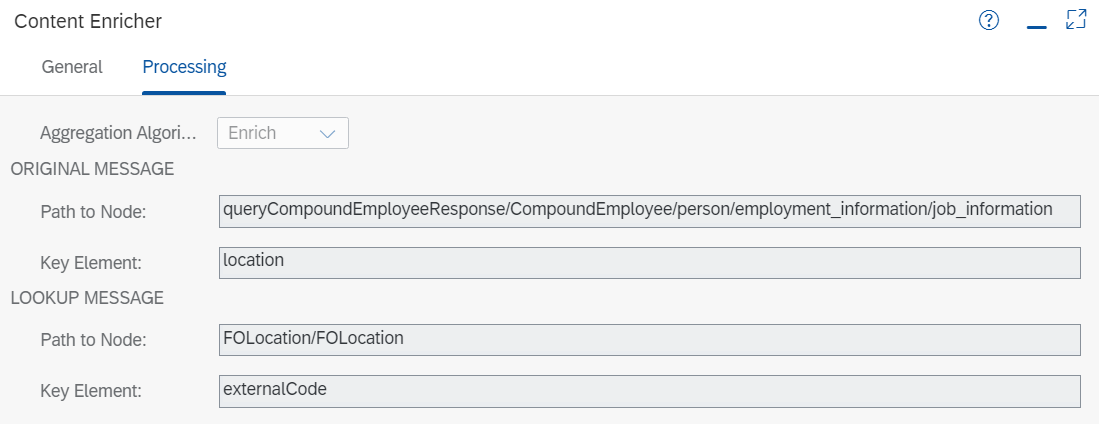

In this blog post, we will model a simple integration flow that handles large data by using dynamic queries, built from a property and the OData v2 $filter query option. In the example here, we will enrich the CompoundEmployee entity with FOLocation by creating a dynamic filter in the SuccessFactors OData v2 adapter.

The Scenario

I have configured a looping process (see the images above) to fetch and enrich Compound Employee records in pages. The script step "Parse keys to Properties" contains the logic for creating the dynamic filter.

Content Enricher configuration for this scenario is shown below.

Understanding Content Enrich Functionality

Content Enrich works by matching the key elements from the Original Message (i.e. Compound Employee) to the key elements in the Lookup Message (i.e. FOLocation) and then aggregating the matching data from the Lookup Message into the Original Message.

We can reduce the data fetched into the Lookup Message by passing the appropriate key elements as a $filter parameter to the adapter. In this example, we compare the key element location from Compound Employee with the key element externalCode from FOLocation, so we will define and configure a dynamic filter on externalCode.
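The matching described above can be pictured with a small in-memory sketch. This is only an illustration of the idea, not how the Content Enricher is implemented: the record fields other than location and externalCode, and all of the sample values, are hypothetical.

```groovy
// Hypothetical stand-ins for the two messages (not real SuccessFactors payloads).
def employees = [
    [personId: '1001', location: '1710-2018'],
    [personId: '1002', location: '1710-2019'],
    [personId: '1003', location: '1710-2018']
]
def locations = [
    [externalCode: '1710-2018', name: 'Location A'],
    [externalCode: '1710-2019', name: 'Location B'],
    [externalCode: '9999-0001', name: 'Never referenced']
]

// Index the lookup records by their key element.
def byCode = locations.collectEntries { [(it.externalCode): it] }

// "Enrich": attach the matching lookup record to each original record.
def enriched = employees.collect { emp ->
    emp + [FOLocation: byCode[emp.location]]
}

// Only these lookup keys are actually needed; this is what the $filter should request.
def neededKeys = employees*.location.unique()
```

In this sketch the third lookup record is fetched but never used, which is exactly the waste a filter on externalCode avoids.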

Configuring Content Enrich to work with Large Data Volumes

Step 1: Defining the script

The main step. Below is the script code for "Parse keys to Properties", which constructs the dynamic filter. The first part of the script parses the Original Message and selects all the key elements using XmlSlurper. The second part formats the keys as an OData $filter expression and stores it in a property named leadKey.

Note that the script always sets the property, even when no keys are found. Otherwise, the missing property would cause a runtime failure.

PS: The "in" operator is specific to SuccessFactors. For standard OData services (such as Hybris Marketing, XSOData, etc.), you will need to use the "or" operator instead.
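For services without the "in" operator, the same key list can be turned into an "or" chain. The helper below is a minimal sketch: the function name buildOrFilter is made up for illustration, and it assumes string keys compared with eq. In an integration flow, its result would be stored in the leadKey property in place of the "in" expression.

```groovy
// Hypothetical helper: builds a standard OData v2 filter such as
//   $filter=externalCode eq 'A' or externalCode eq 'B'
String buildOrFilter(String field, List<String> keys) {
    // Drop null/blank keys and duplicates before building the expression.
    def clean = keys.findAll { it?.trim() }.collect { it.trim() }.unique()
    if (clean.isEmpty()) {
        return ''   // empty query option, same as the empty-property case
    }
    def terms = clean.collect { key ->
        // Single quotes inside an OData string literal are escaped by doubling them.
        def escaped = key.replace("'", "''")
        field + " eq '" + escaped + "'"
    }
    return '$filter=' + terms.join(' or ')
}
```

An "or" chain grows faster than an "in" list because the field name is repeated for every key, so query length limits are reached sooner.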

```groovy
import com.sap.gateway.ip.core.customdev.util.Message

def Message processData(Message message) {
    // Read the Original Message (Compound Employee) as a stream
    def body = message.getBody(java.io.Reader)

    // Parse the keys from the Original Message: every <location> element, at any depth
    def response = new XmlSlurper().parse(body)
    def keys = response.'**'.findAll { node -> node.name() == 'location' }*.text()

    // Ignore blank values and duplicates
    keys = keys.findAll { it?.trim() }.unique()

    if (keys.isEmpty()) {
        // Always set the property so that the adapter never sees a missing property
        message.setProperty("leadKey", "")
    } else {
        // Formulate the filter query. Eg: $filter=externalCode in '1710-2018','1710-2019'
        def leadKey = "'" + keys.join("','") + "'"
        message.setProperty("leadKey", "\$filter=externalCode in " + leadKey)
    }
    return message
}
```

Step 2: Configure the SuccessFactors OData v2 adapter

The simplest step. Add the property leadKey to the query options of the adapter, for example as ${property.leadKey}. And your scenario is good to go!

Advantages of the approach

- Far fewer calls to the backend systems.

- Less data, and only relevant data, is pulled in each enrich call.

- Overall improvement in throughput and execution times.

Points to consider

- Only elements defined as filterable can be used in a $filter query. A good design approach is to select only filterable fields as Lookup key elements. If the required key element is not filterable, consider using another filterable element that is related to it to form the query. For example, externalCode is marked as filterable in the metadata:

  ```xml
  <Property Name="externalCode" Type="Edm.String" Nullable="false"
            sap:filterable="true" sap:required="true" sap:creatable="false"
            sap:updatable="false" sap:upsertable="true" sap:visible="true"
            sap:sortable="true" MaxLength="32" sap:label="Code"/>
  ```

- The API can also impose limits. For instance, the 'in' operator in SuccessFactors supports only 1000 values in a call. You may need a looping process to handle these cases.

- Adding dynamic queries increases your overall query length and can lead to issues. The server usually has a limit on the allowed length of a query. This limit is typically a power of 2, such as 4096 (2^12), or 8192 (2^13) with SuccessFactors.

- The Process in Pages option does not work with the Content Enricher.

- Leaving the page size empty would be the best for most endpoints. This would ensure all the records are fetched optimally based on server paging configuration.
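The value-count and query-length limits mentioned above can both be handled by splitting the keys into batches before building the filters. The following is a rough sketch under stated assumptions: the function name buildInFilters is hypothetical, the default limits are simply the figures quoted above, and the length check covers only the unencoded filter string rather than the full request URL, so a real flow should leave some headroom.

```groovy
// Hypothetical helper: splits keys into several "in" filters so that each one stays
// within a maximum number of values and a maximum (unencoded) filter length.
List<String> buildInFilters(List<String> keys, int maxValues = 1000, int maxLength = 4096) {
    def prefix = '$filter=externalCode in '
    def quote = { List<String> batch -> batch.collect { "'" + it + "'" }.join(',') }
    def filters = []
    def batch = []
    keys.findAll { it?.trim() }.unique().each { key ->
        def candidate = prefix + quote(batch + [key])
        // Close the current batch when adding this key would break either limit.
        if (batch && (batch.size() >= maxValues || candidate.length() > maxLength)) {
            filters << prefix + quote(batch)
            batch = [key]
        } else {
            batch << key
        }
    }
    if (batch) {
        filters << prefix + quote(batch)
    }
    return filters
}
```

Each returned filter would then drive one iteration of the looping process, with leadKey set to the current entry before the enrich call.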

The above steps might look a bit overwhelming but are relatively easy to perform. The script I shared should suit your use case with minimal changes. In general, dynamic parameters can greatly improve the performance of your integration scenarios, and most adapters support dynamic injection of parameters. Do consider them in all your integration flow designs.
