
08-16-2019

6:51 PM

Last Changed: 23rd of May 2020

Blog: prepare the Installation Host for the SLC Bridge

Blog: Maintenance Planner and the SLC Bridge for Data Hub

Blog: SAP DataHub 2.7 Installation with SLC Bridge

Blog: Secure the Ingress for DH 2.7 or DI 3.0

Blog: Data Intelligence Hub - connecting the Dots …

SAP DataHub 2.7.x Installation/Upgrade with the SLC Bridge

One of the biggest steps towards the Intelligent Enterprise is the implementation of SAP Data Hub, using the latest version from 2.6.1 and above, e.g. 2.7.x

SAP Data Hub 2.7 is also the foundation for the cloud service "SAP Data Intelligence" (see: What is SAP Data Intelligence), currently offered on AWS, including ML capabilities.

SAP Data Hub enables automated and reliable data processing across the company's entire data landscape.

In the meantime (12th of August 2019) there are already some updated resources:

- SLPLUGIN00_25-70003322.SAR

- MP_Stack_1000756382_20190812_SDH_12082019.xml

- DHFOUNDATION06_1-80004015.ZIP

Upgrade to Version 2.7.0 (18th of October 2019)

Note 2838714 - SAP Data Hub 2.7 Release Note

- SLPLUGIN00_27-70003322.SAR

- MP_Stack_2000763611_20190925_CNT_upgrade27.xml

- DHFOUNDATION07_0-80004015.ZIP (2.7.147)

Upgrade to Version 2.7.1 (15th of November 2019)

- DOCKER00_30-70003322.EXE

- SLPLUGIN00_31-70003322.SAR

- MP_Stack_2000796777_271_15112019.xml

- DHFOUNDATION07_1-80004015.ZIP (2.7.151)

Upgrade to Version 2.7.2 (28th of November 2019)

- SLPLUGIN00_31-70003322.SAR

- MP_Stack_2000810166_20191128_CNT_272_28112019.xml

- DHFOUNDATION07_2-80004015.ZIP (2.7.152)

Upgrade to Version 2.7.3 (13th of December 2019)

- SLPLUGIN00_31-70003322.SAR

- MP_Stack_2000810166_20191128_CNT_273_02122019.xml

- DHFOUNDATION07_3-80004015.ZIP (2.7.155)

Upgrade to Version 2.7.4 (20th of February 2020)

- SLPLUGIN00_39-70003322.SAR

- MP_Stack_2000810166_20191128_CNT_274_02122019.xml

- DHFOUNDATION07_4-80004015.ZIP (2.7.159)


No further updates are available for SAP Data Hub 2.7.

For new functionality, please update to the latest SAP Data Intelligence 3.0 version - SAP Data Intelligence 3.0 – implement with slcb tool

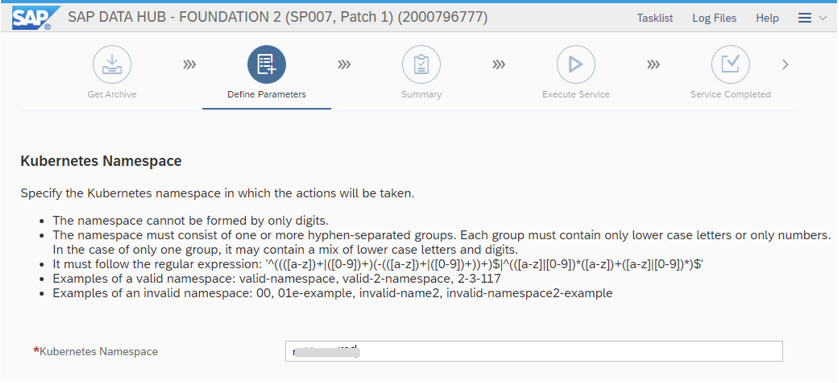

Side note: the SLC Bridge screens do not differ between versions 2.6.x and 2.7.x

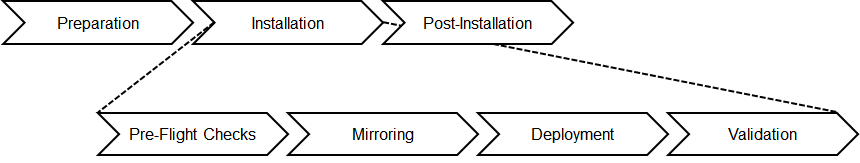

To see what is going on during the SAP Data Hub Installation,

see the @thorsten.schneider Blog - Installing SAP Data Hub

Your SAP on Azure – Part 13 – Install SAP Data Hub on Azure Kubernetes Service


starting the Software Lifecycle Container Bridge (SLC Bridge)

Here, customers, partners, and external users log on with their S-User and password; SAP employees use their User-ID and password.

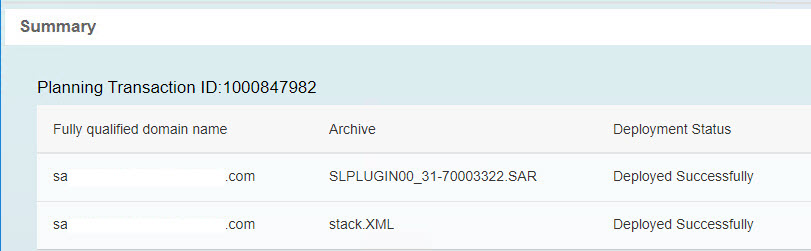

Depending on the result of your MP execution plan, the current SLPLUGIN version is uploaded. The stack.xml was already uploaded before ...

If this is not the first upload of the DHFOUNDATION zip file, you can choose to use the existing one.

See the Information about the Target Software Level

As already described in the Blog - prepare the Installation Host for the SLC Bridge various follow up problems will occur, if the Prerequisites Check does not pass successfully ...

In Addition check that your SPN secret is still valid to prevent follow up errors like:

- check checkpoint store fails

- DNS problems within Azure Environment

- timeout while creating pods in $NAMESPACE
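A minimal sketch for checking the SPN secret before you start, assuming you already know its expiry date (for example from the Azure portal). The helper name `spn_secret_expired` is hypothetical and not part of any SAP or Azure tool; it only compares two points in time and needs GNU `date`.

```shell
#!/bin/sh
# Sketch: decide whether a service principal (SPN) secret has expired.
# spn_secret_expired is a hypothetical helper, not part of az or kubectl.
#   $1: expiry timestamp in ISO 8601, e.g. 2020-08-16T00:00:00Z
#   $2: optional "now" in epoch seconds (defaults to the current time)
# Returns 0 if the secret is expired, 1 if it is still valid,
# 2 if the timestamp could not be parsed. Requires GNU date (-d).
spn_secret_expired() {
    expiry_epoch=$(date -u -d "$1" +%s) || return 2
    now_epoch=${2:-$(date -u +%s)}
    [ "$now_epoch" -ge "$expiry_epoch" ]
}
```

Usage: `spn_secret_expired "2020-08-16T00:00:00Z" && echo "renew the SPN secret first"`. How you read the expiry date from Azure depends on your CLI version, so that part is left out here.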

az aks get-upgrades --name <AKS cluster> --resource-group <resource group> --output table

Name ResourceGroup MasterVersion NodePoolVersion Upgrades

------- --------------- --------------- ----------------- --------------------------------

default <resource group> 1.14.8 1.14.8 1.15.4(preview), 1.15.5(preview)

If errors occur in the pre-flight check phase SLP_HELM_CHECK (independent of the IaaS distributor), check the Blog: prepare the Installation Host for the SLC Bridge

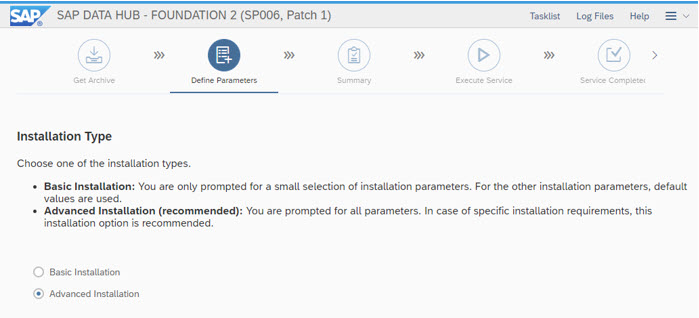

Choosing the Type "Advanced Installation" allows you to examine additional Details

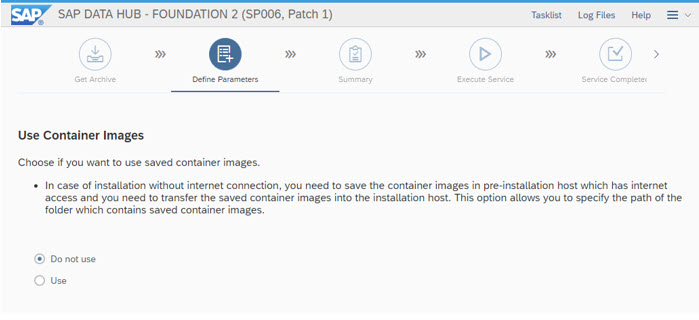

If you have no Internet Connection, either from IaaS or from on premise location, you can download the Container Images in advance. With an Internet Connection this is not necessary ...

The SLC Bridge based installation offers several options, as shown below:

The "Technical User" mentioned here is a dedicated user that is created based on your S-User or User-ID and has the format: <InstNr.>.<S-User>

The Container Registry is created in the Kubernetes Cluster (e.g. AKS)

A Certificate Domain is needed, e.g. *.westeurope.cloudapp.azure.com

In case (recommended from my side) you want to use an "image pull secret" take the SPN credentials here ...

kubectl create secret docker-registry docker-secret \
--docker-server=<registry service>.azurecr.io \
--docker-username=<SPN> \
--docker-password=<SPN-Secret> \
--docker-email=roland.kramer@sap.com -n $NAMESPACE

kubectl get secret docker-secret --output=yaml -n $NAMESPACE

In case you have to update the AKS cluster with new Service Principal credentials, use the az aks update-credentials command.
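To double-check the image pull secret created above, you can decode its content and look for the registry host. This is only a sketch: `dockerconfig_has_registry` is a hypothetical helper name, and it assumes GNU `base64` and `grep`.

```shell
#!/bin/sh
# Sketch: check that a docker-registry secret mentions the expected registry.
# dockerconfig_has_registry is a hypothetical helper name.
#   stdin: base64-encoded .dockerconfigjson, as stored in the secret
#   $1:    registry host, e.g. <registry service>.azurecr.io
# Returns 0 if the registry host appears as a quoted key, non-zero otherwise.
dockerconfig_has_registry() {
    base64 -d | grep -F -q "\"$1\""
}
```

Usage: `kubectl get secret docker-secret -n "$NAMESPACE" -o jsonpath='{.data.\.dockerconfigjson}' | dockerconfig_has_registry <registry service>.azurecr.io`. The check says nothing about whether the SPN credentials inside the secret are still valid.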

In case of an error after the phase "Image pull secret":

server:~ # Error: configmaps is forbidden: User "system:serviceaccount:<namespace>:default" cannot list resource "configmaps" in API group "" in the namespace "<namespace>"
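Whether the service account really lacks the permission can be confirmed with `kubectl auth can-i`. A small sketch; the function names are hypothetical, and the namespace is whatever you installed into.

```shell
#!/bin/sh
# sa_subject: build the user name Kubernetes uses for a service account.
#   $1: namespace, $2: service account name
sa_subject() {
    printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# can_list_configmaps: ask the API server whether the service account may
# list configmaps in its own namespace (kubectl prints "yes" or "no").
#   $1: namespace, $2: service account name (defaults to "default")
can_list_configmaps() {
    kubectl auth can-i list configmaps \
        --as="$(sa_subject "$1" "${2:-default}")" -n "$1"
}
```

Usage: `can_list_configmaps "$NAMESPACE"`. A "no" matches the error message shown above.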

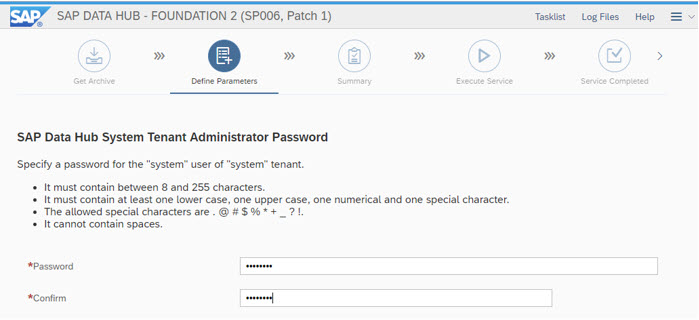

server:~ # kubectl create clusterrolebinding default-cluster-rule --clusterrole=cluster-admin --serviceaccount=<namespace>:default -n $NAMESPACE

SAP Data Hub System Tenant Administrator Password is needed:

To ease the initial Installation, the users in the tenants "default" and "system" can have the same password for now ...

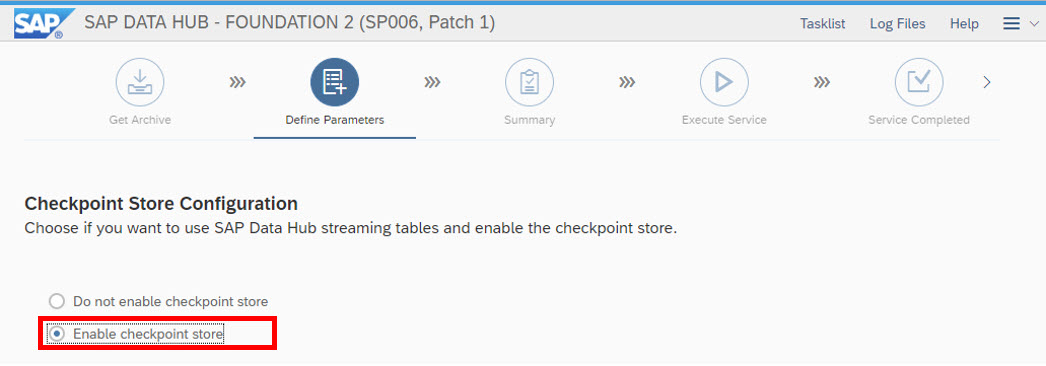

If "enable checkpoint store" fails, it is mostly due to an outdated SPN secret (see above ...)

These are the different options, if you choose "enable checkpoint store" (Example Azure)

Configuring default storage classes for certain types is suitable for SAP Data Hub installations

server:~ # kubectl patch storageclass managed-premium -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

server:~ # kubectl -n $NAMESPACE get storageclass ${STORAGE_CLASS} -o wide

NAME PROVISIONER AGE

default (default) kubernetes.io/azure-disk 5d14h

managed-premium (default) kubernetes.io/azure-disk 5d14h

vrep-<namespace> sap.com/vrep 4m34s

vrep-runtime-<namespace> sap.com/vrep 6d23h
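In the listing above, two storage classes carry the "(default)" marker at the same time. The Kubernetes documentation recommends having only one default class, so it can be worth listing them explicitly. A sketch with a hypothetical helper name, reading the plain `kubectl get storageclass` output:

```shell
#!/bin/sh
# Sketch: print the names of all storage classes marked as default.
# default_storage_classes is a hypothetical helper name.
#   stdin: output of "kubectl get storageclass"
default_storage_classes() {
    awk '$2 == "(default)" { print $1 }'
}
```

Usage: `kubectl get storageclass | default_storage_classes`. If more than one name is printed, the marker can be removed from the unwanted class with the same patch as above, using "false" instead of "true" for the annotation value.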

- Default Storage Class

- System Management Storage Class

- Dlog Storage Class

- Disk Storage Class

- Consul Storage Class

- SAP HANA Storage Class

- SAP Data Hub Diagnostics Storage Class

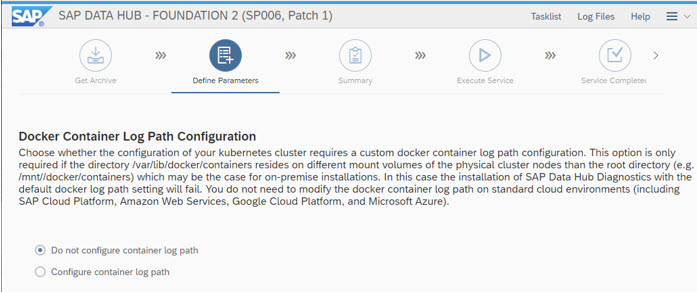

the Docker Container Log Path is provided by the IaaS Distributor (do not change ...)

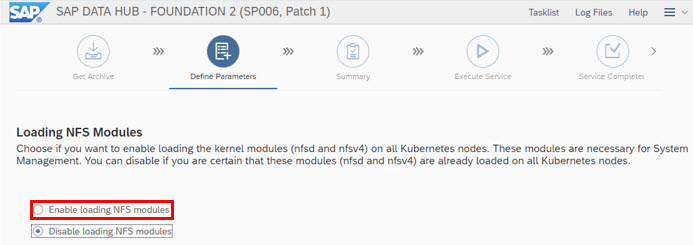

It is advisable to "Enable loading NFS modules" here to avoid follow-up errors

Note 2776522 - SAP Data Hub 2: Specific Configurations for Installation on SUSE CaaS Platform

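As of SAP Data Hub 2.5, the required Network File System (NFS) version is 4.2 by default. The note above covers platforms where NFS v4.2 is not available; for Azure and the other hyperscalers this is not the case and you can use NFS v4.2. Where needed, the NFS minor version can be lowered with the following parameter: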

(vora-vsystem.vRep.nfsv4MinorVersion=1)

-e vora-vsystem.vRep.nfsv4MinorVersion=1

-e vora-dqp.components.disk.replicas=4

-e vora-dqp.components.dlog.storageSize=200Gi

Parameters used in this section start with vora-<service>. A wrong notation leads to an installation error, and you have to start from scratch again, as the SLC Bridge is not a SWPM.
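Since a typo here costs a complete rerun, a quick syntax check before pasting the parameters can help. The sketch below is purely syntactic: it assumes the form `vora-<service>.<key>=<value>` with a lowercase service name, and it cannot tell whether a parameter actually exists. `check_param` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: syntactic check for an additional installation parameter.
# check_param is a hypothetical helper; the pattern is an assumption
# derived from the examples above, not an official SAP rule.
#   $1: parameter without the leading "-e", e.g. vora-dqp.components.disk.replicas=4
# Returns 0 if the notation looks plausible, non-zero otherwise.
check_param() {
    printf '%s\n' "$1" |
        grep -Eq '^vora-[a-z0-9]+(-[a-z0-9]+)*\.[A-Za-z0-9_.-]+=[^[:space:]]+$'
}
```

Usage: `check_param "vora-vsystem.vRep.nfsv4MinorVersion=1" || echo "check the notation"`.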

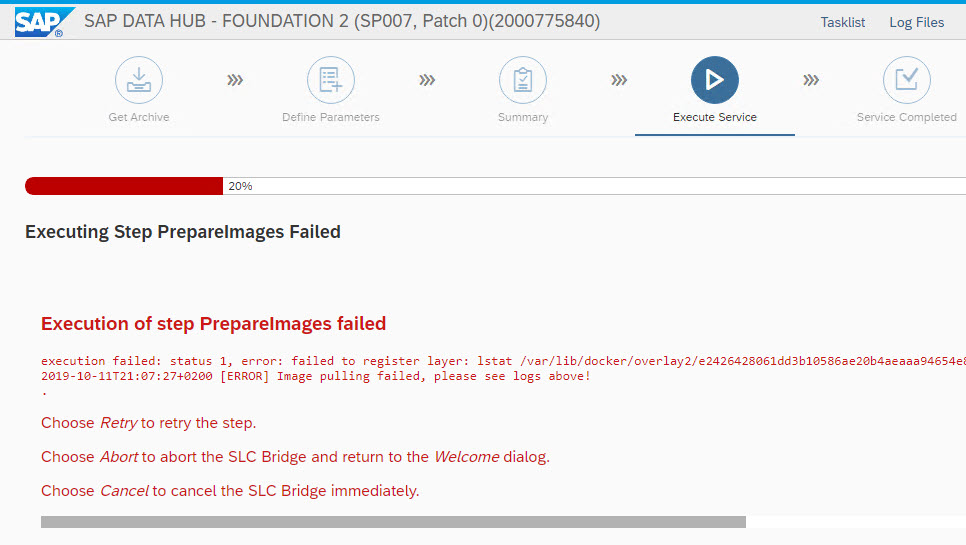

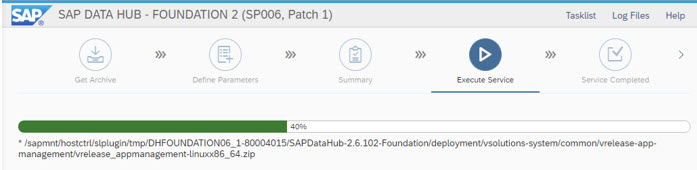

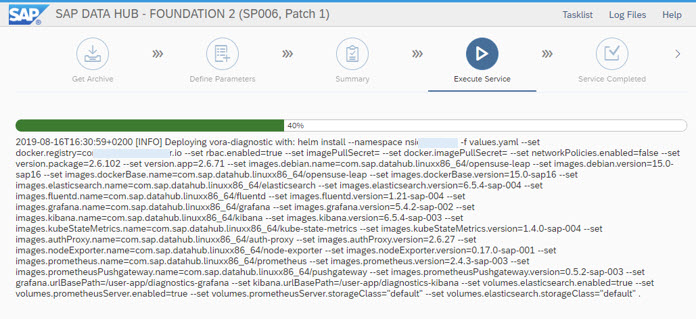

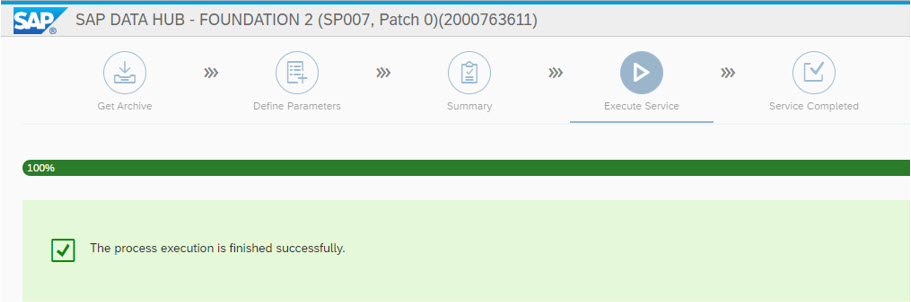

executing the Installation Service

Now the Installation starts with the execution of the Installation Service

Cleaning out old Docker images by deleting the directories below /var/lib/docker/overlay2 leads to inconsistencies.

Instead, run the following procedure:

server:/var/lib/docker/overlay2 # docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

73554900100900002861.val.dockersrv.repositories.vlab-sapcloudplatformdev.cn/com.sap.datahub.linuxx86_64/vsolution-ml-python 2.7.74 2bb018c63e82 3 days ago 2.36GB

containerreg.azurecr.io/com.sap.datahub.linuxx86_64/vsolution-ml-python 2.7.74 2bb018c63e82 3 days ago 2.36GB

73554900100900002861.val.dockersrv.repositories.vlab-sapcloudplatformdev.cn/com.sap.datahub.linuxx86_64/vsolution-hana_replication 2.7.74 7119eba7f498 3 days ago 976MB

containerreg.azurecr.io/com.sap.datahub.linuxx86_64/vsolution-hana_replication 2.7.74 7119eba7f498 3 days ago 976MB

73554900100900002861.val.dockersrv.repositories.vlab-sapcloudplatformdev.cn/com.sap.datahub.linuxx86_64/vsystem-voraadapter 2.7.118 a732aeefe28f 11 days ago 676MB

containerreg.azurecr.io/com.sap.datahub.linuxx86_64/vsystem-voraadapter 2.7.118 a732aeefe28f 11 days ago 676MB

73554900100900002861.val.dockersrv.repositories.vlab-sapcloudplatformdev.cn/com.sap.datahub.linuxx86_64/hello-sap 1.1 c4d1d0758d85 11 months ago 2.01MB

sapb4hsrv:/var/lib/docker/overlay2 #

server:~ # docker images

server:~ # docker images -aq

server:~ # docker rmi $(docker images -aq) --force

server:~ # docker images -aq

server:~ # service docker restart

server:~ # docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

server:~ #

Milestone Voracluster CRD has been deployed reached

Details of the SAP Data Hub Installation Service

So far, the pods are started/created in the following sequence (pod creation time in minutes):

tiller-deploy (already running when "helm init" was successful)

vora-security-operator (> 1min)

hana-0 (> 8min)

[INFO] Waiting for hana... [605 / 1800]

auditlog (> 2min)

init-schema (> 3min)

vora-consul (> 2min)

spark-master (> 2min)

spark-worker (> 2min)

storagegateway (> 4min)

uaa (> 4min) [INFO] Waiting for uaa... [220 / 1800]

*****

Wait until vora cluster is ready...

[INFO] Waiting for vora cluster ... [960 / 1800]

storagegateway (> 7min)

vora-deployment-operator (> 4min)

vora-dlog (> 4min)

vora-dlog-admin (> 3min incl. "Completed")

vora-config-init (> 2min incl. "Completed")

vora-catalog (> 6min)

vora-disk (> 6min)

vora-landscape (> 5min)

vora-relational (> 5min)

vora-tx-broker (> 5min)

vora-tx-coordinator (> 5min)

vora-nats-streaming (> 2min)

vora-textanalysis (> 2min)

*****

internal-comm-secret (> 1min incl. "Completed")

vsystem-module-loader (> 2min)

vsystem (> 9min incl. "Init:CreateContainerConfigError" and "Error")

[INFO] Waiting for vsystem... [155 / 1800]

vsystem-vrep-0 (> 9min incl. "Error")

*****

data-app-daemon (> 1min)

data-application (> 1min)

datahub-app-db (> 2min)

license-management (> 1min)

voraadapter (> 1min)

diagnostics-fluentd (> 1min)

diagnostics-grafana (> 1min)

diagnostics-kibana (> 1min)

diagnostics-prometheus (> 1min)

launchpad (> 2min)

If the hana-0 pod is not available at this point, find the root cause right away, as further pods will fail to start ...

Note 2823475 - System UI cannot be accessed - SAP Data Hub

Note 2789504 - A pod which is created by a statefulset keeps being terminated just after entering "R...

server:/ # kubectl get pods -n $NAMESPACE | grep vora   # or: vsystem, hana, diagnostics

vora-catalog-5f48b8987-nzgmp 2/2 Running 0 36h

vora-config-init-xwnlf 0/2 Completed 0 36h

vora-consul-0 1/1 Running 0 36h

vora-consul-1 1/1 Running 0 36h

vora-consul-2 1/1 Running 0 36h

vora-deployment-operator-66fc5f5598-j9j8r 1/1 Running 0 36h

vora-disk-0 2/2 Running 0 36h

vora-disk-1 2/2 Running 0 36h

vora-disk-2 2/2 Running 0 36h

vora-disk-3 2/2 Running 0 36h

vora-dlog-0 2/2 Running 0 36h

vora-dlog-admin-28tlz 0/2 Completed 0 36h

vora-landscape-8c588c8b5-7l485 2/2 Running 0 36h

vora-nats-streaming-7c9f595679-tkl6x 1/1 Running 0 36h

vora-relational-67f674ff56-8z5k9 2/2 Running 0 36h

vora-security-operator-679bf94bff-49lms 1/1 Running 0 36h

vora-textanalysis-6649bc9594-tcjlq 1/1 Running 0 36h

vora-tools-zvd2t-689776879d-4ktvb 3/3 Running 0 35h

vora-tx-broker-6445448665-srkzv 2/2 Running 0 36h

vora-tx-coordinator-69f5cbcf74-hx4sw 2/2 Running 0 36h

voraadapter-2b5v2-79fccb794b-blndv 3/3 Running 0 36h

voraadapter-q4s4z-dbf9fd4dd-7ktpz 3/3 Running 0 36h

server:/ #
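With this many pods it is easy to overlook the one that is not healthy. A small filter over the `kubectl get pods` output, as a sketch: `unhealthy_pods` is a hypothetical helper name, and "healthy" is assumed to mean either Completed, or Running with all containers ready.

```shell
#!/bin/sh
# Sketch: list pods that are neither Completed nor fully ready.
# unhealthy_pods is a hypothetical helper name.
#   stdin:  output of "kubectl get pods -n <namespace>"
#   stdout: "<pod name> <status>" for every pod that needs attention
unhealthy_pods() {
    awk '$1 != "NAME" {
        if ($3 == "Completed") next          # finished jobs are fine
        split($2, ready, "/")                # READY column, e.g. 1/2
        if ($3 != "Running" || ready[1] != ready[2]) print $1, $3
    }'
}
```

Usage: `kubectl get pods -n "$NAMESPACE" | unhealthy_pods`. Empty output means that every pod is either Completed or Running with all containers ready.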

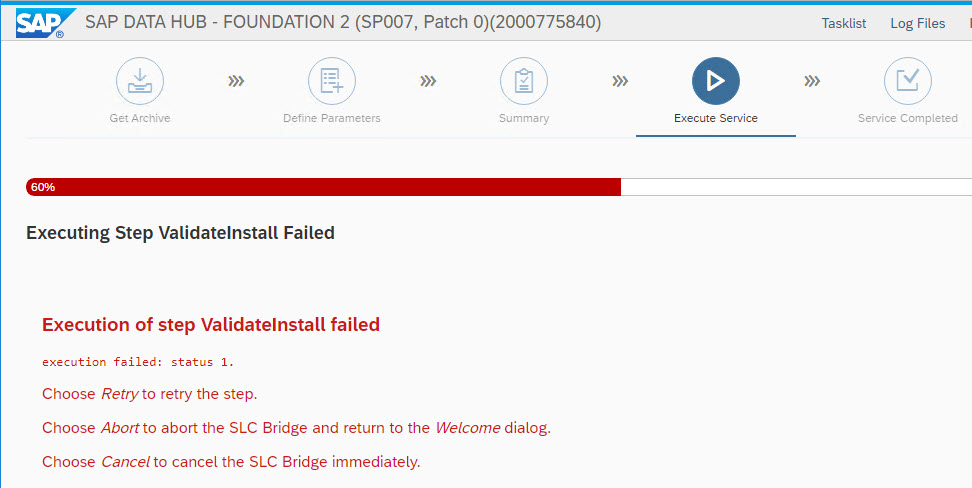

If you start the installation again and some artifacts from a previous run are left over, it can happen that a chart cannot be deployed and the SLC Bridge shows an error:

2019-08-16T12:38:43.061+0200 INFO cmd/cmd.go:243 2> Error: release kissed-catfish failed: storageclasses.storage.k8s.io "vrep-nsidnawdf03" already exists

2019-08-16T12:38:43.067+0200 INFO cmd/cmd.go:243 1> 2019-08-16T12:38:43+0200 [ERROR] Deployment failed, please check logs above and Kubernetes dashboard for more information!

2019-08-16T12:38:43.230+0200 INFO control/steps.go:288

----------------------------

Execution of step Install (Deploying SAP Data Hub.) finished with error: execution failed: status 1, error: Error: release kissed-catfish failed:

storageclasses.storage.k8s.io "vrep-nsidnawdf03" already exists

----------------------------

2019-08-16T12:38:43.230+0200 WARN control/controlfile.go:1430

----------------------------

Step Install failed: execution failed: status 1, error: Error: release kissed-catfish failed: storageclasses.storage.k8s.io "vrep-nsidnawdf03" already exists

----------------------------

2019-08-16T12:38:43.230+0200 ERROR slp/slp_monitor.go:102

----------------------------

Executing Step Install Failed:

Execution of step Install failed

execution failed: status 1, error: Error: release kissed-catfish failed: storageclasses.storage.k8s.io "vrep-nsidnawdf03" already exists

.

Choose Retry to retry the step.

Choose Abort to abort the SLC Bridge and return to the Welcome dialog.

Choose Cancel to cancel the SLC Bridge immediately.

----------------------------

The easiest way to solve the issue is to roll back the failed chart with helm ...

server:/ # helm ls

NAME REVISION UPDATED STATUS CHART APP VERSION

crazy-hedgehog 1 Fri Aug 16 12:28:25 2019 DEPLOYED vora-diagnostic-rbac-2.0.2

giggly-quoll 1 Fri Aug 16 12:28:39 2019 DEPLOYED vora-consul-0.9.0-sap13 0.9.0

honorary-lizard 1 Fri Aug 16 12:32:09 2019 DEPLOYED vora-security-operator-0.0.24

hoping-eel 1 Fri Aug 16 12:28:29 2019 DEPLOYED vora-deployment-rbac-0.0.21

invincible-possum 1 Fri Aug 16 12:28:37 2019 DEPLOYED hana-0.0.1

kissed-catfish 1 Fri Aug 16 12:38:42 2019 FAILED vora-vsystem-2.6.60

lame-maltese 1 Fri Aug 16 12:38:27 2019 DEPLOYED vora-textanalysis-0.0.33

peddling-porcupine 1 Fri Aug 16 12:33:31 2019 DEPLOYED vora-deployment-operator-0.0.21

piquant-bronco 1 Fri Aug 16 12:32:33 2019 DEPLOYED storagegateway-2.6.32

righteous-hare 1 Fri Aug 16 12:32:11 2019 DEPLOYED uaa-0.0.24

trendsetting-porcupine 1 Fri Aug 16 12:33:29 2019 DEPLOYED vora-cluster-0.0.21

unhinged-olm 1 Fri Aug 16 12:32:39 2019 DEPLOYED vora-sparkonk8s-2.6.22 2.6.22

vehement-ant 1 Fri Aug 16 12:31:58 2019 DEPLOYED vora-security-context-0.0.24

winning-emu 1 Fri Aug 16 12:32:07 2019 DEPLOYED auditlog-0.0.24

server:~ # helm rollback kissed-catfish 1

Rollback was a success! Happy Helming!

server:/ # helm list

NAME REVISION UPDATED STATUS CHART APP VERSION

crazy-hedgehog 1 Fri Aug 16 12:28:25 2019 DEPLOYED vora-diagnostic-rbac-2.0.2

giggly-quoll 1 Fri Aug 16 12:28:39 2019 DEPLOYED vora-consul-0.9.0-sap13 0.9.0

honorary-lizard 1 Fri Aug 16 12:32:09 2019 DEPLOYED vora-security-operator-0.0.24

hoping-eel 1 Fri Aug 16 12:28:29 2019 DEPLOYED vora-deployment-rbac-0.0.21

invincible-possum 1 Fri Aug 16 12:28:37 2019 DEPLOYED hana-0.0.1

kissed-catfish 2 Fri Aug 16 13:53:35 2019 DEPLOYED vora-vsystem-2.6.60

lame-maltese 1 Fri Aug 16 12:38:27 2019 DEPLOYED vora-textanalysis-0.0.33

peddling-porcupine 1 Fri Aug 16 12:33:31 2019 DEPLOYED vora-deployment-operator-0.0.21

piquant-bronco 1 Fri Aug 16 12:32:33 2019 DEPLOYED storagegateway-2.6.32

righteous-hare 1 Fri Aug 16 12:32:11 2019 DEPLOYED uaa-0.0.24

trendsetting-porcupine 1 Fri Aug 16 12:33:29 2019 DEPLOYED vora-cluster-0.0.21

unhinged-olm 1 Fri Aug 16 12:32:39 2019 DEPLOYED vora-sparkonk8s-2.6.22 2.6.22

vehement-ant 1 Fri Aug 16 12:31:58 2019 DEPLOYED vora-security-context-0.0.24

winning-emu 1 Fri Aug 16 12:32:07 2019 DEPLOYED auditlog-0.0.24

server:/ #
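Because the release names are random, finding the failed one by eye is tedious. The lookup can be sketched as a filter over the `helm ls` output; `failed_releases` is a hypothetical helper name and assumes the column layout shown above.

```shell
#!/bin/sh
# Sketch: print the names of all releases whose status is FAILED.
# failed_releases is a hypothetical helper name.
#   stdin: output of "helm ls" (layout as shown in the listings above)
failed_releases() {
    awk '{
        for (i = 2; i <= NF; i++)
            if ($i == "FAILED") { print $1; break }
    }'
}
```

Usage: `helm ls | failed_releases` prints the release name that you then pass to `helm rollback <release> <revision>`.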

after the Installation/Upgrade to Version 2.7.x you might see some new animals .. 😉

server:/ # helm list

NAME REVISION UPDATED STATUS CHART APP VERSION

calico-mite 1 Sat Nov 2 01:07:07 2019 DEPLOYED vora-vsystem-2.7.123

cautious-squirrel 1 Sat Nov 2 00:51:25 2019 DEPLOYED vora-security-context-0.0.24

cert-manager 1 Sat Nov 2 01:45:49 2019 DEPLOYED cert-manager-v0.7.2 v0.7.2

errant-wasp 1 Sat Nov 2 01:00:24 2019 DEPLOYED storagegateway-2.7.44

erstwhile-dragonfly 1 Sat Nov 2 00:59:10 2019 DEPLOYED uaa-0.0.24

giggly-magpie 1 Sat Nov 2 01:00:31 2019 DEPLOYED vora-sparkonk8s-2.7.18 2.7.18

hopping-poodle 1 Sat Nov 2 01:06:52 2019 DEPLOYED vora-textanalysis-0.0.33

invincible-labradoodle 1 Sat Nov 2 01:13:11 2019 DEPLOYED vora-diagnostic-2.0.2

kissable-elk 1 Sat Nov 2 00:59:08 2019 DEPLOYED auditlog-0.0.24

lame-prawn 1 Sat Nov 2 01:01:42 2019 DEPLOYED vora-deployment-operator-0.0.21

linting-dog 1 Sat Nov 2 00:51:22 2019 DEPLOYED network-policies-0.0.1

nonexistent-bear 1 Sat Nov 2 01:01:41 2019 DEPLOYED vora-deployment-rbac-0.0.21

prodding-chicken 1 Sat Nov 2 01:01:22 2019 DEPLOYED vora-cluster-0.0.21

singing-anaconda 1 Sat Nov 2 00:52:08 2019 DEPLOYED vora-consul-0.9.0-sap13 0.9.0

vetoed-quokka 1 Sat Nov 2 01:28:09 2019 DEPLOYED nginx-ingress-1.24.4 0.26.1

vigilant-goose 1 Sat Nov 2 00:51:32 2019 DEPLOYED vora-security-operator-0.0.24

yodeling-seastar 1 Sat Nov 2 01:13:07 2019 DEPLOYED vora-diagnostic-rbac-2.0.2

yummy-sparrow 1 Sat Nov 2 00:52:07 2019 DEPLOYED hana-2.7.6

server:/ #

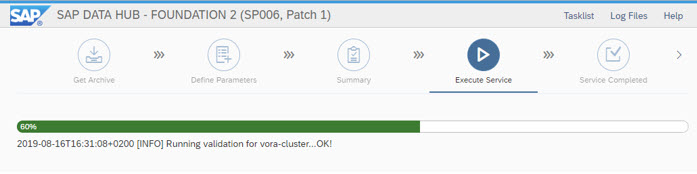

Milestone Initializing system tenant reached ...

Milestone Running validation for vora-cluster reached

If this phase takes longer than expected, check the log files for timeouts, issues, etc.

server:/sapmnt/hostctrl/slplugin/work # dir

total 544

drwxr-x--- 3 root root 4096 Aug 16 16:37 .

drwxr-x--- 9 sapadm sapsys 4096 Aug 14 11:19 ..

-rwxr-x--- 1 root root 31860 Aug 16 16:34 EvalForm.html

-rw-r----- 1 root root 11366 Aug 16 16:34 analytics.xml

-rw-r----- 1 root root 1226 Aug 16 16:32 auditlog_validation_log.txt

-rw-r----- 1 root root 628 Aug 16 16:20 cert_generation_log.txt

-rwxr-x--- 1 root root 59077 Aug 16 16:11 control.yml

-rw-r----- 1 root root 91 Aug 16 16:32 datahub-app-base-db_validation_log.txt

drwxr-x--- 2 root root 4096 Aug 16 16:32 displaytab

-rw-r----- 1 root root 154092 Aug 16 16:16 helm.tar.gz

-rw-r----- 1 root root 1566 Aug 16 16:12 inputs.log

-rw------- 1 root root 437 Aug 16 16:37 loginfo.yml

-rw-r----- 1 root root 219760 Aug 16 16:37 slplugin.log

-rw-r----- 1 root root 15 Aug 16 16:10 slplugin.port

-rw-r----- 1 root root 14349 Aug 16 16:15 variables.yml

-rw-r----- 1 root root 3625 Aug 16 16:31 vora-cluster_validation_log.txt

-rw-r----- 1 root root 1098 Aug 16 16:32 vora-diagnostic_validation_log.txt

-rw-r----- 1 root root 59 Aug 16 16:32 vora-textanalysis_validation_log.txt

-rw-r----- 1 root root 2077 Aug 16 16:31 vora-vsystem_validation_log.txt

server:/sapmnt/hostctrl/slplugin/work #

server:/ # tail -f vora-cluster_validation_log.txt

server:/ # kubectl describe pods -n $NAMESPACE | grep <pods>
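Instead of tailing each validation log separately, all of them can be scanned in one go. A sketch only: `scan_validation_logs` is a hypothetical helper name, and the search words are an assumption about what typically shows up in these files.

```shell
#!/bin/sh
# Sketch: search all *_validation_log.txt files for suspicious lines.
# scan_validation_logs is a hypothetical helper name.
#   $1: directory with the logs (defaults to the current directory),
#       e.g. /sapmnt/hostctrl/slplugin/work
# Prints "<file>:<line number>:<text>" for every hit; returns non-zero
# if nothing was found.
scan_validation_logs() {
    dir=${1:-.}
    grep -inE 'error|timeout|failed' "$dir"/*_validation_log.txt
}
```

Usage: `scan_validation_logs /sapmnt/hostctrl/slplugin/work`.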

Even if you see the successful SLC screen at this point, the SAP Data Hub validation can still fail ...

Analyze the root cause here. In this case, the container log path was modified during the installation.

server:/ # kubectl get pods -n $NAMESPACE | grep Container

diagnostics-fluentd-4fslg 1/1 ContainerCreating 0 73m

diagnostics-fluentd-8vpbl 1/1 ContainerCreating 0 72m

diagnostics-fluentd-d6nmn 1/1 ContainerCreating 0 71m

diagnostics-fluentd-dcxhk 1/1 ContainerCreating 0 71m

diagnostics-fluentd-j2pct 1/1 ContainerCreating 0 72m

diagnostics-fluentd-qq6wc 1/1 ContainerCreating 0 73m

server:~ # kubectl describe pods diagnostics-fluentd-4fslg -n $NAMESPACE

...

Warning FailedMount 23 (x45 over 75m) kubelet, aks-agentpool-334774746-vmss

MountVolume.SetUp failed for volume "varlibdockercontainers" ... failed:

/sapmnt/docker/container is not a directory

server:/ # kubectl get pods -n $NAMESPACE | grep diagnostics-fluentd

diagnostics-fluentd-4fslg 1/1 Running 0 35h

diagnostics-fluentd-8vpbl 1/1 Running 0 35h

diagnostics-fluentd-d6nmn 1/1 Running 0 35h

diagnostics-fluentd-dcxhk 1/1 Running 0 35h

diagnostics-fluentd-j2pct 1/1 Running 0 35h

diagnostics-fluentd-qq6wc 1/1 Running 0 35h

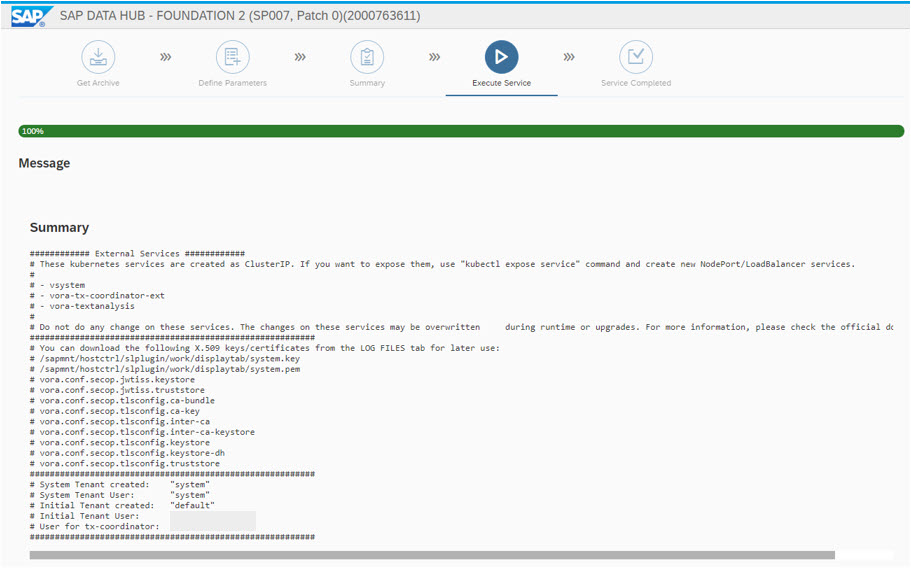

After the successful validation of the Vora cluster, the SAP Data Hub installation is finished ...

The summary screen shows additional information about the installation ...

Done (really?) ...

Upgrade SAP DataHub to Version 2.7.x

When upgrading SAP Data Hub, all vflow-graphs have to be stopped/deleted

server:/ # kubectl delete pod -n $NAMESPACE $(kubectl get pods -n $NAMESPACE | grep vflow-graph | grep -E 'Completed|Error|CrashLoopBackOff' | awk '{print $1}')

pod "vflow-graph-e828efc7f06745ecb8e856dce309a8c8-github-alert-btmb7" deleted

server:/ #

server:/ # kubectl delete pod -n $NAMESPACE $(kubectl get pods -n $NAMESPACE | grep pipeline-modeler | grep -E 'Completed|Error|CrashLoopBackOff' | awk '{print $1}')

pod "pipeline-modeler-7c683d466815e0c3ea079a-6d8496fdb5-fv6bz" deleted

server:/ #

server:/ # kubectl get pods -n $NAMESPACE | grep vflow-graph

server:/ # kubectl delete pod vflow-graph-9f58354844fe4c3982d04454f1fd056c-com-sap-demo-kjdz5 -n $NAMESPACE

pod "vflow-graph-9f58354844fe4c3982d04454f1fd056c-com-sap-demo-kjdz5" deleted

server:/ #
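The cleanup above can also be written as a reusable filter, so that `kubectl delete` is never called with an empty pod list. A sketch under the same assumptions as the commands above; `finished_pods` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: select pods by name prefix that are Completed, Error or
# CrashLoopBackOff. finished_pods is a hypothetical helper name.
#   $1:     pod name prefix, e.g. vflow-graph or pipeline-modeler
#   stdin:  output of "kubectl get pods -n <namespace>"
#   stdout: one pod name per line
finished_pods() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 &&
        $3 ~ /^(Completed|Error|CrashLoopBackOff)$/ { print $1 }'
}
```

Usage: `kubectl get pods -n "$NAMESPACE" | finished_pods vflow-graph | xargs -r kubectl delete pod -n "$NAMESPACE"`. The `-r` switch of GNU xargs skips the delete call when nothing matches. Graphs that are still Running are not touched and have to be stopped separately.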

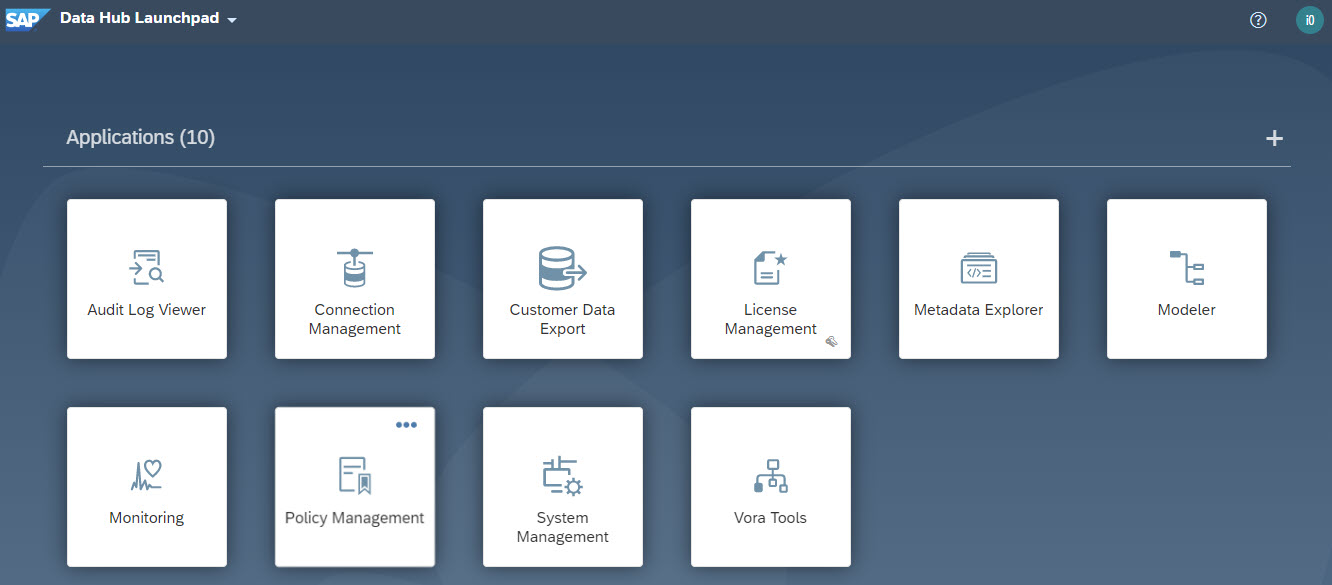

post Installation Steps for the SAP Data Hub

Finally you have to expose the SAP Data Hub Launchpad. This can be found in the

online help - Configuring SAP Data Hub Foundation on Cloud Platforms

After upgrading SAP Data Hub the base system runs in the new version, but applications on top still run in the previous version.

online help - Activate New SAP Data Hub System Management Applications

The way the SAP Data Hub Launchpad is exposed in the blog "Your SAP on Azure – Part 20 – Expose SAP Data Hub Launchpad" looks more efficient than the one in the SAP online help.

server:/ # helm install stable/nginx-ingress --namespace kube-system

server:/ # kubectl -n kube-system get services -o wide | grep ingress-controller

dining-mule-nginx-ingress-controller LoadBalancer 10.0.110.14 51.144.74.205 80:30202/TCP,443:31424/TCP 38d app=nginx-ingress,component=controller,release=dining-mule

nonexistent-camel-nginx-ingress-controller LoadBalancer 10.0.112.222 13.80.131.18 80:30494/TCP,443:31168/TCP 3d18h app=nginx-ingress,component=controller,release=nonexistent-camel

virtuous-seal-nginx-ingress-controller LoadBalancer 10.0.161.96 13.80.71.39 80:31059/TCP,443:31342/TCP 70m app=nginx-ingress,component=controller,release=virtuous-seal

server:/ #

server:/ # kubectl -n kube-system delete services dining-mule-nginx-ingress-controller

server:/ # kubectl -n kube-system delete services nonexistent-camel-nginx-ingress-controller

Blog - Secure the Ingress for DH 2.7 or DI 3.0

If you have installed SAP Data Hub more than once, check whether an Ingress Controller is already running. There should be only one ingress-controller, attached to one LoadBalancer and one external IP address.
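The "only one ingress controller" rule can be checked with a small filter over the service listing. A sketch; `ingress_loadbalancers` is a hypothetical helper name and relies on the service names ending in "ingress-controller", as in the output above.

```shell
#!/bin/sh
# Sketch: list ingress controller services of type LoadBalancer together
# with their external IP. ingress_loadbalancers is a hypothetical helper.
#   stdin:  output of "kubectl -n kube-system get services -o wide"
#   stdout: "<service name> <external IP>", one line per controller
ingress_loadbalancers() {
    awk '$1 ~ /ingress-controller$/ && $2 == "LoadBalancer" { print $1, $4 }'
}
```

Usage: `kubectl -n kube-system get services -o wide | ingress_loadbalancers`. More than one output line means there are leftover controllers from earlier attempts.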

online help - Expose SAP Vora Transaction Coordinator and SAP HANA Wire Externally

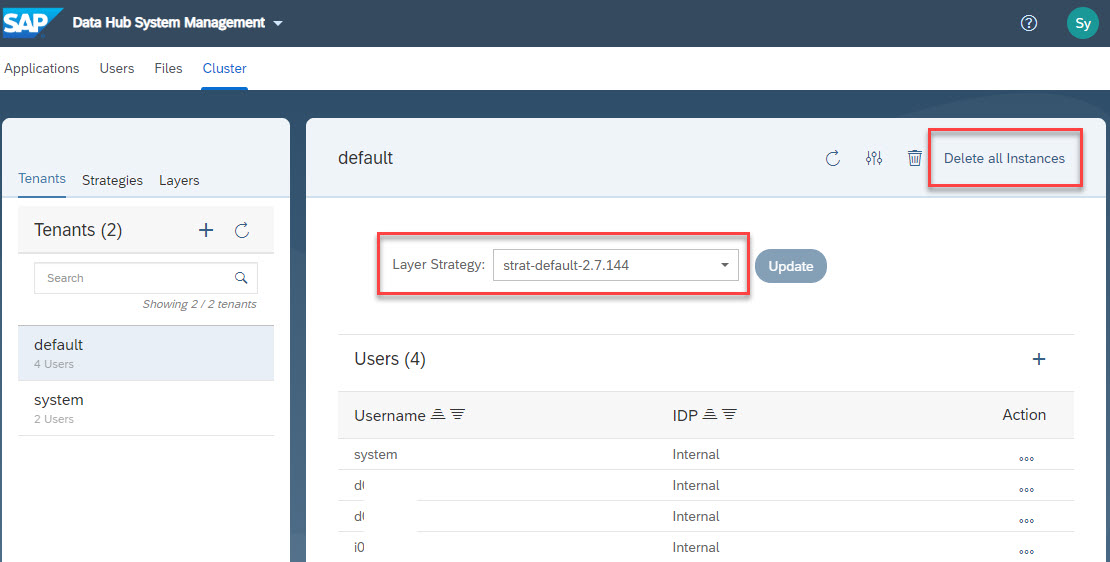

Log on to the SAP Data Hub

online help - Launchpad for SAP Data Hub

a new logon procedure is available with SAP Datahub Version 2.7.x

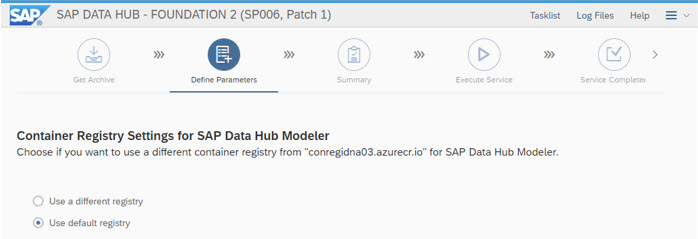

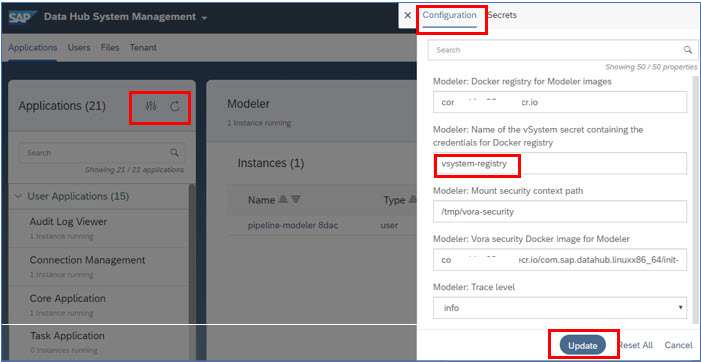

If you installed SAP Data Hub on Azure or if you use a password-protected container registry, then you must configure the access credentials for the container registry. To provide the access credential for the container registry to SAP Data Hub Modeler, you must define a secret within the corresponding tenant and associate it with the Modeler.

online help - Provide Access Credentials for a Password Protected Container Registry

To verify the SAP Data Hub installation, test the SAP Data Hub Modeler

online help - define a Pipeline

Important Post Steps for the vital health of the SAP Datahub

After some investigation, we found it necessary to increase some important values in the "on-premise" SAP Data Hub implementation.

Hopefully this is also reflected in the SAP Data Intelligence Cloud Environment ...

- increase vrep pod CPU upper limit from 1 to 4

- increase vrep pod memory upper limit from 2 to 8GB

- increase vsystem pod memory upper limit from 1 to 2GB

- increase the NFS threadcount

- increase the SAP Datahub vrep pvc from 10 to 50GB

The good news is that you can increase these values online without any downtime, and they are persistent, except for the NFS thread count increase.

server:/ # kubectl patch statefulsets.apps -n $NAMESPACE vsystem-vrep -p '{"spec":{"template":{"spec":{"containers":[{"name":"vsystem-vrep","resources":{"limits":{"cpu":"4", "memory":"8Gi"}}}]}}}}'

server:/ # kubectl patch deploy vsystem -n $NAMESPACE -p '{"spec":{"template":{"spec":{"containers":[{"name":"vsystem","resources":{"limits":{"memory":"2Gi"}}}]}}}}'
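The nested JSON in the two patches above is easy to get wrong by a single brace. As a sketch, the patch string can be generated by a small function; `limits_patch` is a hypothetical helper name and only builds the text, it does not talk to the cluster.

```shell
#!/bin/sh
# Sketch: build the JSON patch for container resource limits.
# limits_patch is a hypothetical helper name.
#   $1: container name, e.g. vsystem-vrep
#   $2: limits as JSON members, e.g. '"cpu":"4","memory":"8Gi"'
limits_patch() {
    printf '{"spec":{"template":{"spec":{"containers":[{"name":"%s","resources":{"limits":{%s}}}]}}}}\n' \
        "$1" "$2"
}
```

Usage: `kubectl patch deploy vsystem -n "$NAMESPACE" -p "$(limits_patch vsystem '"memory":"2Gi"')"`. Afterwards, `kubectl get deploy vsystem -n "$NAMESPACE" -o jsonpath='{.spec.template.spec.containers[?(@.name=="vsystem")].resources.limits}'` should show the new values.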

server:/ # rpc.mountd -N2 -N3 -V4 -V4.1 -V4.2 -t20

Increasing the vrep persistent volume claim (PVC) is a bit more tricky ...

server:/ # kubectl -n $NAMESPACE get storageclass ${STORAGE_CLASS} -o wide

NAME PROVISIONER AGE

default (default) kubernetes.io/azure-disk 6h55m

managed-premium kubernetes.io/azure-disk 6h55m

vrep-<namespace> sap.com/vrep 46d

vrep-runtime-<namespace> sap.com/vrep 46d

server:/ # kubectl get pvc -n $NAMESPACE | grep vrep-0

layers-volume-vsystem-vrep-0 Bound pvc-b5e4c4dc-fd04-11e9-9d1f-6aff925ba50e 50Gi RWO managed-premium 46d

server:/ #

server:/ # kubectl patch statefulsets.apps -n $NAMESPACE vsystem-vrep -p '{"spec":{"replicas":0}}'

# wait until the event/message changes, ignore Azure issues related to VM

server:/ # kubectl get sc managed-premium -n $NAMESPACE -o yaml

server:/ #

server:/ # kubectl edit sc managed-premium -n $NAMESPACE

# add allowVolumeExpansion: true

server:/ #

server:/ # kubectl describe pvc layers-volume-vsystem-vrep-0 -n $NAMESPACE

server:/ # kubectl edit pvc layers-volume-vsystem-vrep-0 -n $NAMESPACE

# change spec.resources.requests.storage from 10Gi to 50Gi

server:/ #

server:/ # kubectl patch statefulsets.apps -n $NAMESPACE vsystem-vrep -p '{"spec":{"replicas":1}}'

# wait until the event/message changes, ignore Azure issues related to VM

server:/ # kubectl exec -ti -n $NAMESPACE vsystem-vrep-0 -- bash

vsystem-vrep-0:/ # df -h

Filesystem Size Used Avail Use% Mounted on

overlay 97G 50G 48G 52% /

tmpfs 64M 0 64M 0% /dev

tmpfs 28G 0 28G 0% /sys/fs/cgroup

/dev/sda1 97G 50G 48G 52% /exports

/dev/sdf 59G 7.7G 52G 13% /vrep/layers

tmpfs 28G 4.0K 28G 1% /etc/certs

shm 64M 0 64M 0% /dev/shm

tmpfs 28G 4.0K 28G 1% /etc/certs/root-ca

tmpfs 28G 8.0K 28G 1% /etc/certs/vrep

vsystem-vrep-0:/ # <Ctrl> - D

vsystem-vrep-0:/ #
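The two interactive `kubectl edit` steps above can also be done with `kubectl patch`, which is easier to repeat. A sketch, assuming sizes are given in whole Gi; `pvc_resize_patch` is a hypothetical helper name that only builds the patch text.

```shell
#!/bin/sh
# Sketch: build the JSON patch that requests a new PVC size.
# pvc_resize_patch is a hypothetical helper name.
#   $1: new size in whole Gi, e.g. 50Gi
# Prints the patch, or returns 1 if the size does not look like <n>Gi.
pvc_resize_patch() {
    printf '%s\n' "$1" | grep -Eq '^[1-9][0-9]*Gi$' || {
        echo "size must look like 50Gi" >&2
        return 1
    }
    printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}
```

Usage: first `kubectl patch storageclass managed-premium -p '{"allowVolumeExpansion":true}'`, then `kubectl patch pvc layers-volume-vsystem-vrep-0 -n "$NAMESPACE" -p "$(pvc_resize_patch 50Gi)"`. Scaling the statefulset down and up again stays the same as in the procedure above.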

Additional SAP Notes for SAP Datahub troubleshooting:

Note 2784068 - Modeler instances show 500 error - SAP Data Hub

Note 2796073 - HANA authentication failed error in Conn Management - SAP Data Hub

Note 2807716 - UnknownHostException when testing connection - SAP Data Hub

Note 2813853 - Elasticsearch runs out of persistent volume disk space

Note 2823040 - Clean Up Completed Graphs - SAP Data Hub

Note 2857810 - How to automatically remove SAP Data Hub Pipeline Modeler graphs from the Status tab

Note 2851248 - Bad Gateway when testing HDFS connection - SAP Data Hub

Note 2863626 - vsystem pod failed to start with error 'start-di failed with ResourceRequest timed ou...

Note 2856566 - ABAP ODP Object Consumer not working - SAP Data Hub

Note 2866506 - Scheduling a graph with variables fails

Note 2751127 - Support information for SAP Data Hub

Note 2807438 - Release Restrictions for SAP Data Hub 2.6

Note 2838751 - Release Restrictions for SAP Data Hub 2.7

Note 2838714 - SAP Data Hub 2.7 Release Note

Note 2836631 – Known and Fixed Issues in Self-Service Data Preparation in SAP Data Hub and SAP Data ...

Note 2739161 - Replacements for Deprecated Operators and Dockerfiles in SAP Data Hub and SAP Data In...

Roland Kramer, SAP Platform Architect for Intelligent Data & Analytics

@RolandKramer

“I have no special talent, I am only passionately curious.”

