- Handling text files in Groovy script of CPI (SAP C...

Technology Blogs by SAP

subhojit_saha2

07-15-2019

8:59 AM

Introduction:

Handling huge text files (either CSV or fixed-length) is a challenge in CPI (SAP Cloud Platform Integration).

Before converting them to the XML required for mapping, we usually read them in Groovy scripts and manipulate the data there. Most often this is done by converting the payload to a String, which is very memory intensive.

In this blog post, I will show alternative ways to handle such files: not only how to read large files, but also how to manipulate them.

I hope you enjoy reading it.

Main Section:

In CPI (SAP Cloud Platform Integration) we sometimes come across scenarios where we need to process an input CSV file or some other character-delimited text file.

These files are often much larger than the payloads we receive as XML or JSON.

This data, which can be delimited by “,”, tab or “|”, or be of fixed length, creates additional complexity: it first has to be read, sorted and converted to XML (for mapping to a target structure) before it can finally be processed. Sometimes we also have to check the number of fields beforehand to decide whether a line is worth processing at all, so that unnecessary data does not flow any further.

For example, take the file input.csv:

A,12234,NO,C,20190711,……

A,26579,NO,D,20190701,…….

……………………………………………..

……………………………………………..

Say we have to process all lines of the above file where the fourth field, a flag, is set to ‘D’ (the debit indicator).

So, in the above example, after reading the file we should keep only the lines that have ‘D’ as the fourth field; line 1 above should therefore not be processed further.

Below we will see how to handle text and CSV files, especially huge ones, and how to process each line without converting the whole payload to a String, which consumes more memory.

*. Reading large files:

We normally start our scripts by converting the input payload to a String object.

String content = message.getBody(String) // this line is used in most scripts

But for large files, the above line converts the whole payload to a String and holds it in memory, which is not good practice. Any further change that creates a new String or replaces the old one takes up even more space. This also brings the risk of an OutOfMemoryError.

The better way is to handle the payload as a stream. There are two classes that can handle stream data:

a. java.io.Reader -> handles data as a character (text) stream

b. java.io.InputStream -> handles data as a raw (binary) stream

Depending on the level of control you need over the data, or on the business requirement, you can use either of them. The Reader class is usually easier to use because it delivers data already decoded into characters (Java holds characters as UTF-16 code units), whereas InputStream delivers the raw bytes in whatever encoding the payload uses (for example UTF-8).

Reading Data in CPI groovy script via java.io.Reader:
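
The idea can be sketched in plain Java so that it runs outside CPI. This is a minimal sketch, not the original script from this post: the class and method names are hypothetical, and in an actual CPI script the Reader is assumed to come from the message body (for example `message.getBody(java.io.Reader)`) rather than from a test string.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.function.Consumer;

// Hypothetical helper; the class and method names are not from the original post.
class LineReaderSketch {

    // Passes each line to the handler and returns the number of lines read.
    // Only the current line is held as a String, never the whole payload.
    static long forEachLine(Reader source, Consumer<String> handler) {
        long count = 0;
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                handler.accept(line);
                count++;
            }
        } catch (IOException e) {
            // Rethrown unchecked so callers do not need a throws clause.
            throw new UncheckedIOException(e);
        }
        return count;
    }
}
```

For a quick check, `LineReaderSketch.forEachLine(new java.io.StringReader("a\nb"), System.out::println)` prints each line in turn. The BufferedReader wrapper is what keeps the per-line reads cheap.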

Reading Data at each field or word level, for each line:
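
For the input.csv example above, a field-level filter might look like the following. It is a sketch under stated assumptions: the names are hypothetical, fields are split on a plain comma (quoted fields containing commas are not handled), and the kept lines are written to a Writer instead of being collected in one large String.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;

// Hypothetical helper; the class and method names are not from the original post.
class DebitLineFilterSketch {

    // Copies only the lines whose fourth field is "D" to the target
    // and returns how many lines were kept.
    static int keepDebitLines(Reader source, Writer target) {
        int kept = 0;
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                // Limit -1 keeps trailing empty fields, so the field count stays accurate.
                String[] fields = line.split(",", -1);
                // Lines with fewer than four fields are dropped as not worth processing.
                if (fields.length >= 4 && "D".equals(fields[3].trim())) {
                    target.write(line);
                    target.write('\n');
                    kept++;
                }
            }
            target.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return kept;
    }
}
```

With the two sample lines from input.csv, only the second one (fourth field ‘D’) reaches the target; the field-count check also covers the validation of too-short lines mentioned earlier.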

*. Not a good way to do replace on data in CPI Groovy:

The String way of doing it –

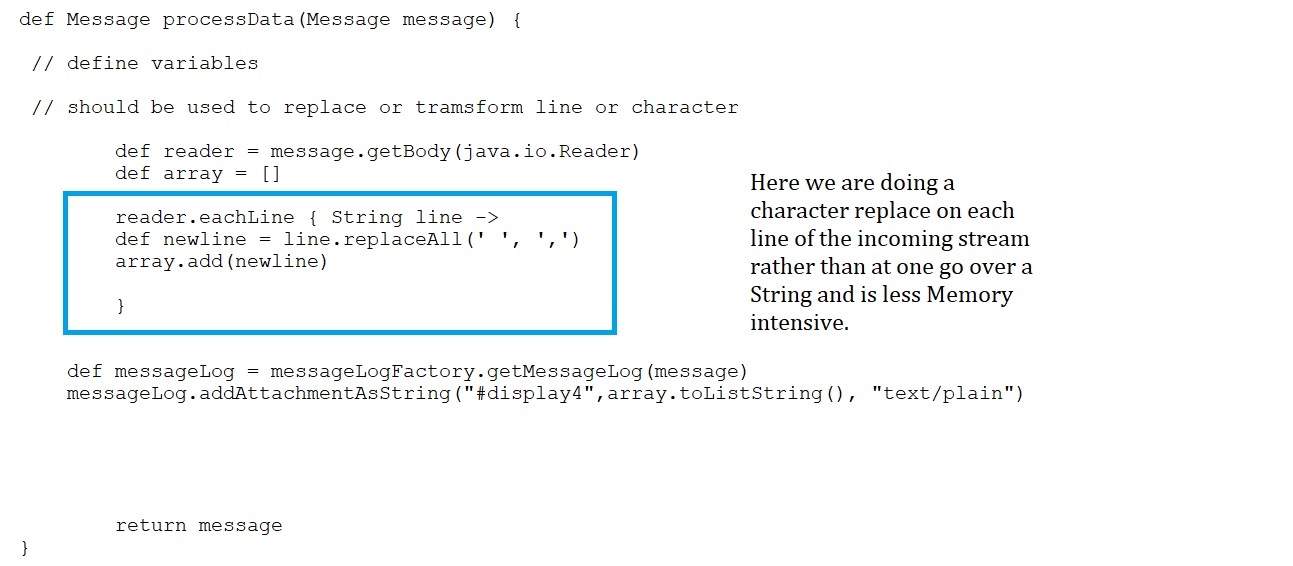

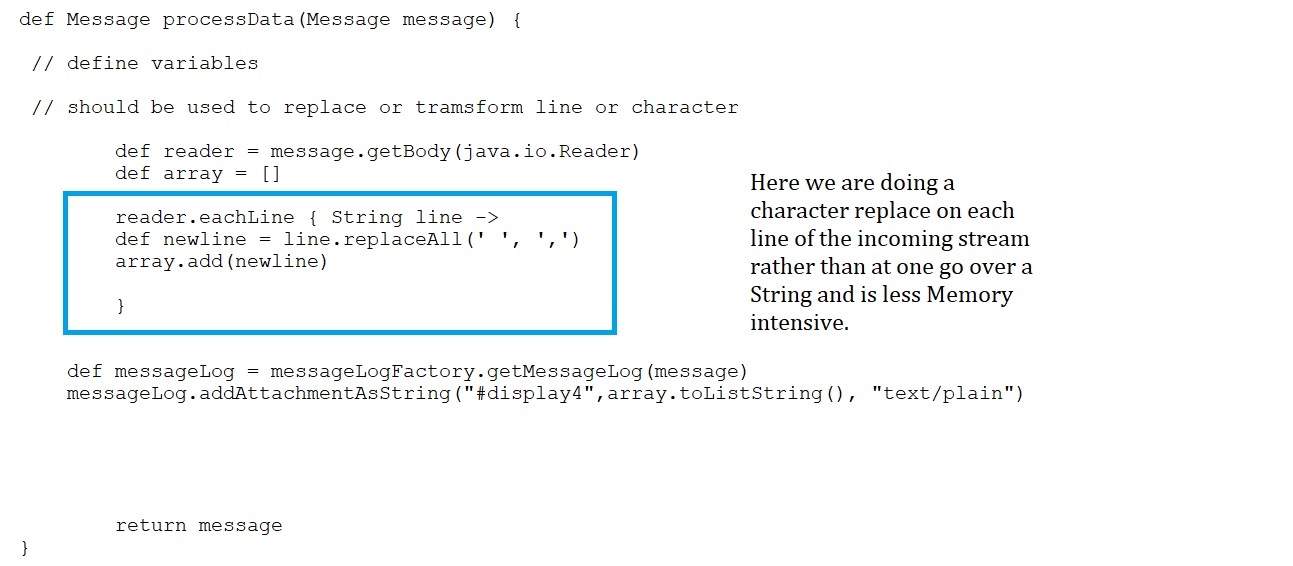

The better approach of doing a replace while reading it as Stream:
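
A streaming replace can be sketched as below. This is an illustration with hypothetical names, not the code from the original post. It assumes the text to replace never spans a line break, and it writes every line back with a "\n" terminator, so the original line endings are normalized.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;

// Hypothetical helper; the class and method names are not from the original post.
class StreamingReplaceSketch {

    // Replaces every literal occurrence of search with replacement, one line at a time.
    // The only String copies created are for the current line, not for the whole payload.
    static void replaceInEachLine(Reader source, Writer target, String search, String replacement) {
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                // String.replace treats both arguments literally (no regular expressions).
                target.write(line.replace(search, replacement));
                target.write('\n');
            }
            target.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Compared with calling replace on the full payload String, the memory in use at any moment is bounded by the longest line rather than by the file size, provided the target is itself a stream and not an in-memory buffer.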

*. Reading the payload as a java.io.InputStream stream object:
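
The following sketch shows two ways of consuming an InputStream: as raw bytes in fixed-size chunks, and as text by wrapping it in an InputStreamReader with an explicit charset. The names and the 8 KB buffer size are assumptions for illustration, and in a CPI script the stream is assumed to come from the message body (for example `message.getBody(java.io.InputStream)`).

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.Charset;

// Hypothetical helper; the class and method names are not from the original post.
class InputStreamSketch {

    // Reads the stream in 8 KB chunks and returns the total number of raw bytes.
    static long countBytes(InputStream in) {
        byte[] buffer = new byte[8192];
        long total = 0;
        try {
            int read;
            while ((read = in.read(buffer)) != -1) {
                total += read;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    // Decodes the bytes with the given charset and counts the text lines.
    static long countLines(InputStream in, Charset charset) {
        long lines = 0;
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, charset))) {
            while (reader.readLine() != null) {
                lines++;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }
}
```

The byte count and the character count of the same payload can differ: in UTF-8, a character such as "é" occupies two bytes. Naming the charset explicitly when moving from bytes to characters avoids depending on the platform default.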

Conclusion:

This blog post is written to ease the pain of developers. While building iFlows, we come across many cases where we need to handle large text files in CSV or another delimited format, which requires reading the entire file and sometimes working on the data of each line, for example by parsing the text.

In all those cases, this blog post can help to build the required Groovy scripts quickly for use in CPI (SAP Cloud Platform Integration) iFlows that handle these types of data.

It speeds up such developments by providing an approach and reusable code for achieving the outcome.

I look forward to your input and suggestions.

