- S/4 Data Migration – How to use Data Migration Coc...

soujanya_krishn


07-03-2019

5:38 PM

In S/4 data migrations, the data migration cockpit has become an essential tool. With the S/4 world evolving rapidly, SAP is coming up with new enhancements to the Data Migration Cockpit almost every week.

Let us look into a few tips and tricks today. Please note, this blog is for the team who is already familiar with data migration cockpit and know the basics of tcodes LTMC & LTMOM.

If you want to have a brief overview of the above tcodes, please let me know in the comments section, I’ll publish one.

Today, we will review the below points:

Move Data migration project from system to system:

Either within the same environment (Development -> Quality -> Production) or to other project environments, we will have a need to move the existing data migration project. Especially, once we extend the existing objects and /or add new objects to the project, it is very essential to move the project to avoid rework.

There are 2 steps involved in this.

2. Export and Import the Data Migration Cockpit project. This step is very similar to old LSMW days. LTMOM mapping and all related things get copied to a local file.To export, in the source system client -> Open LTMC –> Click on Export Content

Upload the file downloaded (from source system client), from local machine.

Tip: Make sure to ‘synchronize structures’ and ‘Generate runtime object’ the data objects in every client. This can be done from tcode – LTMOM. Open the project -> data object and execute the 2 steps. Generation of data objects should be done in every client in the system.

Process records with same key multiple times:

The full blown change functionality is not supported yet with standard data objects. We can enhance or create new data objects using LTMOM to support that (we can discuss it further in the coming blogs).

There will be situations we want to process the same record again but, data migration stops us with an error. This functionality is a good sanity check. However, if we need to process the same record again, we need to clear the old record from table DMC_FM_RESTART_K

There is a standard report from SAP supports the deletion of old records for the data migration object. Launch SE38 and execute report DMC_RESET_FM_RESTART_TABLE

Tip: If you are building the custom data objects, please make sure you build the similar functionality (not allowing duplicates until explicitly one tries it). This comes very handy while doing audit. Just letting the system update any and every record sabotages the purpose of these checks built in.

Performance issues:

There are many performance concerns in using data migration cockpit. A few tricks can help to improve the performance.

If we get the memory errors like the one below, clearing the logs help.

No more memory available to add rows to an internal table.

Go to the tile ‘Application log – Delete Expired Log’ or tcode SLG2. Provide the relevant input and delete the logs which are not needed. If there is a log exist for the project, System tries to read through it and it hinders the performance. Clearing logs helps to resolve that issue.

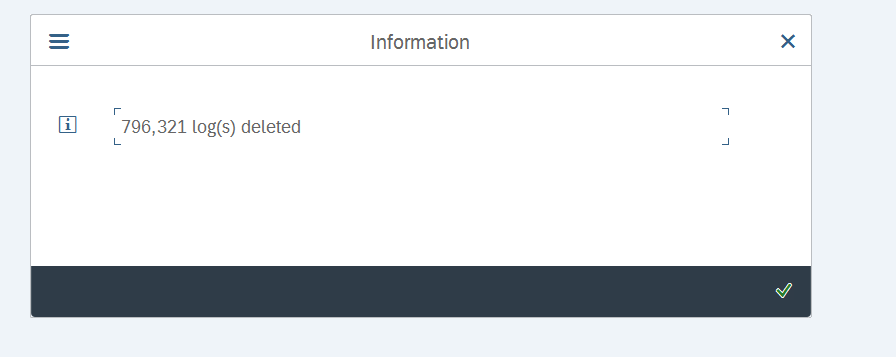

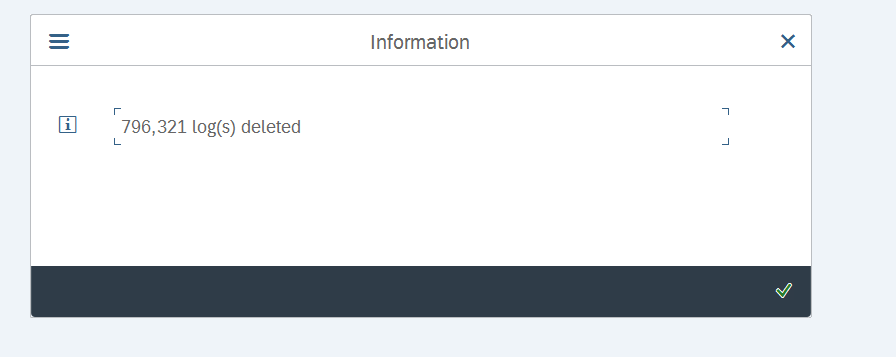

Once we execute the deletion of expired logs, it generates a back ground program to delete the logs.

While working with files, the default size limit for each uploaded XML file is 100MB, but it depends on parameter icm/HTTP/max_request_size_KB, which controls the size of the http request. You can increase the size limit for each uploaded XML file by changing the system parameter (icm/HTTP/max_request_size_KB).

However, it is very much possible that, even after increasing the file size parameters, the timeouts may happen. At that point, it is required to adjust the Timeout Options for ICM and Web Dispatcher accordingly to avoid timeouts during the file upload.

Please review the SAP Note – 2719524 for more information.

Working with staging tables can be one solution for file size issues. That is the main purpose of staging tables.

However, the processing time issue is the same. The processing times with data migration cockpit are slightly higher than old direct input methods supported by LSMW. There are various reasons for it. The solution working better at this point is, slicing the input file into multiple files and clone the data object to use multiple background processes with those small files.

This step needs to be balanced out always, with the number of background processes available and how many users consuming them at any given point.

In further blog posts let us discuss more tips and tricks of data migration cockpit. Please refer to the note 2537549 to learn, new happenings on Data Migration Cockpit.

Let us look at a few tips and tricks today. Please note that this blog is for readers who are already familiar with the data migration cockpit and know the basics of tcodes LTMC and LTMOM.

If you would like a brief overview of these tcodes, please let me know in the comments section and I’ll publish one.

Today, we will review the following points:

- How to move the Data migration cockpit project from system to system

- How to process the records with the same key again

- How to overcome the performance issues

Move Data migration project from system to system:

Whether within the same environment (Development -> Quality -> Production) or across other project environments, we will need to move an existing data migration project. Especially once we extend existing objects and/or add new objects to the project, it is essential to move the project to avoid rework.

There are 2 steps involved in this.

1. When we extend objects and change their structures, the system creates a workbench transport. The same goes for creating new objects and adding new source code. Move the workbench requests for structure changes and source code changes just like any other workbench transport.

2. Export and import the Data Migration Cockpit project. This step is very similar to the old LSMW days: the LTMOM mapping and all related settings get copied to a local file. To export, in the source system client -> open LTMC -> click on Export Content.

To import, in the target system client, upload the file downloaded from the source system client from the local machine.

Tip: Make sure to run ‘Synchronize Structures’ and ‘Generate Runtime Object’ for the data objects in every client. This can be done from tcode LTMOM: open the project -> data object and execute the 2 steps. Generation of data objects must be done in every client in the system.

Process records with same key multiple times:

Full-blown change functionality is not yet supported with standard data objects. We can enhance existing data objects or create new ones using LTMOM to support it (we can discuss this further in coming blogs).

There will be situations where we want to process the same record again, but data migration stops us with an error. This behavior is a good sanity check. However, if we do need to process the same record again, we need to clear the old record from table DMC_FM_RESTART_K.

SAP provides a standard report that deletes the old records for a data migration object. Launch SE38 and execute report DMC_RESET_FM_RESTART_TABLE.

Tip: If you are building custom data objects, please make sure you build similar functionality (not allowing duplicates unless someone explicitly chooses to reprocess a record). This comes in very handy during audits. Simply letting the system update any and every record defeats the purpose of these built-in checks.
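To make the idea behind this tip concrete, here is a minimal conceptual sketch in Python. It is not ABAP and not how SAP implements the restart table; the class `RestartKeyRegistry` and its methods are hypothetical names used only for illustration. It shows the pattern: a key is processed once, a second attempt is rejected, and reprocessing works only after an explicit reset.

```python
class RestartKeyRegistry:
    """Hypothetical stand-in for a restart-key table: remembers processed keys."""

    def __init__(self):
        self._processed = set()

    def process(self, key, handler):
        # Reject a key that was already processed, mirroring the sanity check.
        if key in self._processed:
            raise ValueError(
                f"Record {key!r} was already processed; reset it explicitly to reprocess"
            )
        result = handler(key)
        # Mark the key as processed only after the handler succeeded.
        self._processed.add(key)
        return result

    def reset(self, key):
        # Explicit, deliberate step that allows the key to be processed again.
        self._processed.discard(key)


if __name__ == "__main__":
    registry = RestartKeyRegistry()
    print(registry.process("MAT-001", lambda k: f"created {k}"))
    try:
        registry.process("MAT-001", lambda k: f"created {k}")
    except ValueError as err:
        print(err)
    registry.reset("MAT-001")
    print(registry.process("MAT-001", lambda k: f"updated {k}"))
```

Keeping the reset as a separate, explicit call is the point of the design: every reprocessing leaves a deliberate trace that can be reviewed during an audit.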

Performance issues:

There are several performance concerns when using the data migration cockpit. A few tricks can help to improve performance.

- Memory Issues

If we get memory errors like the one below, clearing the logs helps.

No more memory available to add rows to an internal table.

Go to the tile ‘Application log – Delete Expired Log’ or tcode SLG2. Provide the relevant input and delete the logs that are no longer needed. If a log exists for the project, the system tries to read through it, which hinders performance. Clearing the logs resolves that issue.

Once we execute the deletion of expired logs, the system starts a background program that deletes the logs.

- File size

While working with files, the default size limit for each uploaded XML file is 100 MB. This limit depends on the parameter icm/HTTP/max_request_size_KB, which controls the size of the HTTP request. You can raise the size limit for each uploaded XML file by increasing this system parameter.

However, timeouts may still occur even after the file size parameter is increased. In that case, adjust the timeout options for ICM and Web Dispatcher accordingly to avoid timeouts during the file upload.

Please review SAP Note 2719524 for more information.

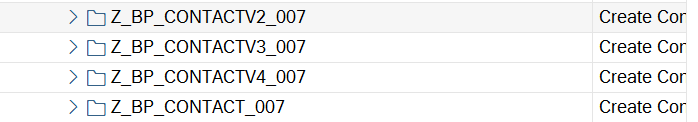

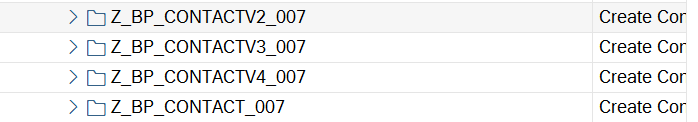
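As a rough aid for sizing icm/HTTP/max_request_size_KB, the following Python sketch converts a file size in bytes into a candidate value in KB. The helper name `required_max_request_size_kb` and the 10 percent headroom are assumptions made for illustration, not SAP recommendations; the 100 MB default is the one stated above. Always confirm the final value with your Basis team and SAP Note 2719524.

```python
DEFAULT_LIMIT_KB = 100 * 1024  # 100 MB default mentioned in this blog, in KB


def required_max_request_size_kb(file_size_bytes, headroom_percent=10):
    """Return a candidate value for icm/HTTP/max_request_size_KB.

    headroom_percent is an assumed safety margin for HTTP overhead.
    """
    numerator = file_size_bytes * (100 + headroom_percent)
    denominator = 100 * 1024
    # Integer ceiling division avoids floating-point rounding surprises.
    needed_kb = -(-numerator // denominator)
    # Never suggest a value below the default limit.
    return max(needed_kb, DEFAULT_LIMIT_KB)


if __name__ == "__main__":
    file_size = 250 * 1024 * 1024  # a hypothetical 250 MB XML file
    print("icm/HTTP/max_request_size_KB =", required_max_request_size_kb(file_size))
```

A larger request limit also means longer uploads, which is why the timeout options for ICM and Web Dispatcher usually need to be reviewed at the same time.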

- Cloning the data object

Working with staging tables can be one solution to file size issues; that is the main purpose of staging tables.

However, the processing time issue remains. Processing times with the data migration cockpit are slightly higher than with the old direct input methods supported by LSMW, for various reasons. The approach that works best at this point is to slice the input file into multiple smaller files and clone the data object, so that multiple background processes can work on those smaller files in parallel.

This always needs to be balanced against the number of background processes available and how many users are consuming them at any given point.
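The slicing and balancing described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names, the number of processes reserved for other users, and the minimum slice size are all hypothetical, and the records are a plain Python list. A real cockpit XML template has header rows and structure that must be preserved in every slice, which this sketch does not handle.

```python
def plan_slice_count(free_background_processes, reserved_for_others,
                     record_count, min_records_per_slice=1000):
    """Pick how many slices (and cloned data objects) to use.

    Leaves some background processes for other users and avoids
    creating slices that are too small to be worth a separate run.
    """
    usable = max(1, free_background_processes - reserved_for_others)
    by_volume = max(1, record_count // min_records_per_slice)
    return min(usable, by_volume)


def slice_records(records, slice_count):
    """Split records into slice_count contiguous chunks of near-equal size."""
    base, extra = divmod(len(records), slice_count)
    slices = []
    start = 0
    for i in range(slice_count):
        # The first `extra` slices each take one additional record.
        end = start + base + (1 if i < extra else 0)
        slices.append(records[start:end])
        start = end
    return slices


if __name__ == "__main__":
    records = [f"REC-{n:05d}" for n in range(50000)]
    count = plan_slice_count(free_background_processes=8,
                             reserved_for_others=3,
                             record_count=len(records))
    for index, chunk in enumerate(slice_records(records, count), start=1):
        print(f"slice {index}: {len(chunk)} records")
```

Each slice would then be loaded through its own cloned data object, so the slice count is effectively the number of parallel background processes the migration consumes.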

In further blog posts, let us discuss more tips and tricks for the data migration cockpit. Please refer to SAP Note 2537549 to learn about new developments in the Data Migration Cockpit.
