- Smart Data Integration: HanaAdapter from HANA Expr...

lsubatin


06-14-2019

5:58 PM

If I'm doing something for the first time and I know I will need to replicate it multiple times, I tend to take screenshots and notes for my future self. Since I got this question a couple of times in the last few weeks, I'm publishing those notes.

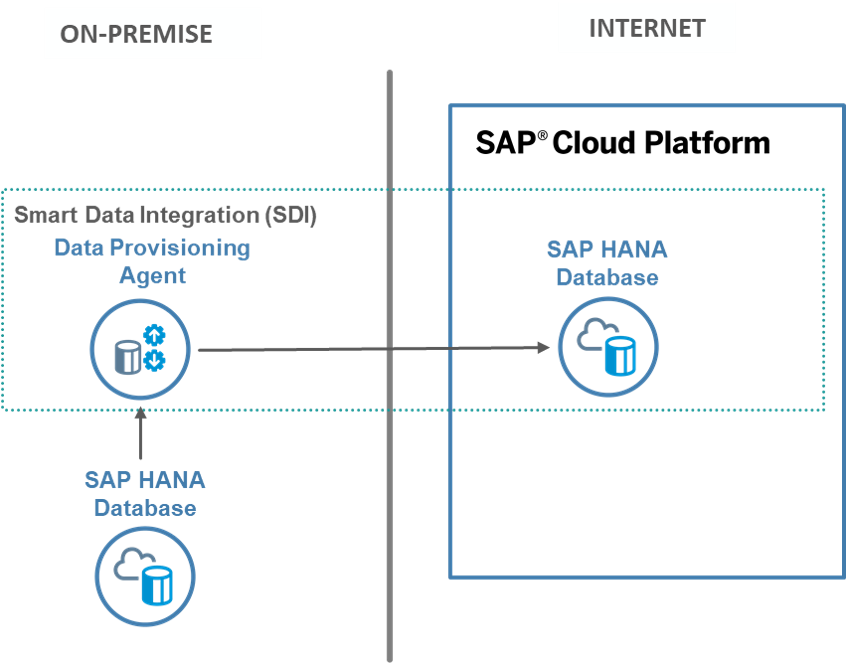

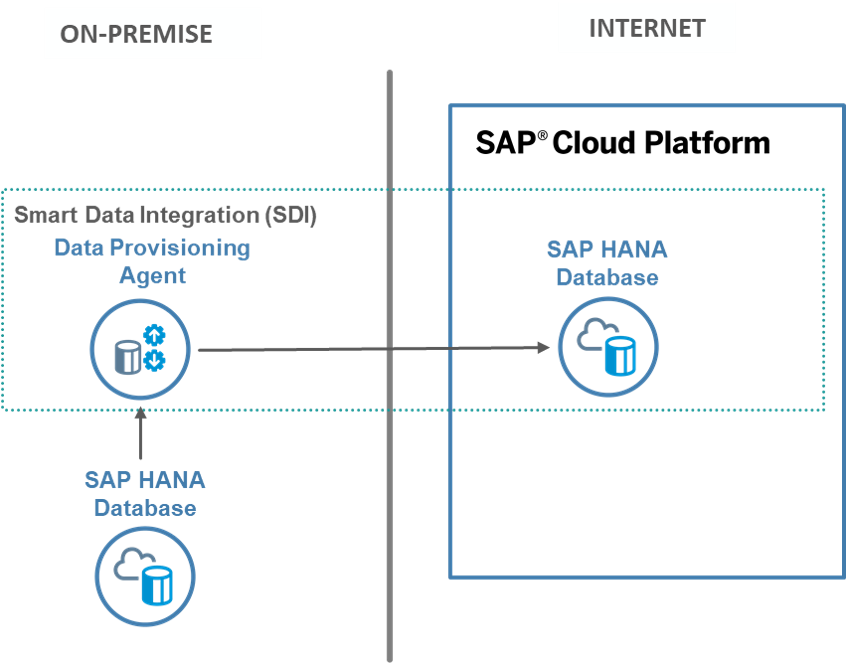

This post is about setting up Smart Data Integration from an on-premise SAP HANA instance (HANA Express in my case) to my tenant of SAP Cloud Platform, SAP HANA Service on Cloud Foundry.

(as usual, check your license agreements...)

I will not duplicate information that I have already published here: https://developers.sap.com/group.haas-dm-sdi.html

I am using the FileAdapter there, I will use the HanaAdapter here so I will only cover the differences.

You will still need to generate the cross-container access, a user for the connection and a remote source. If you are struggling to understand these concepts and how the wiring works, you are not alone: here is a post with some drawings that hopefully helps.

I got it from the Software Downloads but you can also get it from the SAP Development Tools. Just make sure you have version 2.3.5.2 or higher so you can connect through Web Sockets.

At the time of writing this blog post, the version of the SDI adapter in HXE's download manager was not high enough, but that would have been the best option:

You may already have it in your /Downloads folder in HXE too.

If your file ends with extension ".SAR" or ".CAR", you will need to use sapcar to inflate the file first. Else, good old tar -xvzf on your HXE machine command will do.

The DPagent will ask for two ports to listen. If you are running in the cloud like me, make sure you open those ports. The defaults are 5050 and 5051.

My on-premise SAP HANA, express edition instance lives on Google Cloud, so I uploaded the inflated dpagent file first and these commands did the trick:

.

.

I hit enter on everything as I wanted the default directory and ports, but of course you can change these.

Note the installation path is /usr/sap/dataprovagent .

Set the environment variables first and navigate into the directory where you installed DPAgent:

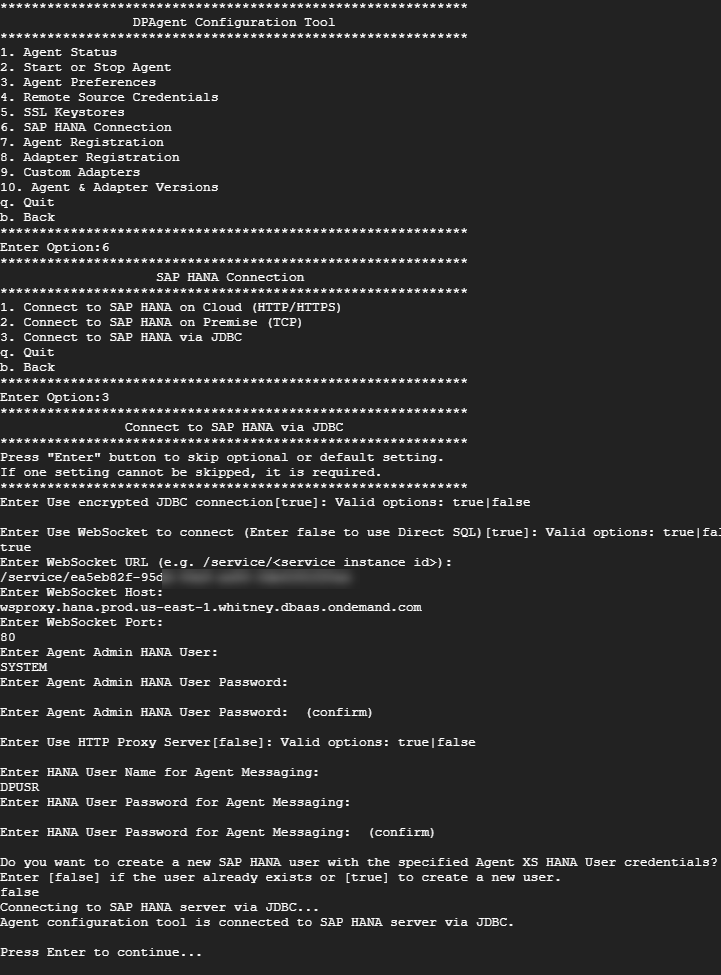

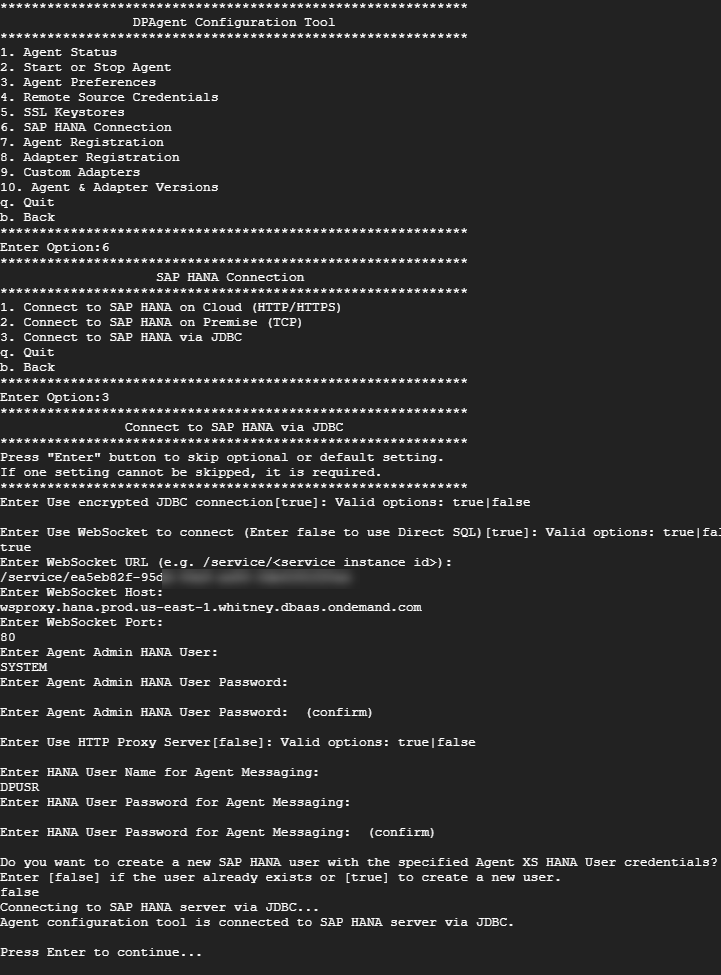

And start the configuration of the Data Provisioning Agent

Go with option 2 first, Start or Stop Agent:

Don't forget! You will need to have whitelisted the public IP address of your source machine for access to your HANA service.

Go back to the main menu, choose 5. SAP HANA Connection to log in to your SAP HANA Service:

You will need your instance ID and the websocket endpoint. You get these from the Cockpit of your database instance:

Once you have connected successfully, register the agent with option 7. Agent Registration

You should now see the agent in the Database Explorer in HANA as a Service

Finally, register the HanaAdapter with 8. Adapter Registration:

You will need a user with the right access in the source database (HANA Express in my case). Something like:

I also want to replicate data from HDI containers, so I open the SQL console as Admin:

And run something like:

Some notes:

Finally, on the database explorer connected to you SAP HANA Service on Cloud Foundry, right-click on Remote Sources --> Add Remote Source:

You will see the agent and adapter you registered before.

Enter the host of the source machine (in my case, the public static IP address of my HXE machine.

Enter the port number where the indexserver of your source tenant database is listening (39015 for the default first tenant on HXE, 39013 for the SYSTEMDB which you should not be using anyways ).

If you don't know the port for your database, querying the table M_SERVICES will tell you the SQL_PORT of your indexserver.

Set the credentials to Technical User and enter the user and password for the user you created in your source database:

And you are ready to roll!

If you want to consume the remote source you have just created in Replication Tasks, Flowgraph and Virtual Tables from an HDI container in Web IDE, follow the steps in this tutorial group: https://developers.sap.com/group.haas-dm-sdi.html

Was this useful? Hit that "Like" button and remember to keep encouraging other community members who go out of their way to share their knowledge.

This post is about setting up Smart Data Integration from an on-premise SAP HANA instance (HANA Express in my case) to my tenant of SAP Cloud Platform, SAP HANA Service on Cloud Foundry.

(as usual, check your license agreements...)

Check the existing tutorials first

I will not duplicate information that I have already published here: https://developers.sap.com/group.haas-dm-sdi.html

I use the FileAdapter there and the HanaAdapter here, so I will only cover the differences.

You will still need to generate the cross-container access, a user for the connection and a remote source. If you are struggling to understand these concepts and how the wiring works, you are not alone: here is a post with some drawings that hopefully helps.

Prereqs:

- You have an instance of SAP HANA Service in Cloud Foundry (the trial HDI containers will unfortunately not work... this makes me sad too...)

- You have access to the cockpit for your SAP HANA Service

- You have a HANA instance that can be accessed from the outside world

- You have whitelisted the IP address of your HANA express or on-premise instance

- You have admin rights to create users in both HANA instances

Download the Data Provisioning Agent

I got the agent from the Software Downloads, but you can also get it from the SAP Development Tools. Just make sure you have version 2.3.5.2 or higher so you can connect through WebSockets.
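If you want to script the version check, here is a minimal sketch. It assumes you already know the version string of the package you downloaded (how you read it is up to you), and it relies on `sort -V` from GNU coreutils. The function name `version_ge` and the sample versions in the loop are made up for illustration.

```shell
# Return success if version $1 is greater than or equal to version $2.
# Relies on "sort -V" (version sort) from GNU coreutils.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Minimum version mentioned in this post for WebSocket connections.
MIN_VERSION=2.3.5.2

# Hypothetical version strings, only to show the comparison.
for v in 2.3.4.0 2.3.5.2 2.3.10.1; do
    if version_ge "$v" "$MIN_VERSION"; then
        echo "$v: new enough"
    else
        echo "$v: too old"
    fi
done
```

A version sort matters here because a plain string comparison would rank 2.3.10 below 2.3.5.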

At the time of writing this blog post, the version of the SDI adapter in HXE's download manager was not high enough, but that would have been the best option:

cd /usr/sap/HXE/home/bin

./HXEDownloadManager_linux.bin -X

You may already have it in your /Downloads folder in HXE too.

If your file ends with the extension ".SAR" or ".CAR", you will need to use SAPCAR to inflate the file first. Otherwise, good old tar -xvzf on your HXE machine will do.
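The choice between the two tools can be sketched as a small helper. This is only an illustration: the function name `extract_archive` is mine, it assumes `SAPCAR` is on your PATH for the .SAR/.CAR case, and it treats anything that is not .SAR, .CAR, .tar.gz or .tgz as unknown.

```shell
# Unpack a downloaded DP Agent archive into a target directory, choosing
# the tool from the file extension.
# Assumption: SAPCAR is on the PATH when the file is a .SAR or .CAR archive.
extract_archive() {
    archive=$1
    target=${2:-.}
    # Make the archive path absolute before changing directory.
    case $archive in
        /*) ;;
        *) archive=$PWD/$archive ;;
    esac
    mkdir -p "$target" || return 1
    case $archive in
        *.SAR|*.sar|*.CAR|*.car)
            (cd "$target" && SAPCAR -xvf "$archive") ;;
        *.tar.gz|*.tgz)
            (cd "$target" && tar -xvzf "$archive") ;;
        *)
            echo "Unrecognized archive type: $archive" >&2
            return 1 ;;
    esac
}

# Example call (file and folder names are placeholders):
# extract_archive ./dpagent_download.tar.gz /extracted_folder
```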

Check the ports

The DPAgent will ask for two ports to listen on. If you are running in the cloud like me, make sure you open those ports. The defaults are 5050 and 5051.

Install the Data Provisioning Agent

My on-premise SAP HANA, express edition instance lives on Google Cloud, so I uploaded the inflated dpagent file first and these commands did the trick:

sudo su - hxeadm

cd /extracted_folder/HANA_DP_AGENT_20_LIN_X86_64

./hdbinst

I hit Enter on everything as I wanted the default directory and ports, but of course you can change these.

Note the installation path is /usr/sap/dataprovagent.

Configure the Data Provisioning Agent

Set the environment variables first and navigate into the directory where you installed DPAgent:

export DPA_INSTANCE=/usr/sap/dataprovagent

cd $DPA_INSTANCE

cd bin
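Before starting the configuration tool, a quick sanity check can confirm that the directory really contains the agent. This is a sketch, not part of the official procedure: the helper name `dpa_home_ok` is invented, and the default path is the one from my installation above.

```shell
# Check that a directory looks like a DP Agent installation by testing
# for an executable bin/agentcli.sh inside it.
dpa_home_ok() {
    [ -x "$1/bin/agentcli.sh" ]
}

# Fall back to the default installation path if DPA_INSTANCE is not set.
DPA_INSTANCE=${DPA_INSTANCE:-/usr/sap/dataprovagent}

if dpa_home_ok "$DPA_INSTANCE"; then
    echo "Agent found in $DPA_INSTANCE"
else
    echo "No agentcli.sh under $DPA_INSTANCE/bin; check the installation path" >&2
fi
```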

And start the configuration of the Data Provisioning Agent

./agentcli.sh --configAgent

Go with option 2 first, Start or Stop Agent:

Don't forget! You will need to have whitelisted the public IP address of your source machine for access to your HANA service.

Go back to the main menu, choose 5. SAP HANA Connection to log in to your SAP HANA Service:

You will need your instance ID and the websocket endpoint. You get these from the Cockpit of your database instance:

Once you have connected successfully, register the agent with option 7. Agent Registration:

You should now see the agent in the Database Explorer in HANA as a Service.

Finally, register the HanaAdapter with 8. Adapter Registration:

Create a user in the source database

You will need a user with the right access in the source database (HANA Express in my case). Something like:

create user remousr password P455w0Rd NO FORCE_FIRST_PASSWORD_CHANGE;

grant select, trigger on schema FOOD to REMOUSR;

I also want to replicate data from HDI containers, so I open the SQL console as Admin:

And run something like:

set schema "ANALYTICS_1#DI";

CREATE LOCAL TEMPORARY TABLE #PRIVILEGES LIKE _SYS_DI.TT_SCHEMA_PRIVILEGES;

INSERT INTO #PRIVILEGES (PRIVILEGE_NAME, PRINCIPAL_SCHEMA_NAME, PRINCIPAL_NAME) values ('SELECT','','REMOUSR');

CALL "ANALYTICS_1#DI".GRANT_CONTAINER_SCHEMA_PRIVILEGES(#PRIVILEGES, _SYS_DI.T_NO_PARAMETERS, ?, ?, ?);

DROP TABLE #PRIVILEGES;

Some notes:

- ANALYTICS_1#DI: This comes from right-clicking a table in the HDI container, choosing "Generate SELECT statement" and appending "#DI" to the schema name.

- You can also grant roles, which is preferable. More information here.

Create a remote source

Finally, in the Database Explorer connected to your SAP HANA Service on Cloud Foundry, right-click on Remote Sources --> Add Remote Source:

You will see the agent and adapter you registered before.

Enter the host of the source machine (in my case, the public static IP address of my HXE machine).

Enter the port number where the indexserver of your source tenant database is listening (39015 for the default first tenant on HXE, 39013 for the SYSTEMDB, which you should not be using anyway).

If you don't know the port for your database, querying the M_SERVICES view will tell you the SQL_PORT of your indexserver.
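The two port numbers above follow the usual HANA pattern of 3, then the two-digit instance number, then a two-digit suffix (13 for the SYSTEMDB, 15 for the first tenant). HXE uses instance number 90 by default. Here is a small sketch of that arithmetic; the function name `hana_sql_port` is made up, and additional tenants get their ports assigned differently, so M_SERVICES remains the reliable source.

```shell
# Build a HANA SQL port from the two-digit instance number and the
# two-digit suffix: 3<instance><suffix>.
# Both arguments are expected as two-character strings, e.g. "90" and "15".
hana_sql_port() {
    printf '3%s%s\n' "$1" "$2"
}

hana_sql_port 90 15   # first tenant on a default HXE: prints 39015
hana_sql_port 90 13   # SYSTEMDB on a default HXE: prints 39013
```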

Set the credentials to Technical User and enter the user and password for the user you created in your source database:

And you are ready to roll!

If you want to consume the remote source you have just created in Replication Tasks, Flowgraph and Virtual Tables from an HDI container in Web IDE, follow the steps in this tutorial group: https://developers.sap.com/group.haas-dm-sdi.html

Was this useful? Hit that "Like" button and remember to keep encouraging other community members who go out of their way to share their knowledge.

- SAP Managed Tags:

- SAP HANA,

- SAP HANA smart data integration,

- SAP HANA, express edition,

- SAP HANA service for SAP BTP
