GLADIUS Is Getting Sharper

ennowulff

05-24-2019

5:43 PM

Introducing: GLADIUS - A Test Unit Framework

GLADIUS - The Next Level

My God, Amazing How We Got This Far - GLADIUS Secrets

The blog posts above are about GLADIUS, a unit test challenge and learning framework. In this series I evolve the framework step by step, both to help you understand how it works and to document the development process.

Even though the last post had a like-to-view ratio of only 0.003, I decided to continue this series because I don't like unfinished things.

With this fourth part of the series I have taken another big step towards a neat application. Unfortunately, I also ran into some new problems...

But let's start with the latest improvements.

Split Screen

The previous version of GLADIUS had a split frame for the source code: one part for the data declarations and one for the functionality itself. The reason for this fragmentation is easy to explain: the SAP demo report for evaluating source code did it this way... 😉

Splitting the code into declaration and implementation parts is somewhat outdated now that inline declarations are state of the art. Therefore I changed GLADIUS to use a single editor, as is common.

Saving The Solution

As the tests got harder and I had to experiment more inside the editor, I missed one really important function: saving the code. It is a very simple thing, and I don't know why I waited so long to implement it. But now it's there: the code can be saved for each user and task.

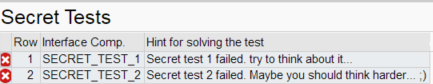

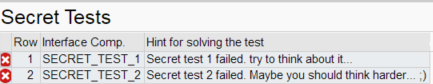


Secrets Revealed

The secret tests feature was exactly that: an implemented function, but without any helpful display of the results. I have now added a section that displays the results of the secret tests.

My plan is to display the results of the main unit tests in the same way. Currently, the main unit tests are shown in the standard SAP view for unit tests, which has three disadvantages for my use case:

- There is a lot of information I do not want to see.

- By clicking on the stack information in the analysis window, the user can jump to the failed unit test. The solution is then only another double-click away. That's too easy...

- If the results are simple structures, displaying a message is sufficient. For texts, strings or tables, however, there should be a better way to display the expected values and the computed results.
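To make the first two points more concrete, here is a minimal sketch of a reduced result display. It is written in Python rather than ABAP and is not taken from GLADIUS: each secret test reports only its name and a pass/fail status, while its input, its expected value and any stack information stay hidden, so there is nothing to double-click into. `SecretTest` and `run_secret_tests` are hypothetical names.

```python
from typing import Any, Callable, NamedTuple


class SecretTest(NamedTuple):
    name: str        # the only part the user gets to see
    args: tuple      # hidden input for the solution
    expected: Any    # hidden expected result


def run_secret_tests(solution: Callable[..., Any], tests: list) -> list:
    """Run hidden tests and report only name and pass/fail per test."""
    report = []
    passed = 0
    for test in tests:
        try:
            ok = solution(*test.args) == test.expected
        except Exception:
            ok = False  # a crashing solution simply fails; no stack trace is shown
        passed += ok
        report.append(f"{test.name}: {'passed' if ok else 'FAILED'}")
    report.append(f"{passed} of {len(tests)} secret tests passed")
    return report
```

The design choice is to hide details on purpose: the user learns *that* a secret test failed, but has to find out *why* on their own.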

Configuration Commander

Each task has a number of attributes that must be easy to configure. My idea is to have one object (whatever this object might look like) in which all of this configuration can be defined.

One interesting option is to define a whitelist and a blacklist that force the contestant to use only a specific set of commands.
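A whitelist/blacklist check could be sketched like this, again in Python and only as an illustration of the idea. As a deliberate simplification, it assumes that every statement ends with a period and that the first word is the statement keyword; comments, string literals containing periods and chained statements (`:`) are not handled. A real ABAP implementation would rather work on the tokens delivered by the ABAP scanner (for example the `SCAN ABAP-SOURCE` statement). The function names are hypothetical.

```python
import re


def statement_keywords(source: str) -> list:
    """Return the leading keyword of every statement (rough approximation).

    Simplifications: statements end with '.', comments, string literals
    containing '.', and chained statements (':') are not handled.
    """
    keywords = []
    for statement in source.split("."):
        words = statement.split()
        if not words:
            continue
        if len(words) > 1 and words[1] == "=":
            keywords.append("=")  # plain assignment, e.g. "rv_text = ..."
        else:
            match = re.match(r"[A-Za-z-]+", words[0])
            keywords.append(match.group(0).upper() if match else words[0])
    return keywords


def check_commands(source: str, whitelist=frozenset(), blacklist=frozenset()) -> list:
    """List violations; an empty whitelist means 'no restriction'."""
    violations = []
    for keyword in statement_keywords(source):
        if keyword in blacklist:
            violations.append(f"{keyword} is not allowed")
        elif whitelist and keyword not in whitelist:
            violations.append(f"{keyword} is not on the whitelist")
    return violations
```

Assignments are reported as `=` here, so a whitelist can explicitly allow or forbid them.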

A very important part is the description of the task. The user should know what to do. The description can give hints or provide examples.

Metrics

One thing I am very happy with is that I have now integrated metrics into the task. Metrics are attributes of the solution that are derived from the code itself:

- runtime

- number of used statements

- number of used tokens

- number of characters

This means that a task can define how many statements may be used, or challenge the user to use fewer than a certain number of characters.
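The four metrics could be collected roughly as in the following Python sketch. It counts statements as period-terminated pieces, tokens as whitespace-separated words and characters without whitespace; these definitions are my assumptions, not necessarily how GLADIUS counts them. `collect_metrics` and `failed_metrics` are hypothetical helpers, and the `limits` dictionary plays the role of the task configuration.

```python
import time
from typing import Callable


def collect_metrics(source: str, run: Callable[[], object]) -> dict:
    """Collect the four metrics for a solution (simplified definitions)."""
    statements = [s for s in source.split(".") if s.strip()]
    tokens = source.replace(".", " ").split()
    characters = sum(1 for c in source if not c.isspace())
    start = time.perf_counter()
    run()  # execute the solution once to measure its runtime
    runtime = time.perf_counter() - start
    return {
        "runtime": runtime,
        "statements": len(statements),
        "tokens": len(tokens),
        "characters": characters,
    }


def failed_metrics(metrics: dict, limits: dict) -> list:
    """Names of all metrics that exceed the limit defined for the task."""
    return sorted(name for name, limit in limits.items() if metrics[name] > limit)
```

With a limit of one statement, a solution such as `DATA(x) = 1. rv = x.` has two statements and therefore ends up in the list of failed metrics.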

Example

As an example of how to use metrics, how to challenge someone and how to teach new ABAP statements, I chose the following task, for which I prepared the unit tests and the configuration:

Your task is to convert the given ranges table into a string.

If the SIGN is "I" the text has to be "including".

If the SIGN is "E" the text has to be "excluding".

If the parameter HIGH is not empty, you have to separate LOW and HIGH by "to".

EXAMPLE: SIGN: I, OPTION: BT, LOW: 20000504, HIGH: 20010607

RESULT: including 04.05.2000 to 07.06.2001
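For reference, here is one possible way to express the required conversion, sketched in Python instead of ABAP so that it can be run anywhere. `RangeLine` is a hypothetical stand-in for one line of a ranges table with SIGN, OPTION, LOW and HIGH. Treating both an empty string and the initial date `00000000` as "empty" is my assumption, and the lines are joined with CR LF as discussed under the encountered problems. According to the task description, OPTION does not influence the text.

```python
from dataclasses import dataclass
from datetime import datetime

SIGN_TEXT = {"I": "including", "E": "excluding"}


@dataclass
class RangeLine:
    sign: str       # "I" or "E"
    option: str     # e.g. "EQ" or "BT"; not used for the text
    low: str        # date in internal format YYYYMMDD
    high: str = ""  # empty if no upper bound is given


def format_date(yyyymmdd: str) -> str:
    """Convert YYYYMMDD into DD.MM.YYYY."""
    return datetime.strptime(yyyymmdd, "%Y%m%d").strftime("%d.%m.%Y")


def range_to_string(lines: list) -> str:
    texts = []
    for line in lines:
        # an unknown SIGN raises a KeyError instead of producing a wrong text
        text = f"{SIGN_TEXT[line.sign]} {format_date(line.low)}"
        if line.high not in ("", "00000000"):  # HIGH filled: "LOW to HIGH"
            text += f" to {format_date(line.high)}"
        texts.append(text)
    return "\r\n".join(texts)  # one line per range entry, separated by CR LF
```

Called with the single line from the example (`RangeLine("I", "BT", "20000504", "20010607")`), the sketch returns the expected result `including 04.05.2000 to 07.06.2001`.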

This scenario is quite common: a ranges table that has to be turned into a string.

I also retrieve the signature of the tested method to show the user what goes in and what should come out.

One solution could look like the one I prepared for this screenshot:

Did you notice the red circle in the metrics window? It indicates that a metric failed: I defined in the configuration class that only one statement may be used... 😄

Encountered Problems

When one door closes, another one opens. Solve one problem, and seven new bugs appear...

CL_ABAP_CHAR_UTILITIES=>CR_LF

For this demonstration task I decided that the output must be a single text string. To keep the text readable, it has to be split into lines, which is done with carriage return and line feed characters. These are available as the constant cl_abap_char_utilities=>cr_lf. Unfortunately, I had blacklisted "=>" to prevent the user from calling any "dangerous" static methods. So I will have to spend some time allowing this kind of usage while still banning method calls.
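The rule I am looking for could look roughly like this: accept `=>` when it is followed by a plain attribute or constant, but reject it when it is followed by a method name and an opening parenthesis, and reject `CALL METHOD` as well, because that form works without parentheses. This is a regex-based Python sketch under simplifying assumptions (string literals and comments are not excluded, dynamic calls are not covered); `forbidden_static_calls` is a hypothetical name.

```python
import re

# "=>" followed by a name and "(" is a functional static method call, e.g. cl_x=>run( )
STATIC_METHOD_CALL = re.compile(r"=>\s*[A-Za-z_]\w*\(")
# CALL METHOD cl_x=>run. works without parentheses, so it is checked separately
CALL_METHOD = re.compile(r"\bCALL\s+METHOD\b", re.IGNORECASE)


def forbidden_static_calls(source: str) -> list:
    """Return offending snippets; static attribute access like cl=>const is allowed."""
    hits = [m.group(0) for m in STATIC_METHOD_CALL.finditer(source)]
    hits += [m.group(0) for m in CALL_METHOD.finditer(source)]
    return hits
```

With this rule, `cl_abap_char_utilities=>cr_lf` passes, while something like `cl_demo_output=>display( rv )` is reported.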

Character Issues

Comparing strings is easy, and CL_ABAP_UNIT_ASSERT=>ASSERT_EQUALS handles it quite well. What is not so good is the presentation of unequal values: the unit test framework only shows something like [**] or [ * ]. That does not help the user understand what is wrong with the code. There should be a more elegant way to display the differing values.

This becomes even more important when the test method expects tables. I am not sure yet whether I can get at the differing values somehow...

Maybe Klaus, the Terrible, can help? It seems he wrote a huge part of the test framework... 😄
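In the meantime, a more helpful display could work like the following Python sketch, which is not based on ABAP Unit internals. For strings it reports the first differing position and shows the surrounding text with `repr()`, so that invisible characters such as CR LF become visible. For tables it compares row by row. Note that this is a simple position-by-position comparison, not a real diff algorithm, so a single inserted row makes all following rows appear different. The function names are hypothetical.

```python
def first_difference(expected: str, actual: str):
    """Index of the first differing character, or None if both are equal."""
    for i, (e, a) in enumerate(zip(expected, actual)):
        if e != a:
            return i
    if len(expected) != len(actual):
        return min(len(expected), len(actual))  # one string is a prefix of the other
    return None


def describe_string_diff(expected: str, actual: str, context: int = 10) -> str:
    pos = first_difference(expected, actual)
    if pos is None:
        return "values are equal"
    start = max(0, pos - context)
    # repr() shows invisible characters such as \r\n explicitly
    exp = repr(expected[start:pos + context])
    act = repr(actual[start:pos + context])
    return f"first difference at position {pos}:\n  expected {exp}\n  actual   {act}"


def describe_table_diff(expected_rows: list, actual_rows: list) -> list:
    """Row-by-row comparison (no alignment of inserted or deleted rows)."""
    messages = []
    for i in range(max(len(expected_rows), len(actual_rows))):
        if i >= len(actual_rows):
            messages.append(f"row {i + 1}: expected {expected_rows[i]!r}, but it is missing")
        elif i >= len(expected_rows):
            messages.append(f"row {i + 1}: unexpected extra row {actual_rows[i]!r}")
        elif expected_rows[i] != actual_rows[i]:
            messages.append(f"row {i + 1}: expected {expected_rows[i]!r}, actual {actual_rows[i]!r}")
    return messages
```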

Configuration

I learned that a task object needs more and more attributes: a description (it would be great to have HTML instead of plain text), a blacklist and a whitelist, the interface used, unit tests, secret tests and metrics, and I am sure there is more to come.

I would like to have all these parameters in one object. I do not want to have the description text in one place and the signature in another. In the end, I think there will be one database entry per task, so that I don't have to bother with repository objects.
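Such a single task object could be sketched as one record that bundles all attributes and can be serialized into one database entry. The field names and the JSON serialization below are assumptions chosen for illustration in Python; they are not GLADIUS's actual data model.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TaskConfig:
    task_id: str
    description: str                  # plain text for now, HTML would be nicer
    signature: str                    # shown to the user: what goes in and out
    whitelist: list = field(default_factory=list)
    blacklist: list = field(default_factory=list)
    unit_tests: str = ""              # hypothetical reference to the visible unit tests
    secret_tests: str = ""            # hypothetical reference to the secret tests
    metric_limits: dict = field(default_factory=dict)  # e.g. {"statements": 1}

    def to_record(self) -> str:
        """Serialize the whole task into one string, e.g. for one database row."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_record(cls, record: str) -> "TaskConfig":
        return cls(**json.loads(record))
```

Keeping everything in one record means a task can be created, copied or deleted as a unit, without separate repository objects for its description or signature.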

Complexity

GLADIUS is getting more and more complex. By now it is once again really hard to understand all the parts and to remember how things work. Before adding more enhancements, I fear I will have to completely refactor the code and its elements.

Code Sharing

All the code is on GitHub. Unfortunately, we have some configuration trouble with our internal SAP system, so I currently cannot reach GitHub to update the code. I will do so as soon as possible.

Conclusion

GLADIUS has reached a level where I think it is only a few steps away from being usable by you. The application now looks roughly the way I once imagined it. All main parts are there and only need to be refactored and pimped up a bit.

The administration and maintenance of tasks and challenges will come next. This is where we actually started before crisscrossing between all the parts. I hope the ingredients will become clearer and better defined so that I can administer them more easily.

If you want to see more screenshots of Klaus, the Terrible, or learn more about what GLADIUS is about, have a look at my presentation from the last SAP Inside Track in Hanover.

to be continued...

