SAP Data Hub 2.5 fresh Installation on Google Clou...


former_member29


04-24-2019

8:20 AM

Summary

In this document we demonstrate, step by step, how to install the SAP Data Hub 2.5.60 beta version on Google Cloud Platform. Starting from scratch, we create a virtual machine instance, walk through the pre-installation tasks on the jump box, create a Kubernetes cluster, install SAP Data Hub Foundation, and apply the post-installation configuration. As the final step, we validate the Data Hub installation to make sure everything works.

Prerequisite: You need a GCP account with payment set up. A free trial account will not work.

SAP Data Hub 2.5.60

Jump Box OS: Red Hat Enterprise Linux for SAP with update services 7.4

Document Version: 1.1

Apr.18.2019

Author: James Yao

SAP DBS CoE - EIM Team

1 SAP Data Hub 2.5 Overview

SAP Data Hub is a fundamental technology in the SAP strategy and is part of the digital platform. The whole SAP product portfolio will be connected to get ready for the Intelligent Enterprise. SAP Data Hub supports the Intelligent Enterprise as a key connector and processing layer to build new applications. It provides data orchestration and metadata management across heterogeneous data sources.

SAP Data Hub is fully containerized: flow-based applications are built and deployed as Docker containers, and Kubernetes orchestrates those containers.

Simplified deployment of SAP Data Hub in cloud environments and on-premise:

- All necessary components are fully containerized and delivered as a Docker image, including SAP HANA

- Decoupling of data processing (in Kubernetes) and data storage (any supported cloud store)

- Deployment in multiple Kubernetes managed environments

  - Leveraging managed cloud Kubernetes services in AWS, Microsoft Azure, Google Cloud Platform

  - Support for private cloud and on-premise installations

Installation Overview

▪ Preparing for installation:

− Configure Jump Box

− Configure Kubernetes

− Configure proxy settings

− Set up (cloud) storage

− Set up provider-specific settings

▪ Installation of SAP Data Hub

− Use install.sh via command line

− Manually install SAP Data Hub

▪ Post Installation activities

➔ Installation Guide: https://help.sap.com/viewer/e66c399612e84a83a8abe97c0eeb443a/2.4.latest/en-US/0400621a63a348ab904972...

Prerequisites for installing SAP Data Hub 2: https://launchpad.support.sap.com/#/notes/2686169

2 Pre-installation

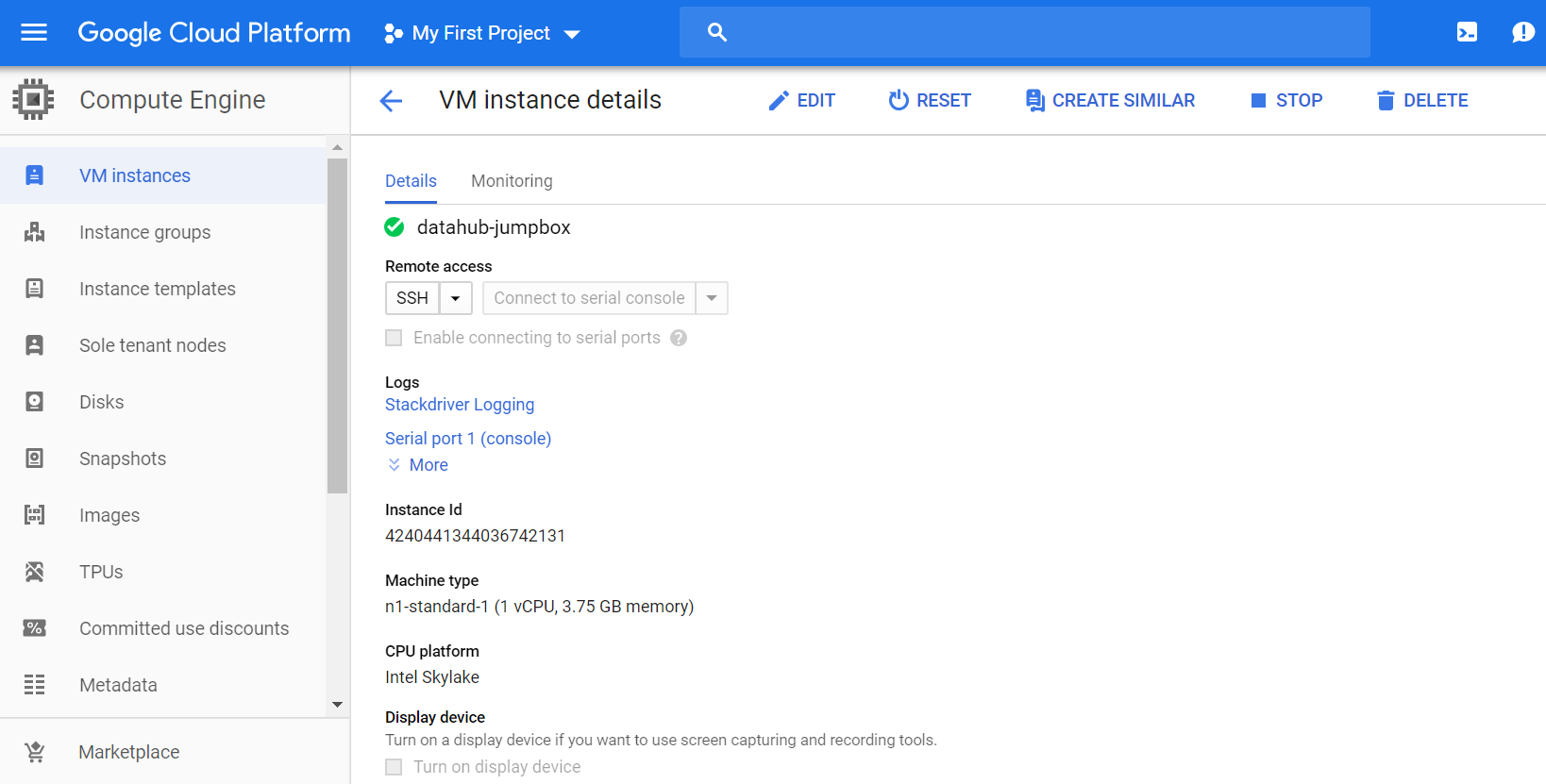

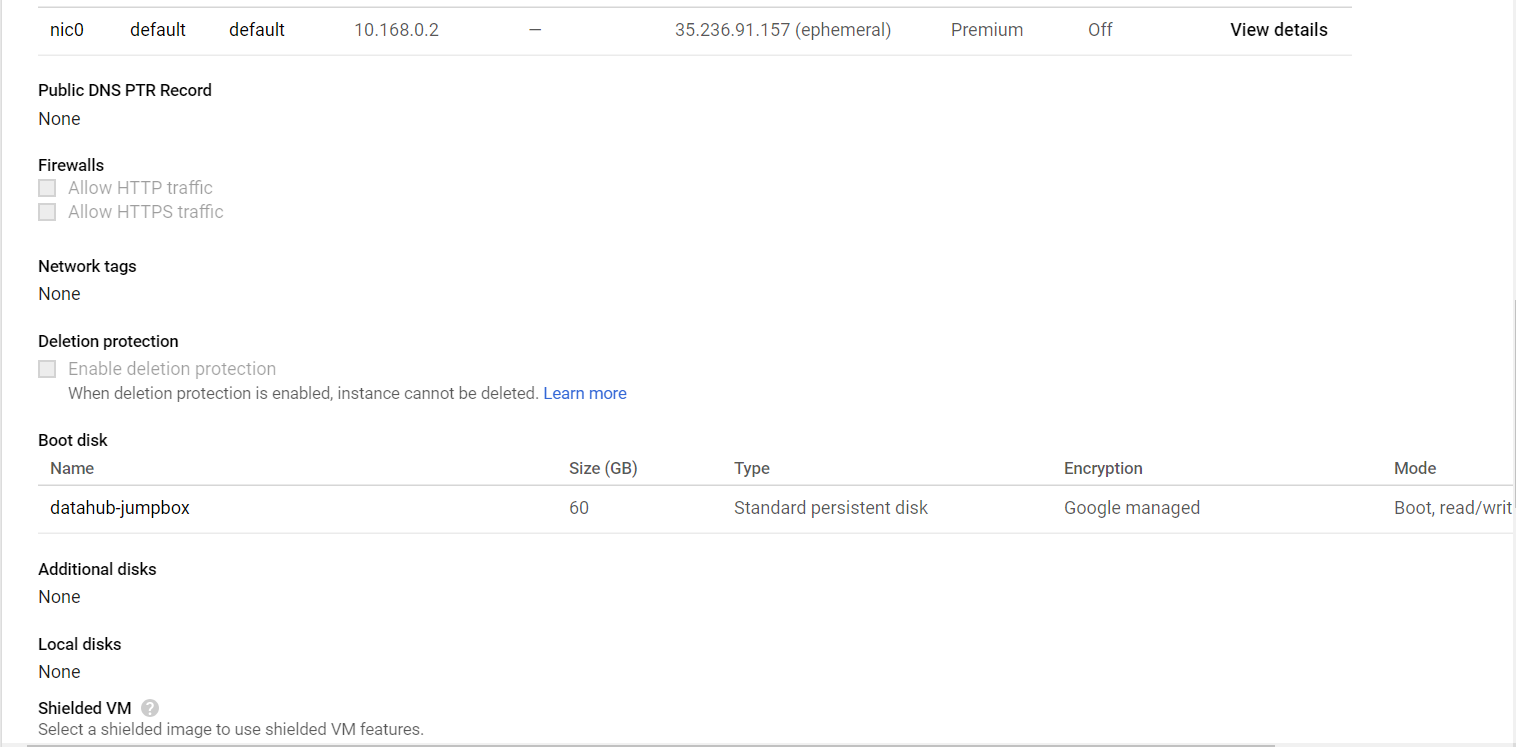

2.1 GCP --> Create Jump box

Select the operating system: Red Hat Enterprise Linux for SAP with update services 7.4

Compared to SUSE, RHEL lacks some prerequisite tools, which need to be installed manually, but it is cheaper. In this document we use RHEL as the example; SUSE is very similar and even easier.

Wait for GCP to start the instance, then connect to the system via the console.

Set a root password (Welcome1 in this example) when prompted, then switch to root:

sudo passwd root

su root

2.2 Get the prerequisite tools for Data Hub installation

2.2.1 Install wget with yum

yum -y install wget

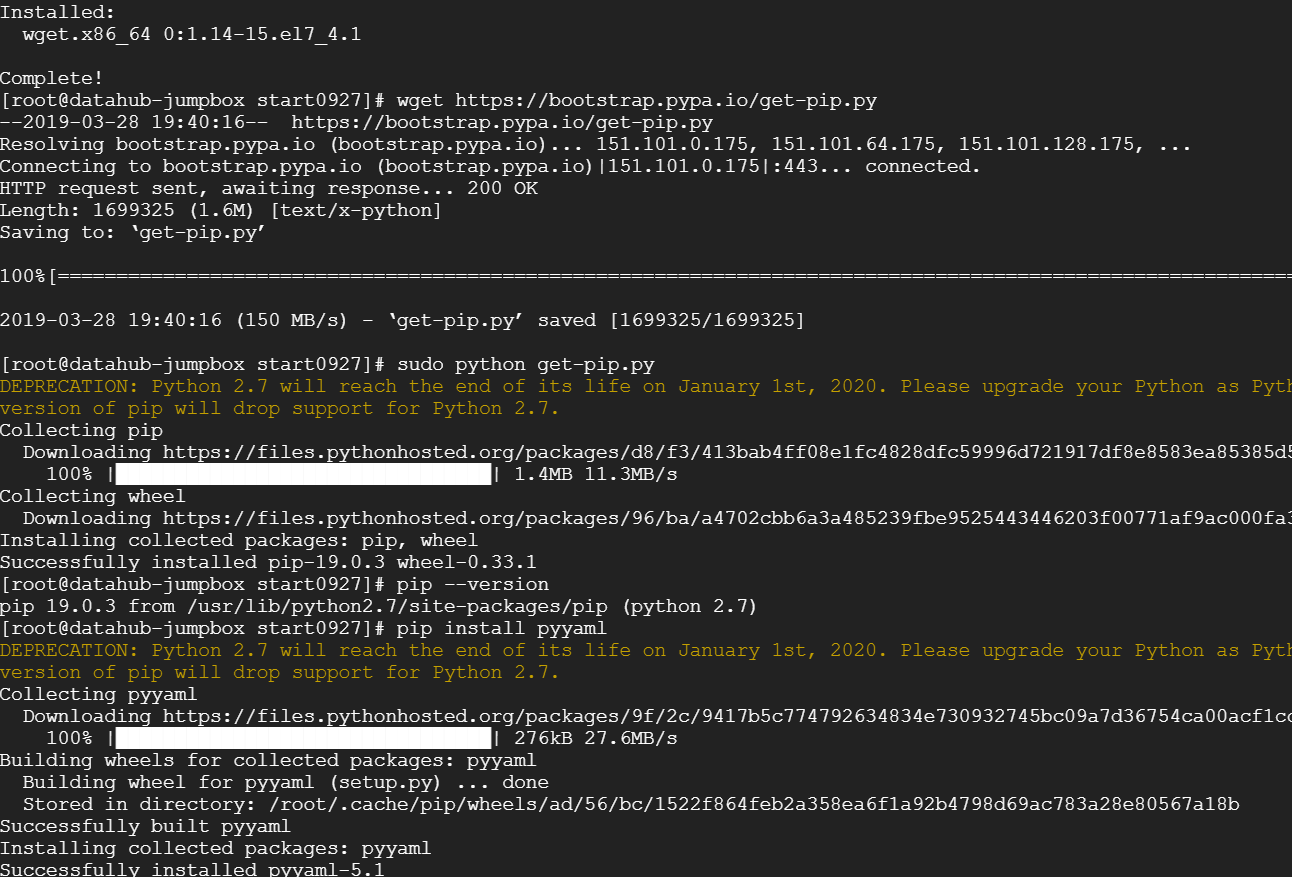

2.2.2 Install Python YAML package (PyYAML)

wget https://bootstrap.pypa.io/get-pip.py

sudo python get-pip.py

pip --version

pip install pyyaml

2.2.3 Install an older version of Helm

If a newer version of Helm is already installed, remove it first (rm /usr/local/bin/helm).

On a first installation, go directly to the download:

curl -O https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

tar -zxvf helm-v2.11.0-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

# A Helm version that is too new will cause problems in later steps.
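Because the later steps depend on a Helm 2.x client, it can help to confirm which version actually ended up on the PATH. The following is a minimal sketch and not part of the original procedure: the helper names helm_major and is_helm2 are hypothetical, and it assumes the "Client: v2.11.0+g..." format that Helm 2 prints with --client --short.

```shell
#!/usr/bin/env bash
# Extract the major version from a Helm version string such as
# "Client: v2.11.0+g2e55dbe" (assumed Helm 2 --short output format).
helm_major() {
  printf '%s\n' "$1" | sed -n 's/^.*v\([0-9][0-9]*\)\.[0-9][0-9]*\.[0-9][0-9]*.*$/\1/p'
}

# Succeed only for a 2.x client, which this guide relies on.
is_helm2() {
  [ "$(helm_major "$1")" = "2" ]
}

# Only query Helm when it is actually installed on the jump box.
if command -v helm >/dev/null 2>&1; then
  installed="$(helm version --client --short 2>/dev/null)"
  if is_helm2 "$installed"; then
    echo "Helm 2.x client found: $installed"
  else
    echo "Unexpected Helm client version: $installed" >&2
  fi
fi
```

If the check reports an unexpected version, remove the binary and repeat the download steps above.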

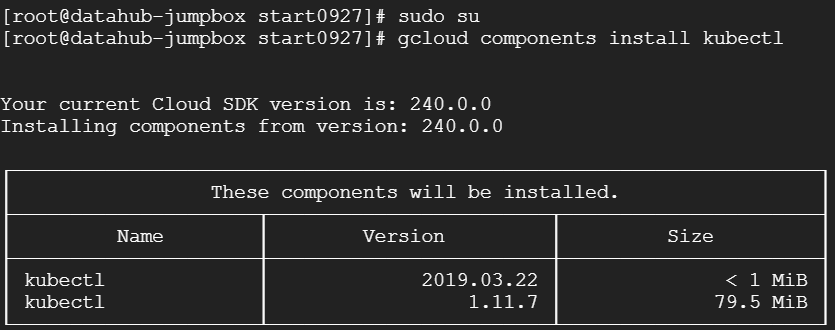

2.2.4 Download Google Cloud SDK

curl -O https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-sdk-240.0.0-linux-x86_64.tar...

tar zxvf google-cloud-sdk-240.0.0-linux-x86_64.tar.gz google-cloud-sdk

./google-cloud-sdk/install.sh

Restart the terminal so that the gcloud command becomes available.

sudo su

gcloud components install kubectl

sudo yum update

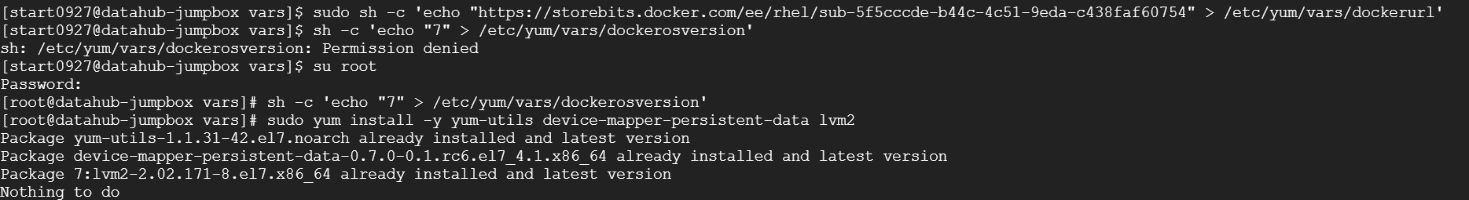

2.2.5 Install Docker-EE

Docker CE does not work on RHEL 7, so Docker EE has to be installed.

Official guide from Docker: https://docs.docker-cn.com/engine/installation/linux/docker-ee/rhel/#%E5%85%88%E5%86%B3%E6%9D%A1%E4%...

A one-month free trial of Docker EE can be requested on the Docker website. It provides a download URL, referred to below as <DOCKER-EE-URL> (every user gets a different download URL), for example:

https://storebits.docker.com/ee/rhel/sub-5f5cccde-b44c-4c51-9eda-c438faf60754

export DOCKERURL="<DOCKER-EE-URL>"

In /etc/yum/vars/, add the two yum variables below (root authorization is required):

sudo sh -c 'echo "https://storebits.docker.com/ee/rhel/sub-5f5cccde-b44c-4c51-9eda-c438faf60754" > /etc/yum/vars/dockerurl'

sudo sh -c 'echo "7" > /etc/yum/vars/dockerosversion'

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum makecache fast

sudo yum-config-manager --enable rhel-7-server-extras-rpms

Use the command below to add the stable repository:

sudo -E yum-config-manager \

--add-repo \

"https://storebits.docker.com/ee/rhel/sub-5f5cccde-b44c-4c51-9eda-c438faf60754/rhel/docker-ee.repo"

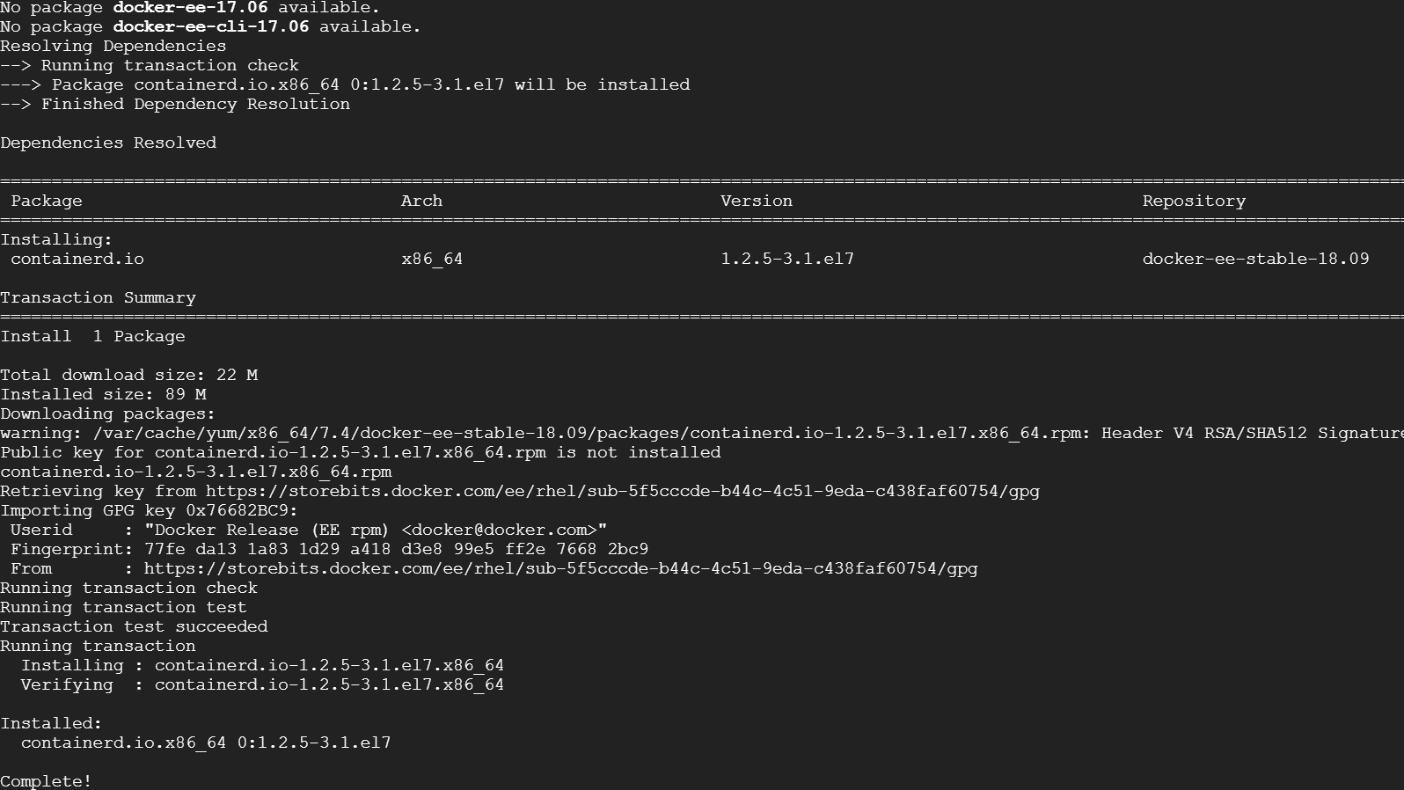

sudo yum-config-manager --enable docker-ee-stable-18.09

sudo yum -y install docker-ee docker-ee-cli containerd.io

The latest version seemed to have a problem in this setup, so we switched to an older version, 17.06:

sudo yum -y install docker-ee-17.06 docker-ee-cli-17.06 containerd.io

This still ends with an error; the container-selinux problem needs to be fixed first.

RHEL does not include container-selinux by default, so we need to install it:

yum install http://vault.centos.org/centos/7.3.1611/extras/x86_64/Packages/container-selinux-2.9-4.el7.noarch.rp...

Run the Docker EE installation again:

sudo yum -y install docker-ee docker-ee-cli containerd.io

After installing container-selinux, we can successfully install Docker EE 18.09.

sudo systemctl start docker

Validate that Docker installation is successful:

sudo docker run hello-world

Next, configure the Docker start options:

- Enable the Docker daemon at system startup:

systemctl enable docker

- Docker automatically creates a user group named docker. Any user who wants to use Docker must be in the docker group in order to communicate with the Docker daemon:

sudo usermod -a -G docker root

Edit the SSH daemon configuration:

vim /etc/ssh/sshd_config

Change the lines below:

PermitRootLogin yes

PasswordAuthentication yes

Restart the SSH service:

service sshd restart

2.2.6 Download and prepare Data Hub 2.5 Foundation image

curl -O http://nexus.wdf.sap.corp:8081/nexus/content/repositories/build.milestones/com/sap/datahub/SAPDataHu...

If the jump box cannot reach this server (error: Can’t interpret server ...), download the archive to your local machine and upload it to the jump box with WinSCP. Path: /usr/etc/

Install unzip:

yum install zip unzip

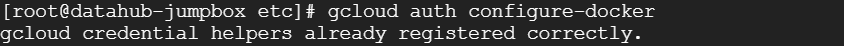

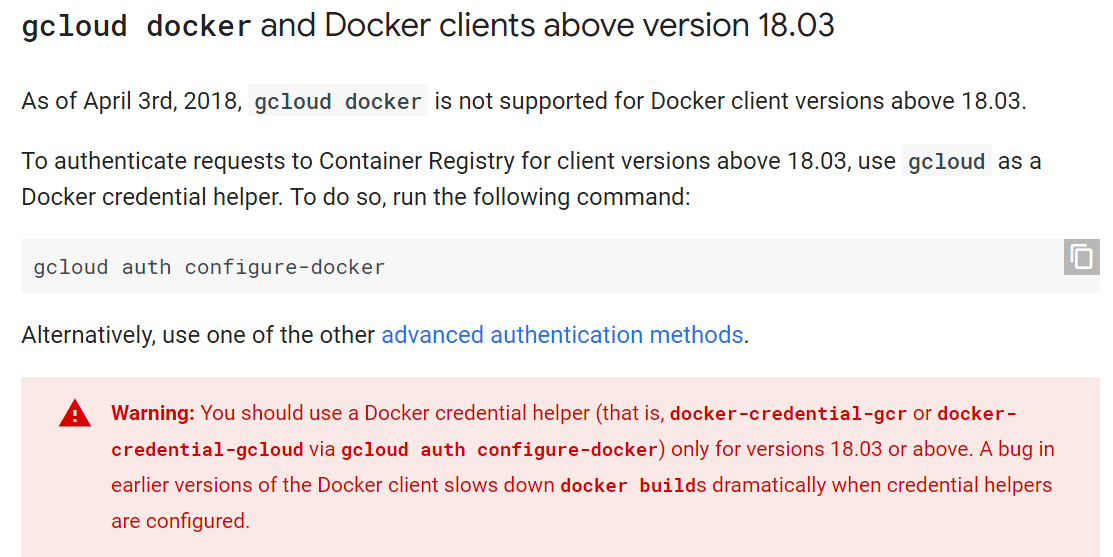

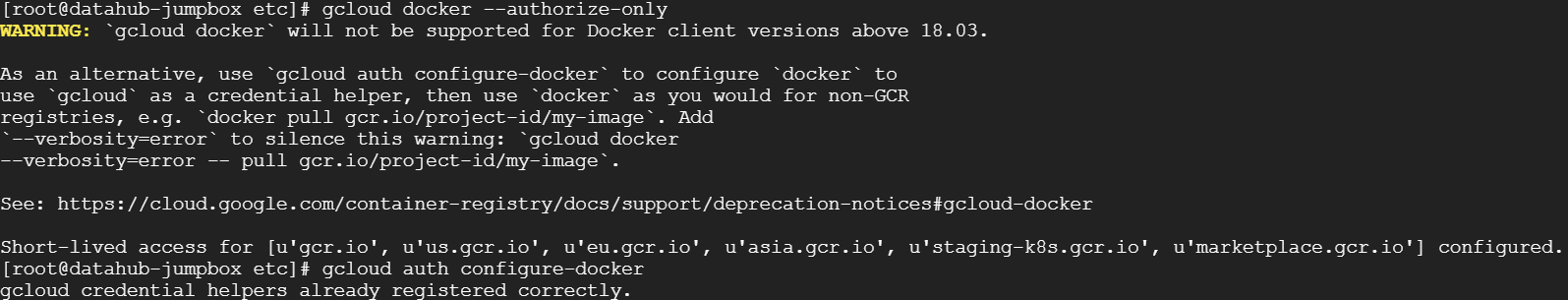

2.2.7 Grant jumpbox’s docker client access to cloud registry

GKE

Allow access to Google Container Registry (GCR)

gcloud auth configure-docker

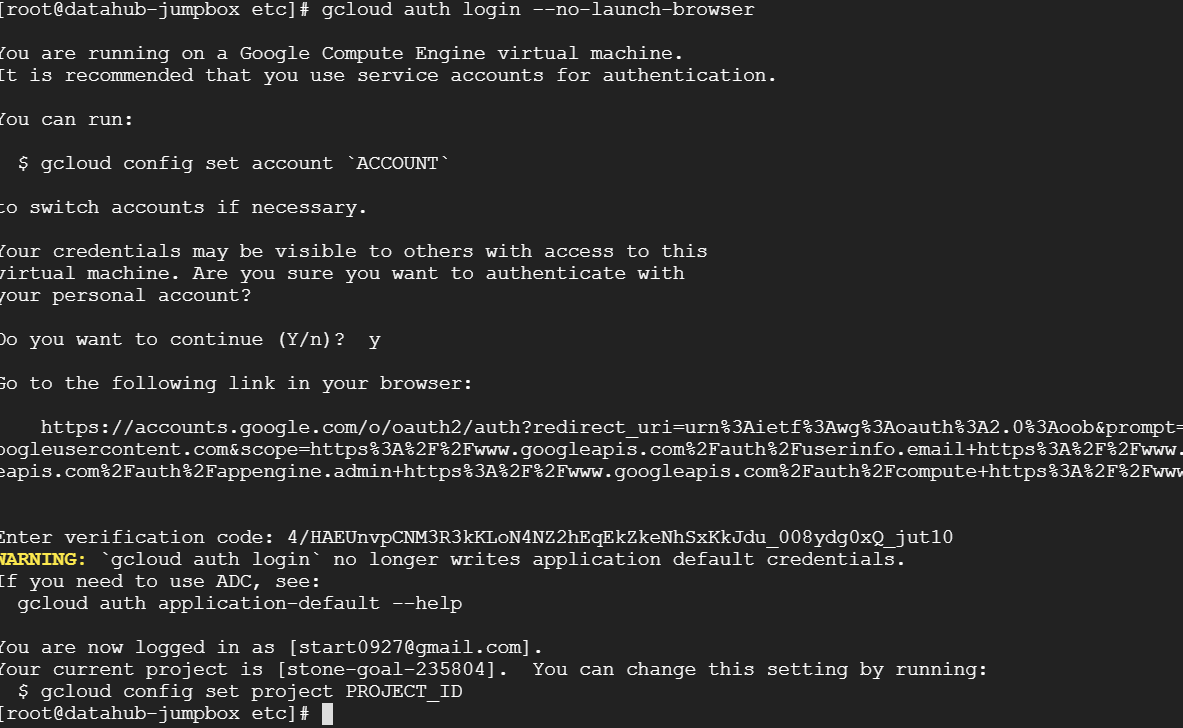

gcloud auth login --no-launch-browser

gcloud docker --authorize-only

This command prints a warning that the Docker version (18.09) is too high. In that case, run:

gcloud auth configure-docker

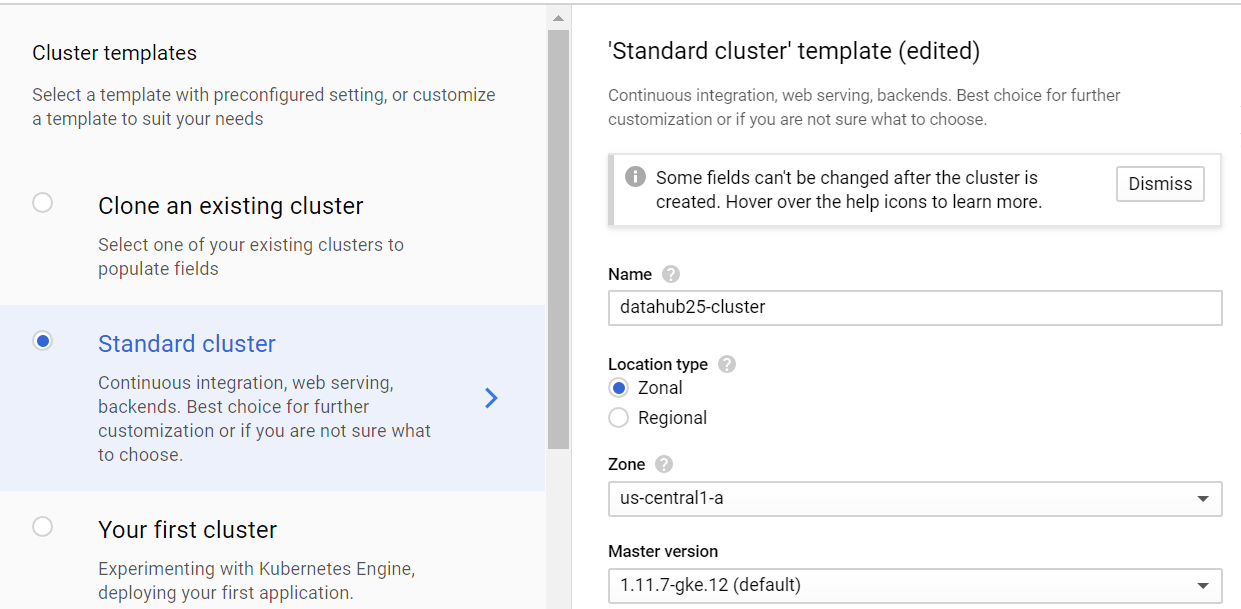

2.3 Create Kubernetes Cluster

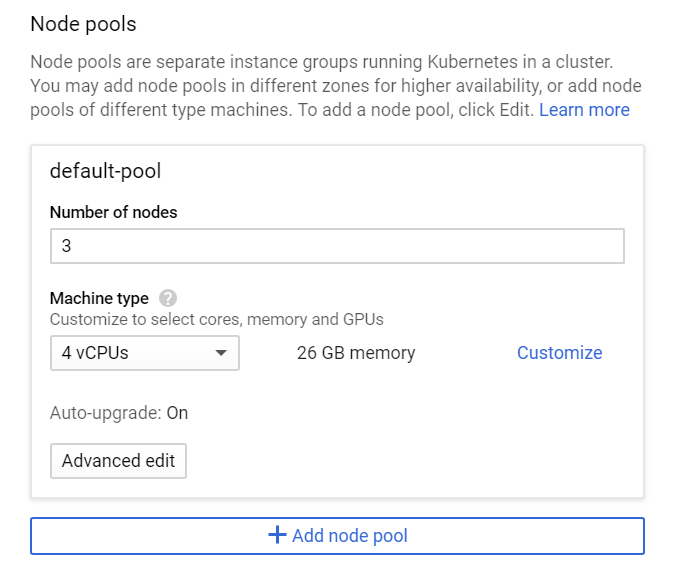

In GCP, go to menu -> Kubernetes Cluster, create a new cluster.

For a test installation, we use the Data Hub minimum setup: 3 nodes, 4 CPUs.

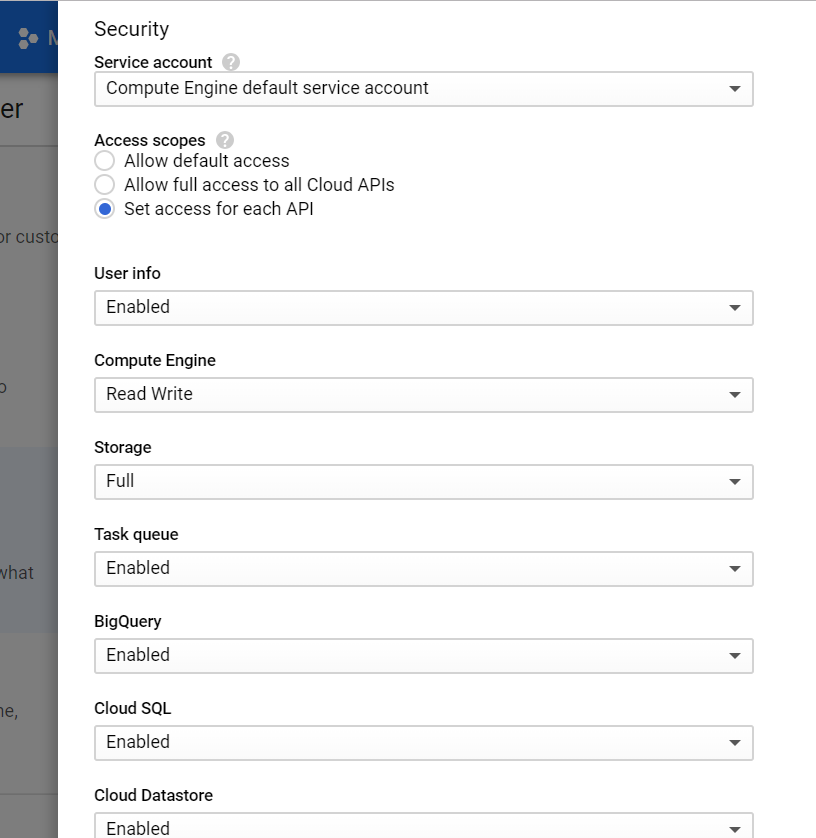

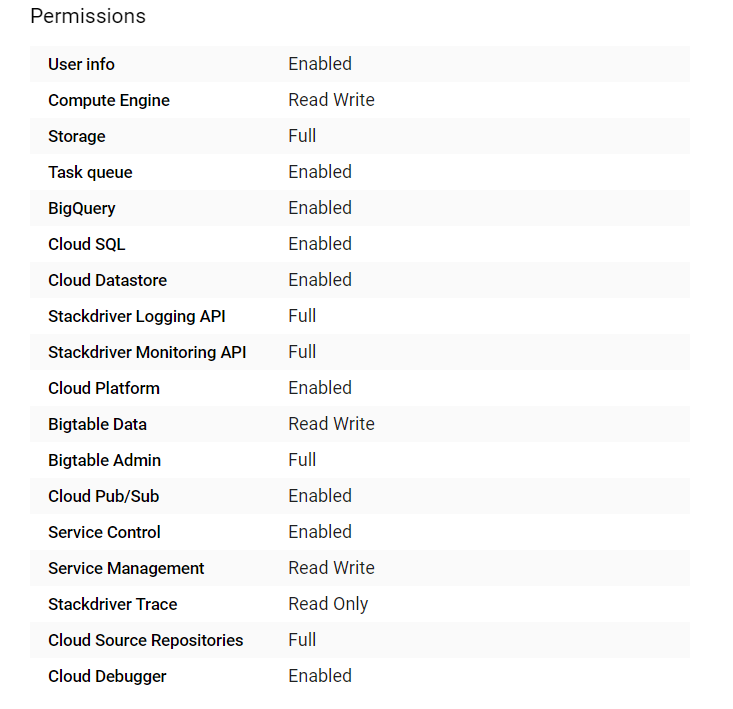

Go to Advanced edit -> Set access for each API.

The cluster must have Full access to Storage.

2.4 Configure K8S Cluster

2.4.1 Configure the connection between the jump box and the K8S cluster

- Make sure your gcloud configuration is set to the project: gcloud config set project [PROJECT_ID]

- List the clusters in the account: gcloud container clusters list

- Check the output:

NAME           LOCATION       MASTER_VERSION  MASTER_IP      MACHINE_TYPE  NODE_VERSION  NUM_NODES  STATUS
alpha-cluster  asia-south1-a  1.9.7-gke.6     35.200.254.78  f1-micro      1.9.7-gke.6   3          RUNNING

- Run the following command:

gcloud container clusters get-credentials your-cluster-name --zone your-zone --project your-project

For this installation, the command was:

gcloud container clusters get-credentials datahub25-cluster --zone us-central1-a --project stone-goal-235804

- The following output appears:

Fetching cluster endpoint and auth data. kubeconfig entry generated for alpha-cluster.

- Check the node details with kubectl, for example:

kubectl get nodes -o wide

2.4.2 Prepare Helm

Helm is used by the Data Hub installer to deploy Data Hub in the Kubernetes cluster

- Provide roles and permissions

- kubectl create serviceaccount --namespace kube-system tiller

- kubectl create clusterrolebinding tiller --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

- Initialize Helm

- helm init --service-account tiller

- Verify (following should not throw an error)

- helm ls

3 Install SAP Data Hub 2.5 Foundation

Go to https://repositories.sap.ondemand.com/ui/www/webapp/ and register to obtain a technical user and password:

| sap-sh*****eve | WfKnNmGnl3Q*******tHd8v6alAtECgk |

This technical user is used later to log on to the SAP Container Artifactory.

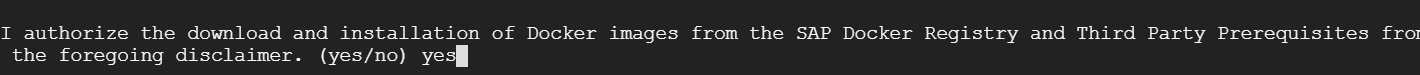

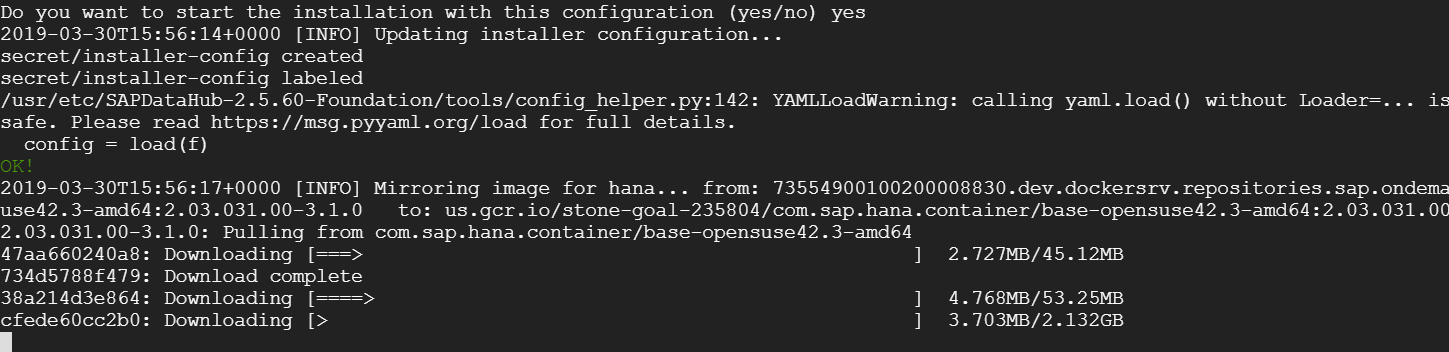

Go to Data Hub Foundation path folder, run:

./install.sh --sap-registry=73554900100200008830.dev.dockersrv.repositories.sap.ondemand.com --namespace=datahub-namespace --registry=us.gcr.io/stone-goal-235804

When the installer prompts for the FQDN, you can name it anything, such as datahubblackbelt.com. This will be the browser URL you use to access Data Hub after the installation, once vsystem is exposed as an ingress in K8S. You will also edit the hosts file on your laptop to point datahubblackbelt.com to the external IP of the ingress.

I set the FQDN to: datahub25-cluster.c.stone-goal-235804.internal

Press Enter to go to the next step ->

Define a password:

sh****eve

Password: set it to the same value as the system password

No Hadoop is used here, so press Enter to skip.

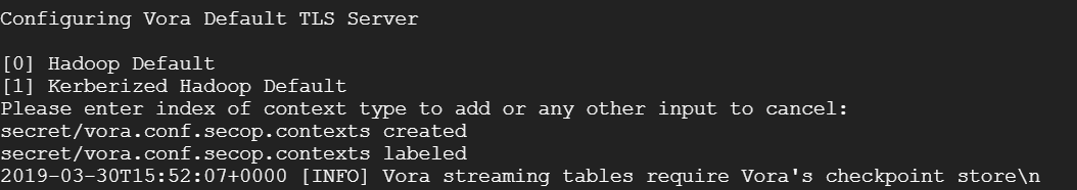

Answer no to “Enable Vora Checkpoint Store” ->

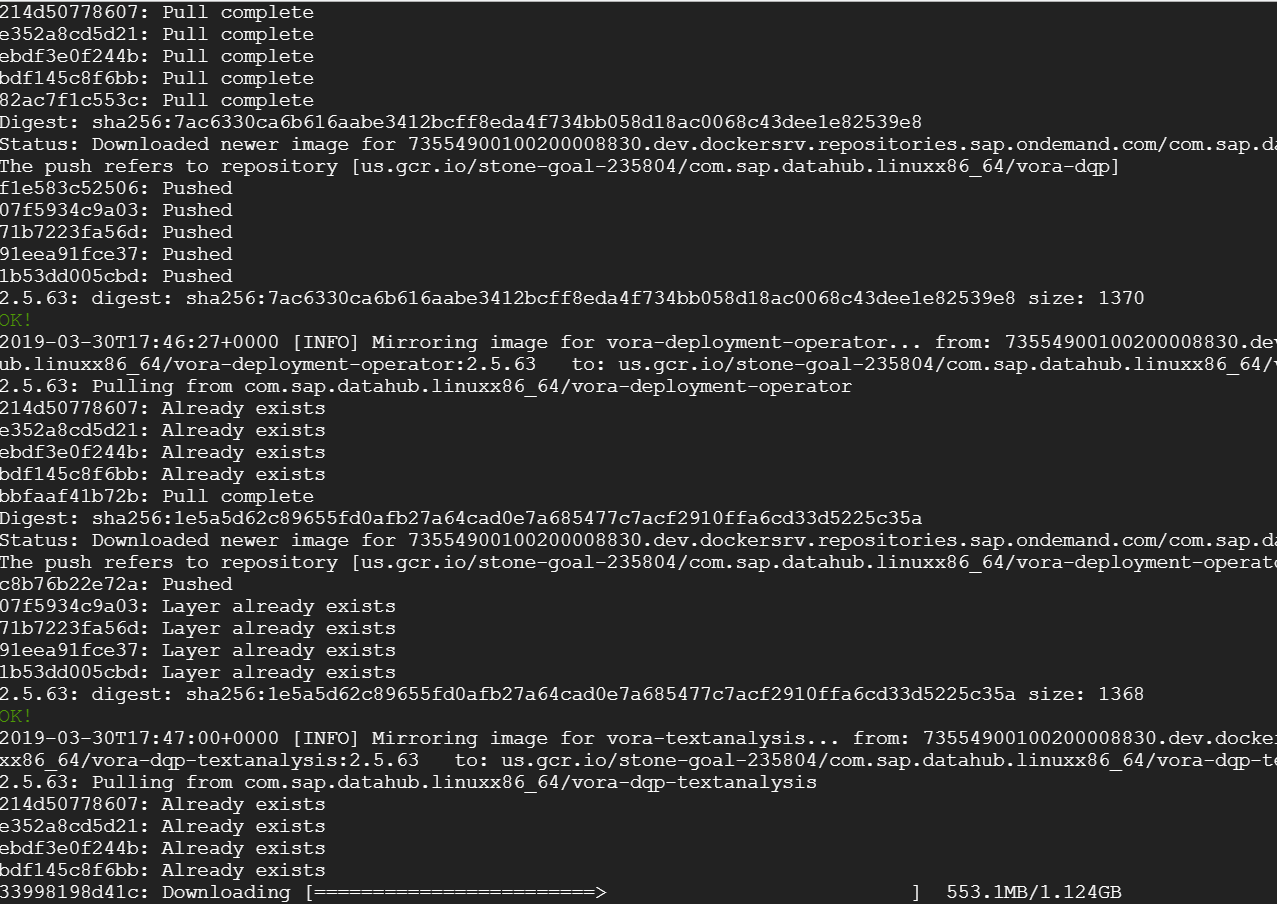

Downloading starts…

After a lot of downloads, push and pull…

8+ hours

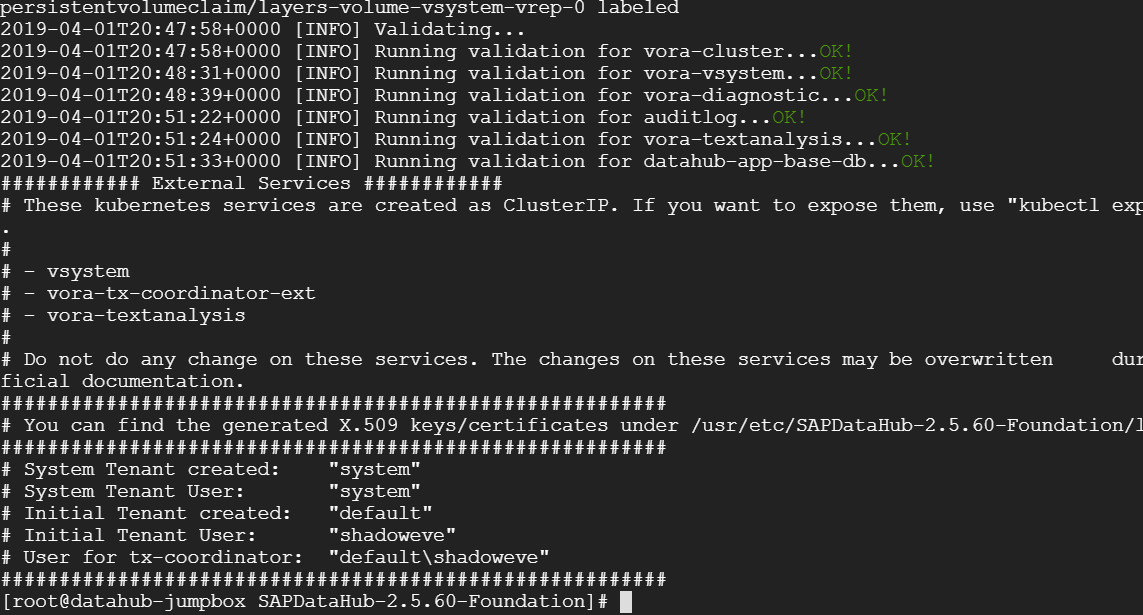

The installation is now complete.

4 Post-Installation Configurations

4.1 Expose SAP Data Hub System Management Externally on Google Cloud Platform

1. On the installation host, set the following environment variable:

export NAMESPACE=<Kubernetes Namespace>

2. Expose the vsystem service as NodePort by creating a new service:

kubectl -n $NAMESPACE expose service vsystem --type NodePort --name=vsystem-ext

3. Patch the new service for ingress configuration:

kubectl -n $NAMESPACE patch service vsystem-ext -p '{"spec": {"ports" : [{"name": "vsystem-ext" , "port" : 8797 }]}}'

kubectl -n $NAMESPACE annotate service vsystem-ext service.alpha.kubernetes.io/app-protocols='{"vsystem-ext":"HTTPS"}'

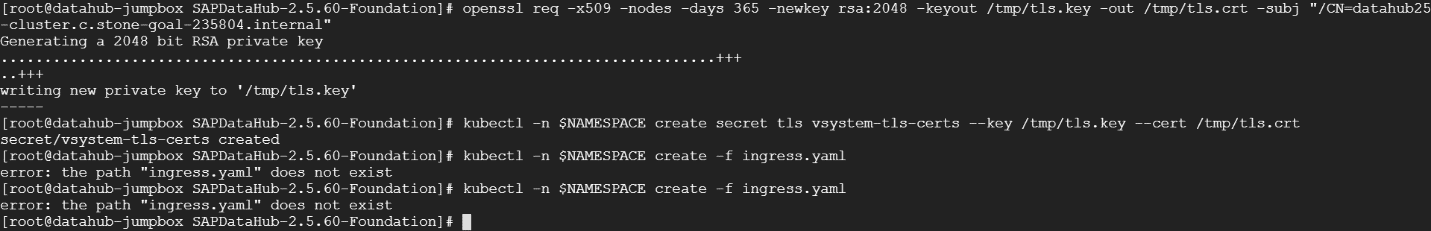

4. To create a new TLS certificate for your cluster, use the root user on the installation host and replace the placeholder <SAP Data Hub domain> with the fully qualified domain name (FQDN) that you want to use for your service endpoints and that you will register in your DNS.

For example, you can use OpenSSL to generate a self-signed certificate and expose it as a secret in Kubernetes:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/tls.key -out /tmp/tls.crt -subj "/CN=datahub25-cluster.c.stone-goal-235804.internal"

kubectl -n $NAMESPACE create secret tls vsystem-tls-certs --key /tmp/tls.key --cert /tmp/tls.crt

5.

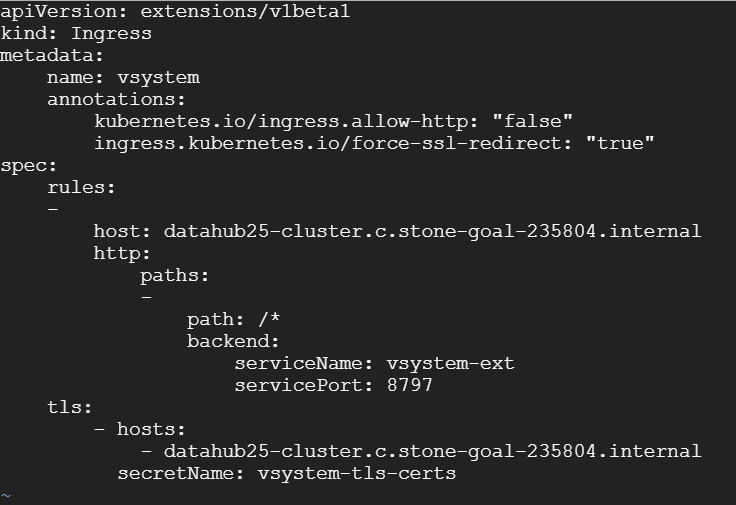

Use the root user on the installation host to create an ingress with the following YAML template, replacing the placeholder <SAP Data Hub domain\> with the same FQDN that you used to generate the certificate:

# ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: vsystem
  annotations:
    kubernetes.io/ingress.allow-http: "false"
    ingress.kubernetes.io/force-ssl-redirect: "true"
spec:
  rules:
  - host: datahub25-cluster.c.stone-goal-235804.internal
    http:
      paths:
      - path: /*
        backend:
          serviceName: vsystem-ext
          servicePort: 8797
  tls:
  - hosts:
    - datahub25-cluster.c.stone-goal-235804.internal
    secretName: vsystem-tls-certs

6. Run the following command in the directory that contains the ingress YAML file you defined previously:

kubectl -n $NAMESPACE create -f ingress.yaml
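The self-signed certificate from step 4 must carry the same FQDN as the ingress host, so a quick check of its subject can save a debugging round. The sketch below is an optional addition, not part of the original steps: the helper name cert_cn is hypothetical, and it assumes the certificate is still at /tmp/tls.crt. It handles the two subject formats printed by different OpenSSL versions.

```shell
#!/usr/bin/env bash
# Extract the common name (CN) from an "openssl x509 -subject" line.
# Handles "subject= /CN=host" (older OpenSSL) and "subject=CN = host" (newer).
cert_cn() {
  printf '%s\n' "$1" | sed -n 's|.*CN *= *\([^,/]*\).*|\1|p'
}

CERT=/tmp/tls.crt   # path used when the certificate was generated in step 4

# Inspect the certificate only if openssl and the file are both present.
if command -v openssl >/dev/null 2>&1 && [ -f "$CERT" ]; then
  subject="$(openssl x509 -in "$CERT" -noout -subject)"
  echo "Certificate CN: $(cert_cn "$subject")"
  # Show the expiry date; the certificate was created with -days 365.
  openssl x509 -in "$CERT" -noout -enddate
fi
```

The printed CN should match the host value in ingress.yaml.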

Note

Ingresses created on the Google Cloud Engine have an automatic timeout of 30 seconds. If a request takes longer than this threshold, the connection is terminated by the ingress. As a consequence, users might experience frequent _502 Bad Gateway_ responses. The timeout can be increased through the Google Cloud Platform Console or through its command-line interface.
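For the command-line route, the timeout is a property of the backend service that GCP creates behind the ingress. The sketch below is an assumption-laden illustration rather than the documented SAP procedure: the helper name backend_timeout_cmd, the backend name my-backend-service, and the value 300 are all placeholders. It only prints the gcloud command so that it can be reviewed before being run.

```shell
#!/usr/bin/env bash
# Build (but do not run) the gcloud command that changes the timeout of one
# backend service. Rejects empty, non-numeric, or zero values.
backend_timeout_cmd() {
  backend="$1"
  seconds="$2"
  case "$seconds" in
    ''|*[!0-9]*|0)
      echo "timeout must be a positive number of seconds" >&2
      return 1
      ;;
  esac
  printf 'gcloud compute backend-services update %s --global --timeout=%s\n' \
    "$backend" "$seconds"
}

# Find the backend service behind the ingress first, for example with:
#   gcloud compute backend-services list
# Then print the update command for review (placeholder name and value).
backend_timeout_cmd "my-backend-service" 300
```

Printing the command first keeps the change deliberate, since a project can contain several backend services.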

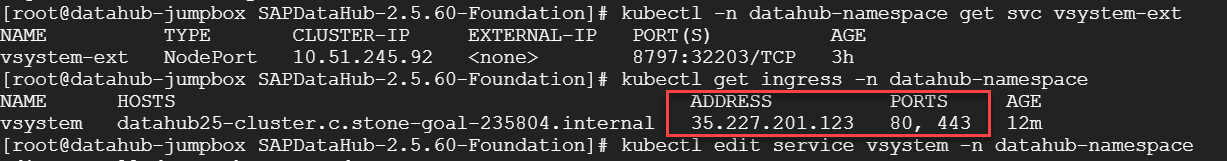

4.2 Publish the IP address assigned to the ingress that you created.

#

Once you create the ingress via yaml file, you can check the public IP by executing:

kubectl get ingress -n <namespace>

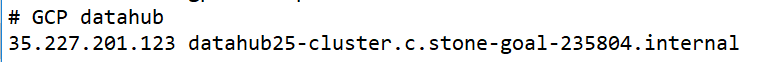

Note: After creating the ingress please wait about 5 mins to see the public IP from the above command. Once you get the IP, you have to put in your /etc/hosts file in the format: <host name> <IP address>

#

/Windows/system32/drivers/etc/hosts
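A hosts-file line puts the IP address first and the host name second. The following sketch shows one way to produce that line from the ingress status. It is an illustration under assumptions: the helper name hosts_entry is hypothetical, the ingress is assumed to be named vsystem as in the YAML above, and NAMESPACE is assumed to be exported as in section 4.1. It prints the line rather than editing any file.

```shell
#!/usr/bin/env bash
# Format one hosts-file line: IP address first, then the host name.
hosts_entry() {
  printf '%s %s\n' "$1" "$2"
}

FQDN="datahub25-cluster.c.stone-goal-235804.internal"   # FQDN used in this guide

# Look up the ingress IP only when kubectl and NAMESPACE are available.
if command -v kubectl >/dev/null 2>&1 && [ -n "${NAMESPACE:-}" ]; then
  ip="$(kubectl -n "$NAMESPACE" get ingress vsystem \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
  if [ -n "$ip" ]; then
    # Print the line instead of modifying the hosts file directly.
    hosts_entry "$ip" "$FQDN"
  else
    echo "Ingress has no external IP yet; retry in a few minutes." >&2
  fi
fi
```

Copy the printed line into the hosts file of the machine whose browser will open Data Hub.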

4.3 Expose SAP Vora Transaction Coordinator and SAP HANA Wire Externally

4.3.1 Expose the Service in the Same Network

1. Create the Kubernetes service and add the cloud-specific annotation to make it an internal load balancer:

export NAMESPACE=datahub-namespace

kubectl -n $NAMESPACE get service vora-tx-coordinator-ext -o="custom-columns=IP:.spec.clusterIP,PORT_TXC_AND_HANAWIRE:.spec.ports[*].targetPort"

Create a service of type LoadBalancer with the name vora-tx-coordinator-ext-lb-internal:

kubectl -n $NAMESPACE expose service vora-tx-coordinator-ext --type LoadBalancer --name=vora-tx-coordinator-ext-lb-internal

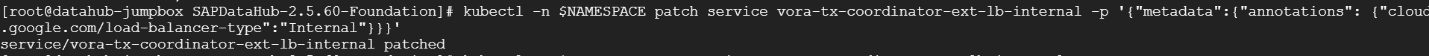

Add a cloud-specific annotation to make it an internal load balancer:

kubectl -n $NAMESPACE patch service vora-tx-coordinator-ext-lb-internal -p '{"metadata":{"annotations": {"cloud.google.com/load-balancer-type":"Internal"}}}'

2. Show the host and port of the new service:

kubectl -n $NAMESPACE get service vora-tx-coordinator-ext-lb-internal -w

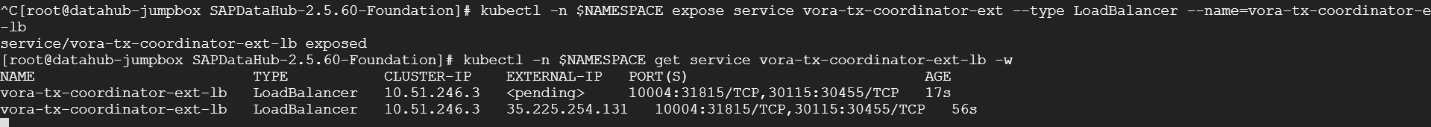

4.3.2 Expose the Service from Outside the Network

To make the SAP Vora Transaction Coordinator and SAP HANA Wire accessible from a location outside the Kubernetes network, you must create a service of type LoadBalancer with no internal annotations.

kubectl -n $NAMESPACE expose service vora-tx-coordinator-ext --type LoadBalancer --name=vora-tx-coordinator-ext-lb

kubectl -n $NAMESPACE get service vora-tx-coordinator-ext-lb -w

5 Validation

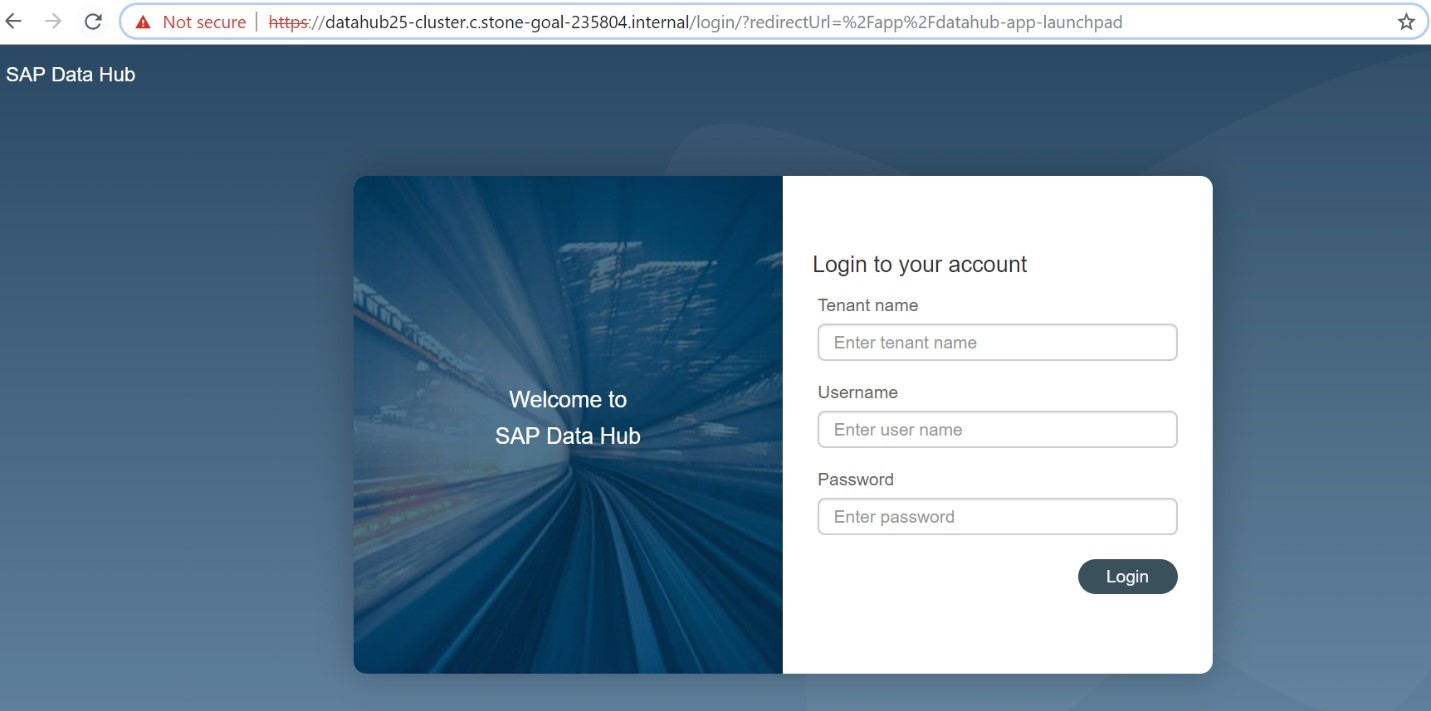

5.1 Log on to Data Hub

Log on to: https://datahub25-cluster.c.stone-goal-235804.internal:443

Use the default tenant user and password.

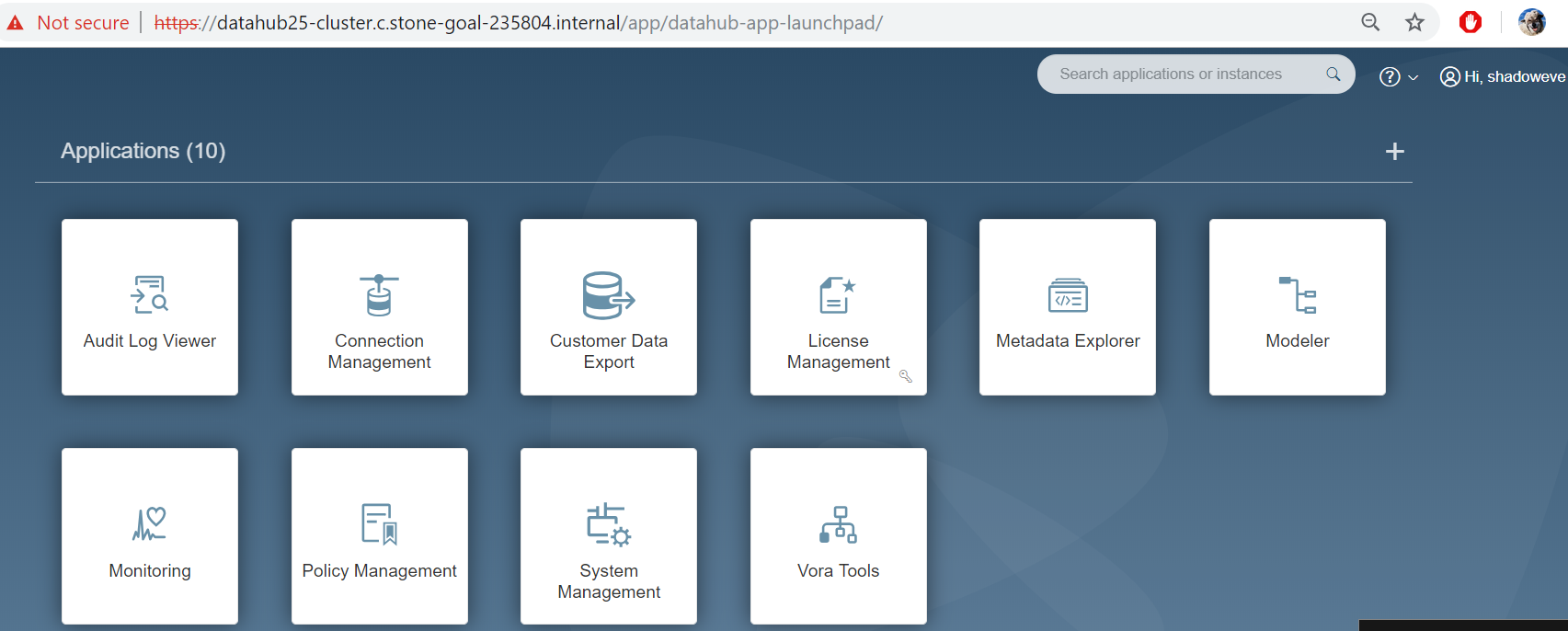

- Log in to Launchpad

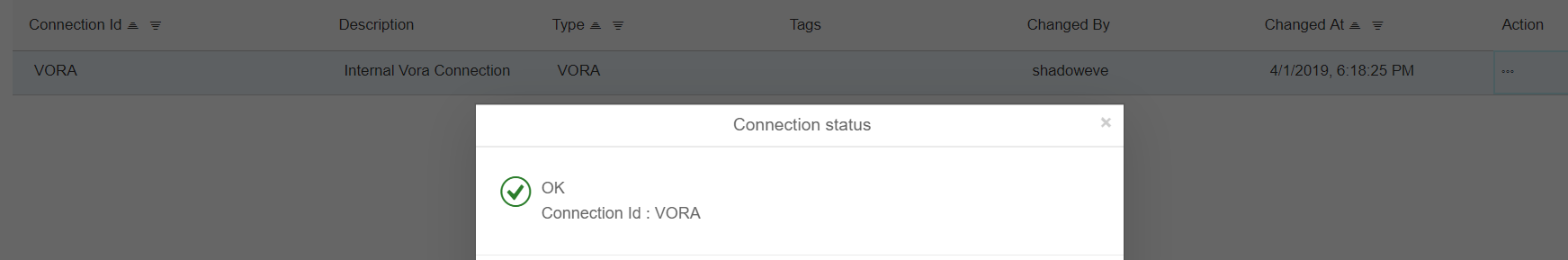

- Create and validate a connection in Connection Management

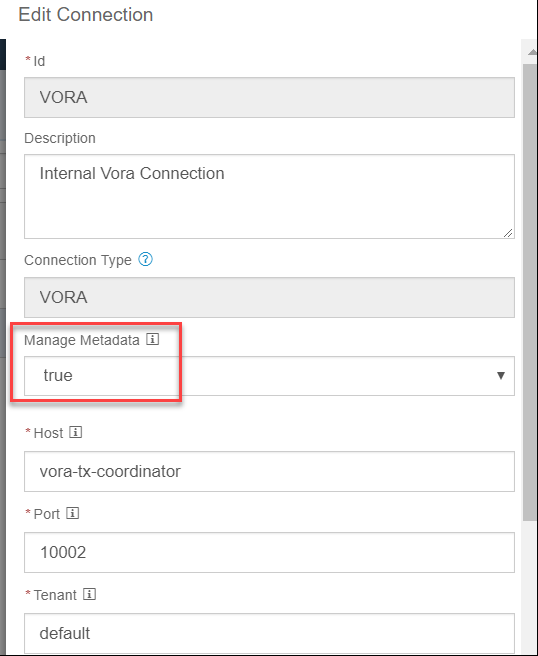

- Check Vora connection:

- vora-tx-coordinator:10002 use the same credentials used to log on to Data Hub Launchpad

- Create connection to public cloud storage

- S3, GCS, etc.

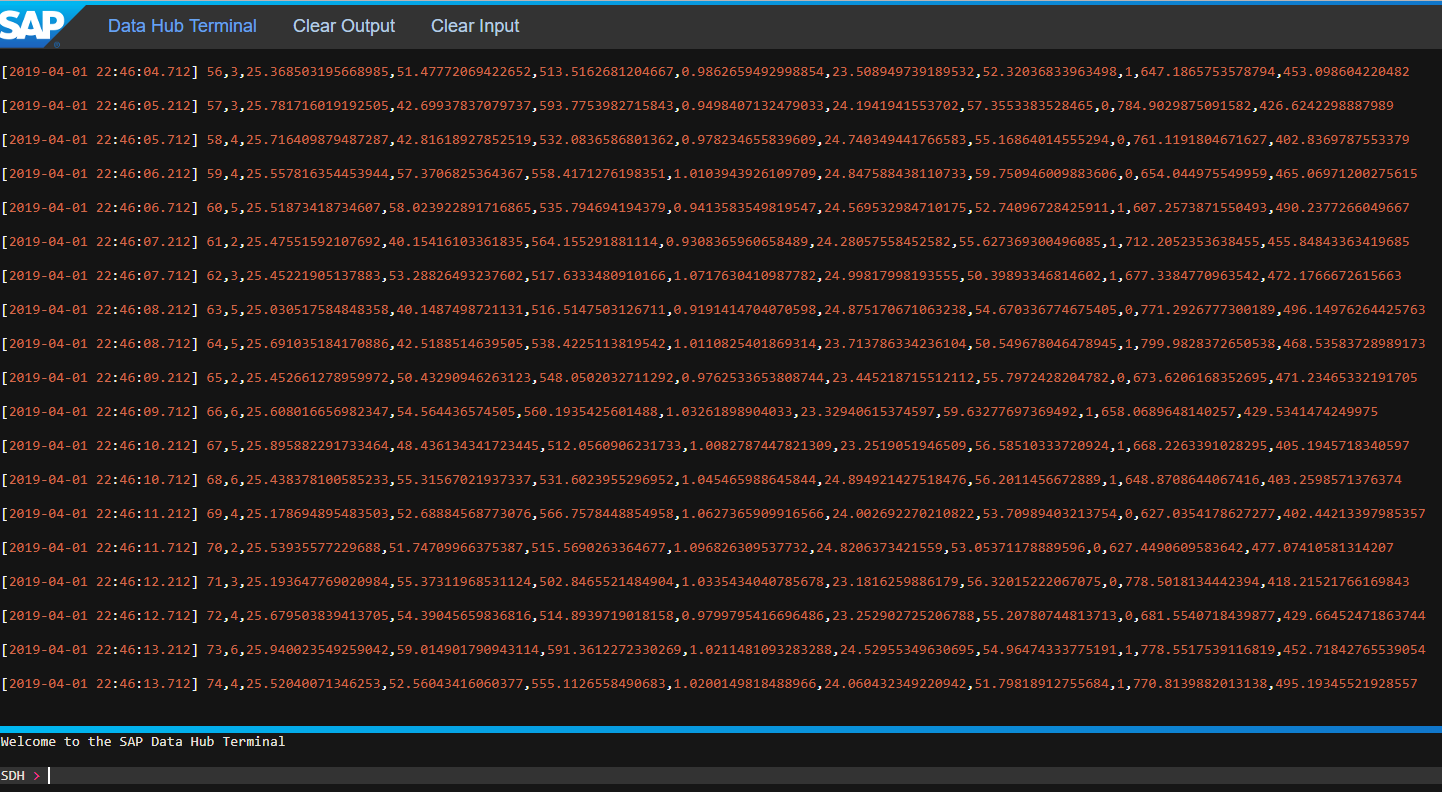

Create a simple pipeline:

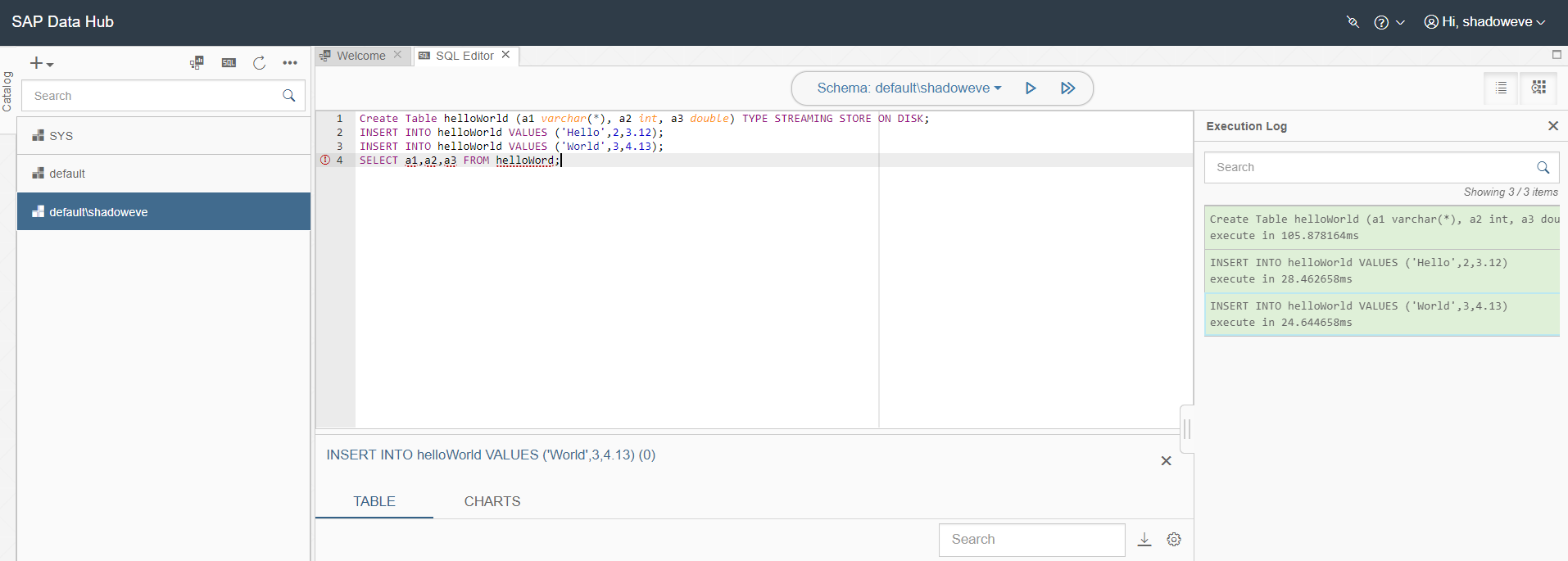

5.2 Manual validation – Create a Vora Streaming table in Vora Tools

Create Table helloWorld (a1 varchar(*), a2 int, a3 double) TYPE STREAMING STORE ON DISK;

INSERT INTO helloWorld VALUES ('Hello',2,3.12);

INSERT INTO helloWorld VALUES ('World',3,4.13);

SELECT a1,a2,a3 FROM helloWorld;

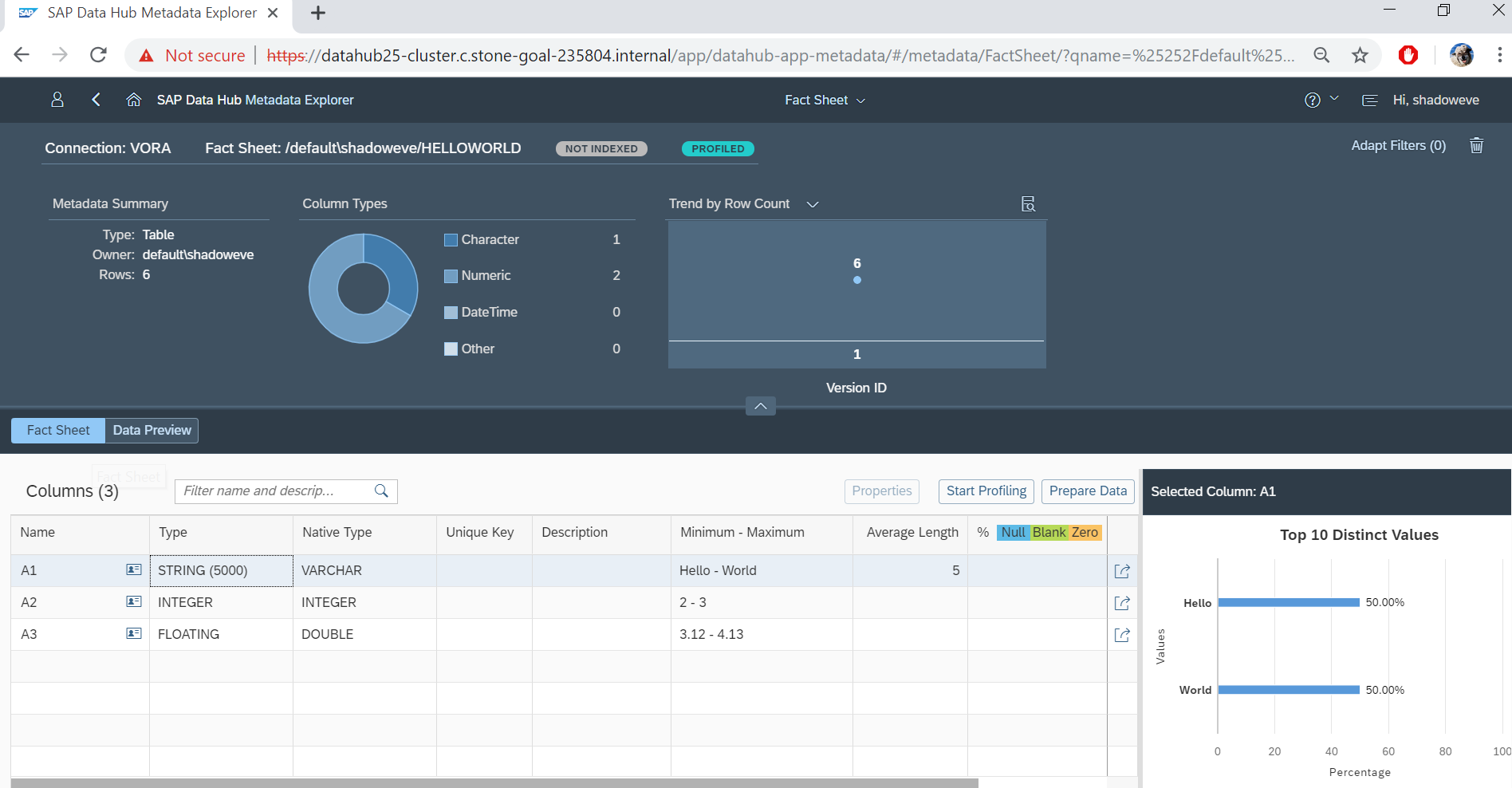

5.3 Manual validation – View/Profile a Vora table in Metadata Explorer

This has to be set to TRUE ->

The log can be seen in Modeler.

Result:

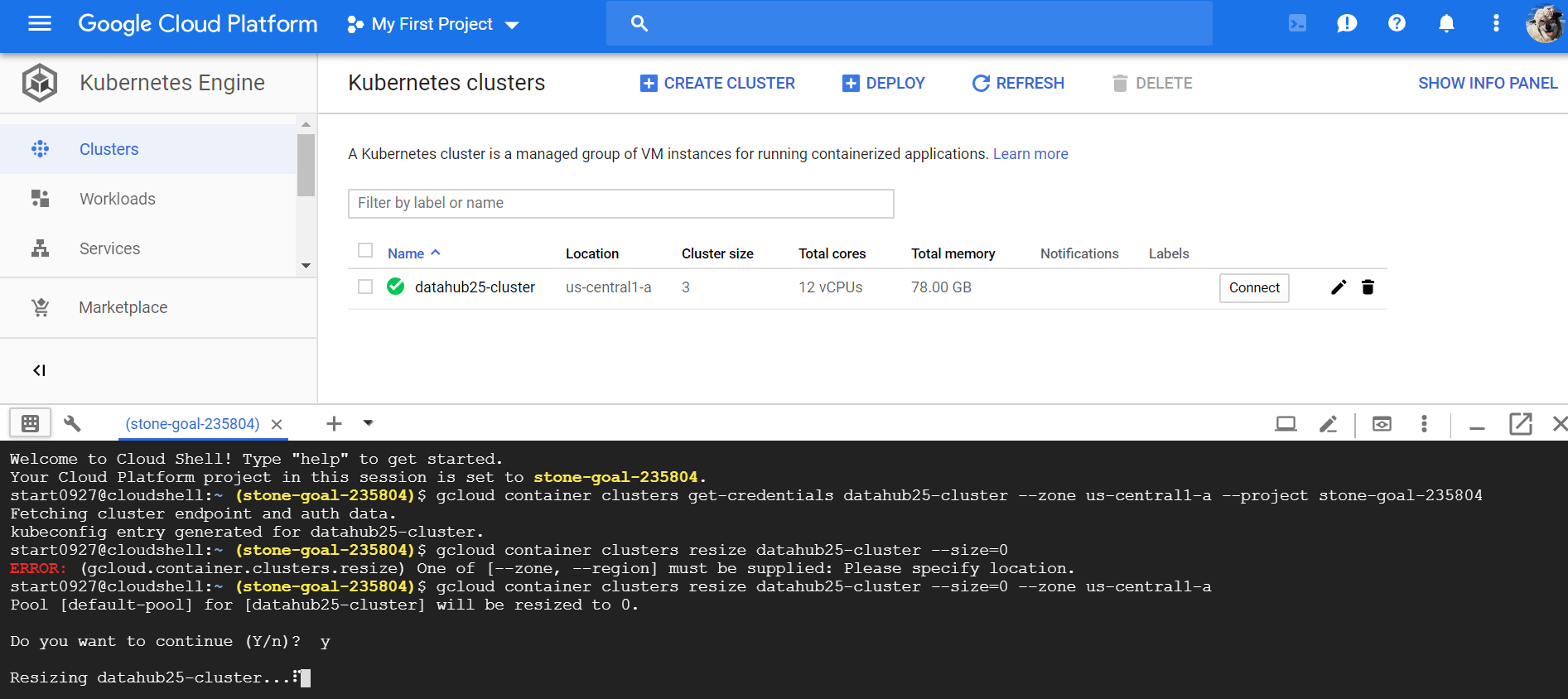

6 Suspending the K8S cluster to save money

gcloud container clusters resize $CLUSTER_NAME --size=0

Note: Unless you delete your cluster, VM, and storage completely, Google still charges you, but much less.

Thanks for reading.

We have now successfully deployed SAP Data Hub 2.5 on Google Cloud Platform. You can explore more with the GCP local offerings such as cloud storage or HDFS. For Data Hub installation on AWS, Azure, or other environments, further documents are available on help.sap.com.
