04-04-2019

3:04 PM

This is a searchable description of the content of a live stream recording, specifically "Episode 12 - Exploring and understanding parts of @sap/cds JS - code & style" in the "Hands-on SAP dev with qmacro" series. There are links directly to specific highlights in the video recording. For links to annotations of other episodes, please see the "Catch the replays" section of the series blog post.

This episode, titled "Exploring and understanding parts of @sap/cds JS - code & style", was streamed live on Fri 15 Mar 2019 and is approximately one hour in length. The stream recording is available on YouTube.

Below is a brief synopsis, and links to specific highlights - use these links to jump directly to particular places of interest in the recording, based on 'hh:mm:ss' style timestamps.


Brief synopsis

I had an itch to scratch, in that I wanted to be able to filter out columns in CSV files. In this episode we take a look at some of the JavaScript within the @sap/cds module – what it offers and how it’s written. We then see how some of that was useful in building a simple CSV filter mechanism.

This episode was live streamed from the SAP offices in Maidenhead, just before the start of SAP Inside Track Maidenhead which was taking place that day.

Links to specific highlights

00:03:30: A quick update, showing the new playlist of live stream recordings on YouTube in the SAP Developers channel (if you're not subscribed already to the channel, please consider doing so!).

00:05:00: A look at the annotations of the live stream recordings - check out the links in the main Hands-on SAP dev blog post.

00:07:48: Looking at the brand new updated UI5 course on openSAP: Evolved Web Apps with SAPUI5 - don't forget to enrol!

00:08:50: There's another new course on openSAP that we look at too: SAP Cloud Platform Essentials (Update Q2/2019), which covers the SAP Cloud Application Programming Model, hurray!

00:11:10: Starting to take a look at what we're going to do in this episode, talking about the source code of the `@sap/cds` module family, which is a rich source of learning for us in our journey towards ES6 mastery.

00:12:35: Looking at the `grab.js` script that we've been building, which allows us to retrieve data from the Northwind service, paging through it where skip tokens are used.

In grabbing this data we naturally get all of the fields, i.e. values for all of the properties in the entities that we're retrieving (Products, Suppliers and Categories). If we want fewer fields, it is quite cumbersome to manually remove them from the CSV files that are produced. This gave me the idea to write something that would do it for me, taking some ideas and code from the `@sap/cds` module family.

00:15:52: Hacking my sitting position by finding a couple of stackable chairs and sitting on them both instead for a better posture. Stacked chairs ftw!

00:16:32: The "initializing from csv files at ./db/csv..." message that we see when invoking

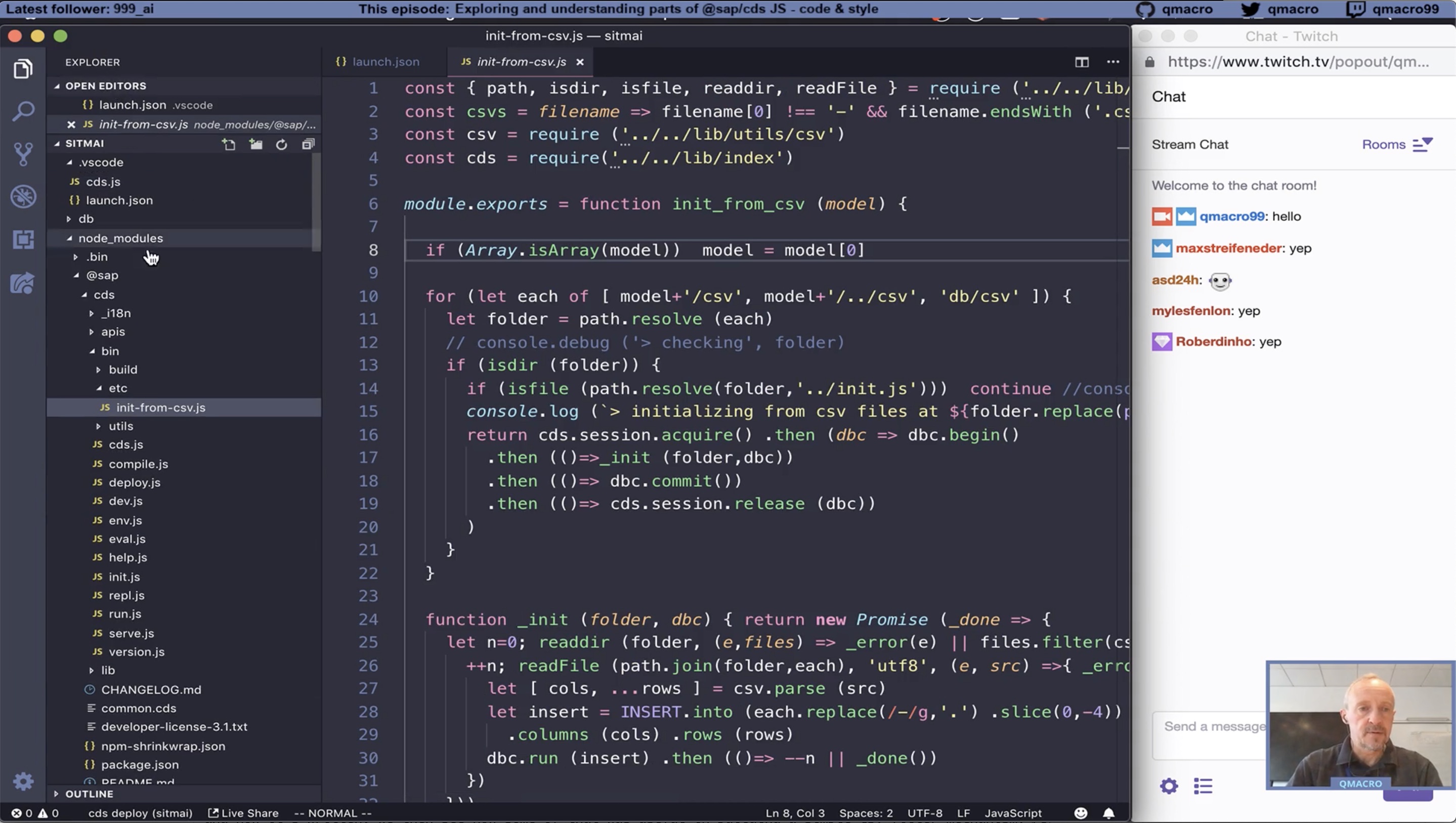

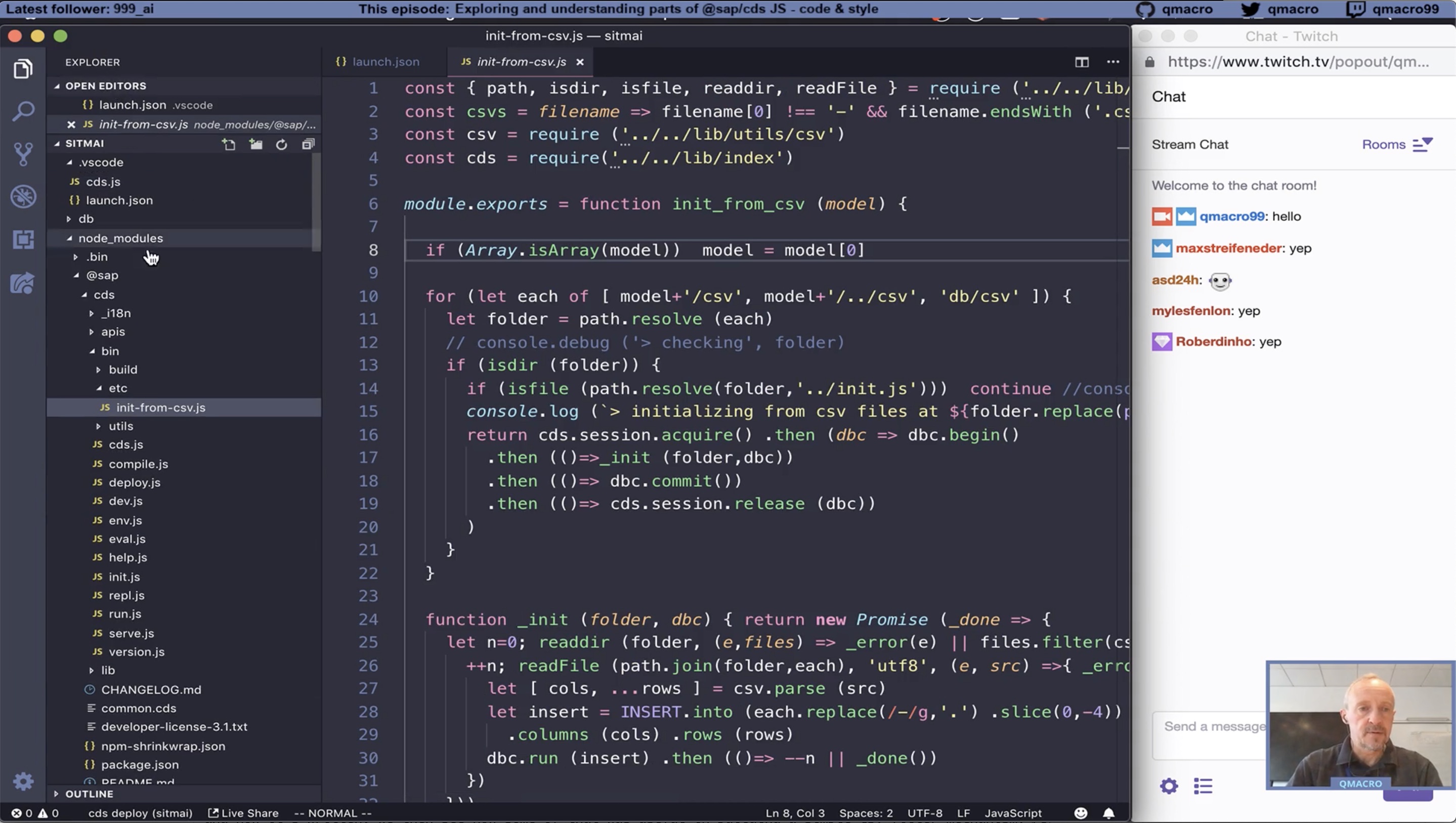

cds deploy gave me a clue that parts of @Sap/cds were indeed (of course) handling CSV data, and I was curious to take a look:grep -R 'initializing from csv' *This pointed me to the

node_modules/@sap/cds/bin/etc/init-from-csv.js file.00:18:00: Reminding ourselves of the different options available to us with the

cds command line tool, and taking a brief look at how the other @Sap/cds modules are related via the "dependencies" information shown in the output of:npm info @sap/cds00:19:00: Digging further into the

@Sap/cds/ directory in the node_modules/ directory in the project, we see the common.cds file that we've looked at before, and also lib/ and bin/ directories.00:19:34: It does occur to me that the name "bin" (short for "binary") is a little anachronistic, or at least not particularly appropriate, given that the contents are not binary files as they're not compiled - they're JavaScript, after all. Perhaps a better term is "executable".

00:21:45: In the `bin/` directory we have `cds.js` that responds when we invoke the `cds` command line client, and we can then also see more or less a one-to-one relationship between what commands are available:

```
=> cds

USAGE

    cds <command> [<args>]

COMMANDS

    c | compile   ...individual models (= the default)
    d | deploy    ...data models to a database
    s | serve     ...service models to REST clients
    b | build     ...whole modules or projects
    i | init      ...jump-starts a new project
    e | env       get/set current cds configuration
    r | repl      cds's read-eval-event-loop
    h | help      shows usage for cds and individual commands
    v | version   prints detailed version information

[...]
```

... and the JavaScript files in that `bin/` directory:

```
=> ls -1 node_modules/@sap/cds/bin | grep .js
cds.js*
compile.js
deploy.js
dev.js
env.js
eval.js
help.js
init.js
repl.js
run.js
serve.js
version.js
```

00:22:28: We take a look at one of these scripts - `deploy.js` - where we see a promise chain that makes use of the `init-from-csv` module! Inside this module we place a breakpoint to see what happens when we invoke the `cds deploy` command.

00:24:00: Running `cds d` within the integrated terminal in VS Code - but of course we don't hit the breakpoint like this as we're not in debug mode.

So we start debug mode with F5, but that also is not quite what we want - what is executed is `cds run`, not `cds deploy`.

This is because of the default launch configuration in VS Code that comes with a new CAP initialised project, in `.vscode/launch.json`:

```json
{
  "version": "0.2.0",
  "configurations": [
    {
      "name": "cds run",
      "type": "node",
      "request": "launch",
      "program": "${workspaceFolder}/.vscode/cds",
      "args": [ "run" ],
      "skipFiles": [
        "<node_internals>/**/*.js",
        "**/cds-reflect/lib/index.js",
        "**/cds/lib/index.js",
        "**/.vscode/cds.js",
      ],
      "autoAttachChildProcesses": true,
      "console": "integratedTerminal"
    }
  ]
}
```

We can see here that the argument to `cds` is "run".

00:25:30: So what we do is duplicate the launch configuration stanza, creating a new one for `cds deploy`. Then we can re-start debugging mode, choose the specific "cds deploy" launch configuration, and we end up at the breakpoint we set. Lovely!

00:27:11: In the debug console we look at the data available to us at the breakpoint, specifically `model`, which is an Array with two values, "db" and "srv". Now that we know that, reading the code in `init-from-csv` becomes easier - it looks for CSV files and loads the contents into the persistence layer via the CDS API.

00:28:45: A look at how the module uses destructuring, a feature available in ES6, to pull specific functions from `lib/utils/fs.js`, and in turn, how this custom fs module bases itself on the builtin `fs` module, via the `__proto__` feature.

00:30:55: The `_init` function in the `init-from-csv` module parses the CSV data and then inserts it into the tables. We take a quick look at what exactly the expression `.slice(0,-4)` is doing here, which looks initially a bit odd, until we realise that `slice` works on strings on a character-by-character basis: `'filename.ext'.slice(0,-4)` produces "filename".

00:34:35: Looking into where `csv.parse` comes from, which is another module in the `@sap/cds` bundle - in `lib/utils/csv.js`. There is a parsing function and a serialising function in this module - I can definitely make use of the former!

00:36:10: Someone (an SAP Inside Track Maidenhead attendee) looking at me through the internal office window, wondering, perhaps, what the heck I'm doing.
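The two techniques noted at 00:28:45 and 00:30:55 can be tried in isolation. What follows is a minimal sketch, not the actual code in `lib/utils/fs.js` or `init-from-csv.js`: the `myfs` object, its `isCsv` helper, the `entityName` function and the sample file path are all hypothetical names made up for illustration.

```javascript
const fs = require('fs')
const path = require('path')

// Hypothetical custom module: it defines one helper of its own and
// delegates everything else to the builtin fs module via the prototype chain.
const myfs = {
  __proto__: fs,
  isCsv: name => name.endsWith('.csv')
}

// Destructuring pulls out an own property (isCsv) and an inherited one (existsSync).
const { isCsv, existsSync } = myfs

// slice(0, -4) drops the last four characters, here the '.csv' extension.
const entityName = file => path.basename(file).slice(0, -4)

const sample = 'db/csv/Suppliers.csv' // hypothetical path
console.log(isCsv(sample))            // true
console.log(entityName(sample))       // Suppliers
console.log(typeof existsSync)        // function
```

Note that `slice(0, -4)` only makes sense once we know the name ends in a four-character extension, which is why a check such as `isCsv` would come first.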

00:36:40: Switching over to the CSV filter project `csvf`, and having a look at what it does:

```
=> node ./cli.js
Usage: csvf [options]

Options:
  -i, --input     Input CSV file (mandatory)
  -o, --output    Output CSV file (defaults to _out.csv)
  -f, --fields    List of fields to output (space separated)
  -h, --help      Shows this help
  -v, --verbose   Talkative mode

Example:
  csvf -i data.csv -f supplierID companyName city -o smaller.csv
```

A sample run is successful:

```
=> node ./cli.js -i tmp/Suppliers.csv --fields supplierID companyName -v
>> Processing tmp/Suppliers.csv
>> Filtering to supplierID,companyName
>> Written to _out.csv
```

and in `_out.csv` we see that we have a reduced CSV set:

```
supplierID,companyName
1,Exotic Liquids
2,New Orleans Cajun Delights
3,Grandma Kelly's Homestead
```

00:40:20: Taking a look in `cli.js` to see how we can use the csv module from `@sap/cds` in our own program - by installing `@sap/cds` in the project, I have access to all the modules in the bundle, which means I can do this:

```javascript
const csv = require('@sap/cds/lib/utils/csv')
```

I'm also using the command-line-args module which allows me to very simply build a nice command line interface with a rich set of options.

00:45:10: Cameo appearance in the room from Jan van Ansem, one of the organisers of SAP Inside Track Maidenhead!

00:45:49: Looking at my attempts to write functions that are pure, by looking at how I approached writing the logging function. While in the past I would have written a logging function to refer to a global value (outside of the function) that indicated whether log output was generally required, I wrote this logging function that didn't refer to any values outside of itself, by writing it so it can be partially applied, and then adopting the IIFE (immediately invoked function expression) technique, like this:

```javascript
const log = (isVerbose => x => isVerbose && console.log(">>", x))(options.verbose)
```

Note the two fat arrows in there, and the implicit use of a closure.
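To see the closure at work, the same expression can be given a name and applied to different flags. This is a small sketch; `makeLog`, `loud` and `quiet` are names introduced here and are not part of the csvf script.

```javascript
// The outer function captures isVerbose; the inner one does the logging.
const makeLog = isVerbose => x => isVerbose && console.log('>>', x)

const loud = makeLog(true)
const quiet = makeLog(false)

loud('Processing tmp/Suppliers.csv')  // prints: >> Processing tmp/Suppliers.csv
quiet('Processing tmp/Suppliers.csv') // prints nothing, as && short-circuits on false
```

The IIFE in the script does the same thing in one step: it calls the outer function immediately with `options.verbose`, so only the inner, already configured function is bound to `log`.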

00:50:07: Looking at the simple `serialise` function which also uses an ES6 feature, specifically default function parameters.

00:51:40: Looking at how the `csv.parse` function is used in this script:

```javascript
let [cols, ...rows] = csv.parse(src)
```

00:53:00: Looking at the `indices` function in the script, where we use the interesting function application thus:

```javascript
const selectedIndices = indices(cols)(options.fields)
```

In other words we're calling `indices(cols)` which produces a function, which we then call, passing `options.fields`.

When we look at the definition of the `indices` function, we can better understand how this works:

```javascript
const indices = ref => fields => fields.map(x => ref.indexOf(x))
```

00:55:10: Now that our confidence has grown by looking at the `indices` function, we take a quick look in the last moments at the `pick` function which is possibly a little bit more exciting:

```javascript
const pick = indices => source =>
  indices.reduce((a, x) => (_ => a)(a.push(source[x])), [])
```

One interesting thing is that I changed the original definition of this function, to switch around the order of the arguments, so it could be more easily (and usefully) partially applied. Of course, we can't continue without a quick reference to the excellent talk "Hey Underscore, You're Doing It Wrong!" by Brian Lonsdorf. If you haven't watched this talk, go and watch it now! And if you have watched it already, go and watch it again!
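The destructuring, `indices` and `pick` steps can be traced on a small in-memory table. This sketch assumes that `csv.parse` returns an array of rows, each an array of values (which the `[cols, ...rows]` destructuring suggests); the `parsed` data is a hand-written stand-in for that result, and the `city` values are purely illustrative.

```javascript
const indices = ref => fields => fields.map(x => ref.indexOf(x))
const pick = indices => source =>
  indices.reduce((a, x) => (_ => a)(a.push(source[x])), [])

// Stand-in for the result of csv.parse(src) (assumed shape)
const parsed = [
  ['supplierID', 'companyName', 'city'],
  ['1', 'Exotic Liquids', 'London'],
  ['2', 'New Orleans Cajun Delights', 'New Orleans']
]

// First row goes to cols, everything else to rows
const [cols, ...rows] = parsed

const selectedIndices = indices(cols)(['supplierID', 'companyName'])
console.log(selectedIndices) // [ 0, 1 ]

// a.push() returns the new array length, which the inner function ignores (_),
// handing back the accumulator itself so that reduce can carry on with it.
const picked = rows.map(pick(selectedIndices))
console.log(picked) // each row reduced to its supplierID and companyName values
```

A field name that is not in the header row gives an index of -1 from `indexOf`, so a more defensive version might want to check for that before picking.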

Let's finish this annotation post by looking at where that `pick` function is partially applied:

```javascript
writefile(
  options.output,
  serialise(outCols, rows.map(pick(selectedIndices))),
  'utf8',
  e => err(e) || log(`Written to ${options.output}`)
)
```

Take a moment to stare at that, especially the `map` call. And then go and watch that video! 😉
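For anyone wondering why that `map` call needs no explicit row argument, here is a sketch that puts the data-last `pick` next to a data-first variant. The `serialise` function is a hypothetical reconstruction (the post only says that it uses default function parameters), `pickDataFirst` is introduced purely for contrast, and the sample rows are illustrative.

```javascript
// Data-last and curried, as in the post: configure first, feed rows later.
const pick = indices => source =>
  indices.reduce((a, x) => (_ => a)(a.push(source[x])), [])

// Data-first variant (hypothetical): it needs a wrapper function inside map.
const pickDataFirst = (source, indices) => indices.map(i => source[i])

// Hypothetical serialise: the default parameters are an assumption, and this
// naive version does no quoting of values that contain the separator.
const serialise = (cols, rows, sep = ',', eol = '\n') =>
  [cols, ...rows].map(r => r.join(sep)).join(eol)

const rows = [
  ['1', 'Exotic Liquids', 'London'],
  ['2', 'New Orleans Cajun Delights', 'New Orleans']
]
const selectedIndices = [0, 1]

const pointFree = rows.map(pick(selectedIndices))
const wrapped = rows.map(row => pickDataFirst(row, selectedIndices))

console.log(serialise(['supplierID', 'companyName'], pointFree))
// supplierID,companyName
// 1,Exotic Liquids
// 2,New Orleans Cajun Delights
```

Both `pointFree` and `wrapped` hold the same rows; the difference is that with the data argument last, the partially applied function can be passed straight to `map`.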

- SAP Managed Tags:

- SAP Cloud Application Programming Model,

- JavaScript,

- SAP Business Technology Platform
