Using the Cloud SDK in an existing Spring app


former_member19


02-08-2019

9:31 AM

There seems to be a lot of hype these days around the S/4 Cloud SDK / new Cloud Application Programming Model. I briefly used it in the past, but decided that it was not mature enough to invest time in.

As I am between projects right now, I decided to take another look over it. Most of the blogs here on SCN focus on how to build new applications ("side by side extensions") using this SDK. Even though I have my disagreements with the approach proposed for creating new apps, I decided to look into another use case: adding stuff to existing applications.

This will be a terribly honest look into how my journey was and all the problems that I encountered.

GitHub: https://github.com/serban-petrescu/spring-training-workshop

As it happens, I have a small Spring Boot application lying around. Initially, I created it during a small workshop that I held internally at our company, but I re-purposed it to also explore the SDK.

The business context of the app is very simple: we want to help a teacher manage grades for his students. Basically, we have three phases during a university year:

During the aforementioned workshop, I implemented everything using typical Java + Spring constructs:

Before starting with the SDK, I did a small cleanup, upgraded to the latest version of Spring Boot and finally wrote a simple docker compose file for setting up a local PostgreSQL database (instead of using one installed directly on my machine).

Let's imagine the following situation: we built our Spring app and delivered it to production. Some external applications are already using the REST API that we provided. Now our customer contacts us asking if he can integrate our app into his SAP ecosystem.

As we all know, SAP took a strategic decision to go for OData as a protocol some while ago, so after some analysis we decide to also provide an OData API for our app. It goes without saying that the existing consumers should not be impacted at all or as little as possible.

Because we are unsure exactly who will consume our APIs, we also think that providing both OData V2 and OData V4 would make sense (if possible).

After reading a little around, I realized that I only need a small portion of the SDK, namely the service development part. I used Olingo a while back for creating V2 services and it was for sure not a pleasant development experience.

At a glance, it looked like I will just need to annotate some methods and that's it. Well, after thinking about it a little, I then realized there must be some hooks or listeners to trigger the annotations scanning at the very least.

First I read and went through some blogs. carlos.roggan created a nice blog series dedicated to the OData service development, so I started from there.

Initially, I dissected the V2 provider libraries, but I struggled a lot to get the correct dependencies inside my POM. So this is my first point of contention: there are a lot of dependencies and it is very difficult to navigate through them. In the BOM(s), many of them are listed, some with exclusions, basically forcing you to import the BOM even if you actually only need one single dependency.

I ended up including the following dependencies:

This seems to pull a lot of other stuff that I don't want, but I don't have a better alternative (without having to exclude manually transitive dependencies that I don't care about):

Then I added the V4 dependencies.

They are mostly symmetrical to the V2 dependencies, with two annoying differences:

Verdict: for sure it is a good thing that we can use Maven Central to pull the right dependencies. But the way in which the artifacts are organized is not clean in my view, having a lot of dependencies to each other and forcing developers to do trial-and-error rounds and include dependencies step by step until we don't get

Ok, so the first step I did was to manually create metadata for all my operations. This was fairly easy, I basically just created three entities (Group, Student, Grade), a complex type (AverageGrade) and several function imports (GetAverageGrades, GetHomeWorkCheck) to match the REST operations.

I created two metadata files: one for V2 and one for V4. This was actually the first time I created a V4 metadata file by hand and I must say that it is a lot less verbose than its V2 counterpart.

The next step is to bootstrap the V2 API. I found out that in my dependencies, I have:

I initialized everything through a configuration:

I wrote some simple controllers annotated with the SAP OData annotations (

This actually went pretty well, the annotations are pretty intuitive, although with slightly inconsistent naming (the

When running the app, I hit a problem: these annotated classes were being instantiated with

Luckily, the library uses the native ServiceLoader mechanism for creating an

Due to the structure of the entities and the REST API, I wanted to be able to directly create a student inside a group by doing

Trivia: I found out that the SDK uses the Jackson's convertValue method to transform POJOs into data maps. This means that if you put any

Verdict: This part went pretty smooth, and SAP actually used a well-known mechanism for allowing developers to connect to their framework, so I was pretty happy at this point.

The V4 configuration is very similar, with the difference that we don't have an

After doing this configuration, sh*t started hitting the fan.

It looks like there is a so-called

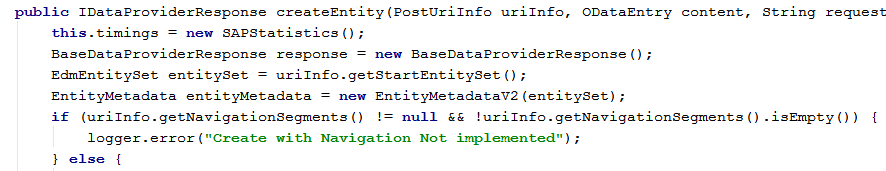

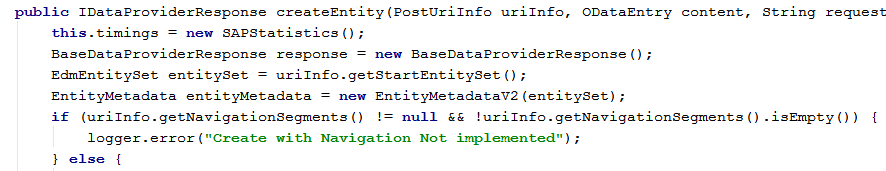

Trivia: when I was digging through this to find a solution, I stumbled upon a magnificent piece of coding:

I then thought that maybe at some point I will want to do something slightly different in V4 than in V2, so I extracted some base classes for the OData controllers and created dedicated controllers for V2 and for V4.

Now I had a slightly different problem: both V2 and V4 context listeners use the same

To achieve this, I created a simple decorator for the listeners which spoofs the

The next thing that I had to do was to give different names to the v2 and v4 services (by adjusting the metadata file names accordingly):

Lastly, the V4 seems to not use the ServiceLoader mechanism for creating controller instances as the V2 library, but instead directly does a

To circumvent this problem but still get the dependencies from Spring, I had to create a no-arg constructor in each V4 controller and get the dependencies from my

After doing this final change, my OData services were up and running.

Verdict: During this phase, I wanted to throw my laptop out of the window. It looks like the V4 libraries are of noticeably worse quality than the V2 ones. There is also a clear lack of consistency between the two (as the V2 uses the ServiceLoader concept whilst the V4 does not).

Initially I added one more listener for the V2 service: the

One positive side effect of this listener was that it adds support for XSLX. Initially, I manually added the configuration for getting this to work even without the listener:

But then I noticed that I keep seeing a random XLSX file in my project directory after making a call requesting

So I decided to scrap support for this format.

I am sure that some stuff can be done better on my side, so I would appreciate some hints.

Nevertheless, I would suggest several actions for product owning team to improve the developer experience while using the SDK:

Frankly, as a software architect I reject the notion that I should be pushed by a framework to chose a particular structure, application server, lifecycle management, testing strategy, etc. for my solutions.

It is ok to provide recommendations, but when most of the information is about this end-to-end scenario when all the technical decisions you could take are dictated by the SDK, then they are no longer just recommendations. I have a slight sense of deja vu when thinking about the SDK and its on-premise ABAP cousin, who dictates pretty firmly how an application must be structured.

As I am between projects right now, I decided to take another look at it. Most of the blogs here on SCN focus on how to build new applications ("side by side extensions") using this SDK. Even though I have my disagreements with the approach proposed for creating new apps, I decided to look into another use case: adding stuff to existing applications.

This will be a terribly honest look at how my journey went and all the problems that I encountered.

A "school" app

GitHub: https://github.com/serban-petrescu/spring-training-workshop

As it happens, I have a small Spring Boot application lying around. Initially, I created it during a small workshop that I held internally at our company, but I re-purposed it to also explore the SDK.

The business context of the app is very simple: we want to help a teacher manage grades for his students. Basically, we have three phases during a university year:

- Start of the year: the teacher gets a list of students in each group from the university.

  - He should be able to import this data into our app.

- During the course of the year: he will browse through his groups, randomly select some students for homework checks each week and grade his students.

  - He should be able to view all the groups / students / grades, add new grades into the system and also get a random subset of students for the homework check.

- At the end of the year: he will compute the average grades for each student.

  - Our app should compute this for him.

An existing code base

During the aforementioned workshop, I implemented everything using typical Java + Spring constructs:

- Flyway for managing the database structure, organized into three migrations: initial data structure, some mock data and a view for computing the averages.

- JPA entities for representing the database tables in our Java runtime + a set of Spring Data repositories for handling the communication with the DB.

- Simple transactional services containing the very little business logic of the app. These services map the entities into DTOs using specialized classes.

- Some REST controllers for exposing the services via a REST API.

Before starting with the SDK, I did a small cleanup, upgraded to the latest version of Spring Boot and finally wrote a simple docker compose file for setting up a local PostgreSQL database (instead of using one installed directly on my machine).

Our goals

Let's imagine the following situation: we built our Spring app and delivered it to production. Some external applications are already using the REST API that we provided. Now our customer contacts us asking if he can integrate our app into his SAP ecosystem.

As we all know, SAP made a strategic decision a while ago to go for OData as a protocol, so after some analysis we decide to also provide an OData API for our app. It goes without saying that the existing consumers should not be impacted at all, or at least as little as possible.

Because we are unsure exactly who will consume our APIs, we also think that providing both OData V2 and OData V4 would make sense (if possible).

Starting with the SDK

After reading around a little, I realized that I only need a small portion of the SDK, namely the service development part. I used Olingo a while back for creating V2 services and it was for sure not a pleasant development experience.

At a glance, it looked like I would just need to annotate some methods and that's it. Well, after thinking about it a little, I realized there must be some hooks or listeners to trigger the annotation scanning at the very least.

First I read and went through some blogs. carlos.roggan created a nice blog series dedicated to the OData service development, so I started from there.

Managing our dependencies

Initially, I dissected the V2 provider libraries, but I struggled a lot to get the correct dependencies inside my POM. So this is my first point of contention: there are a lot of dependencies and it is very difficult to navigate through them. In the BOM(s), many of them are listed, some with exclusions, basically forcing you to import the BOM even if you actually only need one single dependency.

I ended up including the following dependencies:

    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odata-core</artifactId>
    </dependency>
    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav2</artifactId>
        <type>pom</type>
    </dependency>
    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav2-prov</artifactId>
    </dependency>
    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav2-lib</artifactId>
    </dependency>

- odata-core seems to have dependencies and code which is decoupled from the concrete OData version.

- odatav2 seems to be a BOM-like artifact, pulling in various other modules.

- odatav2-lib seems to be a shallow wrapper around Olingo.

This seems to pull in a lot of other stuff that I don't want, but I don't have a better alternative (without having to manually exclude transitive dependencies that I don't care about):

Then I added the V4 dependencies.

    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav4</artifactId>
    </dependency>
    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav4-prov</artifactId>
    </dependency>
    <dependency>
        <groupId>com.sap.cloud.servicesdk.prov</groupId>
        <artifactId>odatav4-lib</artifactId>
        <version>${s4.sdk.version}</version>
    </dependency>

They are mostly symmetrical to the V2 dependencies, with two annoying differences:

- The odatav4-lib module's version is not managed via the BOM.

- The odatav4 "BOM", even though it contains absolutely no classes at all, is of type JAR.

Verdict: for sure it is a good thing that we can use Maven Central to pull the right dependencies. But the way in which the artifacts are organized is not clean in my view, having a lot of dependencies to each other and forcing developers to do trial-and-error rounds and include dependencies step by step until we don't get ClassNotFoundExceptions anymore.

The metadata

OK, so my first step was to manually create metadata for all my operations. This was fairly easy: I basically just created three entities (Group, Student, Grade), a complex type (AverageGrade) and several function imports (GetAverageGrades, GetHomeWorkCheck) to match the REST operations.

I created two metadata files: one for V2 and one for V4. This was actually the first time I created a V4 metadata file by hand and I must say that it is a lot less verbose than its V2 counterpart.

Bootstrapping V2

The next step is to bootstrap the V2 API. I found out that in my dependencies, I have:

- One EndPointsList servlet which exposes the OData V2 root document for each service.

- One ODataServlet which comes from Olingo and for which I have to pass a service factory (which is also from the dependencies).

- One ServiceInitializer listener which does some initialization in the various singletons that the libraries are built upon.

I initialized everything through a configuration:

    @Bean
    public ServletContextListener serviceInitializerV2() {
        return new ServiceInitializer();
    }

    @Bean
    public ServletRegistrationBean<EndPointsList> endpointsServletV2() {
        // Exposes the OData V2 root document for each service.
        ServletRegistrationBean<EndPointsList> bean = new ServletRegistrationBean<>(new EndPointsList());
        bean.addUrlMappings("/api/odata/v2/");
        return bean;
    }

    @Bean
    public ServletRegistrationBean<ODataServlet> odataServletV2(ApplicationContext context) {
        // Hand the Spring context to the custom instance provider shown further down.
        ODataInstanceProvider.setContext(context);
        ServletRegistrationBean<ODataServlet> bean = new ServletRegistrationBean<>(new ODataServlet());
        bean.setUrlMappings(Collections.singletonList("/api/odata/v2/*"));
        bean.addInitParameter("org.apache.olingo.odata2.service.factory", ServiceFactory.class.getName());
        bean.addInitParameter("org.apache.olingo.odata2.path.split", "1");
        bean.setLoadOnStartup(1);
        return bean;
    }

I wrote some simple controllers annotated with the SAP OData annotations (@Query, @Read, etc.), whose only job is to extract the data from the incoming requests, call the service and then map the result back to the OData objects.

This actually went pretty well; the annotations are pretty intuitive, although with slightly inconsistent naming (the @Function has a first-capital-letter Name property).

When running the app, I hit a problem: these annotated classes were being instantiated with newInstance, but I did not have a no-arg constructor (because of constructor-based dependency injection).

Luckily, the library uses the native ServiceLoader mechanism for creating an InstanceProvider. With some small hacks, I linked my own provider which uses the Spring Application Context for retrieving instances:

    public class ODataInstanceProvider implements InstanceProvider {
        // Set once from the Spring configuration, before the OData servlet starts.
        private static ApplicationContext context;

        static void setContext(ApplicationContext context) {
            ODataInstanceProvider.context = context;
        }

        @Override
        public Object getInstance(Class clazz) {
            // Resolve the controller from Spring instead of calling newInstance.
            return context.getBean(clazz);
        }
    }

Due to the structure of the entities and the REST API, I wanted to be able to directly create a student inside a group by doing POST Groups(1)/Students. This seems to not be possible, so I had to create some specialized POJOs for the OData side (which also have the parent entity IDs inside the child entities):

Trivia: I found out that the SDK uses Jackson's convertValue method to transform POJOs into data maps. This means that if you put any @JsonProperty annotations on your fields, they will be renamed in the output (as long as the metadata also has this name in it). I also don't know how I feel about the performance of this, considering that Jackson performs a serialization and then a de-serialization to do this mapping.

Verdict: This part went pretty smoothly, and SAP actually used a well-known mechanism for allowing developers to connect to their framework, so I was pretty happy at this point.
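The instantiation problem from this section can be reproduced without Spring or the SDK. The sketch below is only an illustration under assumptions: SketchInstanceProvider is a hypothetical stand-in for the SDK's InstanceProvider hook (the real interface may differ), RegistryInstanceProvider replaces the Spring ApplicationContext with a plain map, and GradeService / GradeController are made-up names. It shows that a reflective newInstance call fails for a class that only has an injecting constructor, while a provider indirection can hand back an instance that is already wired.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the SDK's InstanceProvider hook (not the real interface).
interface SketchInstanceProvider {
    Object getInstance(Class<?> clazz);
}

// A service and a controller wired through constructor injection.
class GradeService {
    String averageFor(String student) {
        return student + ": 9.5";
    }
}

class GradeController {
    private final GradeService service;

    GradeController(GradeService service) {
        this.service = service;
    }

    String read(String student) {
        return service.averageFor(student);
    }
}

// Stand-in for the Spring ApplicationContext: a plain map of pre-built beans.
class RegistryInstanceProvider implements SketchInstanceProvider {
    private final Map<Class<?>, Object> beans = new HashMap<>();

    <T> void register(Class<T> type, T bean) {
        beans.put(type, bean);
    }

    @Override
    public Object getInstance(Class<?> clazz) {
        Object bean = beans.get(clazz);
        if (bean == null) {
            throw new IllegalStateException("No bean registered for " + clazz.getName());
        }
        return bean;
    }
}

class InstanceProviderDemo {
    // Mimics a framework that creates handler objects reflectively.
    static boolean canInstantiateReflectively(Class<?> clazz) {
        try {
            clazz.getDeclaredConstructor().newInstance();
            return true;
        } catch (ReflectiveOperationException e) {
            // NoSuchMethodException when the class has no no-arg constructor.
            return false;
        }
    }

    public static void main(String[] args) {
        // The controller only has an injecting constructor, so this prints false.
        System.out.println(canInstantiateReflectively(GradeController.class));

        // With a provider in between, the framework receives a fully wired instance.
        RegistryInstanceProvider provider = new RegistryInstanceProvider();
        provider.register(GradeController.class, new GradeController(new GradeService()));
        GradeController controller = (GradeController) provider.getInstance(GradeController.class);
        System.out.println(controller.read("Ana"));
    }
}
```

In the real setup the map would be replaced by a lookup in the Spring context, as in the ODataInstanceProvider shown earlier.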

Bootstrapping V4

The V4 configuration is very similar, with the difference that we don't have an EndPointsList servlet:

    @Bean
    public ServletContextListener odataApplicationInitializerV4() {
        return new ODataApplicationInitializer();
    }

    @Bean
    public ServletRegistrationBean<ODataServlet> odataServletV4() {
        ServletRegistrationBean<ODataServlet> bean = new ServletRegistrationBean<>(new ODataServlet());
        bean.setUrlMappings(Collections.singletonList("/api/odata/v4/*"));
        bean.setName("ODataServletV4");
        bean.setLoadOnStartup(1);
        return bean;
    }

After doing this configuration, sh*t started hitting the fan.

It looks like there is a so-called AnnotationRepository which stores all the annotated methods. Unfortunately, this singleton class keeps everything inside a map of lists. Both the V2 and the V4 perform the same annotation scanning, so these lists will contain exact duplicates. In some well-intended logic there is a sanity check that throws an exception because of this situation.

Trivia: when I was digging through this to find a solution, I stumbled upon a magnificent piece of coding:

I then thought that maybe at some point I will want to do something slightly different in V4 than in V2, so I extracted some base classes for the OData controllers and created dedicated controllers for V2 and for V4.
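To make the duplicate-registration failure described above more tangible, here is a small stand-alone sketch. It is not the SDK's code: SketchQuery, SketchAnnotationRepository and StudentHandlers are hypothetical names, and the "exactly one handler" sanity check is only my assumption of what such a check might look like. It shows how a static map of lists ends up with duplicates when two initializers scan the same class, and how a guard on registration would avoid that.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical marker annotation standing in for the SDK's @Query.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SketchQuery {
    String entity();
}

class StudentHandlers {
    @SketchQuery(entity = "Students")
    public List<String> queryStudents() {
        return new ArrayList<>();
    }
}

// Process-wide registry: a static map of lists shared by every caller.
final class SketchAnnotationRepository {
    private static final Map<String, List<Method>> QUERY_HANDLERS = new HashMap<>();

    private SketchAnnotationRepository() {
    }

    // Registers every annotated method; optionally skips ones already present.
    static void scan(Class<?> handlerClass, boolean skipDuplicates) {
        for (Method method : handlerClass.getDeclaredMethods()) {
            SketchQuery query = method.getAnnotation(SketchQuery.class);
            if (query == null) {
                continue;
            }
            List<Method> handlers =
                    QUERY_HANDLERS.computeIfAbsent(query.entity(), key -> new ArrayList<>());
            if (skipDuplicates && handlers.contains(method)) {
                continue;
            }
            handlers.add(method);
        }
    }

    // Assumed sanity check: exactly one handler may exist per entity.
    static Method resolve(String entity) {
        List<Method> handlers = QUERY_HANDLERS.getOrDefault(entity, Collections.emptyList());
        if (handlers.size() != 1) {
            throw new IllegalStateException(
                    "Expected exactly one handler for " + entity + " but found " + handlers.size());
        }
        return handlers.get(0);
    }

    static void clear() {
        QUERY_HANDLERS.clear();
    }
}

class AnnotationScanDemo {
    public static void main(String[] args) {
        // Two initializers scanning the same class, without any guard.
        SketchAnnotationRepository.scan(StudentHandlers.class, false);
        SketchAnnotationRepository.scan(StudentHandlers.class, false);
        try {
            SketchAnnotationRepository.resolve("Students");
        } catch (IllegalStateException e) {
            System.out.println("Unguarded scan: " + e.getMessage());
        }

        // The same two scans with the guard enabled resolve cleanly.
        SketchAnnotationRepository.clear();
        SketchAnnotationRepository.scan(StudentHandlers.class, true);
        SketchAnnotationRepository.scan(StudentHandlers.class, true);
        System.out.println("Guarded scan: " + SketchAnnotationRepository.resolve("Students").getName());
    }
}
```

Since the real repository lives inside the library, a guard like this was not an option for me; separating the scanned classes per OData version was the workaround within reach.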

Now I had a slightly different problem: both V2 and V4 context listeners use the same package init parameter to determine where to perform the scanning. I needed to be able to specify a different package for each listener.

To achieve this, I created a simple decorator for the listeners which spoofs the package parameter. This hack looks like so:

    @RequiredArgsConstructor
    class SingleParameterSettingListener implements ServletContextListener {
        private static final String PARAMETER_NAME = "package";

        private final ServletContextListener delegate;
        private final String parameterValue;

        @Override
        public void contextInitialized(ServletContextEvent sce) {
            // Wrap the real context so the delegate sees the spoofed parameter.
            delegate.contextInitialized(new ServletContextEvent(new Context(sce.getServletContext())));
        }

        @Override
        public void contextDestroyed(ServletContextEvent sce) {
            this.delegate.contextDestroyed(sce);
        }

        // Tells Lombok which method must not be delegated automatically.
        private interface GetInitParameter {
            String getInitParameter(String name);
        }

        @RequiredArgsConstructor
        private class Context implements ServletContext {
            @Delegate(excludes = GetInitParameter.class)
            private final ServletContext delegate;

            @Override
            public String getInitParameter(String name) {
                if (PARAMETER_NAME.equals(name)) {
                    return parameterValue;
                } else {
                    return delegate.getInitParameter(name);
                }
            }
        }
    }

The next thing that I had to do was to give different names to the v2 and v4 services (by adjusting the metadata file names accordingly):

Lastly, V4 does not seem to use the ServiceLoader mechanism for creating controller instances the way the V2 library does, but instead directly does a newInstance call on the class.

To circumvent this problem but still get the dependencies from Spring, I had to create a no-arg constructor in each V4 controller and get the dependencies from my ODataInstanceProvider from before:

    @Component
    @RequiredArgsConstructor
    public class RandomODataV4Controller {
        private final RandomODataController base;

        // Used by the V4 library, which instantiates the class via newInstance.
        public RandomODataV4Controller() {
            // getInstanceTyped is a typed lookup helper on the provider (not shown in the earlier snippet).
            this.base = ODataInstanceProvider.getInstanceTyped(RandomODataController.class);
        }

        // annotated methods follow...
    }

After doing this final change, my OData services were up and running.
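The no-arg constructor workaround can be sketched without Spring as follows. All names here are hypothetical (BeanHolder stands in for the static context inside my ODataInstanceProvider, RandomPicker for the shared base controller, V4Facade for the V4 controller); it is a rough illustration of the pattern, not the code from the repository.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical static holder, playing the role of the provider's static context.
final class BeanHolder {
    private static final Map<Class<?>, Object> BEANS = new ConcurrentHashMap<>();

    private BeanHolder() {
    }

    static <T> void publish(Class<T> type, T bean) {
        BEANS.put(type, bean);
    }

    static <T> T lookup(Class<T> type) {
        Object bean = BEANS.get(type);
        if (bean == null) {
            throw new IllegalStateException(type.getName() + " has not been published yet");
        }
        return type.cast(bean);
    }
}

// Shared logic that would normally be injected by the container.
class RandomPicker {
    private final int groupSize;

    RandomPicker(int groupSize) {
        this.groupSize = groupSize;
    }

    int groupSize() {
        return groupSize;
    }
}

class V4Facade {
    private final RandomPicker base;

    // Constructor a DI container could use.
    V4Facade(RandomPicker base) {
        this.base = base;
    }

    // Constructor for a framework that only knows how to call newInstance.
    public V4Facade() {
        this(BeanHolder.lookup(RandomPicker.class));
    }

    int groupSize() {
        return base.groupSize();
    }
}

class StaticLookupDemo {
    // Mimics a framework that instantiates handlers reflectively.
    static V4Facade instantiateLikeFramework() {
        try {
            return V4Facade.class.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Reflective instantiation failed", e);
        }
    }

    public static void main(String[] args) {
        // The dependency must be published before the framework creates the facade.
        BeanHolder.publish(RandomPicker.class, new RandomPicker(3));
        System.out.println(instantiateLikeFramework().groupSize());
    }
}
```

The price of this pattern is an ordering constraint: the holder must be filled before the framework instantiates anything, otherwise the no-arg constructor fails.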

Verdict: During this phase, I wanted to throw my laptop out of the window. It looks like the V4 libraries are of noticeably worse quality than the V2 ones. There is also a clear lack of consistency between the two (as the V2 uses the ServiceLoader concept whilst the V4 does not).
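As a side note on the listener decorator from this section: the same parameter spoofing could probably be done without Lombok by using a JDK dynamic proxy. The sketch below is an assumption-laden illustration, not what I actually deployed. SketchContext is a hypothetical, cut-down stand-in for ServletContext (so the example runs without the servlet API), and the package names are made up; with the real API one would pass ServletContext.class to the proxy instead.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// Hypothetical, cut-down stand-in for javax.servlet.ServletContext.
interface SketchContext {
    String getInitParameter(String name);

    String getContextPath();
}

class MapBackedContext implements SketchContext {
    private final Map<String, String> parameters;

    MapBackedContext(Map<String, String> parameters) {
        this.parameters = new HashMap<>(parameters);
    }

    @Override
    public String getInitParameter(String name) {
        return parameters.get(name);
    }

    @Override
    public String getContextPath() {
        return "/api";
    }
}

final class InitParameterSpoofer {
    private InitParameterSpoofer() {
    }

    // Returns a view of the target in which one init parameter is overridden.
    static SketchContext override(SketchContext target, String parameterName, String parameterValue) {
        return (SketchContext) Proxy.newProxyInstance(
                SketchContext.class.getClassLoader(),
                new Class<?>[] {SketchContext.class},
                (proxy, method, args) -> {
                    boolean spoofed = method.getName().equals("getInitParameter")
                            && args != null
                            && args.length == 1
                            && parameterName.equals(args[0]);
                    if (spoofed) {
                        return parameterValue;
                    }
                    try {
                        // Every other call goes to the real context unchanged.
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause();
                    }
                });
    }
}

class SpooferDemo {
    public static void main(String[] args) {
        Map<String, String> parameters = new HashMap<>();
        parameters.put("package", "com.example.odata");
        SketchContext original = new MapBackedContext(parameters);

        SketchContext forV4 = InitParameterSpoofer.override(original, "package", "com.example.odata.v4");
        System.out.println(forV4.getInitParameter("package"));
        System.out.println(forV4.getContextPath());
    }
}
```

Compared with the Lombok variant, this avoids the compile-time dependency at the cost of reflection on every call, which should not matter for a context that is only read during startup.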

An extra listener

Initially I added one more listener for the V2 service: the ServletListener. Based on the listener initialization order, I encountered several problems (and I decided to just dump it):

- The CDSDataProvider would be used instead of the CXSDataProvider, so I would get errors regarding the HANA connection.

- The AnnotationContainer seems to have a list of supported annotations. All the SAP annotations for service development (@Read, @Query, etc) are added in all three listeners. The difference is that this listener does not check if they were previously added by someone else. If this is run after another listener adds these annotations, then each annotation is present twice in this container, which then causes exceptions down the road.

One positive side effect of this listener was that it adds support for XLSX. Initially, I manually added the configuration for getting this to work even without the listener:

    private void initializexlsxSupport() {
        try {
            CustomFormatProvider.setCustomFormatRepository((ICustomFormatRepository) Class.forName("com.sap.cloud.sdk.service.prov.v2.rt.core.xls.CustomFormatRepository").newInstance());
        } catch (Exception var2) {
            logger.error("Error Initializing XLSX Processor", var2);
        }
    }

But then I noticed that I kept seeing a random XLSX file in my project directory after making a call requesting $format=xlsx. After checking the source code I found another magnificent implementation:

So I decided to scrap support for this format.

Overall verdict

I am sure that some stuff can be done better on my side, so I would appreciate some hints.

Nevertheless, I would suggest several actions for the product-owning team to improve the developer experience while using the SDK:

- Improve the dependency structure or provide "starters" to allow developers to include a single dependency in the POM and get support for plain OData Vx (maybe one starter with CDS and one without).

- Some more improvements to the code base. Sorry, but it is far from clean and decoupled. Looking at the sources, I get the impression that everything is tightly coupled and in some artifacts, assumptions are even made related to the archetype usage.

- A lot of stuff is done via static properties, singletons and newInstance. This is generally bad practice and reduces the capacity of integrating your SDK with other libraries.

- Put the source code on GitHub. The source code is already readily available on Maven Central, so why not put it on GitHub? At least then you can get issues and pull requests to fix stuff like the XLSX issue from above.

- Some more documentation. IMO, the JavaDocs are sometimes useful, but at times they provide absolutely no insight.

- A more library-centric mindset: right now for me it looks like the SDK is purely focused on supporting the creation of new "side by side extensions" in a way that completely "marries" the extension with the SDK. In my view, you should consider the other use cases as well (in which only a sub-section of the SDK is used, and only in smaller components of an app).

Frankly, as a software architect I reject the notion that I should be pushed by a framework to choose a particular structure, application server, lifecycle management, testing strategy, etc. for my solutions.

It is ok to provide recommendations, but when most of the information is about this end-to-end scenario in which all the technical decisions you could take are dictated by the SDK, then they are no longer just recommendations. I have a slight sense of deja vu when thinking about the SDK and its on-premise ABAP cousin, which dictates pretty firmly how an application must be structured.



1 -

Popup in Sap analytical cloud

1 -

PostgrSQL

1 -

POSTMAN

1 -

Process Automation

2 -

Product Updates

4 -

PSM

1 -

Public Cloud

1 -

Python

4 -

python library - Document information extraction service

1 -

Qlik

1 -

Qualtrics

1 -

RAP

3 -

RAP BO

2 -

Record Deletion

1 -

Recovery

1 -

recurring payments

1 -

redeply

1 -

Release

1 -

Remote Consumption Model

1 -

Replication Flows

1 -

research

1 -

Resilience

1 -

REST

1 -

REST API

2 -

Retagging Required

1 -

Risk

1 -

Rolling Kernel Switch

1 -

route

1 -

rules

1 -

S4 HANA

1 -

S4 HANA Cloud

1 -

S4 HANA On-Premise

1 -

S4HANA

3 -

S4HANA_OP_2023

2 -

SAC

10 -

SAC PLANNING

9 -

SAP

4 -

SAP ABAP

1 -

SAP Advanced Event Mesh

1 -

SAP AI Core

8 -

SAP AI Launchpad

8 -

SAP Analytic Cloud Compass

1 -

Sap Analytical Cloud

1 -

SAP Analytics Cloud

4 -

SAP Analytics Cloud for Consolidation

3 -

SAP Analytics Cloud Story

1 -

SAP analytics clouds

1 -

SAP API Management

1 -

SAP BAS

1 -

SAP Basis

6 -

SAP BODS

1 -

SAP BODS certification.

1 -

SAP BTP

21 -

SAP BTP Build Work Zone

2 -

SAP BTP Cloud Foundry

6 -

SAP BTP Costing

1 -

SAP BTP CTMS

1 -

SAP BTP Innovation

1 -

SAP BTP Migration Tool

1 -

SAP BTP SDK IOS

1 -

SAP Build

11 -

SAP Build App

1 -

SAP Build apps

1 -

SAP Build CodeJam

1 -

SAP Build Process Automation

3 -

SAP Build work zone

10 -

SAP Business Objects Platform

1 -

SAP Business Technology

2 -

SAP Business Technology Platform (XP)

1 -

sap bw

1 -

SAP CAP

2 -

SAP CDC

1 -

SAP CDP

1 -

SAP CDS VIEW

1 -

SAP Certification

1 -

SAP Cloud ALM

4 -

SAP Cloud Application Programming Model

1 -

SAP Cloud Integration for Data Services

1 -

SAP cloud platform

8 -

SAP Companion

1 -

SAP CPI

3 -

SAP CPI (Cloud Platform Integration)

2 -

SAP CPI Discover tab

1 -

sap credential store

1 -

SAP Customer Data Cloud

1 -

SAP Customer Data Platform

1 -

SAP Data Intelligence

1 -

SAP Data Migration in Retail Industry

1 -

SAP Data Services

1 -

SAP DATABASE

1 -

SAP Dataspher to Non SAP BI tools

1 -

SAP Datasphere

9 -

SAP DRC

1 -

SAP EWM

1 -

SAP Fiori

3 -

SAP Fiori App Embedding

1 -

Sap Fiori Extension Project Using BAS

1 -

SAP GRC

1 -

SAP HANA

1 -

SAP HCM (Human Capital Management)

1 -

SAP HR Solutions

1 -

SAP IDM

1 -

SAP Integration Suite

9 -

SAP Integrations

4 -

SAP iRPA

2 -

SAP LAGGING AND SLOW

1 -

SAP Learning Class

1 -

SAP Learning Hub

1 -

SAP Master Data

1 -

SAP Odata

2 -

SAP on Azure

2 -

SAP PartnerEdge

1 -

sap partners

1 -

SAP Password Reset

1 -

SAP PO Migration

1 -

SAP Prepackaged Content

1 -

SAP Process Automation

2 -

SAP Process Integration

2 -

SAP Process Orchestration

1 -

SAP S4HANA

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Cloud for Finance

1 -

SAP S4HANA Cloud private edition

1 -

SAP Sandbox

1 -

SAP STMS

1 -

SAP successfactors

3 -

SAP SuccessFactors HXM Core

1 -

SAP Time

1 -

SAP TM

2 -

SAP Trading Partner Management

1 -

SAP UI5

1 -

SAP Upgrade

1 -

SAP Utilities

1 -

SAP-GUI

8 -

SAP_COM_0276

1 -

SAPBTP

1 -

SAPCPI

1 -

SAPEWM

1 -

sapmentors

1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

5 -

schedule

1 -

Script Operator

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

Self Transformation

1 -

Self-Transformation

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

Slow loading

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

14 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

2 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transformation Flow

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

2 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Visual Studio Code

1 -

VSCode

1 -

Vulnerabilities

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1


