Your SAP on Azure – Part 12 – Highly Available SAP...


BJarkowski

12-21-2018 9:32 AM

Using a file share to host the SAP system mount directory was introduced a couple of months ago, and since then the implementation has become significantly easier. No more manual editing of profile parameter files: everything is now handled during system provisioning. I recently went through the process again, and the changes are so big that I think it's worth revisiting my past post. As there are also a few improvements on the Azure side, I decided to write a brand-new guide and include additional information about protecting SQL Server with AlwaysOn availability groups. The file share is still provisioned using a combination of the Storage Spaces Direct and Scale-Out File Server functionalities in Windows Server 2016. To simplify the setup and avoid building large and expensive landscapes, I deployed all required components to a single two-node failover cluster.

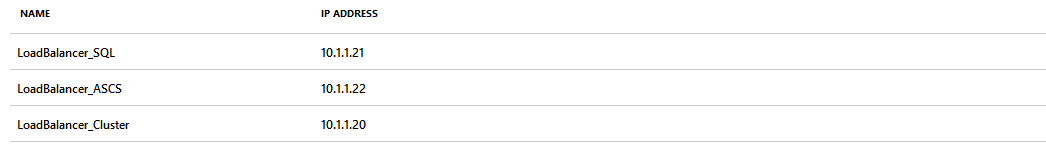

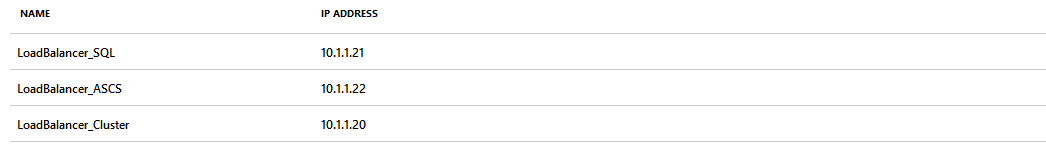

Each component deployed to failover cluster uses a separate virtual IP for communication. Such approach requires an Internal Load Balancer with three Frontend IP’s to direct traffic to a correct address. You can only use a Standard Load Balancer to distribute the traffic to VMs deployed in the Availability Zone.

Two cluster nodes are forming the backend pool:

I created three health probes to monitor the availability and distribution of services.

The last resource required is a Storage Account that I use as a Cloud Witness:

FAILOVER CLUSTER CONFIGURATION

Storage Spaces Direct uses all unallocated disks to form a storage pool which then hosts the SAP mount directory. Application and database files should reside on a separate disk that won’t be part of the storage cluster so before enabling S2D functionality I created a partition on selected disk

Next, I’m using a PowerShell script to add computers to the domain and install required features:

I decided to check the configuration before forming a cluster:

The outcome is a nice report which says which drives will be used for Storage Spaces Direct. The partition I created on one of the disks excluded it from the storage pool.

There were no errors in the report so I’m ready to build the cluster. Use the Load Balancer IP address as the cluster IP. Later we will configure load balancing rules to direct the traffic to the active node.

I use the previously created storage account as a Cloud Witness

When the cluster is operational, I can enable the Storage Spaces Direct functionality:

Four disks (two from each VM) formed a storage pool. Following command creates a new volume and mount it in the C:\ClusterStorage\

Details about the storage pool and the volume are also visible in the Server Manager:

Last PowerShell command used today will enable the Scale-Out File Server role on the cluster:

I created the SAP mount directory on the Storage Spaces Direct volume:

A file share can be created in the Failover Cluster Manager:

The highly available file share is deployed and can be used to install Central Services instance.

SQL SERVER ALWAYS ON AVAILABILITY GROUPS

Following chapter shows how to deploy a Microsoft SQL Server and enable the AlwaysOn functionality. I included only the important installation steps for the first node, but the process has to be repeated on the second VMs.

As a preparation, I created two users in the Active Directory to run the database processes:

Then during the software installation, I assigned them against Database Engine and Server Agent:

I almost forgot to change the Collation! ?

In the database engine configuration, I chose a Mixed Mode authentication and configured data directories:

Installation doesn’t take too much time:

Once the installation is completed, we have two standalone SQL Servers installed on two nodes of the cluster. As the next step we need to enable the AlwaysOn functionality and configure the replication. In the SQL Configuration Manager select “Enable AlwaysOn Availability Groups”

System account needs additional permissions to manage the availability group:

Before I continue with the AlwaysOn activation I need to create an empty database and execute a Full Backup:

You can follow the wizard to create availability group:

In the first window I’m asked to provide the Availability Group name:

Then I selected the database to replicate:

Specifies Replicas step allows to change the replication settings and include the hosts that should be included in the Availability Group. Synchronous replication minimizes the amount of lost data in case of node failure, but it comes with a negative impact to system performance. In most cases you should go with Asynchronous replication.

I haven’t created a Listener at this point.

I use Automatic Seeding to perform initial data synchronization.

When the configuration is completed, we receive a summary:

The database listener is a combination of virtual hostname and port that will be used for communication with the database. You can use the SQL Management Studio to define new listener:

The listener IP should match the Load Balancer SQL Front-end IP. Saving the settings will also create additional DNS entry in the domain.

The AlwaysOn configuration is quick and straightforward. The current cluster status can be monitored from the Cluster Manager, but the failover can only be triggered from the AlwaysOn dashboard.

Azure Load Balancer routes each request addressed to the listener to the correct host. But at this moment the Load Balancer is not aware on which node the SQL service is running. A health probe is a solution - every few seconds the Azure service will try to establish the connection to both nodes, but the health probe is active only on the host running the SQL process. This way the Load Balancer can identify the active node and direct the network traffic accordingly.

Use the following PowerShell script to create a health probe:

How to get input parameters?

Cluster Network Name – can be retrieved using command Get-ClusterNetwork

IP Resource Name – can be retrieved from IP Address properties in the failover cluster manager or using the command Get-ClusterResource | ? ResourceType -eq "IP Address"

Listener – IP address assigned to the LoadBalancer Front-End IP Address

Probe Port – port defined in the Load Balancer

Now I can define a rule on the Load Balancer to route the requests to the correct host.

You can verify the configuration using sqlcmd.

CENTRAL SERVICES INSTANCE – FIRST CLUSTER NODE

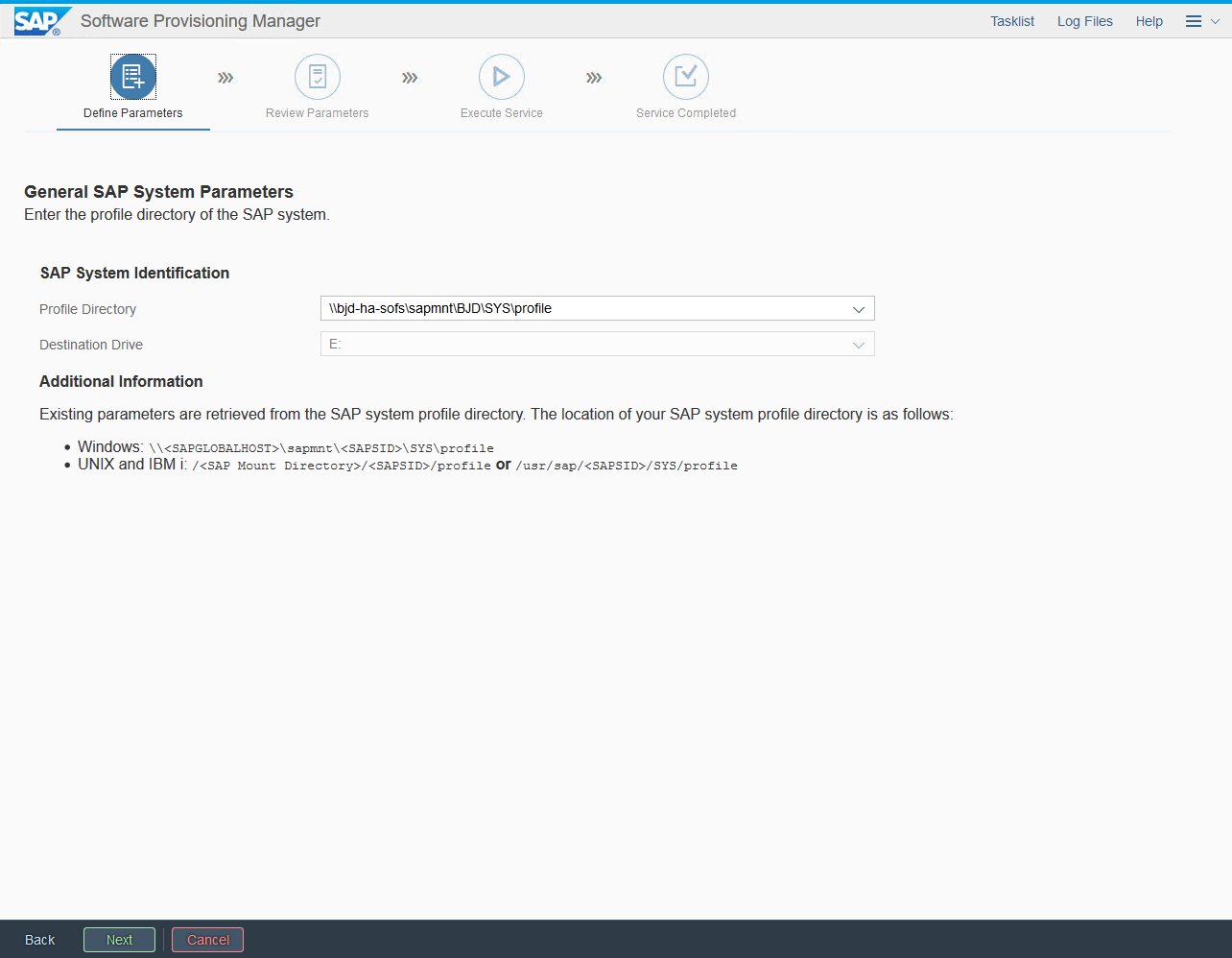

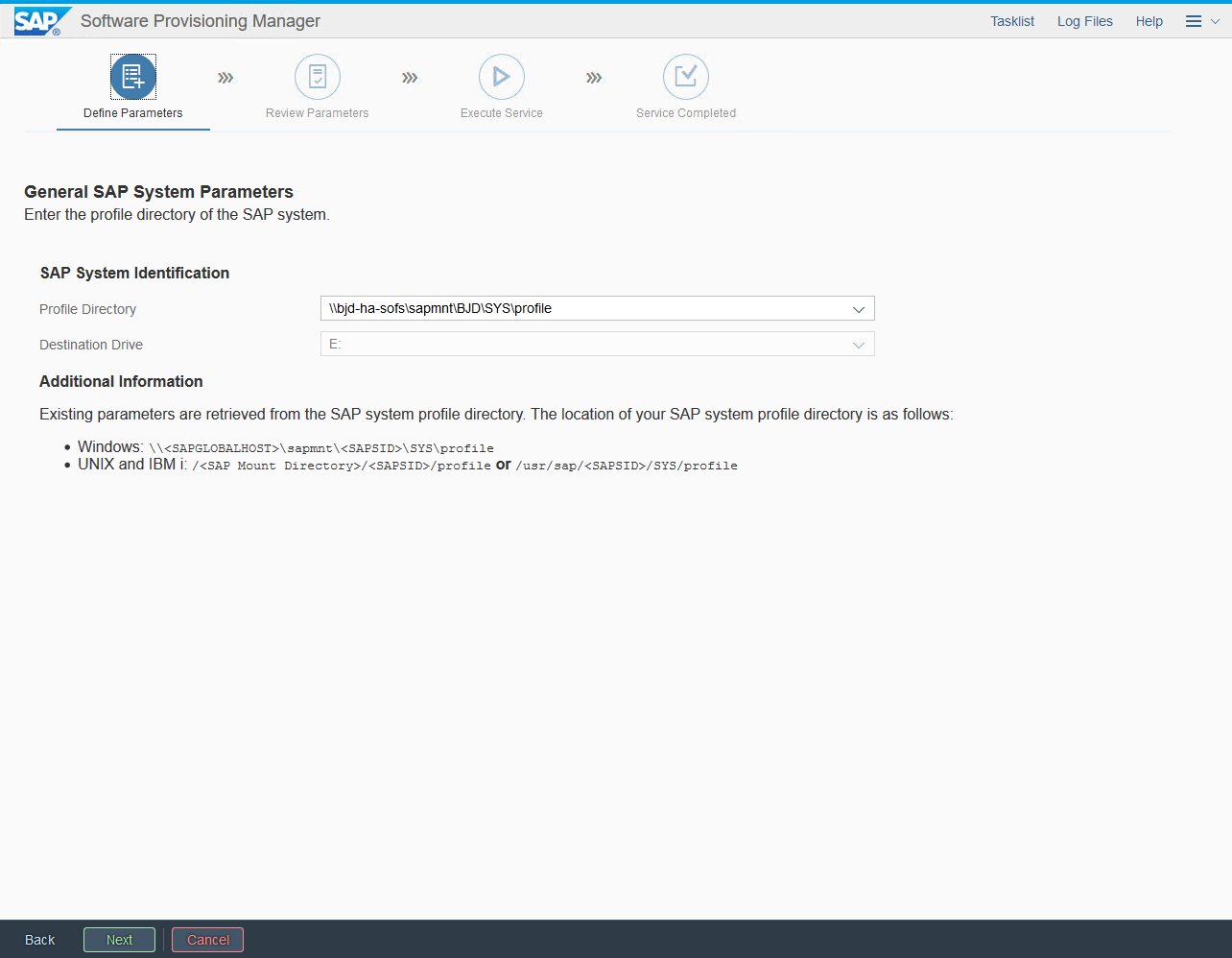

Note: SAP does not recommend using a two-node cluster to host all SAP components in a production environment. Please read the SAP NetWeaver Master Guide as well as the Installation Guide and make sure you understand all the risks that come with such an architecture. If you'd prefer to distribute the SAP components, you can still follow this guide; only the number of servers will be higher.

RESOURCE PROVISIONING IN MICROSOFT AZURE

The first innovation is visible when provisioning the virtual machines. Previously, I explained the concept of Availability Sets, which protect the system against unexpected hardware failure. Now, selected Azure regions consist of more than one datacentre. For example, the West Europe region, which I use in this guide, is divided into three physical locations with separate power supply, cooling and networking. When VMs are deployed in separate Availability Zones, the probability of both failing at the same time is much lower, and Microsoft offers a 99.99% uptime SLA. The higher availability comes with slightly higher network latency between servers, especially if there is a long distance between the zones. Always check whether that will have a negative impact on your environment. I recommend running the database and the application server in the same zone and failing them over together.

(source: Microsoft.com)

My cluster is based on two DS4_v2 VMs deployed in the West Europe region in two separate Availability Zones. I already have a Windows domain in my network, so I won't describe the steps needed to configure Active Directory; almost any guide from the internet will do.

Each VM has three data disks attached. Storage Spaces Direct needs at least two disks, and the third one is used to store application and database files.

| VM | IP | Size | Data disk 1 | Data disk 2 | Data disk 3 |
|---|---|---|---|---|---|
| Bjd-ha-0 | 10.1.1.13 | DS4_v2 | 128 GB | 128 GB | 256 GB |
| Bjd-ha-1 | 10.1.1.14 | DS4_v2 | 128 GB | 128 GB | 256 GB |
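The disk layout from the table can also be scripted. Below is a minimal sketch with the Az PowerShell module, assuming the VMs already exist in their zones; the Premium_LRS SKU, the disk names and the `Add-SapHaDataDisks` helper are my own hypothetical choices, not part of the original setup.

```powershell
# Hypothetical layout matching the table: two disks for Storage Spaces Direct, one for SAP/SQL files
$SapHaDataDisks = @(
    @{ Lun = 0; SizeGB = 128 }
    @{ Lun = 1; SizeGB = 128 }
    @{ Lun = 2; SizeGB = 256 }
)

function Add-SapHaDataDisks {
    param(
        [string]$ResourceGroupName,
        [string]$VMName,      # e.g. bjd-ha-0
        [string]$Location,    # e.g. westeurope
        [string]$Zone         # Availability Zone of the VM, e.g. '1'
    )
    $vm = Get-AzVM -ResourceGroupName $ResourceGroupName -Name $VMName
    foreach ($d in $SapHaDataDisks) {
        # A managed disk attached to a zonal VM has to be created in the same zone
        $cfg  = New-AzDiskConfig -SkuName Premium_LRS -Location $Location -CreateOption Empty -DiskSizeGB $d.SizeGB -Zone $Zone
        $disk = New-AzDisk -ResourceGroupName $ResourceGroupName -DiskName "$VMName-data$($d.Lun)" -Disk $cfg
        $vm   = Add-AzVMDataDisk -VM $vm -Name $disk.Name -CreateOption Attach -ManagedDiskId $disk.Id -Lun $d.Lun
    }
    # Push the updated disk configuration to Azure
    Update-AzVM -ResourceGroupName $ResourceGroupName -VM $vm
}
```

Run it once per node, for example `Add-SapHaDataDisks -ResourceGroupName <ResourceGroup> -VMName bjd-ha-0 -Location westeurope -Zone 1`, and again for the second node with its own zone.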

Each component deployed to the failover cluster uses a separate virtual IP for communication. This approach requires an Internal Load Balancer with three front-end IPs to direct traffic to the correct address. Only a Standard Load Balancer can distribute traffic to VMs deployed in Availability Zones.

| Frontend IP | IP | Backend pool | Health probe (port) |
|---|---|---|---|
| LoadBalancer_Cluster | 10.1.1.20 | bjd-vm | HealthProbe_Cluster (62500) |
| LoadBalancer_SQL | 10.1.1.21 | bjd-vm | HealthProbe_SQL (62501) |
| LoadBalancer_ASCS | 10.1.1.22 | bjd-vm | HealthProbe_ASCS (62502) |

The two cluster nodes form the backend pool:

I created three health probes to monitor the availability and distribution of services.
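The Load Balancer layout from the table can be sketched with the Az.Network cmdlets. This assumes an existing virtual network and subnet, a hypothetical load balancer name `bjd-ilb`, and a 5-second TCP probe that marks a node down after two failures (both values are my assumptions). Adding the two node NICs to the backend pool is not shown.

```powershell
# Front-end IPs and health probes as listed in the table
$SapHaFrontends = @(
    @{ Name = 'LoadBalancer_Cluster'; Ip = '10.1.1.20'; Probe = 'HealthProbe_Cluster'; ProbePort = 62500 }
    @{ Name = 'LoadBalancer_SQL';     Ip = '10.1.1.21'; Probe = 'HealthProbe_SQL';     ProbePort = 62501 }
    @{ Name = 'LoadBalancer_ASCS';    Ip = '10.1.1.22'; Probe = 'HealthProbe_ASCS';    ProbePort = 62502 }
)

function New-SapHaLoadBalancer {
    param(
        [string]$ResourceGroupName,
        [string]$Location,
        [string]$VNetName,
        [string]$SubnetName,
        [string]$LoadBalancerName = 'bjd-ilb',   # hypothetical name
        [string]$BackendPoolName = 'bjd-vm'
    )
    $vnet   = Get-AzVirtualNetwork -ResourceGroupName $ResourceGroupName -Name $VNetName
    $subnet = Get-AzVirtualNetworkSubnetConfig -VirtualNetwork $vnet -Name $SubnetName

    # One static private front-end IP and one TCP health probe per clustered component
    $frontends = foreach ($f in $SapHaFrontends) {
        New-AzLoadBalancerFrontendIpConfig -Name $f.Name -PrivateIpAddress $f.Ip -Subnet $subnet
    }
    $probes = foreach ($f in $SapHaFrontends) {
        New-AzLoadBalancerProbeConfig -Name $f.Probe -Protocol Tcp -Port $f.ProbePort -IntervalInSeconds 5 -ProbeCount 2
    }
    $pool = New-AzLoadBalancerBackendAddressPoolConfig -Name $BackendPoolName

    # The Standard SKU is required for VMs spread across Availability Zones
    New-AzLoadBalancer -ResourceGroupName $ResourceGroupName -Name $LoadBalancerName -Location $Location `
        -Sku Standard -FrontendIpConfiguration $frontends -BackendAddressPool $pool -Probe $probes
}
```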

The last resource required is a Storage Account that I use as a Cloud Witness:
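A sketch of creating the witness account and fetching the access key that `Set-ClusterQuorum -CloudWitness` needs later. It assumes the Az.Storage module; the Standard_LRS SKU and the helper names are my assumptions. The name check reflects Azure's rule that storage account names are 3 to 24 lowercase letters and digits.

```powershell
# Storage account names: 3-24 characters, lowercase letters and digits only
function Test-StorageAccountName {
    param([string]$Name)
    $Name -cmatch '^[a-z0-9]{3,24}$'   # -cmatch: case-sensitive, uppercase is rejected
}

function New-CloudWitnessAccount {
    param([string]$ResourceGroupName, [string]$Location, [string]$AccountName)
    if (-not (Test-StorageAccountName $AccountName)) { throw "Invalid storage account name: $AccountName" }
    # The witness stores only a tiny blob; LRS is an assumption to keep the cost low
    New-AzStorageAccount -ResourceGroupName $ResourceGroupName -Name $AccountName `
        -Location $Location -SkuName Standard_LRS -Kind StorageV2 | Out-Null
    # Return the first access key for Set-ClusterQuorum -CloudWitness -AccessKey
    (Get-AzStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $AccountName)[0].Value
}
```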

FAILOVER CLUSTER CONFIGURATION

Storage Spaces Direct uses all unallocated disks to form a storage pool, which then hosts the SAP mount directory. Application and database files should reside on a separate disk that won't be part of the storage cluster, so before enabling Storage Spaces Direct (S2D) I created a partition on the selected disk.
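The partition can also be created from PowerShell. A minimal sketch, assuming you first identify the 256 GB disk with `Get-Disk` (new Azure data disks show up as RAW); the disk number, drive letter F and volume label are hypothetical. The 64 KB allocation unit is the size commonly recommended for SQL Server data files.

```powershell
function Initialize-SapDataDisk {
    param(
        [int]$DiskNumber,          # hypothetical: number of the 256 GB disk as shown by Get-Disk
        [char]$DriveLetter = 'F'   # hypothetical drive letter
    )
    # A partitioned disk is no longer "unallocated", so S2D leaves it out of the pool
    Get-Disk -Number $DiskNumber |
        Initialize-Disk -PartitionStyle GPT -PassThru |
        New-Partition -DriveLetter $DriveLetter -UseMaximumSize |
        Format-Volume -FileSystem NTFS -AllocationUnitSize 65536 -NewFileSystemLabel 'SAPData' -Confirm:$false
}
```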

Next, I use a PowerShell script to add the computers to the domain and install the required features:

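```powershell
# Lab example only: avoid keeping real passwords in scripts
$domain = "myDomain"
$user = "username"
$password = "myPassword!" | ConvertTo-SecureString -AsPlainText -Force
$username = "$domain\$user"
$credential = New-Object System.Management.Automation.PSCredential($username, $password)

# Join the node to the Active Directory domain
Add-Computer -DomainName $domain -Credential $credential

# Features needed for the failover cluster, Storage Spaces Direct and the Scale-Out File Server
Install-WindowsFeature -Name "Failover-Clustering", "Data-Center-Bridging", "RSAT-Clustering-PowerShell", "FS-FileServer" -IncludeManagementTools

# The domain join takes effect only after a reboot
Restart-Computer
```

(source: Internet)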

I decided to check the configuration before forming a cluster:

```powershell
Test-Cluster -Node <Node1>, <Node2> -Include "Storage Spaces Direct", "Inventory", "Network", "System Configuration"
```

The outcome is a nice report showing which drives will be used for Storage Spaces Direct. The partition I created on one of the disks excluded that disk from the storage pool.

There were no errors in the report, so I'm ready to build the cluster. Use the Load Balancer cluster front-end IP address as the cluster IP. Later, we will configure load-balancing rules to direct the traffic to the active node.

```powershell
# The cluster IP is the LoadBalancer_Cluster front-end IP (10.1.1.20 in my setup)
New-Cluster -Name <ClusterName> -Node <Node1>, <Node2> -StaticAddress <LoadBalancerClusterFrontEndIP> -NoStorage
```

I use the previously created storage account as a Cloud Witness:

```powershell
Set-ClusterQuorum -CloudWitness -AccountName <StorageAccountName> -AccessKey <StorageAccountAccessKey>
```

When the cluster is operational, I can enable Storage Spaces Direct:

```powershell
Enable-ClusterStorageSpacesDirect -CimSession <ClusterName>
```

Four disks (two from each VM) formed a storage pool. The following command creates a new volume and mounts it under C:\ClusterStorage.

```powershell
New-Volume -FriendlyName "VolumeName" -FileSystem CSVFS_ReFS -StoragePoolFriendlyName S2D* -UseMaximumSize
```

Details about the storage pool and the volume are also visible in the Server Manager:

The last PowerShell command in this part enables the Scale-Out File Server role on the cluster:

```powershell
Add-ClusterScaleOutFileServerRole -Cluster <ClusterName> -Name <SOFSHostname>
```

I created the SAP mount directory on the Storage Spaces Direct volume:

A file share can be created in the Failover Cluster Manager:

The highly available file share is now deployed and can be used to install the Central Services instance.
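If you prefer PowerShell to the Failover Cluster Manager wizard, the mount directory and the share could be created roughly like this. It is a sketch under assumptions: the CSV path `C:\ClusterStorage\Volume1`, the share name `sapmnt` pointing at `usr\sap`, and an administrators-only ACL. In a real system you would also grant access to the SAP admin group and the cluster node computer accounts.

```powershell
# UNC path of the profile directory on the SOFS share (\\<host>\sapmnt\<SID>\SYS\profile)
function Get-SapProfileUncPath {
    param([string]$SOFSHostname, [string]$SapSid)
    "\\$SOFSHostname\sapmnt\$SapSid\SYS\profile"
}

function New-SapMountShare {
    param(
        [string]$SOFSHostname,                               # name used with Add-ClusterScaleOutFileServerRole
        [string]$SapSid,                                     # hypothetical, e.g. 'BJD'
        [string]$VolumePath = 'C:\ClusterStorage\Volume1',   # assumption: CSV mount point of the S2D volume
        [string[]]$FullAccess = @('BUILTIN\Administrators')  # extend with SAP admin group and node accounts
    )
    $usrSap = Join-Path $VolumePath 'usr\sap'
    New-Item -ItemType Directory -Path (Join-Path $usrSap "$SapSid\SYS") -Force | Out-Null
    # Scope the share to the SOFS name and keep it continuously available during node failover
    New-SmbShare -Name 'sapmnt' -Path $usrSap -ScopeName $SOFSHostname `
        -FullAccess $FullAccess -ContinuouslyAvailable $true
}
```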

SQL SERVER ALWAYS ON AVAILABILITY GROUPS

The following chapter shows how to deploy Microsoft SQL Server and enable the AlwaysOn functionality. I included only the important installation steps for the first node, but the process has to be repeated on the second VM.

In preparation, I created two users in Active Directory to run the database services:
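The two service accounts could also be created with the ActiveDirectory module. A minimal sketch; the helper name and the account names in the usage note are hypothetical, and the non-expiring password is a lab convenience, so follow your own password policy.

```powershell
function New-SqlServiceAccount {
    param(
        [string]$Name,          # hypothetical, e.g. 'svc-sqlengine' or 'svc-sqlagent'
        [string]$Description
    )
    # Ask for the password instead of keeping it in the script
    $password = Read-Host -Prompt "Password for $Name" -AsSecureString
    # PasswordNeverExpires: lab convenience so the SQL services keep starting
    New-ADUser -Name $Name -SamAccountName $Name -Description $Description `
        -AccountPassword $password -Enabled $true -PasswordNeverExpires $true
}
```

For example, `New-SqlServiceAccount -Name svc-sqlengine -Description 'SQL Server Database Engine'` and the same for a `svc-sqlagent` account.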

Then, during the software installation, I assigned them to the Database Engine and the SQL Server Agent:

I almost forgot to change the collation! SAP systems require SQL_Latin1_General_CP850_BIN2.
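Because the server collation is hard to change after setup, a quick check on each node is worthwhile. A sketch using `Invoke-Sqlcmd` from the SqlServer module; the helper name is hypothetical.

```powershell
$SapRequiredCollation = 'SQL_Latin1_General_CP850_BIN2'

function Test-SapSqlCollation {
    param([string]$ServerInstance)
    # SERVERPROPERTY returns sql_variant, so convert it to a string first
    $row = Invoke-Sqlcmd -ServerInstance $ServerInstance `
        -Query "SELECT CONVERT(nvarchar(128), SERVERPROPERTY('Collation')) AS Collation"
    $row.Collation -eq $SapRequiredCollation
}
```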

In the Database Engine Configuration step, I chose Mixed Mode authentication and configured the data directories:

The installation doesn't take much time:

Once the installation is completed, we have two standalone SQL Server instances on the two cluster nodes. As the next step, we need to enable the AlwaysOn functionality and configure replication. In SQL Server Configuration Manager, select “Enable AlwaysOn Availability Groups”.
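The same switch can be set with `Enable-SqlAlwaysOn` from the SqlServer module. The setting only becomes active after the SQL Server service restarts; with `-Force` the cmdlet restarts it without prompting. The helper name is hypothetical, and the node names come from the VM table.

```powershell
function Enable-AlwaysOnOnNodes {
    param([string[]]$Nodes)   # e.g. 'bjd-ha-0', 'bjd-ha-1'
    foreach ($node in $Nodes) {
        # Default instance: the computer name is the server instance name
        Enable-SqlAlwaysOn -ServerInstance $node -Force
    }
}
```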

The system account needs additional permissions to manage the availability group:

```sql
USE [master];
GO
GRANT ALTER ANY AVAILABILITY GROUP TO [NT AUTHORITY\SYSTEM];
GO
GRANT CONNECT SQL TO [NT AUTHORITY\SYSTEM];
GO
GRANT VIEW SERVER STATE TO [NT AUTHORITY\SYSTEM];
GO
```

(source: Microsoft.com)

Before I continue with the AlwaysOn activation, I need to create an empty database and run a full backup:
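Both prerequisites can be scripted with the SqlServer module. A sketch, assuming the database name is the SAP SID (hypothetical here) and a local backup path of your choice. Availability groups require the FULL recovery model, which the script sets explicitly.

```powershell
function New-SapDatabaseForAg {
    param(
        [string]$ServerInstance,   # the primary node
        [string]$DatabaseName,     # hypothetical, typically the SAP SID
        [string]$BackupFile        # e.g. a path on the data disk of the primary node
    )
    # The new database inherits the server collation (SQL_Latin1_General_CP850_BIN2)
    Invoke-Sqlcmd -ServerInstance $ServerInstance -Query @"
CREATE DATABASE [$DatabaseName];
ALTER DATABASE [$DatabaseName] SET RECOVERY FULL;
"@
    # A full backup is a prerequisite for adding the database to an availability group
    Backup-SqlDatabase -ServerInstance $ServerInstance -Database $DatabaseName -BackupFile $BackupFile
}
```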

You can follow the wizard to create the availability group:

In the first window, I'm asked to provide the Availability Group name:

Then I selected the database to replicate:

The Specify Replicas step lets you change the replication settings and choose the hosts to include in the Availability Group. Synchronous replication minimizes the amount of data lost in case of a node failure, but it has a negative impact on system performance. In most cases you should go with asynchronous replication. Keep in mind that automatic failover requires synchronous commit, so with asynchronous replicas every failover is manual.

I haven’t created a Listener at this point.

I use Automatic Seeding to perform the initial data synchronization.

When the configuration is completed, we receive a summary:
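For reference, the wizard result can be approximated in T-SQL run through `Invoke-Sqlcmd`. This is a sketch, not what the wizard literally executes: it assumes database mirroring endpoints already listen on TCP 5022 on both nodes, and the AG name, domain FQDN and helper names are hypothetical. It mirrors the choices above: asynchronous commit, manual failover and automatic seeding.

```powershell
# One REPLICA ON clause per node
function Get-AgReplicaClause {
    param([string]$Node, [string]$DomainFqdn)
    "N'$Node' WITH (ENDPOINT_URL = N'TCP://$Node.$($DomainFqdn):5022', AVAILABILITY_MODE = ASYNCHRONOUS_COMMIT, FAILOVER_MODE = MANUAL, SEEDING_MODE = AUTOMATIC)"
}

function Get-CreateAgSql {
    param([string]$AgName, [string]$DatabaseName, [string]$Primary, [string]$Secondary, [string]$DomainFqdn)
    $replicas = @($Primary, $Secondary) | ForEach-Object { Get-AgReplicaClause -Node $_ -DomainFqdn $DomainFqdn }
    "CREATE AVAILABILITY GROUP [$AgName] FOR DATABASE [$DatabaseName] REPLICA ON " + ($replicas -join ', ') + ';'
}

function New-SapAvailabilityGroup {
    param([string]$AgName, [string]$DatabaseName, [string]$Primary, [string]$Secondary, [string]$DomainFqdn)
    # 1. Create the group on the primary replica
    $sql = Get-CreateAgSql -AgName $AgName -DatabaseName $DatabaseName -Primary $Primary -Secondary $Secondary -DomainFqdn $DomainFqdn
    Invoke-Sqlcmd -ServerInstance $Primary -Query $sql
    # 2. Join the secondary and allow automatic seeding to create the database there
    Invoke-Sqlcmd -ServerInstance $Secondary `
        -Query "ALTER AVAILABILITY GROUP [$AgName] JOIN; ALTER AVAILABILITY GROUP [$AgName] GRANT CREATE ANY DATABASE;"
}
```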

The database listener is a combination of a virtual hostname and a port used for communication with the database. You can use SQL Server Management Studio to define a new listener:

The listener IP should match the Load Balancer SQL front-end IP. Saving the settings also creates an additional DNS entry in the domain.
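The listener can also be added in T-SQL on the primary replica. A sketch: the default IP is the LoadBalancer_SQL front-end from the table, while the listener name, the /24 subnet mask and port 1433 are assumptions to adjust to your network.

```powershell
function Add-SapAgListener {
    param(
        [string]$Primary,
        [string]$AgName,
        [string]$ListenerName,                   # hypothetical DNS name used later by SWPM
        [string]$ListenerIp = '10.1.1.21',       # LoadBalancer_SQL front-end IP
        [string]$SubnetMask = '255.255.255.0',   # assumption: mask of the cluster subnet
        [int]$Port = 1433
    )
    Invoke-Sqlcmd -ServerInstance $Primary -Query @"
ALTER AVAILABILITY GROUP [$AgName]
ADD LISTENER N'$ListenerName' (WITH IP ((N'$ListenerIp', N'$SubnetMask')), PORT = $Port);
"@
}
```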

The AlwaysOn configuration is quick and straightforward. The current cluster status can be monitored in Failover Cluster Manager, but failover should be triggered only from SQL Server, for example from the AlwaysOn dashboard, and not by moving the role in Failover Cluster Manager.

Azure Load Balancer routes each request addressed to the listener to the correct host. At this point, however, the Load Balancer doesn't know on which node the SQL Server service is running. A health probe solves this: every few seconds, the Azure service tries to connect to the probe port on both nodes, but the port answers only on the node that currently owns the listener IP resource. This way, the Load Balancer can identify the active node and direct the network traffic accordingly.

Use the following PowerShell script to create a health probe:

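```powershell
$ClusterNetworkName = "<ClusterNetworkName>"   # cluster network name
$IPResourceName = "<IPResourceName>"           # IP resource of the AG listener
$ListenerILBIP = "<n.n.n.n>"                   # Load Balancer SQL front-end IP
[int]$ListenerProbePort = <nnnnn>              # health probe port defined in the Load Balancer

Import-Module FailoverClusters

# Bind the listener IP with a /32 mask and open the probe port on the node owning the resource
Get-ClusterResource $IPResourceName | Set-ClusterParameter -Multiple @{"Address"="$ListenerILBIP";"ProbePort"=$ListenerProbePort;"SubnetMask"="255.255.255.255";"Network"="$ClusterNetworkName";"EnableDhcp"=0}

# The new settings take effect only after the IP resource is taken offline and brought back online
```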

(source: Microsoft.com)

How do you get the input parameters?

- Cluster Network Name: retrieve it with the Get-ClusterNetwork command.
- IP Resource Name: retrieve it from the IP Address properties in Failover Cluster Manager or with the command Get-ClusterResource | ? ResourceType -eq "IP Address".
- Listener: the IP address assigned to the Load Balancer front-end IP.
- Probe Port: the port defined in the Load Balancer health probe.

Now I can define a rule on the Load Balancer to route the requests to the correct host.
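A sketch of that rule with Az.Network. It ties the LoadBalancer_SQL front-end, the bjd-vm pool and HealthProbe_SQL together and enables Floating IP, which the listener needs because packets must arrive with the listener IP as destination. The load balancer name, rule name and port 1433 are hypothetical defaults.

```powershell
function Add-SqlListenerLbRule {
    param(
        [string]$ResourceGroupName,
        [string]$LoadBalancerName = 'bjd-ilb',   # hypothetical name
        [int]$Port = 1433
    )
    $lb    = Get-AzLoadBalancer -ResourceGroupName $ResourceGroupName -Name $LoadBalancerName
    $fe    = Get-AzLoadBalancerFrontendIpConfig -LoadBalancer $lb -Name 'LoadBalancer_SQL'
    $pool  = Get-AzLoadBalancerBackendAddressPoolConfig -LoadBalancer $lb -Name 'bjd-vm'
    $probe = Get-AzLoadBalancerProbeConfig -LoadBalancer $lb -Name 'HealthProbe_SQL'
    # Floating IP (direct server return): the active node owns the listener IP as a cluster resource
    $lb | Add-AzLoadBalancerRuleConfig -Name 'lbrule-sql' -FrontendIpConfiguration $fe `
        -BackendAddressPool $pool -Probe $probe -Protocol Tcp -FrontendPort $Port -BackendPort $Port `
        -EnableFloatingIP | Set-AzLoadBalancer
}
```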

You can verify the configuration using sqlcmd:

```
sqlcmd -S <listenerName> -E
```
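A slightly broader check covers the three things that typically go wrong: DNS, the Load Balancer path and the SQL connection itself. A sketch with a hypothetical helper name; run it from a VM in the same virtual network. `@@SERVERNAME` shows which node currently answers for the listener.

```powershell
function Test-SqlListener {
    param([string]$ListenerName, [int]$Port = 1433)
    # 1. The listener name should resolve to the LoadBalancer_SQL front-end IP
    Resolve-DnsName -Name $ListenerName -Type A | Select-Object Name, IPAddress
    # 2. The port should be reachable through the Load Balancer
    Test-NetConnection -ComputerName $ListenerName -Port $Port | Select-Object TcpTestSucceeded
    # 3. The node currently serving the listener
    Invoke-Sqlcmd -ServerInstance "$ListenerName,$Port" -Query 'SELECT @@SERVERNAME AS ServerName'
}
```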

CENTRAL SERVICES INSTANCE – FIRST CLUSTER NODE

To make SAP NetWeaver highly available, the Central Services instance has to be installed on both nodes of the cluster. Start the Software Provisioning Manager and select the installation on the First Cluster Node.

In my initial blog post about using a file share as the SAP mount directory, most of the configuration had to be performed manually. Fortunately, this deployment option is now available directly in the Software Provisioning Manager.

In SAP System Cluster Parameters, type the desired system ID. The network name is the hostname that the Central Services instance uses for communication. It has to be associated with the ASCS Load Balancer front-end IP, and the DNS entry should exist before you click Next. In File Share Host Name, enter the Scale-Out File Server hostname that you chose during the initial cluster configuration.
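The DNS entry can be created with the DnsServer module. A sketch: the DNS server, zone and ASCS hostname are hypothetical parameters, and the default IP is the LoadBalancer_ASCS front-end from the table.

```powershell
function Add-AscsDnsRecord {
    param(
        [string]$DnsServer,               # hypothetical: one of your domain controllers
        [string]$ZoneName,                # your Active Directory DNS zone
        [string]$AscsHostName,            # the Network Name entered in SWPM
        [string]$AscsIp = '10.1.1.22'     # LoadBalancer_ASCS front-end IP
    )
    Add-DnsServerResourceRecordA -ComputerName $DnsServer -ZoneName $ZoneName -Name $AscsHostName -IPv4Address $AscsIp
}
```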

Choose the instance numbers carefully. If you use different instance numbers than shown below, some of the load balancer rules will require an update:

When the installation is completed, I can create a health probe assigned to the ASCS cluster IP resource.
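The probe setting follows the same pattern as the SQL listener script, only with the ASCS values from the Load Balancer table. A parameterized sketch; the helper name is hypothetical, and the IP resource name can be looked up with `Get-ClusterResource | Where-Object ResourceType -eq 'IP Address'`.

```powershell
function Set-ClusterIpProbe {
    param(
        [string]$IPResourceName,         # IP resource of the SAP Central Services cluster group
        [string]$ClusterNetworkName,     # from Get-ClusterNetwork
        [string]$FrontEndIp = '10.1.1.22',
        [int]$ProbePort = 62502
    )
    Get-ClusterResource $IPResourceName | Set-ClusterParameter -Multiple @{
        Address    = $FrontEndIp
        ProbePort  = $ProbePort
        SubnetMask = '255.255.255.255'
        Network    = $ClusterNetworkName
        EnableDhcp = 0
    }
    # As with the listener, restart the IP resource (offline, then online) to apply the change
}
```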

The Load Balancer configuration has to be extended with rules for the Central Services instance ports: 3210, 3310, 3610, 3910, 51013, 51016 and 8110.
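These ports follow SAP's instance-number pattern (32NN, 33NN, 36NN, 39NN, 5NN13, 5NN16 and 81NN), so the list above corresponds to ASCS instance number 10. The sketch below derives the list for any instance number and creates one Floating IP rule per port. The load balancer and rule names are hypothetical.

```powershell
function Get-SapAscsLbPorts {
    param([ValidatePattern('^\d{2}$')][string]$InstanceNumber)
    $nn = [int]$InstanceNumber
    @(
        3200 + $nn            # 32NN
        3300 + $nn            # 33NN
        3600 + $nn            # 36NN, message server
        3900 + $nn            # 39NN
        50013 + 100 * $nn     # 5NN13, SAP start service (HTTP)
        50016 + 100 * $nn     # 5NN16
        8100 + $nn            # 81NN, message server HTTP
    )
}

function Add-AscsLbRules {
    param(
        [string]$ResourceGroupName,
        [string]$LoadBalancerName = 'bjd-ilb',   # hypothetical name
        [string]$InstanceNumber = '10'
    )
    $lb    = Get-AzLoadBalancer -ResourceGroupName $ResourceGroupName -Name $LoadBalancerName
    $fe    = Get-AzLoadBalancerFrontendIpConfig -LoadBalancer $lb -Name 'LoadBalancer_ASCS'
    $pool  = Get-AzLoadBalancerBackendAddressPoolConfig -LoadBalancer $lb -Name 'bjd-vm'
    $probe = Get-AzLoadBalancerProbeConfig -LoadBalancer $lb -Name 'HealthProbe_ASCS'
    foreach ($port in (Get-SapAscsLbPorts -InstanceNumber $InstanceNumber)) {
        # Floating IP: the ASCS IP is a cluster resource owned by the active node
        $lb = $lb | Add-AzLoadBalancerRuleConfig -Name "lbrule-ascs-$port" -FrontendIpConfiguration $fe `
            -BackendAddressPool $pool -Probe $probe -Protocol Tcp -FrontendPort $port -BackendPort $port -EnableFloatingIP
    }
    $lb | Set-AzLoadBalancer
}
```

For example, `Get-SapAscsLbPorts -InstanceNumber 00` returns the ports for an instance number 00 installation, which is handy if you deviate from the numbers used here.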

The status of the Central Services instance is displayed in the SAP Management Console:

DATABASE INSTANCE

The database is running and the Central Services instance is deployed on the first node, so it's time to start the database instance installation.

At the beginning, the Software Provisioning Manager asks for the path to the profile directory. Of course, we should provide the file share created using the Scale-Out File Server:

The installer also asks for the database connection. Please use the database listener and not the node hostname! Otherwise, the profile parameters will contain incorrect information, and the communication won't go through the Load Balancer but directly to a single SQL Server node. If you can't see the listener name, please revisit the SQL Server configuration, as most probably something is wrong. Possible reasons are a missing listener or a problem with the DNS entry.

Select the previously created database which is replicated to the secondary node:

Because multiple components are installed on the same host, the standard memory configuration doesn't apply.
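One way to leave room for the SAP instances is to cap SQL Server's memory on both replicas. A DS4_v2 VM has 28 GiB (28672 MB) of RAM; how much of it to reserve for the ASCS, the dialog instance and Windows is your decision, and the numbers in the test are purely hypothetical. The helper names are also hypothetical.

```powershell
# Memory left for SQL Server after subtracting the reservations for other components
function Get-SqlMemoryBudgetMB {
    param([int]$TotalMB, [int[]]$ReservedMB)
    [int]($TotalMB - ($ReservedMB | Measure-Object -Sum).Sum)
}

function Set-SqlMaxServerMemory {
    param([string]$ServerInstance, [int]$MaxMemoryMB)
    # 'max server memory (MB)' is an advanced option, so it has to be made visible first
    Invoke-Sqlcmd -ServerInstance $ServerInstance -Query @"
EXEC sp_configure 'show advanced options', 1; RECONFIGURE;
EXEC sp_configure 'max server memory (MB)', $MaxMemoryMB; RECONFIGURE;
"@
}
```

Apply the same value on both nodes, because after a failover the secondary replica runs next to the same SAP components.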

The database instance installation takes the most time, as it reads the installation data and imports it into the database during the Import Proper step.

SECONDARY ASCS NODE

Install the Central Services instance on the secondary node. The process is basically the same as for the primary node, so I don't think it requires additional explanation.

SECONDARY SQL NODE

SQL Server AlwaysOn works a bit differently from standard high-availability solutions. Instead of using shared storage, it uses a replication mechanism to propagate the data from the primary to the secondary node. This approach has a lot of benefits, but it also requires additional work from us.

Not all SQL Server objects are created within the database; some apply to the entire SQL Server instance. For example, logins don't belong to the database and therefore won't be replicated to the secondary node, so they would have to be re-created manually. Fortunately, SAP has a ready solution, and we don't have to copy the objects ourselves.
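To see which logins exist on only one of the nodes, for example before and after the step below, you can compare the server principals of both instances. A sketch with a hypothetical helper name; it lists SQL logins (S), Windows logins (U) and Windows groups (G) and skips the internal `##` certificate logins.

```powershell
function Compare-SqlLogins {
    param([string]$PrimaryNode, [string]$SecondaryNode)
    $query = "SELECT name FROM sys.server_principals WHERE type IN ('S','U','G') AND name NOT LIKE '##%'"
    $p = Invoke-Sqlcmd -ServerInstance $PrimaryNode   -Query $query | ForEach-Object name
    $s = Invoke-Sqlcmd -ServerInstance $SecondaryNode -Query $query | ForEach-Object name
    # SideIndicator '<=' means only on the primary, '=>' only on the secondary
    Compare-Object -ReferenceObject $p -DifferenceObject $s
}
```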

This step requires the database to be running on the currently inactive node, so before starting the Software Provisioning Manager you have to perform a failover. That's also a good test of whether SQL AlwaysOn works correctly. Open the dashboard and follow the wizard to fail over the database:
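The failover can also be triggered with `Switch-SqlAvailabilityGroup`, which has to run against the target secondary replica. A planned failover without data loss needs a synchronous-commit replica in the SYNCHRONIZED state; with asynchronous replicas you either switch them to synchronous commit temporarily or perform a forced failover that may lose data. The helper names are hypothetical, and the path assumes a default instance.

```powershell
# SQL Server PowerShell provider path of an availability group on a default instance
function Get-SqlAgPath {
    param([string]$Node, [string]$AgName)
    "SQLSERVER:\Sql\$Node\DEFAULT\AvailabilityGroups\$AgName"
}

function Invoke-SapAgFailover {
    param(
        [string]$TargetNode,     # current secondary that should become primary
        [string]$AgName,
        [switch]$AllowDataLoss   # forced failover, committed transactions may be lost
    )
    $path = Get-SqlAgPath -Node $TargetNode -AgName $AgName
    if ($AllowDataLoss) {
        Switch-SqlAvailabilityGroup -Path $path -AllowDataLoss -Force
    }
    else {
        # Planned failover: requires a synchronized, synchronous-commit replica
        Switch-SqlAvailabilityGroup -Path $path
    }
}
```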

Now you can start the Software Provisioning Manager and configure the SQL Cluster node:

Provide the details of the current installation. Don't forget to update the domain name:

Unlike during the Central Services installation, this time it's OK to provide the node details:

The installer asks pretty much the same questions as during the database installation.

When the process is finished, you can go ahead and install the dialog instances.

APPLICATION INSTANCE

A highly available SAP NetWeaver system requires at least two application server instances, one per cluster node. Open SWPM and select the Primary Application Server installation on one of the hosts; when the installation is completed, start the deployment of the Additional Application Server.

The process is again very similar to what we’ve done before, so I won’t go screen by screen. Remember to point the system to the correct file share.

You need to provide the instance number. I decided to go for 00 on both nodes:

Provide the message server port and the file share that stores the transport files:

A few moments later, the installation is complete:

Congratulations! You have completed the setup of SAP NetWeaver running on a two-node failover cluster!

HOW TO MANAGE THE CLUSTER

The easiest way to manage the cluster is to use the tools delivered by SAP and Microsoft. To start and stop the SAP Central Services instance and check where the processes are currently running, I recommend using Failover Cluster Manager:
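The same information is available from the FailoverClusters module. A sketch with hypothetical helper names; it assumes the Central Services role created by SWPM is the cluster group named `SAP <SID>`, so check the actual name with the status function first.

```powershell
function Get-SapClusterStatus {
    # Which node currently owns each clustered role, and whether it is online
    Get-ClusterGroup | Select-Object Name, OwnerNode, State
}

function Move-SapCentralServices {
    param([string]$SapSid, [string]$TargetNode)
    # Assumption: the ASCS role is the cluster group 'SAP <SID>'
    Move-ClusterGroup -Name "SAP $SapSid" -Node $TargetNode
}
```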

To see details about the database replication, I use the AlwaysOn dashboard available in SQL Server Management Studio.
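The core of the dashboard can be queried from the HADR catalog and dynamic management views. A sketch with a hypothetical helper name; run it against the primary replica, because on a secondary the DMV returns only the local replica.

```powershell
function Get-AgReplicaHealth {
    param([string]$ServerInstance)   # the current primary replica
    Invoke-Sqlcmd -ServerInstance $ServerInstance -Query @"
SELECT ar.replica_server_name,
       ars.role_desc,
       ar.availability_mode_desc,
       ars.synchronization_health_desc
FROM sys.dm_hadr_availability_replica_states AS ars
JOIN sys.availability_replicas AS ar ON ar.replica_id = ars.replica_id;
"@
}
```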

You can start and stop the SAP dialog instances using SAP MMC. Don't use it to manage the Central Services instance.
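For scripting, the `sapcontrol` command line offers the same start, stop and status functions for a dialog instance. A minimal wrapper sketch, assuming `sapcontrol.exe` from the SAP kernel is on the PATH and the current user is allowed to call it (otherwise sapcontrol also accepts explicit user credentials). The helper name is hypothetical. Like SAP MMC, keep it away from the clustered Central Services instance.

```powershell
function Invoke-SapControl {
    param(
        [ValidatePattern('^\d{2}$')][string]$InstanceNumber,           # 00 for the dialog instances in this guide
        [ValidateSet('GetProcessList', 'Start', 'Stop')][string]$Function
    )
    & sapcontrol.exe -nr $InstanceNumber -function $Function
}
```

For example, `Invoke-SapControl -InstanceNumber 00 -Function GetProcessList` lists the work processes of the local dialog instance.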

Wow! That's quite a long post, but I hope this optimised scenario lets you practise managing highly available SAP solutions without spending a fortune on Azure VMs!


1 -

dbacockpit

1 -

Debugging

2 -

Delimiting Pay Components

1 -

Delta Integrations

1 -

Destination

3 -

Destination Service

1 -

Developer extensibility

1 -

Developing with SAP Integration Suite

1 -

Devops

1 -

digital transformation

1 -

Documentation

1 -

Dot Product

1 -

DQM

1 -

dump database

1 -

dump transaction

1 -

e-Invoice

1 -

E4H Conversion

1 -

Eclipse ADT ABAP Development Tools

2 -

edoc

1 -

edocument

1 -

ELA

1 -

Embedded Consolidation

1 -

Embedding

1 -

Embeddings

1 -

Employee Central

1 -

Employee Central Payroll

1 -

Employee Central Time Off

1 -

Employee Information

1 -

Employee Rehires

1 -

Enable Now

1 -

Enable now manager

1 -

endpoint

1 -

Enhancement Request

1 -

Enterprise Architecture

1 -

ETL Business Analytics with SAP Signavio

1 -

Euclidean distance

1 -

Event Dates

1 -

Event Driven Architecture

1 -

Event Mesh

2 -

Event Reason

1 -

EventBasedIntegration

1 -

EWM

1 -

EWM Outbound configuration

1 -

EWM-TM-Integration

1 -

Existing Event Changes

1 -

Expand

1 -

Expert

2 -

Expert Insights

2 -

Exploits

1 -

Fiori

14 -

Fiori Elements

2 -

Fiori SAPUI5

12 -

Flask

1 -

Full Stack

8 -

Funds Management

1 -

General

1 -

General Splitter

1 -

Generative AI

1 -

Getting Started

1 -

GitHub

8 -

Grants Management

1 -

GraphQL

1 -

groovy

1 -

GTP

1 -

HANA

6 -

HANA Cloud

2 -

Hana Cloud Database Integration

2 -

HANA DB

2 -

HANA XS Advanced

1 -

Historical Events

1 -

home labs

1 -

HowTo

1 -

HR Data Management

1 -

html5

8 -

HTML5 Application

1 -

Identity cards validation

1 -

idm

1 -

Implementation

1 -

input parameter

1 -

instant payments

1 -

Integration

3 -

Integration Advisor

1 -

Integration Architecture

1 -

Integration Center

1 -

Integration Suite

1 -

intelligent enterprise

1 -

iot

1 -

Java

1 -

job

1 -

Job Information Changes

1 -

Job-Related Events

1 -

Job_Event_Information

1 -

joule

4 -

Journal Entries

1 -

Just Ask

1 -

Kerberos for ABAP

8 -

Kerberos for JAVA

8 -

KNN

1 -

Launch Wizard

1 -

Learning Content

2 -

Life at SAP

5 -

lightning

1 -

Linear Regression SAP HANA Cloud

1 -

local tax regulations

1 -

LP

1 -

Machine Learning

2 -

Marketing

1 -

Master Data

3 -

Master Data Management

14 -

Maxdb

2 -

MDG

1 -

MDGM

1 -

MDM

1 -

Message box.

1 -

Messages on RF Device

1 -

Microservices Architecture

1 -

Microsoft Universal Print

1 -

Middleware Solutions

1 -

Migration

5 -

ML Model Development

1 -

Modeling in SAP HANA Cloud

8 -

Monitoring

3 -

MTA

1 -

Multi-Record Scenarios

1 -

Multiple Event Triggers

1 -

Myself Transformation

1 -

Neo

1 -

New Event Creation

1 -

New Feature

1 -

Newcomer

1 -

NodeJS

2 -

ODATA

2 -

OData APIs

1 -

odatav2

1 -

ODATAV4

1 -

ODBC

1 -

ODBC Connection

1 -

Onpremise

1 -

open source

2 -

OpenAI API

1 -

Oracle

1 -

PaPM

1 -

PaPM Dynamic Data Copy through Writer function

1 -

PaPM Remote Call

1 -

PAS-C01

1 -

Pay Component Management

1 -

PGP

1 -

Pickle

1 -

PLANNING ARCHITECTURE

1 -

Popup in Sap analytical cloud

1 -

PostgrSQL

1 -

POSTMAN

1 -

Process Automation

2 -

Product Updates

4 -

PSM

1 -

Public Cloud

1 -

Python

4 -

Qlik

1 -

Qualtrics

1 -

RAP

3 -

RAP BO

2 -

Record Deletion

1 -

Recovery

1 -

recurring payments

1 -

redeply

1 -

Release

1 -

Remote Consumption Model

1 -

Replication Flows

1 -

research

1 -

Resilience

1 -

REST

1 -

REST API

2 -

Retagging Required

1 -

Risk

1 -

Rolling Kernel Switch

1 -

route

1 -

rules

1 -

S4 HANA

1 -

S4 HANA Cloud

1 -

S4 HANA On-Premise

1 -

S4HANA

3 -

S4HANA_OP_2023

2 -

SAC

10 -

SAC PLANNING

9 -

SAP

4 -

SAP ABAP

1 -

SAP Advanced Event Mesh

1 -

SAP AI Core

8 -

SAP AI Launchpad

8 -

SAP Analytic Cloud Compass

1 -

Sap Analytical Cloud

1 -

SAP Analytics Cloud

4 -

SAP Analytics Cloud for Consolidation

3 -

SAP Analytics Cloud Story

1 -

SAP analytics clouds

1 -

SAP BAS

1 -

SAP Basis

6 -

SAP BODS

1 -

SAP BODS certification.

1 -

SAP BTP

21 -

SAP BTP Build Work Zone

2 -

SAP BTP Cloud Foundry

6 -

SAP BTP Costing

1 -

SAP BTP CTMS

1 -

SAP BTP Innovation

1 -

SAP BTP Migration Tool

1 -

SAP BTP SDK IOS

1 -

SAP Build

11 -

SAP Build App

1 -

SAP Build apps

1 -

SAP Build CodeJam

1 -

SAP Build Process Automation

3 -

SAP Build work zone

10 -

SAP Business Objects Platform

1 -

SAP Business Technology

2 -

SAP Business Technology Platform (XP)

1 -

sap bw

1 -

SAP CAP

2 -

SAP CDC

1 -

SAP CDP

1 -

SAP CDS VIEW

1 -

SAP Certification

1 -

SAP Cloud ALM

4 -

SAP Cloud Application Programming Model

1 -

SAP Cloud Integration for Data Services

1 -

SAP cloud platform

8 -

SAP Companion

1 -

SAP CPI

3 -

SAP CPI (Cloud Platform Integration)

2 -

SAP CPI Discover tab

1 -

sap credential store

1 -

SAP Customer Data Cloud

1 -

SAP Customer Data Platform

1 -

SAP Data Intelligence

1 -

SAP Data Migration in Retail Industry

1 -

SAP Data Services

1 -

SAP DATABASE

1 -

SAP Dataspher to Non SAP BI tools

1 -

SAP Datasphere

9 -

SAP DRC

1 -

SAP EWM

1 -

SAP Fiori

2 -

SAP Fiori App Embedding

1 -

Sap Fiori Extension Project Using BAS

1 -

SAP GRC

1 -

SAP HANA

1 -

SAP HCM (Human Capital Management)

1 -

SAP HR Solutions

1 -

SAP IDM

1 -

SAP Integration Suite

9 -

SAP Integrations

4 -

SAP iRPA

2 -

SAP Learning Class

1 -

SAP Learning Hub

1 -

SAP Odata

2 -

SAP on Azure

1 -

SAP PartnerEdge

1 -

sap partners

1 -

SAP Password Reset

1 -

SAP PO Migration

1 -

SAP Prepackaged Content

1 -

SAP Process Automation

2 -

SAP Process Integration

2 -

SAP Process Orchestration

1 -

SAP S4HANA

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Cloud for Finance

1 -

SAP S4HANA Cloud private edition

1 -

SAP Sandbox

1 -

SAP STMS

1 -

SAP successfactors

3 -

SAP SuccessFactors HXM Core

1 -

SAP Time

1 -

SAP TM

2 -

SAP Trading Partner Management

1 -

SAP UI5

1 -

SAP Upgrade

1 -

SAP Utilities

1 -

SAP-GUI

8 -

SAP_COM_0276

1 -

SAPBTP

1 -

SAPCPI

1 -

SAPEWM

1 -

sapmentors

1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

4 -

schedule

1 -

Script Operator

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

Self Transformation

1 -

Self-Transformation

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

14 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

2 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transformation Flow

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

2 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Visual Studio Code

1 -

VSCode

1 -

Vulnerabilities

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1
