Installing SAP Data Hub

11-17-2018

10:24 AM

In my last blog post I wrote about what it means for SAP Data Hub to be a containerized application. Today I want to talk about installing SAP Data Hub. Everything in this post applies to SAP Data Hub 2.3 or newer.

There is a prelude to this: for SAP TechEd I had submitted a MeetUp about installing SAP Data Hub. I wanted to demonstrate the installation live during this MeetUp… and unfortunately realized a few hours beforehand that the MeetUp room had no monitor ☹. I improvised with a few hastily prepared whiteboard drawings, and these drawings are the basis for this blog post.

The official documentation covers the installation of SAP Data Hub in depth (in particular here and here). This blog post is clearly not meant to let you install SAP Data Hub without consulting the documentation. Instead, I would like to complement the documentation by looking a bit behind the scenes.

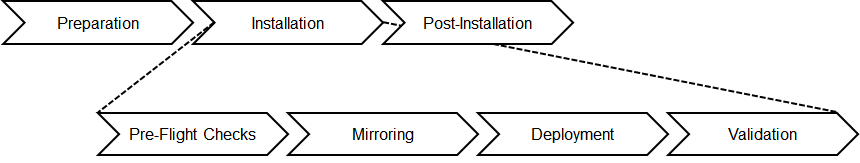

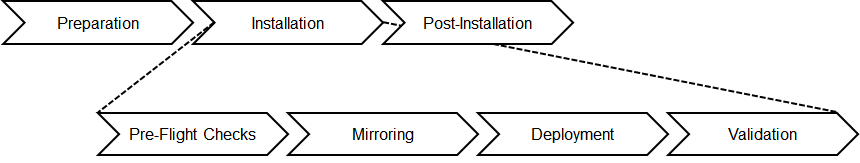



Overview of an installation

Simply put, every installation of SAP Data Hub, regardless of where you install the software, consists of three phases: preparation, installation and post-installation.

The installation phase itself consists of four sub-phases: pre-flight checks, mirroring, deployment and validation. This is how it looks:

During the installation you deal with several different “things”. I have tried to depict them in the following diagram:

Preparation

During the preparation phase you set up an installation host (1), which runs the installation procedure, as well as the Kubernetes cluster (2) and the “local” container registry (3) that the installation procedure uses to install SAP Data Hub.

Remark: If SAP Data Hub is operated as part of an SAP system landscape, the recommended way to install it is SAP Maintenance Planner (see here). Alternatively, you can use the command line tool (install.sh) delivered with SAP Data Hub. Today I will use the command line tool. I might write a separate blog post about SAP Maintenance Planner… or maybe a colleague will. Behind the scenes, install.sh always runs, so what you learn today remains valid.

The installation host (1) is a Linux machine, either physical or virtual. It must meet certain requirements; for example, it needs Docker, Python, the Kubernetes command-line tool (kubectl) and the Kubernetes package manager (helm) installed.
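Before going further, you can quickly confirm that the tools named above are present on the installation host. The following is a minimal sketch, not part of SAP Data Hub: it only checks whether each executable is on the PATH, not whether its version meets the documented requirements. The executable name `python3` is an assumption about your host.

```python
import shutil
from typing import Dict, Iterable

# Tools named in the text; "python3" as the Python executable name is an assumption.
REQUIRED_TOOLS = ("docker", "python3", "kubectl", "helm")


def tool_availability(tools: Iterable[str] = REQUIRED_TOOLS) -> Dict[str, bool]:
    """Map each tool name to True if an executable of that name is on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    # Print a simple ok/MISSING report for the installation host.
    for tool, found in tool_availability().items():
        print(f"{tool:8} {'ok' if found else 'MISSING'}")
```

Version checks (for example via `helm version`) are deliberately left out, since the required versions depend on the SAP Data Hub release.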

The installation procedure for SAP Data Hub assumes that you already have a running Kubernetes cluster (2) as well as a “local” container registry (3). Just like the installation host, both must meet certain requirements. For example, the Kubernetes cluster (2) needs to consist of at least three nodes (all details can be found here).
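The three-node minimum is easy to verify from the installation host. A minimal sketch, assuming you feed it the output of `kubectl get nodes -o json`; it counts only nodes whose `Ready` condition is `True`, since a node that is registered but not ready cannot run workloads.

```python
import json
from typing import List

MIN_NODES = 3  # minimum stated in the text; check the documentation for your version


def ready_nodes(kubectl_json: str) -> List[str]:
    """Return the names of nodes whose Ready condition is True.

    Expects the JSON printed by `kubectl get nodes -o json`.
    """
    data = json.loads(kubectl_json)
    names = []
    for item in data.get("items", []):
        conditions = item.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            names.append(item["metadata"]["name"])
    return names


def enough_nodes(kubectl_json: str, minimum: int = MIN_NODES) -> bool:
    """True if at least `minimum` nodes are ready."""
    return len(ready_nodes(kubectl_json)) >= minimum
```

In practice you would obtain the input with `subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True, check=True).stdout`.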

The steps to spin up the cluster and the registry differ depending on whether you install SAP Data Hub on-premise or in the cloud (and on which cloud provider). I do not want to bloat this blog post by listing the individual commands.

Installation

After you have prepared for the installation, you install SAP Data Hub. To do so, you first download the software archive from the SAP Software Download Center (4) to the installation host (1):

After unpacking the software archive (in this example DHFOUNDATION03_3-80004015.zip, i.e. SAP Data Hub 2.3 patch 3), you find the following folder structure on the installation host (1):
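If you script the unpacking step, one detail matters: Python's `zipfile` does not restore Unix permission bits, so `install.sh` may lose its executable flag (the `unzip` command avoids this). A minimal sketch under that observation; it does not assume whether `install.sh` sits at the archive root or inside a top-level folder, and simply returns the directory of the shallowest `install.sh` it finds.

```python
import zipfile
from pathlib import Path
from typing import Optional


def unpack(archive: Path, target: Path) -> Optional[Path]:
    """Extract the software archive and return the directory containing install.sh.

    Returns None if the archive contains no install.sh.
    """
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(target)
    scripts = [p for p in target.rglob("install.sh") if p.is_file()]
    if not scripts:
        return None
    # Prefer the shallowest match in case other copies are nested deeper.
    script = min(scripts, key=lambda p: len(p.parts))
    # zipfile drops permission bits, so re-add the execute bits.
    script.chmod(script.stat().st_mode | 0o111)
    return script.parent
```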

Now the fun begins. You start the installation by running the command line tool (install.sh). It has two mandatory parameters: the Kubernetes namespace into which SAP Data Hub is deployed and the “local” container registry (3). You can start the installation like this:

The command line tool supports many additional parameters. If you do not pass them, it prompts for (some of) them later.
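If you wrap the call in a script, it helps to validate the two mandatory inputs before invoking install.sh. A minimal sketch: the namespace check follows the Kubernetes rule that namespace names are RFC 1123 labels (lowercase alphanumerics and `-`, starting and ending with an alphanumeric, at most 63 characters). The flag spellings `--namespace=` and `--registry=`, and passing extra options as `--key=value`, are assumptions for illustration only; check the documentation of your SAP Data Hub version for the exact options.

```python
import re
from typing import Dict, List, Optional

# RFC 1123 label, as required by Kubernetes for namespace names.
_DNS1123_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def install_command(namespace: str, registry: str,
                    extra: Optional[Dict[str, str]] = None) -> List[str]:
    """Build the argument list for ./install.sh.

    ASSUMPTION: the flag names below are illustrative, not the documented CLI.
    """
    if len(namespace) > 63 or not _DNS1123_LABEL.fullmatch(namespace):
        raise ValueError(f"invalid Kubernetes namespace: {namespace!r}")
    if not registry:
        raise ValueError("a container registry is required")
    argv = ["./install.sh", f"--namespace={namespace}", f"--registry={registry}"]
    # Optional parameters; anything omitted is prompted for by install.sh.
    for key, value in (extra or {}).items():
        argv.append(f"--{key}={value}")
    return argv
```

The resulting list can be run from the unpacked directory with `subprocess.run(argv, check=True)`; because install.sh is interactive, it inherits the terminal for its prompts.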

Pre-Flight Checks

After you have started the installation, the command line tool performs a couple of checks to make sure that the prerequisites for installing SAP Data Hub are fulfilled. These checks are meant to prevent the installation from breaking halfway, much like the checks a pilot performs before takeoff to minimize the risk of a crash. Hence SAP’s developers called them “pre-flight checks”:
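The idea behind pre-flight checks is to find problems before anything is changed. The following sketch illustrates that pattern in general terms; it is not how install.sh is implemented. One design choice here is an assumption: every check runs even after a failure, so the operator sees all problems at once instead of fixing them one by one. The check names in the usage are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A named check returns None on success or a human-readable problem description.
Check = Tuple[str, Callable[[], Optional[str]]]


def run_preflight(checks: List[Check]) -> List[str]:
    """Run all checks and return the failures; an empty list means 'go'."""
    failures = []
    for name, check in checks:
        try:
            problem = check()
        except Exception as exc:  # a crashing check counts as a failure
            problem = f"check raised {exc!r}"
        if problem:
            failures.append(f"{name}: {problem}")
    return failures
```

Usage: `run_preflight([("kubectl", lambda: None), ("nodes", lambda: "only 2 ready nodes")])` returns `["nodes: only 2 ready nodes"]`, and the caller would stop before mirroring anything.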

After the pre-flight checks, the command line tool prompts for any additional parameters you did not pass when calling install.sh. Finally, it asks you to confirm the parameters (aka the “configuration”) for the installation:
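The “prompt for what is missing, then confirm” flow described above can be sketched as follows. This is an illustration of the behavior, not install.sh's code; the parameter names are hypothetical, and the `ask` function is injectable so the flow can be tested without a terminal (for secrets such as passwords you would pass `getpass.getpass` instead of `input`).

```python
from typing import Callable, Dict, Iterable


def complete_configuration(passed: Dict[str, str], required: Iterable[str],
                           ask: Callable[[str], str] = input) -> Dict[str, str]:
    """Prompt for every required parameter that was not passed (or is empty)."""
    config = dict(passed)
    for name in required:
        # Re-prompt until a non-empty value is given.
        while not config.get(name):
            config[name] = ask(f"{name}: ").strip()
    return config


def confirm(config: Dict[str, str], ask: Callable[[str], str] = input) -> bool:
    """Print the final configuration and ask for a yes/no confirmation."""
    for name, value in config.items():
        print(f"  {name} = {value}")
    return ask("Proceed with this configuration? [y/N] ").strip().lower() in ("y", "yes")
```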

Mirroring

After you have confirmed the parameters, the command line tool first mirrors the container images for SAP Data Hub. They will later be used to run the different components of SAP Data Hub on the Kubernetes cluster (2).

Mirroring means that the command line tool pulls the necessary container images from the (private) SAP container registry (5) as well as from (public) third-party container registries (6) to the installation host (1). Afterwards it tags the container images for the “local” container registry (3) and pushes them there. “Local” means the container registry used by the Kubernetes cluster (2).
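Mirroring one image boils down to three Docker commands: pull, tag, push. The sketch below only builds those commands; it assumes you are already logged in (`docker login`) to both registries and that the repository path and tag stay unchanged in the local registry, which is an assumption about naming, not a documented rule of install.sh. The registry names in the test are hypothetical.

```python
from typing import List


def retag(source_ref: str, source_registry: str, local_registry: str) -> str:
    """Swap the registry prefix of an image reference for the local registry."""
    prefix = source_registry.rstrip("/") + "/"
    if not source_ref.startswith(prefix):
        raise ValueError(f"{source_ref!r} does not come from {source_registry!r}")
    # Keep the repository path and tag unchanged (naming assumption).
    return local_registry.rstrip("/") + "/" + source_ref[len(prefix):]


def mirror_commands(source_ref: str, source_registry: str,
                    local_registry: str) -> List[List[str]]:
    """Return the docker pull, tag and push commands that mirror one image."""
    target = retag(source_ref, source_registry, local_registry)
    return [
        ["docker", "pull", source_ref],
        ["docker", "tag", source_ref, target],
        ["docker", "push", target],
    ]
```

Each command list can be executed with `subprocess.run(cmd, check=True)`. The `docker tag` step is why every image shows up twice on the installation host afterwards.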

The following screenshot shows some of the container images on the installation host (1):

You can see that each container image is listed twice:

- Once tagged with the container registry it was pulled from, e.g. the SAP container registry (73554900100200008830.dockersrv.repositories.sap.ondemand.com) or Docker Hub (docker.io).

- Once tagged with the container registry it was pushed to, i.e. the “local” container registry (3) used by the Kubernetes cluster (2). In this example this is the container registry eu.gcr.io/…234664.
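To see which copy is which, you can derive the registry from each image reference. A minimal sketch that roughly follows Docker's normalization rule: the first path component is treated as a registry host only if it contains a `.` or `:` or is `localhost`; otherwise the image comes from Docker Hub, which is why short names such as `busybox` belong to docker.io. The `eu.gcr.io/my-project/...` references in the test are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def registry_of(image_ref: str) -> str:
    """Return the registry host of an image reference, defaulting to Docker Hub."""
    first, sep, _ = image_ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def by_registry(image_refs: Iterable[str]) -> Dict[str, List[str]]:
    """Group image references (e.g. from `docker images`) by registry host."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for ref in image_refs:
        groups[registry_of(ref)].append(ref)
    return dict(groups)
```

The input could come from `docker images --format '{{.Repository}}'`, one reference per line.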

The SAP container registry (5) contains the container images for all versions (support packages, patches) of SAP Data Hub, whereas the command line tool is “bound” to one version of SAP Data Hub (in this example SAP Data Hub 2.3 patch 3). All container images relevant for that version are listed in the ./tools/images.sh file inside the software archive downloaded from the SAP Software Download Center (4).

Deployment

After all necessary container images have been mirrored, the command line tool deploys the different components of SAP Data Hub using the Kubernetes package manager (helm). At the end of the deployment, all containers needed by SAP Data Hub run on the Kubernetes cluster (2). The cluster now looks similar to this (the screenshot shows all running containers):

The files helm needs are stored in the ./deployment directory inside the software archive downloaded from the SAP Software Download Center (4).
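To check the state of the deployment yourself, you can list the pods in the SAP Data Hub namespace that are not (yet) running. A minimal sketch, assuming the output of `kubectl get pods -n <namespace> -o json` as input. Pods of completed jobs report the phase `Succeeded` and are therefore not flagged; note that the phase `Running` alone does not guarantee that every container in the pod is ready.

```python
import json
from typing import List


def pods_not_running(kubectl_json: str) -> List[str]:
    """Return names of pods whose phase is neither Running nor Succeeded.

    Expects the JSON printed by `kubectl get pods -n <namespace> -o json`.
    """
    data = json.loads(kubectl_json)
    return [
        pod["metadata"]["name"]
        for pod in data.get("items", [])
        if pod.get("status", {}).get("phase") not in ("Running", "Succeeded")
    ]
```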

Validation

Finally, install.sh runs a couple of validations to ensure that SAP Data Hub is functional. The following screenshot shows the output in case all validations are successful:

The validations include:

- Creation of tables in SAP Vora, execution of several queries (vora-cluster)

- Execution of smoke tests for Spark (vora-sparkonk8s)

  Remark: Certain features of SAP Data Hub use Spark and run Spark workloads on the Kubernetes cluster.

- Connection to SAP Data Hub System Management and verification of installed applications, e.g. Connection Management, Metadata Explorer, Vora Tools (vora-vsystem)

- Verification of the SAP HANA database used by applications like the aforementioned ones (datahub-app-base-db)

You can find the detailed results of the validations in the ./logs folder:
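When a validation fails, the most recent files in the ./logs folder are usually the first place to look. A minimal sketch that lists them newest first; it makes no assumption about the file names or log format, and the default path is relative to the directory from which you ran install.sh.

```python
from pathlib import Path
from typing import List


def newest_logs(log_dir: Path = Path("logs"), limit: int = 5) -> List[Path]:
    """Return up to `limit` files below log_dir, most recently modified first."""
    files = [p for p in log_dir.rglob("*") if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:limit]
```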

Post-Installation

After you have successfully installed the software, additional post-installation steps may be necessary. Again (just like for the preparation), the steps depend on whether you install SAP Data Hub on-premise or in the cloud (and on which cloud provider). And again, I do not want to bloat this blog post by listing the individual commands. For the details, take a look at the official documentation.

Hooray, SAP Data Hub is running. You can log on with the user and password you passed to the command line tool earlier:

That’s all for now. I hope you found this blog post interesting. Next time I will most likely write something about data pipelines and workflows…
