Machine Learning Prototype - Automating ETL

Technology Blogs by SAP

11-09-2018 6:26 PM

Here's a write-up of a prototype we demonstrated at the Intel booth at SAP TechEd 2018. The prototype ran on Intel Skylake CPUs and used Machine Learning (ML) to automate ETL (Extract, Transform and Load) mappings. There's a video of the demo at the bottom.

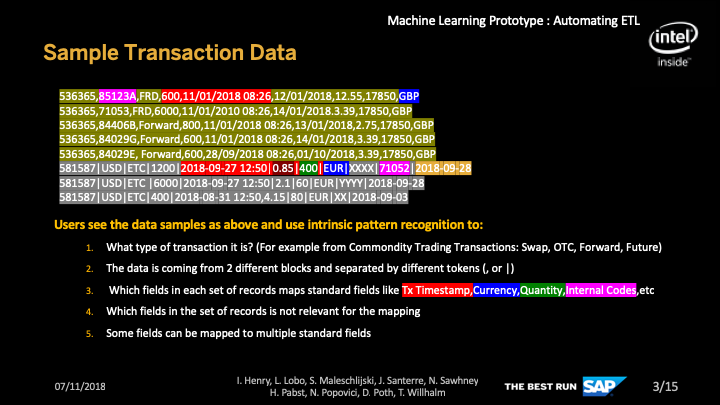

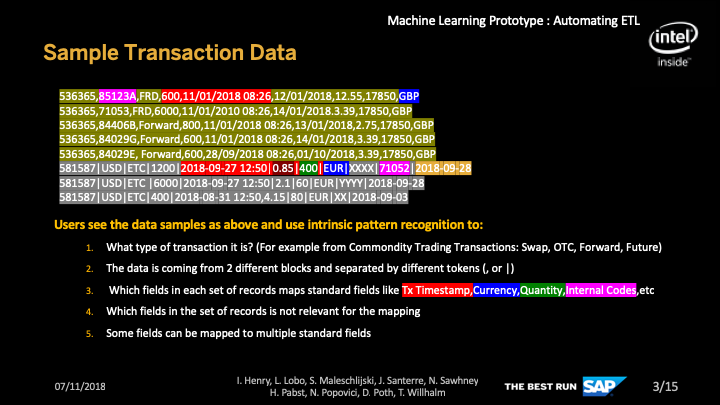

As is often the case, this was a team effort. You can see those who built this prototype on the slides, from both the SAP team and the Intel team. The main contributors I would like to recognise are nidhi.sawhney, stojanm and John Santerre.

The following components were used for the prototype:

- Python for implementing a Google TensorFlow model created with Keras

- SAP HANA Smart Data Integration (SDI) for acquiring new data

- SAP HANA External Machine Learning (EML) for inference (scoring) on new data

- SAP Analytics Cloud for model evaluation.

The scenario we are addressing is the following.

A company receives data from their customers in a variety of different formats. A single data file received could contain a variety of formats and a variety of data fields, even different types of transactions. This prevents them from using a traditional ETL approach. These files and formats do change, but the type of data remains consistent.

They have already built rules manually, which are then used for ETL mappings.

The rules are held at a row (record) level.

For each record they would know:

- What type of data it is

- What the field separator is

- The field-to-column mapping
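
To make the row-level rules concrete, here is a minimal sketch of how one such rule could be represented and applied. The `RecordRule` class, the `apply_rule` helper, the column names and the sample record are all hypothetical and invented for illustration; the post does not show how the real rules are stored.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RecordRule:
    """Hypothetical container for the three things known per record."""
    record_type: str            # what type of data (transaction) it is
    separator: str              # the field separator used in the record
    field_to_column: List[str]  # target column name for each field position


def apply_rule(raw_record: str, rule: RecordRule) -> Dict[str, str]:
    """Split a raw record and map each field to its target column."""
    fields = [field.strip() for field in raw_record.split(rule.separator)]
    if len(fields) != len(rule.field_to_column):
        raise ValueError(
            f"expected {len(rule.field_to_column)} fields, got {len(fields)}"
        )
    return dict(zip(rule.field_to_column, fields))


# Made-up rule and record, for illustration only.
rule = RecordRule("FX_TRADE", "|", ["trade_date", "currency", "amount"])
row = apply_rule("2018-10-01|GBP|1500.00", rule)
```

A rule like this only works for records that match it exactly, which is why every new layout needs another manually built rule.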

We then wanted to see if we could teach a machine to perform the same task, and what level of accuracy we could achieve.

If you look closely at the sample of data below, you should be able to see that within one file we have numerous problems that would need to be handled within an ETL process. None of these are insurmountable, but significant logic and rules are required.

- Different field separators ("," and "|")

- Different field orders, look at the currency (GBP, EUR)

- Different number of fields per record

- Different date formats

- Different type of data (transactions)
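
As a rough illustration of the hand-written logic these variations call for, the sketch below handles three of them with simple heuristics. It is not the prototype's code: the helper names, the candidate date formats and the two sample records are assumptions made up for this example.

```python
from datetime import datetime
from typing import List, Optional

CANDIDATE_SEPARATORS = [",", "|"]                        # separators named above
CANDIDATE_DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%Y%m%d"]  # assumed formats
KNOWN_CURRENCIES = {"GBP", "EUR"}                        # currencies named above


def guess_separator(record: str) -> str:
    """Pick the candidate separator that occurs most often in the record."""
    return max(CANDIDATE_SEPARATORS, key=record.count)


def parse_date(text: str) -> Optional[str]:
    """Try each known date format; return an ISO date or None."""
    for fmt in CANDIDATE_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def currency_position(fields: List[str]) -> Optional[int]:
    """Return the index of the first field that looks like a currency code."""
    for index, field in enumerate(fields):
        if field in KNOWN_CURRENCIES:
            return index
    return None


# Two made-up records with different separators, field orders and date formats.
samples = ["2018-10-01,GBP,1500.00", "TRADE|01/10/2018|250.75|EUR|DESK7"]
for record in samples:
    separator = guess_separator(record)
    fields = record.split(separator)
    dates = [d for d in map(parse_date, fields) if d is not None]
    print(separator, currency_position(fields), dates)
```

Each new separator, date format or field order means another rule to write and maintain, which is the effort the ML approach aims to reduce.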

We then wanted to teach a machine to learn how to process this data. We would train deep learning models with previously labelled data. These models learn to recognise patterns in the strings of transactions.

First, the model identifies the type of transaction, having learned from previous transactions.

Second, it performs the field-to-column identification.

To train the ML model we used both a horizontal scan and a vertical scan of the data. This allows the model to "see" the data, so that it recognises different types of data, much in the same way that we do. We also used Intel CPUs rather than GPUs, with the right configuration: a correctly compiled MKL TensorFlow Python wheel and the environment variables shown below.
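
The post does not spell out how the two scans were implemented. One possible reading, sketched here purely as an assumption, is that a horizontal scan reads each record left to right as a sequence of character codes, while a vertical scan reads the same character position down across several records. The function names, the padding value and the fixed width are all hypothetical.

```python
from typing import List

PAD = 0        # assumed padding code for records shorter than the fixed width
MAX_LEN = 64   # assumed fixed record width fed to the model


def encode_record(record: str, max_len: int = MAX_LEN) -> List[int]:
    """Encode one record as character codes, truncated or padded to max_len."""
    codes = [ord(ch) for ch in record[:max_len]]
    return codes + [PAD] * (max_len - len(codes))


def horizontal_scan(records: List[str], max_len: int = MAX_LEN) -> List[List[int]]:
    """One row per record: the characters of that record, left to right."""
    return [encode_record(record, max_len) for record in records]


def vertical_scan(records: List[str], max_len: int = MAX_LEN) -> List[List[int]]:
    """One row per character position: that position read down all records."""
    grid = horizontal_scan(records, max_len)
    return [list(column) for column in zip(*grid)]


# Tiny made-up example with two three-character records.
rows = horizontal_scan(["a,b", "c|d"], max_len=4)
columns = vertical_scan(["a,b", "c|d"], max_len=4)
```

Under this reading, the vertical view makes it easy to spot that position 1 holds a separator in both records even though the separator character differs.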

## Linux OS Variables

MKL_THREADING_LAYER=GNU

KMP_BLOCKTIME=1
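
If the training script is started from Python rather than from a pre-configured shell, the same two variables can be set at the top of the script. This is a minimal sketch, assuming the variables need to be in place before TensorFlow is imported so that the MKL and OpenMP runtimes pick them up; the helper name is hypothetical.

```python
import os

# Values taken from the Linux OS variables listed above.
INTEL_CPU_SETTINGS = {
    "MKL_THREADING_LAYER": "GNU",
    "KMP_BLOCKTIME": "1",
}


def configure_intel_cpu_env(settings=INTEL_CPU_SETTINGS):
    """Export the settings into this process's environment."""
    for name, value in settings.items():
        os.environ[name] = value


configure_intel_cpu_env()

# Assumption: import TensorFlow only after the environment is configured.
# import tensorflow as tf
```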

The architecture is shown below. Model training and scoring are performed outside of SAP HANA, using similar Intel Skylake hardware.

The specification of the Intel hardware that we used is below.

One of the challenges with ML is the need for specialised hardware with GPUs; however, this prototype showed that GPUs are not always required.

I made a short video that captures the main pieces of the Machine Learning Prototype:

- Model Training in Python

- Model Evaluation in SAP Analytics Cloud (SAC)

- Data Acquisition with SAP HANA Smart Data Integration (SDI)

- Scoring Data with SAP HANA External Machine Learning (EML)

It begins with the training of the ML model using Python.

We then evaluate the model using SAC. Here we can see that where there is very little data for a field, the model does not perform well, as it requires more data. The bars represent the record count for each of the fields, and the line is the model accuracy (F1 Score).
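
For readers who want to reproduce the two measures in that chart, here is a small sketch that computes a record count and an F1 score per field from true and predicted labels. The function name and the labels are made up for illustration and are not results from the prototype.

```python
from collections import Counter
from typing import Dict, List


def f1_per_label(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Compute F1 = 2*TP / (2*TP + FP + FN) for each label."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denominator = 2 * tp + fp + fn
        scores[label] = 2 * tp / denominator if denominator else 0.0
    return scores


# Made-up labels: "date" has a single record and is never predicted correctly.
y_true = ["amount", "amount", "amount", "currency", "date"]
y_pred = ["amount", "amount", "currency", "currency", "amount"]

counts = Counter(y_true)               # the bars: record count per field
scores = f1_per_label(y_true, y_pred)  # the line: F1 score per field
```

Plotting `counts` as bars and `scores` as a line gives the same kind of view: fields with few records tend to show the lowest F1.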

Finally, we use the SAP HANA WebIDE to acquire new data with SDI and then score this data using External Machine Learning (EML). The model captured in the video is classifying the transaction (trade) type.

SAP Managed Tags: Machine Learning, SAP Analytics Cloud, SAP TechEd, SAP HANA
