- Practical Industrial IoT Security Part 2: SAP’s La...

Technology Blogs by SAP


07-09-2018

6:36 PM

In a previous blog on this topic from earlier in the year, we already lifted the curtain on how SAP approaches security in Digital Manufacturing. With the Digital Manufacturing cloud applications released at SAPPHIRE this June, we can now complete the story and work it out in even further detail.

More on the general context and further information on what was released at SAPPHIRE can be found in Hans Thalbauer's Enable an Intelligent Digital Supply Chain of One to Transform Your Business presentation, this short video or the Manufacturing section on the SAP website.

Threat Modeling

Over the last few years, we have seen a significant number of security incidents in industrial landscapes, on top of attacks against IT infrastructure and cloud services. From the "ground zero" of Stuxnet to the WannaCry, Petya and NotPetya ransomware attacks of 2017, it is clear that threats are increasing. At the same time, IoT and Industrie 4.0 connect more things than ever, and there is a drive towards increasing automation and optimization through predictive algorithms, which promise great business benefits but further enlarge the attack surface.

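With threat modeling, we can get clarity on where the dangers in the landscape are hidden, and what we need to protect. To that end, we look at the entire landscape of the solution, and focus specifically on:

- Users and Authorizations

- Assets containing critical or sensitive data

- Data flows and communications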

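To keep things manageable, I'll make some reasonable assumptions for the rest of this article:

- All SAP products (applications and platform) have users, roles and authorization concepts built in. We will assume that best practices are applied in system configurations, that strong passwords are used for regular users, administrators and technical user accounts alike, and that appropriate roles and authorizations are assigned based on least-privilege principles.

- Systems containing our assets (that is, databases and potentially file systems, both on-premise and in the cloud) have appropriate access rights set, authentication and authorizations in place, disk encryption and file system permissions set up, and strong passwords or certificate-based authentication in use.

- Hosts in the landscape are reasonably secured against unauthorized access, both technically (OS protections used, firewalls in place, no default passwords or undocumented admin interfaces, network security, intrusion prevention and detection systems and monitoring deployed, etc.) and physically (secured data centers, authenticated access to facilities, protections against tampering, controlled access to equipment, security guards, etc.).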

I believe these assumptions are reasonable as they match common best practices, are documented in the various product and service administration, configuration and security guides, and follow common IT and security policies in large enterprises. That is of course not to say they are always applied. But it should be no surprise that such protections need to be in place. We cannot build on top of an insecure foundation.

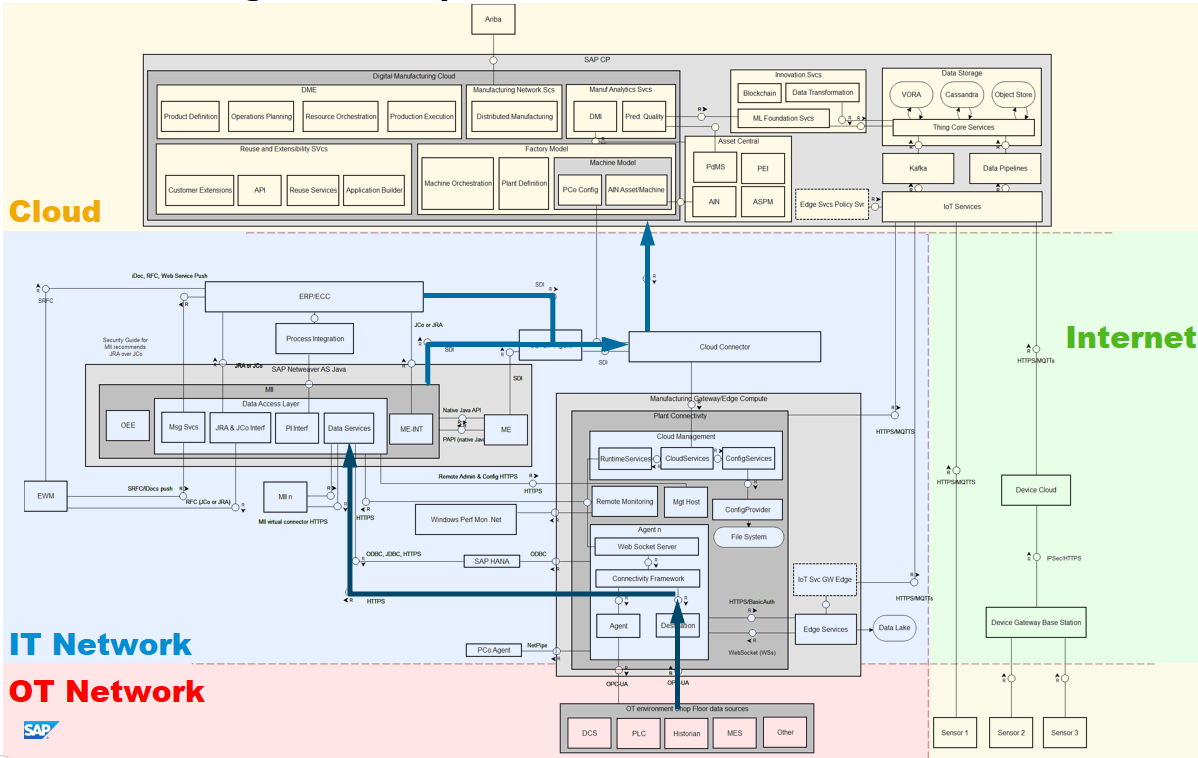

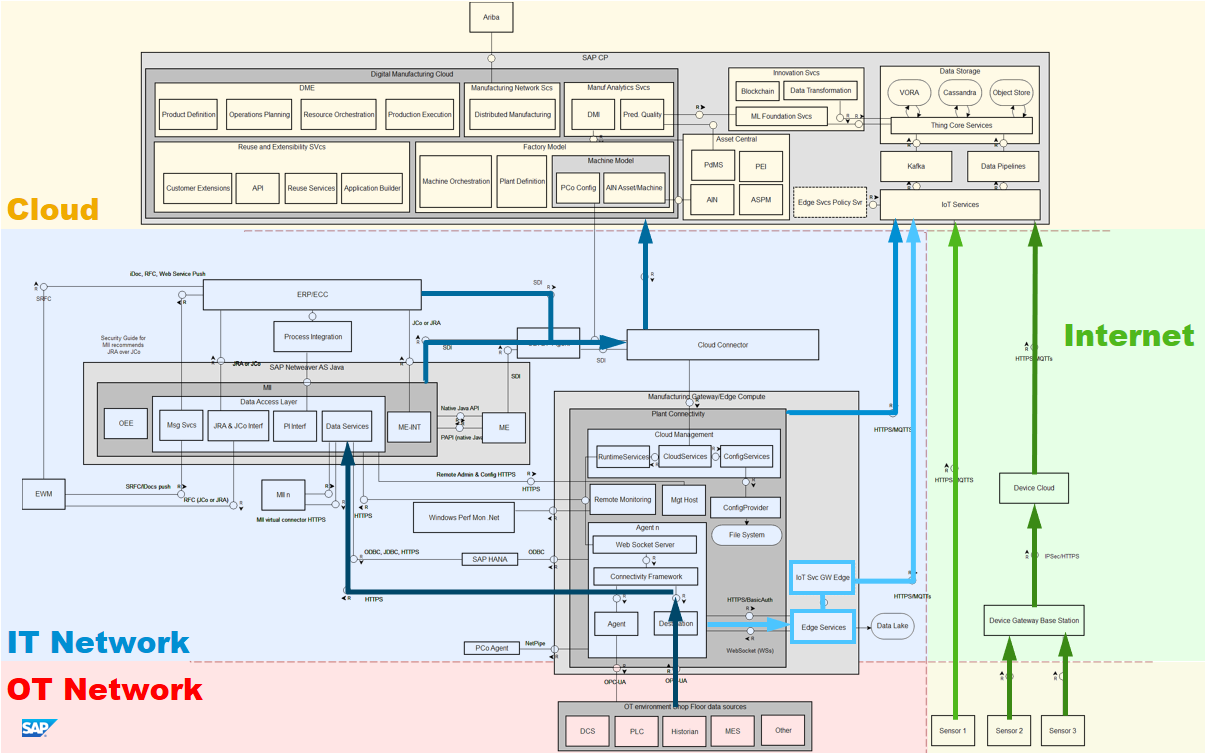

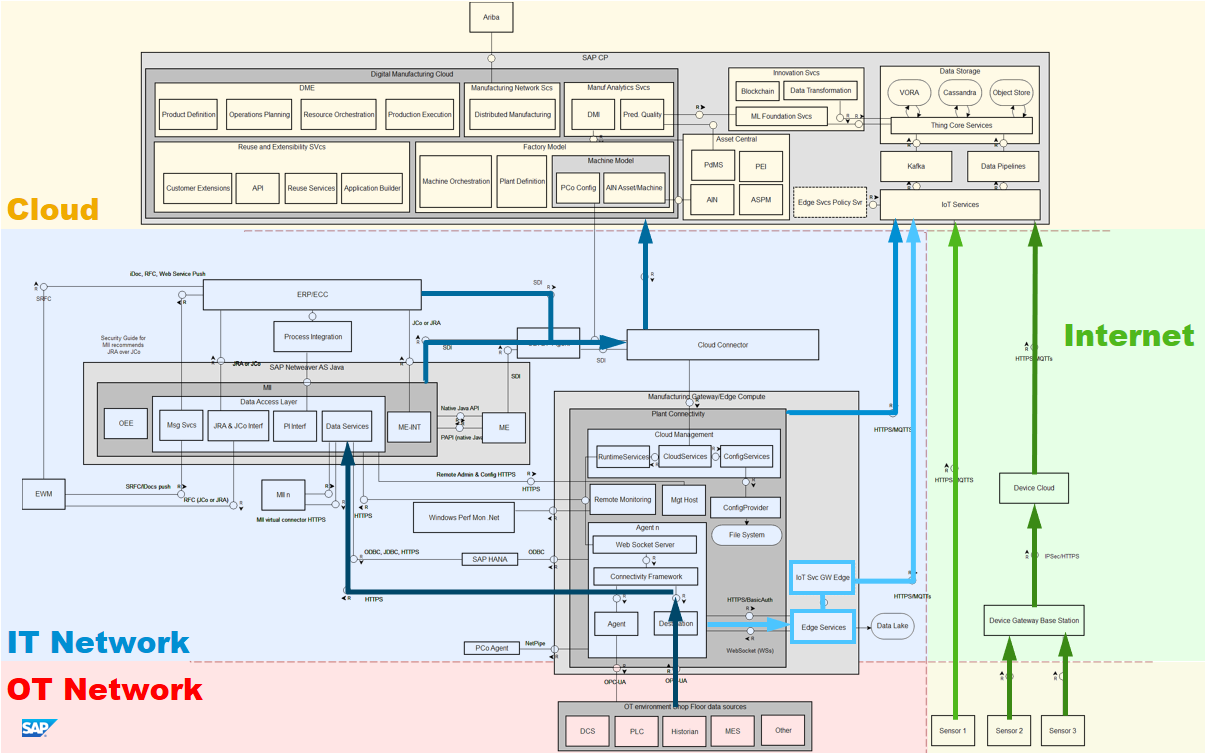

That leaves data flows and communications as the specific focus of this article. This is also where most questions arise, and where we typically see the most concerns. We will therefore return to the Digital Manufacturing landscape, with an updated diagram that reflects the recent changes and additions.

As complete as the diagram looked before in the previous article, we can now show a lot more detail in the cloud layer and further analyze the various data flows in the landscape.

Functional Overview

We discussed the components underneath the cloud section in the previous article; please refer back to it if their function is not clear. Let's briefly walk through the additions in the cloud before we dive into the data movements.

The main block is the Digital Manufacturing Cloud, the newly released cloud manufacturing suite, that includes DME, Distributed Manufacturing, DMI and Predictive Quality Management, as well as the integration with the Ariba business network. This is supported by other applications that are not strictly part of the Digital Manufacturing Suite and can also be subscribed to independently, but further enrich the offering, like Asset Intelligence Network (AIN) and Predictive Maintenance (PdMS).

On the very right is the data ingestion and storage layer. IoT Services, as we will see, is primarily for direct sensor and machine communications with the cloud. Data Pipelines handles data streaming and cloud-to-cloud integration. The DM cloud itself uses VORA and the Object Store for data, but all three can be used for sensor and machine data ingestion as makes sense for the use case, including further custom applications developed with Application Enablement. The important thing is that once the data is in the storage area, it is available to the rest of the environment, whether to pre-built applications or to customer- or partner-developed ones.

Data Flows

As we saw before, the environment is still strictly separated by network. In fact, the traditional approach of passing machine data from the shop floor through to MII is still the basis of the solution.

PlantConnectivity connects to machines in the OT network over OPC-UA, retrieves data from the tags it has subscribed to, and passes that data into MII. This data flow is already in place at SAP customers running the on-premise Manufacturing Suite.

In addition, however, we can push this data through the Cloud Connector and Cloud Platform Integration (CPI) into the cloud environment. This is primarily the real-time flow of machine data, together with the business context of what is currently being produced on the production line and the associated master data. We saw this as well in the previous article. A good reason for this flow, for instance, would be the use of DMI or DME.

What is newer is the ability for PlantConnectivity to push data directly to the cloud through IoT Services. The purpose of this data flow is a bit different. Whereas in the earlier flow the context is very much a near-real-time one, with machine data associated with a particular batch in production, this data is used for more long-term analysis, i.e. the behavior of machines over weeks, months and years. This will allow us to use machine behavior for further optimization of scheduled maintenance and productive use of the machinery.

In the next data flow, we see the interaction between PlantConnectivity and Edge Services. Edge Services allows for edge processing, and can for instance be used to aggregate data that comes in at a high frequency but that we don't need at that granularity. Think for instance of a temperature sensor that gives a reading at 20 Hz, while for our analysis we only need a value every minute. We can then capture 1,200 data points and average them, or take the minimum and maximum value (depending on the use case), and let Edge Services send only those values up to the cloud every minute. Note also that instead of sending this data to the cloud, we can store it locally on premise in a data lake, should that be preferred. Of course, any analysis of that data should then occur locally as well.
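The aggregation described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic (20 readings per second over 60 seconds gives 1,200 readings per window); it is not the Edge Services API, and the names `summarize_windows` and `MinuteSummary` are made up for this example.

```python
from statistics import mean
from typing import Iterable, Iterator, List, NamedTuple

SAMPLE_RATE_HZ = 20          # sensor frequency from the example above
WINDOW_SECONDS = 60          # we only need one value per minute
WINDOW_SIZE = SAMPLE_RATE_HZ * WINDOW_SECONDS  # 1,200 readings per window


class MinuteSummary(NamedTuple):
    """Hypothetical record that would be sent to the cloud once per minute."""
    count: int
    average: float
    minimum: float
    maximum: float


def summarize_windows(readings: Iterable[float],
                      window_size: int = WINDOW_SIZE) -> Iterator[MinuteSummary]:
    """Yield one summary for every full window of raw readings."""
    window: List[float] = []
    for value in readings:
        window.append(value)
        if len(window) == window_size:
            yield MinuteSummary(len(window), mean(window), min(window), max(window))
            window = []
    # An incomplete trailing window is dropped here; a real deployment
    # would more likely flush it on a timer.


if __name__ == "__main__":
    # Two minutes of simulated readings alternating between 20.0 and 21.0 degrees.
    simulated = [20.0 + (i % 2) for i in range(2 * WINDOW_SIZE)]
    for summary in summarize_windows(simulated):
        print(summary)
```

Whether the average, the extremes, or both are forwarded depends on the use case; the point is that 1,200 raw values are reduced to a handful before anything leaves the plant.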

The fifth data flow shows the connectivity from separate sensor networks. As explained in the previous article, running separate sensor networks completely outside the OT and IT networks provides strict network separation. Without any connectivity into those networks, the sensor networks have no ability to affect them adversely, at least not directly. Only after this data has been processed in the cloud will it be used in analysis and drive predictive models.

We see two models here. The first might be called a Device Cloud infrastructure or a managed device service, where sensors connect to a gateway base station and from there pass the data to a device cloud. From there, using cloud-to-cloud integration through IoT Services, this data is ingested into the SAP Cloud Platform. This is a model we see increasingly where sensors come with connectivity pre-packaged as a managed service (whether by a network provider, an OEM, or otherwise), and data is first ingested in such device clouds, from where it can be moved elsewhere. This is a clear trend, where a particular provider focuses just on this aspect: managing devices, device security, data ingestion and management. Cloud-to-cloud data movement is relatively easy to set up, and wouldn't require separate work for each customer. I foresee that soon we will see such providers advertise their sensor equipment as "SAP CP ready" or "AWS ready". This would really ease secure adoption and operation of sensor networks.

Alternatively, sensors and things that have their own independent connection to the internet (for instance, GSM connected devices) can send that data directly to IoT Services.

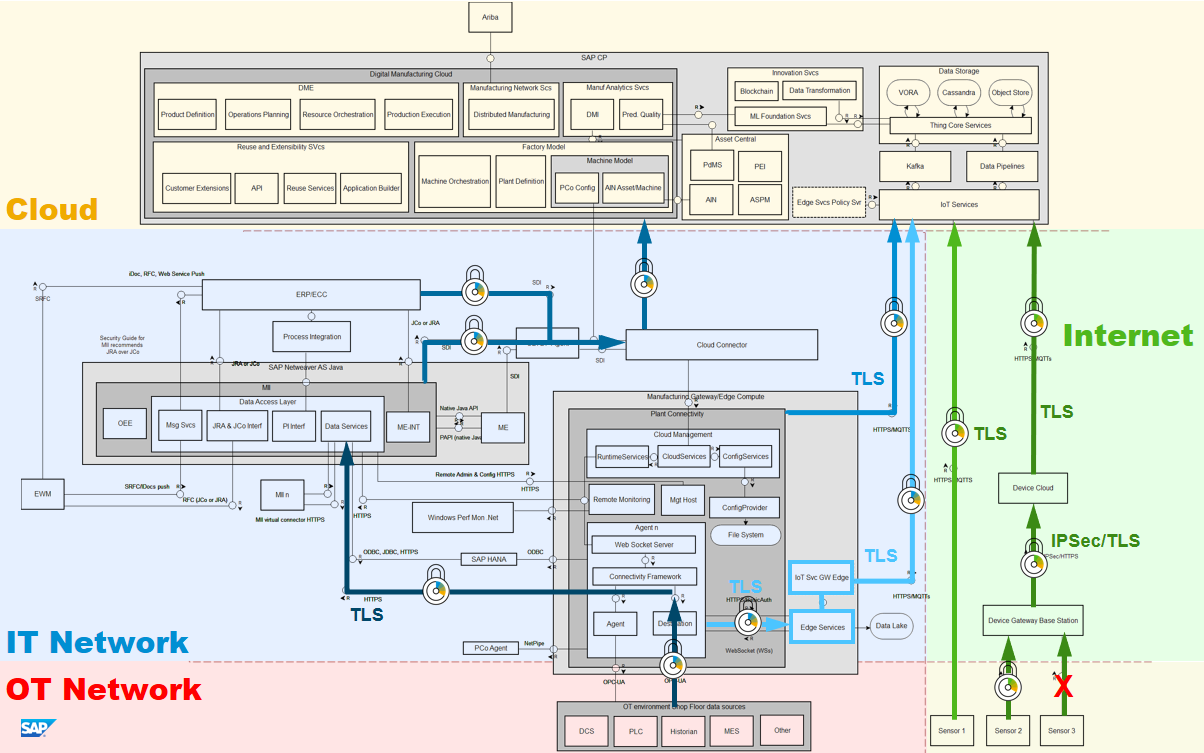

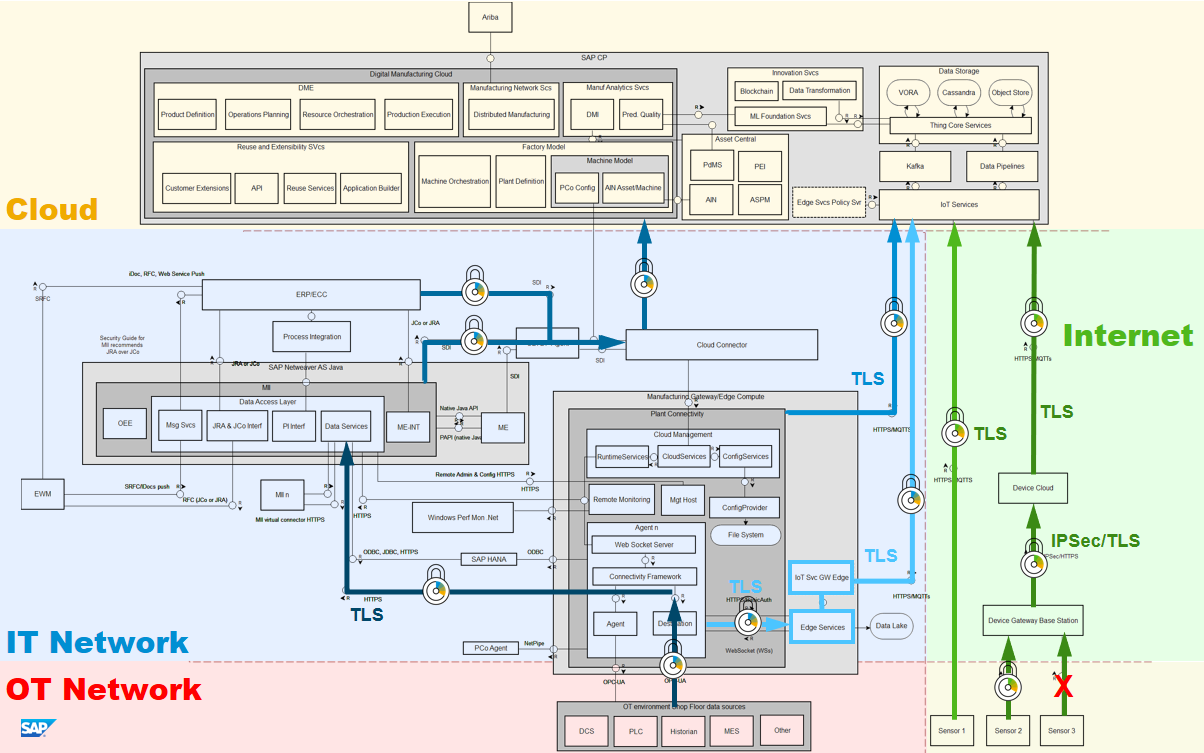

Securing communications

So, now that we have identified these data flows, what risks do we face, and how do we protect against them?

The two risks we face in these communications are eavesdropping and data manipulation. Eavesdropping on communications leads to the threat of information disclosure. This in itself can be damaging in terms of loss of intellectual property, confidential information, and in some cases personal information. The risk of data manipulation is more serious: it can make the system unreliable, or even turn it against itself, resulting in threats of provoked industrial accidents or sabotage with potentially significant costs.

We therefore need to protect communications against both these risks. We do this through the use of strong cryptography, i.e. encryption and authentication based on an X.509 PKI, or alternatives where that may not be possible. This keeps the data protected in transit and in key situations also verifies the identity of the sender and receiver of the data.

Starting at the machine layer, this is OPC-UA. This provides us with both encryption and mutual verification of certificates, on the machine end as well as on the PlantConnectivity end. PlantConnectivity verifies that it communicates with the machine it believes it is communicating with, and in reverse, the machine verifies it is communicating with the PlantConnectivity instance it should be talking to.

The data flows within the IT network, and from there to the cloud, are protected with TLS. The Cloud Connector provides a VPN tunnel. The data flows from the Manufacturing Gateway to the cloud use certificate-based authentication over TLS. Sensors and machines making their own connection to the SAP Cloud Platform IoT Services do the same.
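As a rough illustration of what certificate-based authentication over TLS means on the client side, here is a minimal sketch using Python's standard `ssl` module. It is not taken from any SAP component; the function name `build_client_context` and the file parameters are assumptions for this example.

```python
import ssl
from typing import Optional


def build_client_context(ca_file: Optional[str] = None,
                         cert_file: Optional[str] = None,
                         key_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a TLS client context that verifies the server and can present a client certificate.

    ca_file, cert_file and key_file are hypothetical PEM file paths.
    """
    # The default context verifies the server certificate chain and host name.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file:
        # Presenting a client certificate lets the server verify the client as well.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


if __name__ == "__main__":
    context = build_client_context()
    print(context.verify_mode, context.check_hostname, context.minimum_version)
```

With a client certificate loaded, both ends of the connection can verify each other, which is the same idea as the mutual certificate verification described for OPC-UA above.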

Sensors and sensor networks themselves come in a wide variety of flavors and communication protocols. For devices that have their own TCP stack and can handle TLS connections, this is not a problem, as the devices themselves are capable of providing good security. For the gateway model, however, the landscape is very mixed, from reasonably secure down to no security at all; this is the reason for the red 'X' on one of the sensor-to-gateway connections. For smaller sensors based on microcontrollers, for instance those communicating over LPWAN, TLS is often not feasible as their chip architecture doesn't support it, and even if it did, it could create problems with battery lifetime. LPWAN itself has some significant constraints on bandwidth and message length, and depending on the technology and configuration, data from the device to the base station may not be encrypted.

I referred already to the emerging trend of specialization among the various (I)IoT cloud providers. Rather than try to offer every service under the sun, some are starting to focus on just devices and device connectivity, as well as security. A variety of providers, ranging from telecommunications providers to machine, device, chip and sensor vendors to security companies with hardened embedded OS and communication services, are offering to connect the edge and bring the data to a device cloud, from where it can be moved into the SAP Cloud Platform for further analysis. Connections from a device cloud to SAP CP are relatively easy to secure, even more so when both run on the same public cloud service. Meanwhile, dedicated specialists take care of the secure transmission of data from the devices it originates from up to the device cloud in a variety of ways. I expect this trend to continue, as it makes a lot of sense to have specialists involved here.

This should make it clear that device and sensor selection is a critical aspect of any IIoT solution. This is where things are most likely to go wrong, and the selection should be made through a risk assessment in which the potential impact of data manipulation is taken into account. The more critical the potential impact of a device feed, the more important it is to select a device, sensor network infrastructure and provider that can secure that transmission.

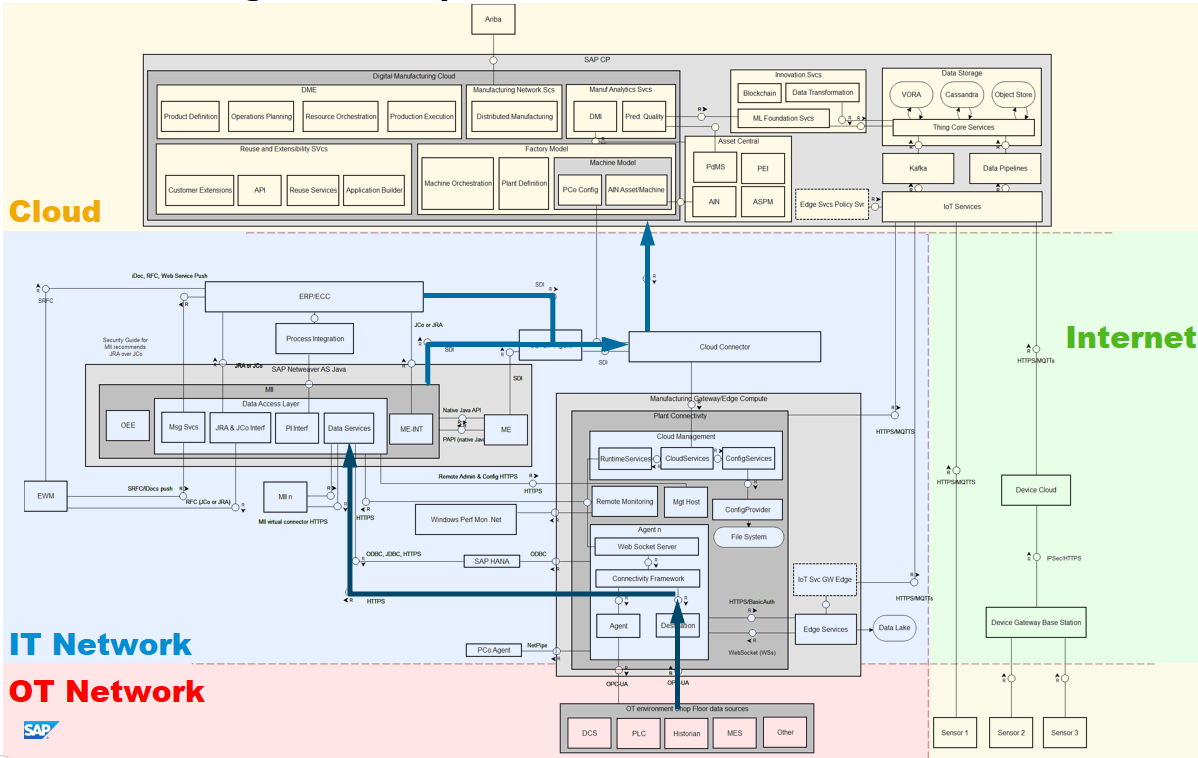

Cloud to On-premise

We have one more data flow to discuss, and that is data movement back down into the on-premise environment from the cloud. This movement consists of instructions to the ERP and MII systems, configuration changes in the OT environment, and edge processing capabilities.

We have a flow back from the cloud to the on-premise environment through the Cloud Connector, the same VPN connection we used from the IT environment to the cloud. This is used for PlantConnectivity configuration (a web interface), as well as to push information back down to MII and ERP, and from MII down to PlantConnectivity and into the OT network via OPC-UA. When CPI is used, the connection is initiated from the IT network to the cloud, where a message server holds any messages left for the on-premise client to pick up, rather than having the cloud component connect directly into the IT environment. That also means no interface has to be opened to the IT environment from the cloud, and thus no inbound firewall opening is required for it. As explained before, this uses a TLS connection.
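The pick-up pattern described here, where the on-premise side always initiates the connection, can be sketched as follows. This is an in-memory toy model and not the CPI or Cloud Connector API; `CloudMailbox` and `OnPremiseClient` are hypothetical names, and in reality the `poll` call would be an outbound TLS request.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class CloudMailbox:
    """Cloud-side store that holds messages until the client fetches them."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[str]] = defaultdict(deque)

    def leave_message(self, client_id: str, message: str) -> None:
        # The cloud never connects into the plant; it only queues the message.
        self._queues[client_id].append(message)

    def fetch(self, client_id: str) -> List[str]:
        # Called as part of a connection that the on-premise client opened.
        queue = self._queues[client_id]
        messages = list(queue)
        queue.clear()
        return messages


class OnPremiseClient:
    """On-premise side: initiates every exchange, so no inbound port is needed."""

    def __init__(self, client_id: str, mailbox: CloudMailbox) -> None:
        self.client_id = client_id
        self.mailbox = mailbox

    def poll(self) -> List[str]:
        return self.mailbox.fetch(self.client_id)


if __name__ == "__main__":
    mailbox = CloudMailbox()
    client = OnPremiseClient("plant-1", mailbox)
    mailbox.leave_message("plant-1", "update streaming rule")
    print(client.poll())  # the queued message
    print(client.poll())  # nothing left to pick up
```

The design choice is that the direction of connection setup and the direction of data flow are decoupled: data moves down, but connections are only ever opened from the inside out.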

The only other link down to the on-premise environment is via Edge Services. Here the downstream flow is used for configuration settings, streaming rules, and predictive models to execute at the edge. This uses the same TLS connection as the upload.

Mediation as an architectural security feature

It is important to note that each connection from the SAP Cloud Platform to the on-premise environment is to (and through) an SAP component. That means that any connection back down into the OT network is mediated through on-premise SAP systems first before hitting the machine layer. There is no direct connection from the cloud to the OT network, nor is there a direct connection from the OT network to the cloud. This mediation means that messages sent back and forth must make sense to those applications. As a result, a vulnerability triggered in an SAP system, should an attacker find one, has no direct impact on the machine layer. It may stop the data feed, but it does not affect the shop floor. This is in contrast with a direct connection to the machine layer, which might trigger a vulnerability directly in the PLC, for instance.
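To make the idea of "messages must make sense to the application" more concrete, here is a small sketch of a mediating component that only forwards write-back requests it understands. It does not describe how MII or PlantConnectivity actually validate input; the tag names, limits and the `mediate` function are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical tags and allowed ranges; a real system would take these from its own configuration.
ALLOWED_SETPOINTS: Dict[str, Tuple[float, float]] = {
    "oven.temperature_c": (20.0, 250.0),
    "conveyor.speed_mps": (0.0, 2.0),
}


@dataclass(frozen=True)
class WriteBack:
    """A validated instruction that may be passed on towards the machine layer."""
    tag: str
    value: float


def mediate(message: object) -> WriteBack:
    """Accept only well-formed messages for known tags with values inside the allowed range."""
    if not isinstance(message, dict):
        raise ValueError("message must be a mapping")
    tag = message.get("tag")
    value = message.get("value")
    if not isinstance(tag, str) or tag not in ALLOWED_SETPOINTS:
        raise ValueError("unknown tag")
    # bool is a subclass of int in Python, so it is excluded explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise ValueError("value must be numeric")
    low, high = ALLOWED_SETPOINTS[tag]
    if not low <= value <= high:
        raise ValueError("value out of range")
    return WriteBack(tag, float(value))


if __name__ == "__main__":
    print(mediate({"tag": "oven.temperature_c", "value": 180}))
```

Anything that is not a known tag with a plausible value is rejected before it gets anywhere near the OT network, so a raw exploit payload has nowhere to go.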

To clarify what I mean by this mediation, let me draw a simplified comparison with SQL injection. SQL injection works when an attacker can control input data that is then used directly in a SQL statement. We can easily prevent the most serious attacks of this kind by using stored procedures or prepared statements, where the structure of the query statement is fixed, and parameters need to be (at least) of the correct data type. Only when the input makes some kind of sense would the query even get executed at all.
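For readers less familiar with prepared statements, the following sketch shows the principle with Python's built-in `sqlite3` module. The table and column names are made up for this example, and SQLite is used only because it ships with Python.

```python
import sqlite3
from typing import List


def demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, batch_id TEXT)")
    conn.executemany("INSERT INTO orders (batch_id) VALUES (?)", [("B-100",), ("B-200",)])
    return conn


def find_orders(conn: sqlite3.Connection, batch_id: str) -> List[int]:
    """Look up orders with a parameterized query.

    The statement structure is fixed; the input is bound to the `?`
    placeholder as data and is never parsed as SQL.
    """
    cursor = conn.execute(
        "SELECT order_id FROM orders WHERE batch_id = ? ORDER BY order_id",
        (batch_id,),
    )
    return [row[0] for row in cursor.fetchall()]


if __name__ == "__main__":
    conn = demo_connection()
    print(find_orders(conn, "B-100"))
    # A classic injection string is treated as an (unknown) batch id, not as SQL.
    print(find_orders(conn, "B-100' OR '1'='1"))
```

The injection attempt simply matches no rows, because the receiving component only ever interprets it as a value. Note that input which is a perfectly valid batch id is still accepted, which is the same limit the mediation argument has.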

When such mediation takes place through multiple SAP systems, attempted attacks that target the physical layer through the SAP landscape (i.e. without attacking the OT environment directly, either physically or from within the OT network itself) become exceedingly complex. Attacks that try to force an exploit of some kind on the machine layer through SAP systems are highly unlikely to succeed.

Follow the data streams in the diagrams above: consider all the systems that would need to be compromised (or "fooled", that is, the receiving system accepts the manipulated data as acceptable input) by an attacker in order to get a malicious message down into the machine layer. If the aim of the attacker is the machine layer, this is a highly complex route to take. An attack that first requires taking over critical and highly secured on-premise business systems faces significant obstacles at the very least, and is more likely one of the very last options an attacker would consider. An attack attempted from the cloud only adds further layers of mediation where connections, authorizations and data would need to line up. I struggle to come up with realistic attack scenarios.

But it is useful to follow two possible scenarios along their data flows to understand what would be required. In both the SQL injection mitigation and the mediation through SAP systems, the mitigation stops the most destructive attacks, but attacks that use input that is semantically acceptable to the receiving components are still possible. If we take the weakest link in the landscape we have been discussing to be the sensor network, and this data is used for predictive modeling in which we have a training phase and an execution phase, we can pose two attack scenarios:

- Manipulating sensor data during the training phase, so that the predictive model is trained on corrupted data

- Manipulating sensor data during the execution phase, so that the trained model produces a result that suits the attacker

The first scenario would require taking over a significant portion of the sensor network for a significant amount of time during the generation of the training data. Even if we suppose this is possible, this data is combined in the cloud with data from the OT network itself, and during training the two are analyzed together. At this point, data scientists working with engineers will likely spot a data problem.

In the second scenario, the attacker may not need to take over as large a portion of the sensor network, nor necessarily for a long time, but it will be practically impossible for any attacker to get feedback from the system to understand what effect the manipulation had. Even with significant understanding of the entire landscape, it would be difficult to anticipate what the device values would need to be in order to create the result desired by the attacker. Any attempt to feed manipulated data into the environment is also highly likely to be detected. The manipulated data would still need to make some sort of sense to the predictive model, and is more likely to raise an alert for outlier values.
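As a simple illustration of how manipulated values can stand out, here is a sketch of a basic outlier check that compares incoming readings against a trusted baseline. It uses a plain standard-deviation threshold, which is only one of many possible techniques and not a description of what any SAP predictive service does; `find_outliers` and the sample values are assumptions for this example.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_outliers(baseline: Sequence[float],
                  incoming: Sequence[float],
                  threshold: float = 3.0) -> List[float]:
    """Return incoming values that lie more than `threshold` standard deviations from the baseline mean."""
    center = mean(baseline)
    spread = stdev(baseline)  # needs at least two baseline values
    if spread == 0:
        # A perfectly flat baseline: anything different is suspicious.
        return [value for value in incoming if value != center]
    return [value for value in incoming if abs(value - center) / spread > threshold]


if __name__ == "__main__":
    # Hypothetical temperature readings observed during normal operation.
    baseline = [20.0, 20.5, 21.0, 20.2, 19.8, 20.4, 20.1, 19.9]
    # New readings, one of which has been tampered with.
    incoming = [20.3, 35.0, 19.7]
    print(find_outliers(baseline, incoming))
```

An attacker who wants to stay below such a threshold is restricted to values that look normal, which in turn limits how much the manipulation can change the model's output.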

More significantly, such manipulation of sensor data would require physical proximity to these sensors if not actual direct physical access, which means the attacker is already on the manufacturing floor, or at least within range of the base station. If that is the case, your physical security has failed. And if the attacker's goal was to attack the machine layer in the first place, such attacks are much easier and less complex when an attacker has physical access to the machines, or can plug a USB stick with malicious code directly into a host on the OT network.

As should be clear from the various data flow diagrams above, we have built on top of what is already there. Each of the individual diagrams presents legitimate use cases and value. Not every customer is ready for automated reconfiguration of the machine layer, and a lot can be gained before any write-back occurs. This is a journey, and not everybody may end up at the same end stage.

Nevertheless, we believe that security concerns should not be the main obstacle to embarking on digital transformation in Manufacturing. This Open Book on security in Digital Manufacturing hopefully takes away some of these concerns. A lot of security discussions in IIoT take place in the abstract, and can easily increase fear and doubt rather than mitigate them. By instead looking at a real landscape and analyzing the actual data flows, we can have concrete discussions about how to protect such environments, and make progress.

As always, this is an emerging field. Things move quickly and we will keep monitoring new developments. I always welcome feedback, especially where there might be disagreements, as we all learn from that. But I am increasingly convinced we have the tools and expertise to get this right. Now, it's primarily a question of execution.

More on the general context and further information on what was released at SAPPHIRE can be found in Hans Thalbauer's Enable an Intelligent Digital Supply Chain of One to Transform Your Business presentation, this short video or the Manufacturing section on the SAP website.

Threat Modeling

Over the last years, we have seen a significant number of security incidents in industrial landscapes on top of attacks against IT infrastructure and cloud services. From the "ground zero" of StuxNet to the WannaCry, Petya and NotWannaCry ransomware attacks of 2017, it is clear that threats are increasing. At the same time, IoT and Industrie 4.0 connect more things than ever, and there is a drive towards increasing automation and optimization through predictive algorithms, which promise great business benefits but further increase the threat landscape.

With threat modeling, we can get clarity on where the dangers in the landscape are hidden, and what we need to protect. To that end, we look at the entire landscape of the solution, and focus specifically on:

- Users and Authorizations

- Assets containing critical or sensitive data

- Data flows and communications

To keep things manageable, I'll make some reasonable assumptions for the rest of this article:

- All SAP products (applications and platform) have users, roles and authorization concepts built in. We will assume that best practices are applied in system configurations, strong passwords are used for both regular users, administrators and technical user accounts, and appropriate roles and authorizations are assigned based on least-privilege principles

- Systems containing our assets - that is databases and potentially file systems, both on-premise and in the cloud - have appropriate access rights set, authentication and authorizations in place, disk encryption set up, file system permissions set up, and strong passwords or certificate-based authentication used.

- Hosts in the landscape are reasonably secured technically - OS protections used, firewalls in place, no default passwords or undocumented admin interfaces, network security, intrusion prevention and detection systems and monitoring deployed, etc. - as well as physically - secured data centers, authenticated access to facilities, protections against tampering, controlled access to equipment, security guards, etc. - against unauthorized access

I believe these assumptions are reasonable as they match common best practices, are documented in the various product and services administration-, configuration- and security guides, and follow common practices in IT and Security policies in large enterprises. That is of course not to say they are always applied. But it should be no surprise such protections should be in place. We cannot build on top of an insecure foundation.

That leaves for this article specifically data flows and communications, which is also where most questions will arise, and where we typically see most concerns. We will therefore return to the Digital Manufacturing landscape, with an updated diagram that reflects the recent changes and additions.

As complete as the diagram looked before in the previous article, we can now show a lot more detail in the cloud layer and further analyze the various data flows in the landscape.

Functional Overview

We have discussed the components underneath the cloud section before and refer back there if their function is not clear, but let's walk briefly through the additions in the cloud before we dive into the data movements.

The main block is the Digital Manufacturing Cloud, the newly released cloud manufacturing suite, that includes DME, Distributed Manufacturing, DMI and Predictive Quality Management, as well as the integration with the Ariba business network. This is supported by other applications that are not strictly part of the Digital Manufacturing Suite and can also be subscribed to independently, but further enrich the offering, like Asset Intelligence Network (AIN) and Predictive Maintenance (PdMS).

On the very right is the data ingestion and storage layer. IoT Services as we will see is primarily for direct sensor/machine communications with the cloud. Data Pipelines functions for data streaming and cloud-to-cloud integration. The DM cloud itself uses VORA and the Object Store for data, but all three can be used for sensor and machine data ingestion as makes sense for the use case, including further custom application developed with Application Enablement. The important thing is that once the data is in the storage area, it is available to the rest of the environment, whether pre-built applications or customer- or partner developed ones.

Data Flows

As we saw before, the environment is still strictly separated by network. In fact, the traditional approach of passing machine data from the shop floor through to MII is still the basis of the solution.

PlantConnectivity connects to machines in the OT network over OPC-UA and retrieves data from tags it subscribed to, and passes that into MII. This data flow is in place already at SAP customers running the on-premise Manufacturing Suite.

However, what we can do in addition, is push this data through the Cloud Connector and Cloud Platform Integration (CPI) into the cloud environment. This is primarily the real-time flow of machine data associated with the context of the business data of what is currently being produced on the production line, and associated master data. We saw this as well in the previous article. A good reason for this flow, for instance, would be the use of DMI or DME.

What is newer, is the ability for PlantConnectivity to push data directly to cloud, through IoT Services. The purpose of this data flow is a bit different. Whereas in the earlier the context is very much a near-real time one with machine data associated with a particular batch in production, this data is used for more long-tail analysis, i.e. the behavior of machines over weeks, months and years. This will allow us to use machine behavior for further optimization of scheduled maintenance and productive use of the machinery.

In the next data flow, we see the interaction between PlantConnectivity and Edge Services. Edge Services allows for edge processing, and for instance can be used to aggregate data that comes in at high frequency, but we don't need at that granularity. Think for instance of a temperate sensor that gives a reading at 20 Hz. But for our analysis we only need it every minute. We can then capture 1,200 data points and average them, or take the minimum and maximum value (depending on the use case). We can then let Edge Services only send those values up to the cloud every minute. Note also that instead of sending this data to the cloud, we can store it (instead) locally on premise in a data lake as well, should that be preferred. Of course, then any analysis of that data should occur locally as well.

The fifth data flow shows the connectivity from separate sensor networks. As explained in the previous article, running separate sensor networks completely outside the OT and IT networks provides strict network separation, and without any connectivity into those networks, it has no ability to affect them adversely, at least not directly. Only after processing of this data in the cloud will it be used in analysis and drive predictive models.

We see here two models: the first might be called Device Cloud infrastructure or a managed device service, where sensors connect to a gateway base station and from there pass the data to a device cloud. From there, using cloud-to-cloud integration through IoT Services, this data is ingested into the SAP Cloud Platform. This is a model we see increasingly where sensors come with connectivity pre-packaged as a managed service (whether by a network provider, an OEM, or otherwise), and data is first ingested in such device clouds, from where it can be moved elsewhere. This is a clear trend, where a particular provider focuses just on this aspect: managing devices, device security, data ingestion and management. Cloud-to-cloud data movements is relatively easy to set up, and wouldn't require separate work for each customer. I foresee that soon we will see such providers advertise their sensor equipment with "SAP CP ready" or "AWS ready". This would really ease secure adoption and operation of sensor networks.

Alternatively, sensors and things that have their own independent connection to the internet (for instance, GSM connected devices) can send that data directly to IoT Services.

Securing communications

So, now we have identified these data flows, what risks do we face, and how do we protect against them.

The two risks we face in these communication is eavesdropping and data manipulation. Eavesdropping on communications leads to the threat of information disclosure. This in itself can be damaging in terms of loss of intellectual property, confidential information, and in some cases personal information. The risk of data manipulation would be more serious, leading to making the system unreliable, or even be turned against itself and resulting in threats of provoked industrial accidents or sabotage with potentially significant costs.

We therefore need to protect communications against both these risks. We do this through the use of strong encryption, i.e. X.509 PKI, or alternatives where that may not be possible. This keeps the data protected in transit and in key situations also guarantees the integrity of sender and receiver of the data.

Starting at the machine layer, this is OPC-UA. This provides us with both encryption and mutual verification of certificates on both the machine-end as well as PlantConnectivity. It is guaranteed that PlantConnectivity communicates with the machine it believes it is communicating with, and in reverse, the machine verifies it is communicating with the PlantConnectivity instance it should be talking to.

For the data flows within the IT network and from there to the cloud is protected with TLS. The Cloud Connector provides a VPN tunnel. The data flows from the Manufacturing Gateway to the cloud is done with certificate-based authentication over TLS. And sensors and machine making their own connection to the SAP Cloud Platform IoT Services do the same.

Sensors and sensor networks themselves come in a wide variety of flavors and communication protocols. For devices that have their own TCP stack and can handle TLS connections, this is not a problem, as the devices themselves are capable of providing good security. However, and this is the reason for the red 'X' on one of the sensor to gateway communication, for the gateway model the landscape is very mixed, from reasonably secure down to no security at all. For smaller sensors based on micro controllers, for instance, communicating over LPWAN, TLS is not feasible as their chip architecture doesn't support it, and even if it would, could create problems with battery lifetime. LPWAN itself has some significant constraints on bandwidth and message length, and doesn't encrypt data from the device to the base station.

I referred already to the emerging trend of specialization amoung the various (I)IoT cloud providers. Rather than try to offer every service under the sun, some are starting to focus on just device and device connectivity, as well as security. A variety of solutions ranging from telecommunications providers to machine-, device-, chip- and sensor vendors to security companies with hardened embedded OS and communication services are offering to connect the edge and bring the data to a device cloud, from where it can be moved into the SAP Cloud Platform for further analysis. Connections from a device cloud to SAP CP are relatively easy to secure, even more so when both run on the same public cloud service. Meanwhile dedicated specialists take care of secure transmission of data from the devices it originates from up to the device cloud in a variety of ways. I see this trend continue, as it makes a lot of sense to have specialists involved here.

It should make it clear though that device/sensor selecting is a critical aspect of any IIoT solution. This is where things most likely will go wrong, and the selection should be made through a risk assessment, where the potential impact of manipulation of data is taken into account. The more critical the potential impact this device feed can have, the more important it is to select a device and sensor network infrastructure and provider that can secure that transmission.

Cloud to On-premise

We have one more data flow to discuss, and that is data movement back down into the on-premise environment from the cloud. This movement consists of instructions to the ERP and MII systems, configuration changes in the OT environment, and edge processing capabilities.

We have a flow back from the cloud to the on-premise environment through the Cloud Connector, the same VPN connection we used from the IT environment to the cloud. This is used for PlantConnectivity configuration (a web interface), as well as to push information back down to MII and ERP, and from MII down to PlantConnectivity and into the OT network via OPC-UA. When CPI is used, the connection is initiated from the IT network to the cloud, where a message server runs for the on-premise client to pick up any messages that may have been left there for it, rather than having the cloud component connect directly into the IT environment. That also means no interface has to be opened to the IT environment from the cloud, and thus no inbound firewall rule is required. As explained before, this uses a TLS connection.
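
The pull pattern described for CPI can be illustrated with a small in-memory sketch. This is not SAP's implementation: `CloudMessageServer`, `OnPremClient` and their methods are hypothetical names, and the "network" is just a method call. What it shows is only the direction of initiative: the cloud side stores messages and never connects outwards, while the on-premise side decides when to ask for them.

```python
from collections import deque


class CloudMessageServer:
    """Hypothetical cloud-side mailbox. It only answers requests; it never dials out."""

    def __init__(self):
        self._mailboxes = {}

    def leave_message(self, client_id, message):
        # A cloud application deposits a message for a given on-premise client.
        self._mailboxes.setdefault(client_id, deque()).append(message)

    def fetch(self, client_id):
        # Runs only as the response to a client-initiated request.
        box = self._mailboxes.get(client_id, deque())
        messages = list(box)
        box.clear()
        return messages


class OnPremClient:
    """Hypothetical on-premise client that initiates every exchange."""

    def __init__(self, client_id, server):
        self.client_id = client_id
        self._server = server
        self.received = []

    def poll(self):
        # In a real landscape this would be an outbound TLS request from the IT network.
        new_messages = self._server.fetch(self.client_id)
        self.received.extend(new_messages)
        return len(new_messages)
```

Because every exchange starts with `poll()` on the on-premise side, nothing in this sketch requires the cloud to reach a listening port inside the IT network.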

The only other link down to the on-premise environment is via Edge Services. Here the down-stream is used for configuration settings, streaming rules, and predictive models to execute at the edge. This uses the same TLS connection as during upload.

Mediation as an architectural security feature

It is important to note that each connection from the SAP Cloud Platform to the on-premise environment is to (and through) an SAP component. That means that any connection back down into the OT network is mediated through on-premise SAP systems first before hitting the machine layer. There is no direct connection from the cloud to the OT network, nor is there a direct connection from the OT network to the cloud. This mediation means that messages sent back and forth must make sense to those applications. That is, a vulnerability triggered in an SAP system - should an attack find one - has no direct impact on the machine layer. It may stop the data feed, but not affect the shop floor. This is in contrast with a direct connection with the machine layer, which might trigger a vulnerability directly in the PLC, for instance.

To clarify what I mean by this mediation, let me draw a simplified comparison with a SQL injection. SQL injections work when an attacker can control input data that is then used directly in a SQL statement. We can easily prevent the most serious attacks of this kind by using stored procedures or prepared statements, where the structure of the query statement is fixed and parameters need to be (at least) of the correct data type. Only when the input makes some kind of sense would the query get executed at all.
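
A minimal sketch of that comparison, using Python's built-in `sqlite3` module. The `readings` table and the function names are made up for illustration. The first query pastes the input into the SQL text; the second keeps the statement structure fixed and binds the input as a typed parameter.

```python
import sqlite3


def make_db():
    # Illustrative in-memory table with two sensor readings.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (sensor_id INTEGER, value REAL)")
    conn.executemany("INSERT INTO readings VALUES (?, ?)", [(1, 20.5), (2, 21.0)])
    return conn


def readings_unsafe(conn, sensor_id):
    # Vulnerable: the input becomes part of the SQL text itself.
    query = f"SELECT value FROM readings WHERE sensor_id = {sensor_id}"
    return conn.execute(query).fetchall()


def readings_safe(conn, sensor_id):
    # The query structure is fixed; the input is checked for type and bound as a parameter.
    sensor_id = int(sensor_id)  # raises ValueError if the input is not an integer
    return conn.execute(
        "SELECT value FROM readings WHERE sensor_id = ?", (sensor_id,)
    ).fetchall()
```

With the input `"1 OR 1=1"`, `readings_unsafe` returns every row in the table, while `readings_safe` raises a `ValueError` before any query runs. Input such as `"1"` passes the check and returns only the matching row.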

When such mediation takes place through multiple SAP systems, attempted attacks that target the physical layer through this landscape (i.e. without attacking the OT environment directly, either physically or from within the OT network itself) become exceedingly complex. Attacks that try to force an exploit of some kind on the machine layer through SAP systems are not going to succeed.
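
To make the idea of layered mediation concrete, here is a rough sketch in which a message must pass three checks before it would reach the machine layer. All of it is hypothetical: the command format, the machine names, the parameter limits and the layer names are assumptions for illustration and do not describe how MII or PlantConnectivity validate messages.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SetpointCommand:
    machine_id: str
    parameter: str
    value: float


# Hypothetical allow-lists; a real landscape would take these from master data.
KNOWN_MACHINES = {"press-01", "mill-07"}
ALLOWED_RANGES = {"spindle_speed_rpm": (0.0, 12000.0), "feed_rate_mm_min": (0.0, 5000.0)}


def parse_layer(raw):
    # Layer 1: only the expected fields with the expected types are accepted.
    if not isinstance(raw, dict) or set(raw) != {"machine_id", "parameter", "value"}:
        raise ValueError("unexpected message structure")
    if not isinstance(raw["machine_id"], str) or not isinstance(raw["parameter"], str):
        raise ValueError("identifiers must be strings")
    if isinstance(raw["value"], bool) or not isinstance(raw["value"], (int, float)):
        raise ValueError("value must be numeric")
    return SetpointCommand(raw["machine_id"], raw["parameter"], float(raw["value"]))


def business_layer(cmd):
    # Layer 2: the command must refer to things this landscape knows about.
    if cmd.machine_id not in KNOWN_MACHINES:
        raise ValueError("unknown machine")
    if cmd.parameter not in ALLOWED_RANGES:
        raise ValueError("unknown parameter")
    return cmd


def plant_layer(cmd):
    # Layer 3: the value must be within the limits configured for the parameter.
    low, high = ALLOWED_RANGES[cmd.parameter]
    if not (low <= cmd.value <= high):
        raise ValueError("value outside permitted range")
    return cmd


def mediate(raw):
    # A message reaches the machine layer only if every layer accepts it.
    return plant_layer(business_layer(parse_layer(raw)))
```

A message with an extra field, an unknown machine or an out-of-range value is rejected by one of the layers, so only input that is meaningful at every step gets through. As with prepared statements, this does not stop input that is semantically acceptable but malicious.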

Follow the data streams in the diagrams above: consider all the systems that would need to be compromised (or "fooled", that is, the receiving system accepts the manipulated data as acceptable input) by an attacker in order to get a malicious message down into the machine layer. If the aim of the attacker is the machine layer, this is a highly complex route to take. An attack that first requires taking over critical and highly secured on-premise business systems faces significant obstacles at the very least, and is more likely one of the very last options an attacker would consider. An attack attempted from the cloud only adds additional layers of mediation where connections, authorizations and data would need to line up. I struggle to come up with realistic attack scenarios.

But it is useful to follow two possible scenarios along their data flows to understand what would be required. In both the SQL injection mitigation and the mediation through SAP systems, the mitigation stops the most destructive attacks, but attacks that use input that is semantically acceptable to the receiving components are still possible. If we take the weakest link in the landscape we have been discussing to be the sensor network, and this data is used for predictive modeling in which we have a training phase and an execution phase, we can pose two attack scenarios:

- Manipulation of sensor data during the training phase of predictive models to influence their operation in execution

- Manipulation of sensor data during the execution phase of predictive models - that is, when they are actually making predictions on new data - to create a malicious outcome in the machine layer

The first scenario would require taking over a significant portion of the sensor network for a significant amount of time during the generation of the training data. If we suppose this is possible, this data is combined in the cloud with data from the OT network itself, which during training is analyzed together with the device data. At this point, data scientists working with engineers will likely spot a data problem.

In the second scenario, the attacker may not need to take over as large a portion of the sensor network, nor necessarily for a long time, but it will be practically impossible for any attacker to get feedback from the system to understand what effect the manipulation had. Even with significant understanding of the entire landscape, it would be difficult to anticipate what the device values would need to be in order to create the result desired by the attacker. Any attempt to feed manipulated data into the environment is highly likely to be detected as well. This manipulated data would still need to make some sort of sense to the predictive model, but is more likely to raise an alert for outlier values.
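
As an illustration of what such an outlier alert could look like, here is a small sketch that flags new sensor values that deviate strongly from recent history. It uses the modified z-score based on the median absolute deviation, with the commonly used constant 0.6745 and a cut-off of 3.5. The function name, the threshold and the sample values are assumptions for illustration, not a description of the detection used in any SAP product.

```python
from statistics import median


def flag_outliers(history, new_values, threshold=3.5):
    """Return the new values whose modified z-score against history exceeds threshold."""
    med = median(history)
    # Median absolute deviation: a spread estimate that a few extreme values cannot distort.
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # History is constant, so any different value is suspicious.
        return [v for v in new_values if v != med]
    return [v for v in new_values if 0.6745 * abs(v - med) / mad > threshold]


# Illustrative temperature history and two incoming readings.
history = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 19.8]
suspicious = flag_outliers(history, [20.2, 35.0])
```

Here only the reading of 35.0 is flagged. An attacker who wants to stay below such a check has to keep manipulated values close to what the sensors normally report, which limits the effect the manipulation can have.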

More significantly, such manipulation of sensor data would require physical proximity to these sensors if not actual direct physical access, which means the attacker is already on the manufacturing floor, or at least within range of the base station. If that is the case, your physical security has failed. And if the attacker's goal was to attack the machine layer in the first place, such attacks are much easier and less complex when an attacker has physical access to the machines, or can plug a USB stick with malicious code directly into a host on the OT network.

Continuity

As should be clear from the various data flow diagrams above, we have built on top of what is already there. Each of the individual diagrams presents legitimate use cases and value. Not every customer is ready for automated reconfiguration of the machine layer, and a lot can be gained before any write-back occurs. This is a journey, and not everybody may end up at the same end stage.

Nevertheless, we believe that security concerns should not be the main obstacle to embarking on digital transformation in Manufacturing. This Open Book on security in Digital Manufacturing hopefully takes away some of these concerns. A lot of security discussions in IIoT take place in the abstract, and can easily increase fear and doubt rather than mitigate them. By instead looking at a real landscape and analyzing the actual data flows, we can have concrete discussions about how to protect such environments, and make progress.

As always, this is an emerging field. Things move quickly and we will keep monitoring new developments. I always welcome feedback, especially where there might be disagreements, as we all learn from that. But I am increasingly convinced we have the tools and expertise to get this right. Now, it's primarily a question of execution.
