
ProcessDirect Adapter


02-14-2018 4:56 PM

Are you facing high network latency when integration flows communicate with each other in SAP Cloud Platform Integration?

The ProcessDirect adapter is the solution for you. It provides direct communication between two integration flows, and unlike communication through the HTTP or SOAP adapter, the calls between the integration flows are not routed through the load balancer.


For detailed information about what the ProcessDirect adapter offers, see the documentation on the SAP Help Portal.



Note: The ProcessDirect adapter improves network latency because messages passed between integration flows do not go through the load balancer. In scenarios with heavy payloads, however, also consider memory utilization as a parameter; in such scenarios you can use the HTTP adapter as an alternative, because the behavior will be the same.


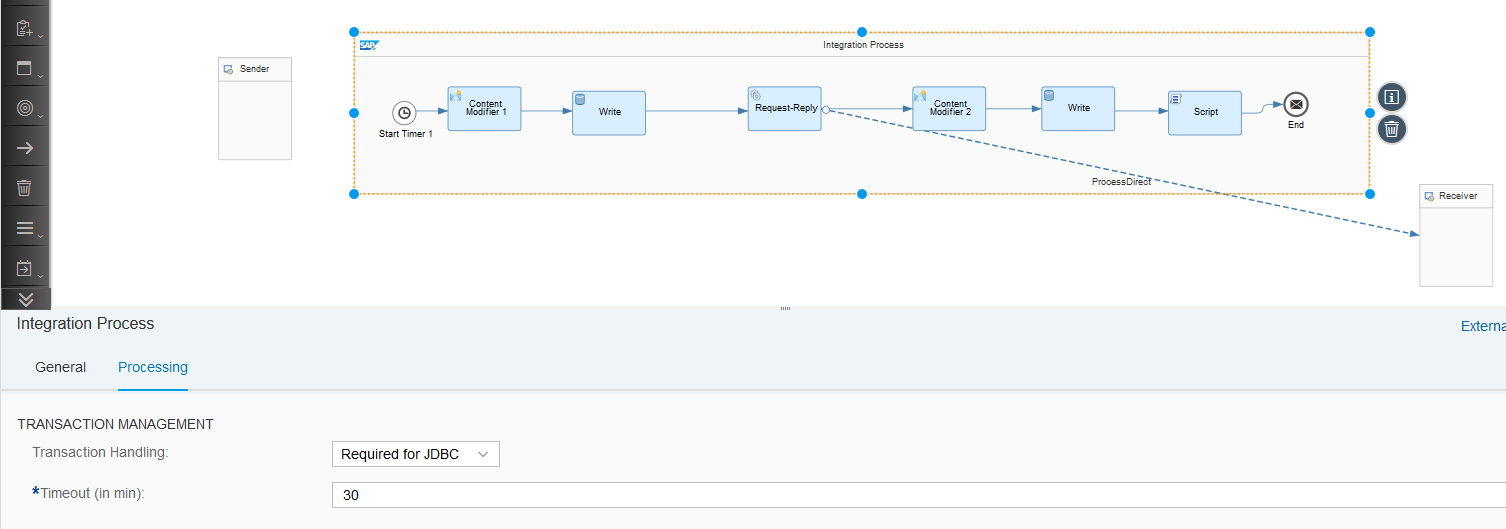

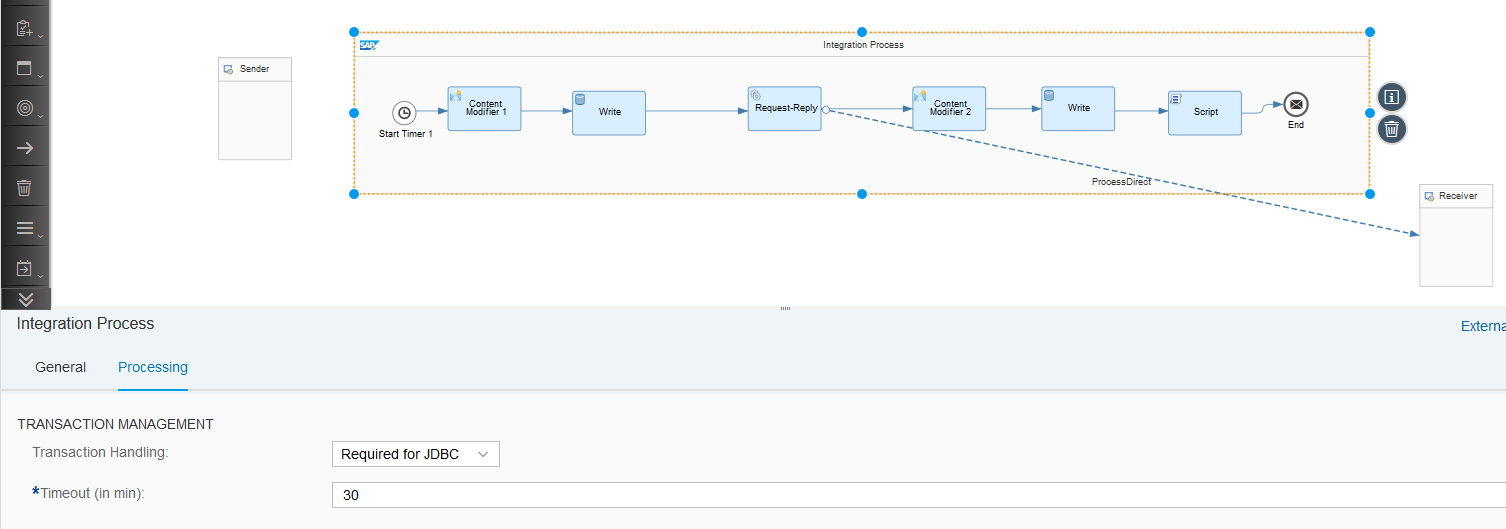



Terms to keep in mind when working with the ProcessDirect adapter:

Producer integration flow:

An integration flow that uses the ProcessDirect adapter to send data to another integration flow is called a producer integration flow. Such an integration flow has a ProcessDirect receiver adapter.

Consumer integration flow:

An integration flow that uses the ProcessDirect adapter to consume data from other integration flows is called a consumer integration flow. Such an integration flow has a ProcessDirect sender adapter.

ProcessDirect Adapter Offerings

- Allows multiple integration developers to work on the same integration scenario

- Enables reuse of integration flows across integration projects

- Enables decomposition of integration flows

You might already be using the HTTP, SOAP, or JMS queue adapters to decompose an integration flow into smaller integration flows.

The following comparison shows what the ProcessDirect adapter offers over these adapters:

| Feature | JMS Queue adapter | HTTP/SOAP adapter | ProcessDirect adapter |
|---|---|---|---|
| Availability | Selective licenses | All | All |
| Latency | High | High (network traffic through load balancer) | Low |
| Processing mode | Asynchronous | Synchronous | Synchronous |
| Header propagation | Yes | No | Yes |
| Transaction propagation | No | No | No |
| Session sharing | No | Yes | Yes |
| Across network | Yes | Yes | No |

Basic Configuration Settings for ProcessDirect Adapter

In this scenario, we create a producer and a consumer integration flow. The producer sets the message body in a Content Modifier and sends it to the consumer, which delivers it to the receiver configured in the consumer integration flow (an SFTP server in this example).

The producer integration flow uses the HTTPS adapter on the sender side and the ProcessDirect adapter on the receiver side.

Producer Integration Flow:

- Select the HTTPS adapter and go to the Connection tab.

- In the Address field, provide the producer address.

Note: If your scenario is not CSRF-protected, make sure to clear the CSRF Protected checkbox.

- Select the Content Modifier and go to the Message Body tab.

Consumer Integration Flow:

The consumer integration flow uses the ProcessDirect adapter on the sender side and the SFTP adapter on the receiver side.

- Select the ProcessDirect adapter and go to the Connection tab.

- In the Address field, enter the same address that you provided for the ProcessDirect adapter on the receiver side of the producer integration flow.

- Select the SFTP adapter and configure the necessary settings.
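Why the two Address fields must match can be illustrated with a small Java model. This is a sketch under stated assumptions, not SAP's implementation: the class `ProcessDirectRegistry`, its methods and the address `/demo/consumer` are hypothetical, and a plain synchronous method call stands in for the adapter's direct call between integration flows.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical model of ProcessDirect address matching (NOT SAP's implementation).
// A producer reaches a consumer only through an identical address string.
class ProcessDirectRegistry {
    // Maps an address to the consumer integration flow listening on it.
    private final Map<String, UnaryOperator<String>> consumers = new HashMap<>();

    // Models deploying a consumer whose ProcessDirect sender adapter uses 'address'.
    void registerConsumer(String address, UnaryOperator<String> consumerFlow) {
        consumers.put(address, consumerFlow);
    }

    // Models a producer whose ProcessDirect receiver adapter calls 'address'.
    // The call is a direct, synchronous method call in this model.
    String send(String address, String body) {
        UnaryOperator<String> consumer = consumers.get(address);
        if (consumer == null) {
            throw new IllegalStateException("No consumer registered for address " + address);
        }
        return consumer.apply(body);
    }

    public static void main(String[] args) {
        ProcessDirectRegistry registry = new ProcessDirectRegistry();
        // Consumer side: Address field of the ProcessDirect sender adapter (hypothetical value)
        registry.registerConsumer("/demo/consumer", body -> "Consumer received: " + body);
        // Producer side: the ProcessDirect receiver adapter uses the same address
        System.out.println(registry.send("/demo/consumer", "Hello from producer"));
    }
}
```

If the producer used a different address than the consumer, `send` would fail in this model, which mirrors why both Address fields must contain the same value.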

Additional tip: If you don't want to configure a receiver, you can instead log the output in a Script step, as shown below:

Add the following to the script file:

```groovy
/*
 The integration developer needs to create the method processData.
 This method takes a Message object of package com.sap.gateway.ip.core.customdev.util,
 which includes helper methods useful for the content developer.

 The methods available are:
   public java.lang.Object getBody()
   public void setBody(java.lang.Object exchangeBody)
   public java.util.Map<java.lang.String,java.lang.Object> getHeaders()
   public void setHeaders(java.util.Map<java.lang.String,java.lang.Object> exchangeHeaders)
   public void setHeader(java.lang.String name, java.lang.Object value)
   public java.util.Map<java.lang.String,java.lang.Object> getProperties()
   public void setProperties(java.util.Map<java.lang.String,java.lang.Object> exchangeProperties)
   public void setProperty(java.lang.String name, java.lang.Object value)
*/
import com.sap.gateway.ip.core.customdev.util.Message

def Message processData(Message message) {
    // Read the current payload as a String
    def body = message.getBody(java.lang.String) as String
    // messageLogFactory is provided to the script by the Cloud Integration runtime
    def messageLog = messageLogFactory.getMessageLog(message)
    if (messageLog != null) {
        // Attach the payload to the message processing log (MPL) as plain text
        messageLog.addAttachmentAsString("Log current Payload:", body, "text/plain")
    }
    return message
}
```


ProcessDirect Adapter Sample Use Cases

This section describes some typical scenarios that you can implement with the ProcessDirect adapter.

Transaction Processing

Transactional processing ensures that a message is processed within a single transaction. For example, when an integration flow contains a Data Store Write operation and transaction handling is activated, the Data Store entry is committed only if the whole process runs successfully. In case of failure, the transaction is rolled back and the Data Store entry is not persisted.
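The commit/rollback behavior described above can be sketched as a minimal Java model. It is not the Cloud Integration runtime: `DataStoreTxModel`, `runFlow` and the in-memory list standing in for the Data Store are hypothetical. Writes are staged during the run and only added to the persisted list if the whole flow completes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a transactional Data Store Write (not SAP's implementation).
class DataStoreTxModel {
    // Stands in for the persisted Data Store entries.
    final List<String> committedEntries = new ArrayList<>();

    // One integration flow execution with transaction handling activated.
    // 'failAfterWrite' simulates a later step throwing an exception.
    void runFlow(String entry, boolean failAfterWrite) {
        List<String> staged = new ArrayList<>();   // transaction-local writes
        staged.add(entry);                         // Data Store Write step
        if (failAfterWrite) {
            // Rollback: the staged entry is discarded and never persisted
            throw new IllegalStateException("A later step failed");
        }
        committedEntries.addAll(staged);           // commit: the whole process succeeded
    }

    public static void main(String[] args) {
        DataStoreTxModel store = new DataStoreTxModel();
        store.runFlow("entry-1", false);
        try {
            store.runFlow("entry-2", true);
        } catch (IllegalStateException e) {
            System.out.println("Flow failed: " + e.getMessage());
        }
        System.out.println("Persisted entries: " + store.committedEntries); // [entry-1]
    }
}
```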

Scenario 1: Basic scenario with transaction type set to JDBC for producer and consumer

In this scenario, the transaction type is set to JDBC for both the producer and the consumer. A Script step in the consumer throws an exception that is not caught. The exception is passed back to the producer, so the database operations are rolled back in both the producer and the consumer.

Producer Integration Flow:

Consumer Integration Flow:
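Scenario 1 can be modeled in Java under the assumption, taken from the comparison table, that transactions are not propagated across ProcessDirect: each flow runs its own transaction, and the synchronous call lets the consumer's exception reach the producer. All names are hypothetical and the list stands in for the database; this is not SAP's implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of Scenario 1 (not SAP's implementation).
class Scenario1Model {
    // Stands in for the database shared by both flows.
    final List<String> database = new ArrayList<>();

    // Runs 'work' in its own transaction: staged writes are committed
    // only if 'work' completes without an exception.
    void inTransaction(Consumer<List<String>> work) {
        List<String> staged = new ArrayList<>();
        work.accept(staged);      // an exception here skips the commit (rollback)
        database.addAll(staged);  // commit
    }

    void consumerFlow(String body) {
        inTransaction(tx -> {
            tx.add("consumer:" + body);
            // Script step throws an exception that is not caught
            throw new IllegalStateException("Script error in consumer");
        });
    }

    void producerFlow(String body) {
        inTransaction(tx -> {
            tx.add("producer:" + body);
            consumerFlow(body);   // synchronous ProcessDirect call: the exception reaches the producer
        });
    }

    public static void main(String[] args) {
        Scenario1Model model = new Scenario1Model();
        try {
            model.producerFlow("msg");
        } catch (IllegalStateException e) {
            System.out.println("Producer failed: " + e.getMessage());
        }
        System.out.println("Committed rows: " + model.database); // []
    }
}
```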

Scenario 2: Producer throws an exception after Request-Reply, transaction type set to JDBC

In this scenario, the transaction type is set to JDBC for both the producer and the consumer. A Script step after the Request-Reply step in the producer throws an exception. Two MPLs are generated: the producer MPL with status Failed and the consumer MPL with status Completed. The database operations are rolled back in the producer but not in the consumer, because the consumer has already completed its own transaction.

Producer Integration Flow:

Consumer Integration Flow:

Scenario 3: Producer throws an exception after Request-Reply, transaction type set to JDBC for the producer and Not Required for the consumer

In this scenario, the transaction type is set to JDBC for the producer and to “Not Required” for the consumer. A Script step after the Request-Reply step throws an exception. Two MPLs are generated: the producer MPL with status Failed and the consumer MPL with status Completed. The database operations are rolled back in the producer but not in the consumer.

Producer Integration Flow:

Consumer Integration Flow:
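Scenarios 2 and 3 above can be sketched with a similar hypothetical Java model. The consumer finishes before the producer's script throws, so only the producer's writes are discarded. With JDBC the consumer commits its own transaction; with "Not Required" its write is modeled as applied immediately. Class and method names are illustrative assumptions, not Cloud Integration APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of Scenarios 2 and 3 (not SAP's implementation).
class Scenario2And3Model {
    // Stands in for the database shared by both flows.
    final List<String> database = new ArrayList<>();

    // Transaction type JDBC: commit only if 'work' completes normally.
    void jdbcTransaction(Consumer<List<String>> work) {
        List<String> staged = new ArrayList<>();
        work.accept(staged);
        database.addAll(staged);
    }

    // Transaction type "Not Required": each write is applied immediately.
    void noTransaction(Consumer<List<String>> work) {
        work.accept(database);
    }

    // The consumer completes successfully and returns a reply.
    String consumerFlow(String body, boolean consumerUsesJdbc) {
        Consumer<List<String>> work = tx -> tx.add("consumer:" + body);
        if (consumerUsesJdbc) {
            jdbcTransaction(work);   // Scenario 2
        } else {
            noTransaction(work);     // Scenario 3
        }
        return "reply to " + body;
    }

    void producerFlow(String body, boolean consumerUsesJdbc) {
        jdbcTransaction(tx -> {
            tx.add("producer:" + body);
            // Request-Reply via ProcessDirect: the consumer has already finished here
            String reply = consumerFlow(body, consumerUsesJdbc);
            // Script step after Request-Reply throws, so the producer's writes are rolled back
            throw new IllegalStateException("Script error after Request-Reply: " + reply);
        });
    }

    public static void main(String[] args) {
        for (boolean consumerUsesJdbc : new boolean[] {true, false}) {
            Scenario2And3Model model = new Scenario2And3Model();
            try {
                model.producerFlow("msg", consumerUsesJdbc);
            } catch (IllegalStateException e) {
                System.out.println("Producer failed: " + e.getMessage());
            }
            // Only the consumer's write survives in both scenarios
            System.out.println((consumerUsesJdbc ? "Scenario 2" : "Scenario 3")
                    + " committed rows: " + model.database);
        }
    }
}
```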


Multiple MPLs

Scenario 1: Splitter in producer only, without Join or Aggregator

In this scenario, both the producer and the consumer MPLs have the status Completed, and the message is split into three messages during processing.

Producer Integration Flow:

Consumer Integration Flow:

Scenario 2: Iterating Splitter in producer and Aggregator in consumer

In this scenario, one MPL is generated for the producer and one MPL for each split message processed by the consumer. Because of the default behavior of the Aggregator, one additional MPL is generated in the consumer integration flow.

Producer Integration Flow:

Consumer Integration Flow:

Scenario 3: Router in producer, routing to ProcessDirect adapters via two receivers

In this scenario, the Router invokes one of the two consumers, depending on which condition is met.

Producer Integration Flow:

Consumer 1 Integration Flow:

Consumer 2 Integration Flow:
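The routing decision can be sketched in Java as choosing one of two ProcessDirect addresses. This is a hypothetical model, not SAP's Router implementation: the header `region`, its values, and the addresses `/demo/consumer1` and `/demo/consumer2` are invented for illustration. In the integration flow itself, the condition is configured on the Router's routes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical model of a Router choosing a ProcessDirect receiver (not SAP's implementation).
class RouterModel {
    // Two consumer integration flows, each listening on its own (hypothetical) address.
    private final Map<String, UnaryOperator<String>> consumers = new HashMap<>();

    RouterModel() {
        consumers.put("/demo/consumer1", body -> "Consumer 1 handled " + body);
        consumers.put("/demo/consumer2", body -> "Consumer 2 handled " + body);
    }

    // Router step: the "region" header condition is illustrative only.
    String producerFlow(Map<String, String> headers, String body) {
        String address = "EU".equals(headers.get("region"))
                ? "/demo/consumer1"   // route 1
                : "/demo/consumer2";  // route 2 (default)
        return consumers.get(address).apply(body);
    }

    public static void main(String[] args) {
        RouterModel router = new RouterModel();
        Map<String, String> headers = new HashMap<>();
        headers.put("region", "EU");
        System.out.println(router.producerFlow(headers, "order-1")); // Consumer 1 handled order-1
        headers.put("region", "US");
        System.out.println(router.producerFlow(headers, "order-2")); // Consumer 2 handled order-2
    }
}
```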

Scenario 4: Request-Reply and End Message in Producer and End Event in Consumer

In this scenario, the Request-Reply step in the producer integration flow sends the message body set in Content Modifier 1 to the consumer 1 integration flow. In consumer 1, the received message is appended to the body of Content Modifier 1, and the result is returned to the Request-Reply step in the producer. The producer then forwards this message to consumer 2, which sends it to the SFTP server.

Producer Integration Flow:

Consumer 1 Integration Flow:

Consumer 2 Integration Flow:
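The message path of Scenario 4 can be traced with a small Java model. It is a sketch, not SAP's implementation: the method names, the appended text, and the list standing in for the SFTP server are hypothetical, and synchronous method calls stand in for the ProcessDirect calls made from the Request-Reply and End Message steps.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of Scenario 4 (not SAP's implementation).
class RequestReplyChainModel {
    // Stands in for the files written to the SFTP server by consumer 2.
    final List<String> sftpServer = new ArrayList<>();

    // Consumer 1: combines the received body with its own Content Modifier text
    // and returns the result to the producer's Request-Reply step.
    String consumer1(String body) {
        return body + " | appended by consumer 1";
    }

    // Consumer 2: hands the received message to the SFTP receiver.
    void consumer2(String body) {
        sftpServer.add(body);
    }

    void producer() {
        String body = "body from producer Content Modifier 1"; // Content Modifier 1
        String reply = consumer1(body);   // Request-Reply via ProcessDirect (synchronous)
        consumer2(reply);                 // End Message: send the reply on to consumer 2
    }

    public static void main(String[] args) {
        RequestReplyChainModel model = new RequestReplyChainModel();
        model.producer();
        System.out.println(model.sftpServer);
    }
}
```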
