Discovering SCP Workflow - Service Proxy

01-17-2018

6:12 AM

Previous post in this series: Discovering SCP Workflow - Using Postman.

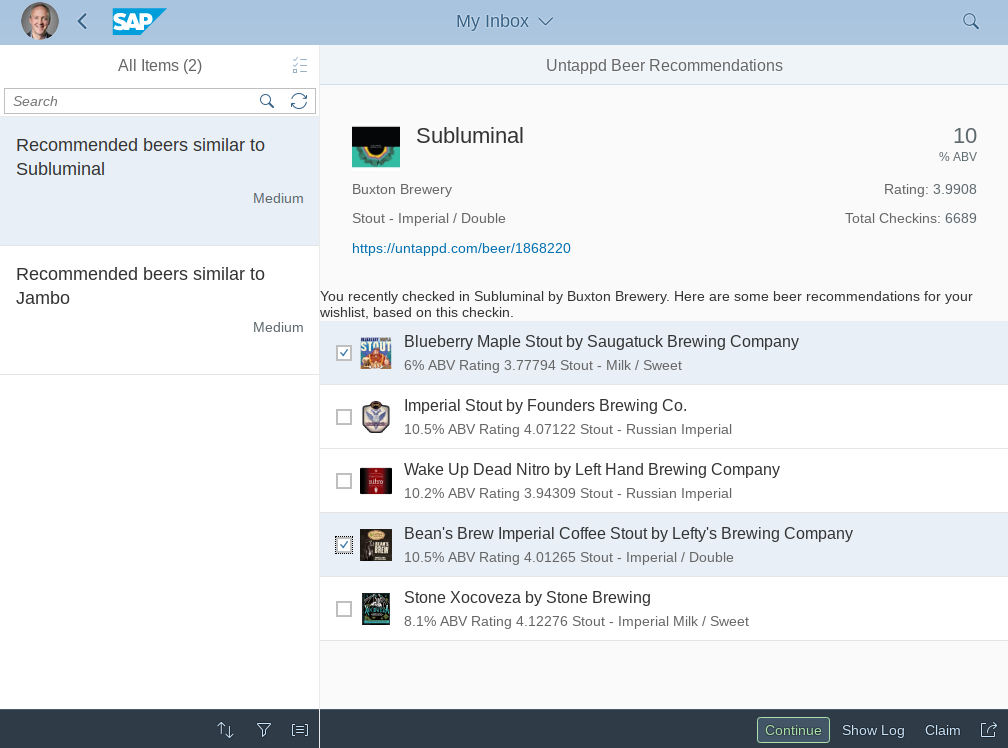

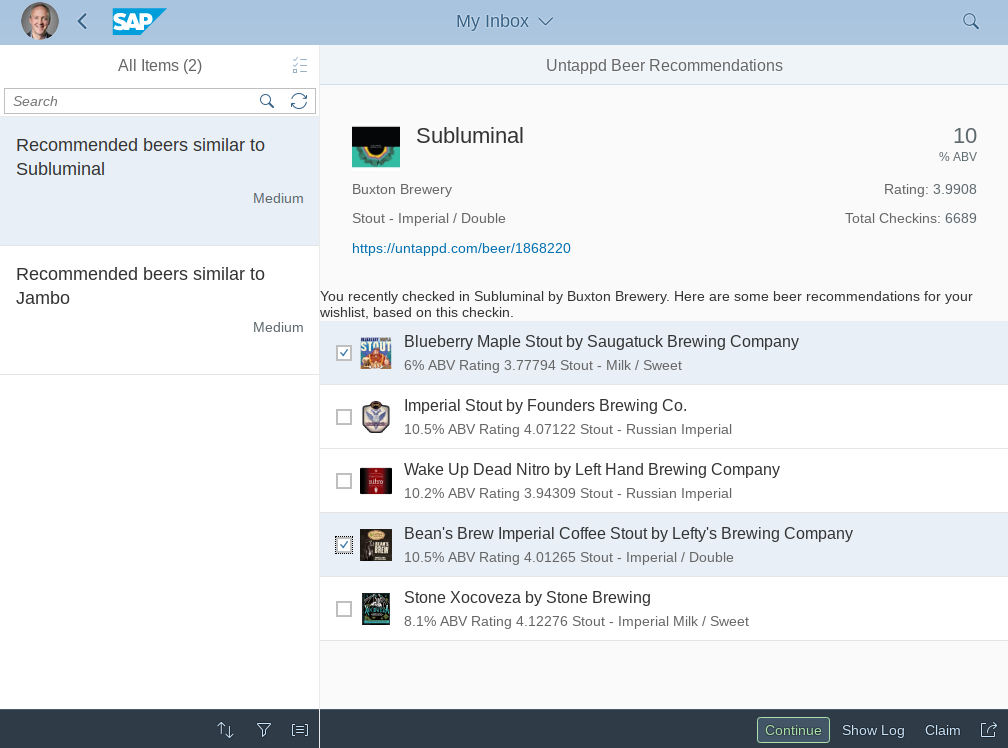

This post is part of a series, a guide to which can be found here: Discovering SCP Workflow.

In this post, I'll present a small proxy service I wrote to handle the minutiae of initiating a new workflow instance (see Discovering SCP Workflow - Instance Initiation).

Why did I write it? Well, lots of reasons. Here are some sensible sounding ones:

- In my journey of discovery, it enables me to encapsulate stuff I've covered, to allow me to keep the surface area for initiating new workflow instances to a minimum within the wider context of what I'm doing

- It's a way to hide authentication details, especially when wanting to connect to the Workflow API from outside of the context of the SCP Connectivity Service

- It wraps up the XSRF token process so that I don't have to deal with or even see what's going on

Here are some more fun ones:

- It allows me to continue my journey exploring Google Cloud Functions on the Google Cloud Platform (GCP), which I have used already but in a different context: writing handlers for Actions on Google - the framework for the Google Assistant platform which is the underlying layer for Google Home devices and more

- Similarly it lets me explore how I use Node.js libraries, and learn what's out there

- I love the idea of "the second mainframe era" with cloud computing and web terminals (I'm building all this and writing about it purely on Chrome OS with no workstation-local activities), and the combination of GCP and SCP is very attractive

- The proxy lets me explore the possibilities of how to divide up work across different areas of the cloud

This last reason is important to me - there's so much choice across different platforms (SCP, GCP and beyond) for design-time and runtime for solutions that unless you try things out it's hard to make informed decisions.

(Note: in the following post, some lines - code, URLs, etc - have been split for readability.)

The general requirements

The idea is that I will want to initiate workflow instances from various processes, and want a simple way of doing that with the minimum of fuss. As far as protection goes, I'm using a simple shared secret, in the form of a string that the caller passes and the receiver verifies before proceeding.

I also want to write and forget, and do it all in the cloud.

Google Cloud Functions

Before proceeding, it's worth spending a minute on what Google Cloud Functions allows me to do. I can write serverless functions (similar to AWS Lambda) and maintain the code in git repositories stored on GCP (similar to how git repositories are available on SCP). I can write a function in JavaScript, within a Node.js context, availing myself of the myriad libraries available for that platform, and I can test it inside a functions runtime emulator before deploying it to GCP using direct references to the source code master branch in the git repository.

And yes, I edited and tested this whole project in the cloud too, using a combination of vim on my Google Cloud Shell instance and, of course, the SAP Web IDE. After all, we're surely in the 2nd mainframe era by now!

Anyway, who knows, in the future I may migrate this proxy function to some other platform or service, but for now it will do fine.

The entry point to a Google Cloud Function is an Express-based handler, which like many HTTP server side frameworks, has the concept of a request object and a response object. For what it's worth, this simple pattern also influenced the work on the early Alternative Dispatcher Layer (ADL) for the ABAP and ICF platform.

The way I write my functions for this environment is to have a relatively simple file, exporting a single 'handler' function, and then farm out heavy lifting to another module. The Node.js require/export concept is what this is based upon. We'll see this at a detailed level shortly.

Setting the scene

I've always wondered whether it's better to show source code before demonstrating it, or demonstrating it first to give the reader some understanding of what the code is trying to achieve. In this case the demo is simple and worth showing first.

First, I'll highlight where I'm using this proxy for real, in a fun experiment involving beer recommendations based on what you're drinking, courtesy of Untappd's API, and presented within the SCP Workflow context:

I have a workflow definition called "untappdrecommendation", instances of which I initiate when another mechanism sees that I've checked in a beer on Untappd.

That mechanism is a Google Apps Script that polls an RSS feed associated with my Untappd checkins and notices when I check in a new beer (I did start by looking at using IFTTT for this but my experience with it wasn't great, so I rolled my own). Once it sees a new checkin, it uses the Untappd API to grab relevant information and then calls the Workflow API, via the proxy that is the subject of this post. Here's an excerpt from that Google Apps Script:

```javascript
var WFS_PROXY = "https://us-central1-ZZZ.cloudfunctions.net/wfs-proxy";
var WFS_SECRET = "lifetheuniverseandeverything";
var WORKFLOW_DEFINITION_ID = "untappdrecommendation";

[...]

// Go and get the beer info for this beer, particularly the similar beers.
// If we get the info, add it to the data and initiate a workflow.
var beerInfo = retrieveBeerInfo(beerId);
if (beerInfo) {
  row[CHECKIN.STATUS] = initiateWorkflow(beerInfo) || "FAILED";
}

[...]

function initiateWorkflow(context) {
  return UrlFetchApp
    .fetch(WFS_PROXY + "?token=" + WFS_SECRET + "&definitionId=" + WORKFLOW_DEFINITION_ID, {
      method : "POST",
      contentType : "application/json",
      payload : JSON.stringify(context)
    })
    .getContentText();
}
```

Let's look into what that initiateWorkflow function is doing:

- it receives a map* of information on the specific beer checked in

- then it makes a POST HTTP request to the proxy service, passing

  - a secret token (mentioned earlier)

  - the workflow definition ID "untappdrecommendation"

  - the context, containing the beer information, for the workflow instance

*some folks call them objects ... I prefer to call them maps if they're "passive" (if they have no methods other than the JavaScript object built-in methods). The term map is used in other languages for this sort of structure.

Looking at the value of WFS_PROXY, we can see that it's the address of my live, hosted Google Cloud Function. The "ZZZ" here replaces the real address, by the way. And while "lifetheuniverseandeverything" isn't the real secret token, I thought it was a nice substitute for this post.

Note that the ability to call the initiateWorkflow function just like that, and to only have to make a single call to UrlFetchApp.fetch (to make a single HTTP request using a facility in the standard Google Apps Script class UrlFetchApp), is what I meant by "simple" and "minimum fuss". I'm not having to deal with XSRF tokens, nor wonder whether I need to manage the token's context between calls.
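The URL in initiateWorkflow is built by plain string concatenation, which is fine for the values used here. As a minimal sketch, assuming a token or definition ID that might one day contain characters such as "&" or spaces, the query string could instead be assembled with encodeURIComponent, which is available in both Apps Script and Node.js. The helper name buildProxyUrl is hypothetical and is not part of the original script.

```javascript
// Hypothetical helper (not in the original script): builds the proxy URL
// with each query parameter name and value percent-encoded.
function buildProxyUrl(base, params) {
  var query = Object.keys(params)
    .map(function (key) {
      return encodeURIComponent(key) + "=" + encodeURIComponent(params[key]);
    })
    .join("&");
  return base + "?" + query;
}

var url = buildProxyUrl("https://us-central1-ZZZ.cloudfunctions.net/wfs-proxy", {
  token: "lifetheuniverseandeverything",
  definitionId: "untappdrecommendation"
});
console.log(url);
```

For the placeholder values in this post the result is the same URL as the concatenated version; the difference only shows up once a value needs escaping.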

Stepping out of the Google Apps Script context for a moment, let's see what it looks like when I use that proxy function by hand, with curl.

First, we've got a file, context.json, containing the beer info to be provided to the newly minted workflow instance in the form of context:

```json
{
  "beer": {
    "bid": 1868220,
    "beer_name": "Subluminal",
    "beer_abv": 10,
    "beer_ibu": 60,
    "beer_slug": "buxton-brewery-subluminal",
    "beer_style": "Stout - Imperial / Double",
    "is_in_production": 1,
    [...]
  }
}
```

We'll send that file as the body of a POST request thus:

```shell
curl \
  --verbose \
  --data @context.json \
  --header "Content-Type: application/json" \
  "https://us-central1-ZZZ.cloudfunctions.net/wfs-proxy
    ?definitionId=untappdrecommendation
    &token=lifetheuniverseandeverything"
```

Here's what we see:

```
> POST /wfs-proxy?definitionId=untappdrecommendation&token=lifetheuniverseandeverything HTTP/1.1
> Host: us-central1-ZZZ.cloudfunctions.net
> User-Agent: curl/7.52.1
> Accept: */*
> Content-Type: application/json
> Content-Length: 131956
>
< HTTP/2 200
< content-type: text/html; charset=utf-8
< etag: W/"2-d736d92d"
< function-execution-id: pr85lvavhrvx
< x-powered-by: Express
< x-cloud-trace-context: 12ea0eb8b055ade13ff786b4c52af11e;o=1
< date: Tue, 16 Jan 2018 12:12:12 GMT
< server: Google Frontend
< content-length: 2
<
OK
```

(Woo, an HTTP/2 response, by the way!)

This results in the creation of an instance of the "untappdrecommendation" workflow definition, which appears in My Inbox as shown in the screenshot earlier.

The proxy code

Now that we've seen what's supposed to happen, it's time to have a look at the JavaScript code. It's in two files. First, there's index.js, which contains the entry point handler which is invoked by the Google Cloud Functions machinery.

As an aside, it's this entry point handler which is referred to in the package.json which describes, amongst other things, the incantation to deploy a function to the cloud. Here's package.json, in case you're curious:

```json
{
  "name": "wfs-proxy",
  "project": "ZZZ",
  "version": "0.0.1",
  "description": "A proxy to triggering a workflow on the SCP Workflow Service",
  "main": "index.js",
  "scripts": {
    "test": "functions deploy $npm_package_name --entry-point handler --trigger-http",
    "deploy": "gcloud beta functions deploy $npm_package_name
      --entry-point handler
      --trigger-http
      --source https://source.developers.google.com
        /projects/$npm_package_project/repos/$npm_package_name"
  },
  "author": "DJ Adams",
  "license": "ISC",
  "dependencies": {
    "axios": "^0.17.1"
  }
}
```

You can see that 'handler' (in index.js) is specified as the cloud function's entry point in the deploy incantation.

Ok, so this is what's in index.js:

```javascript
const

  wfslib = require("./wfslib"),

  user = "p481810",
  pass = "*******",
  secret = "lifetheuniverseandeverything",

  initiateWorkflow = (definitionId, context, callback) => {

    wfslib.initiate({
      user : user,
      pass : pass,
      prod : false,
      definitionId : definitionId,
      context : context
    });

    callback("OK");

  },

  /**
   * Main entrypoint, following the Node Express
   * pattern. Expects an HTTP POST request with the
   * workflow definition ID in a query parameter
   * 'definitionId' and the payload being a JSON
   * encoded context.
   */
  handler = (req, res) => {

    switch (req.query.token) {
      case secret:
        initiateWorkflow(
          req.query.definitionId,
          req.body,
          result => { res.status(200).send(result); }
        );
        break;
      default:
        res.status(403).send("Incorrect token supplied");
    }

  };

exports.handler = handler;
```

It's pretty straightforward, with the handler function being the one that takes the pair of HTTP request and response objects, checks the token matches, and calls the initiateWorkflow function, accessing the definitionId and the context via the req.query and req.body mechanisms on the request object.

I'm not interested in what the Workflow API returns (if you remember from the previous post, it's a map that includes the ID of the newly minted workflow instance, details of the definition upon which it's based, etc). So I just return a simple string.
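Because the handler only depends on the shape of the request and response objects, its token check can be tried out locally with simple stand-ins. The following is a sketch under that assumption, not the deployed code: makeHandler and fakeResponse are hypothetical names, and the workflow call is injected so that nothing reaches Google Cloud Functions or the Workflow API.

```javascript
// Sketch of the token check from index.js, with the workflow call injected
// so it can be exercised without any network access.
// makeHandler and fakeResponse are hypothetical names used for illustration.
const makeHandler = (secret, initiate) => (req, res) => {
  if (req.query.token !== secret) {
    res.status(403).send("Incorrect token supplied");
    return;
  }
  initiate(req.query.definitionId, req.body);
  res.status(200).send("OK");
};

// Minimal stand-in for the Express response object: records status and body.
const fakeResponse = () => {
  const res = { statusCode: null, body: null };
  res.status = code => { res.statusCode = code; return res; };
  res.send = body => { res.body = body; return res; };
  return res;
};

// Record what would have been passed on to the workflow library.
const calls = [];
const handler = makeHandler(
  "lifetheuniverseandeverything",
  (definitionId, context) => calls.push({ definitionId, context })
);

// A request carrying the right token ...
const good = fakeResponse();
handler({
  query: { token: "lifetheuniverseandeverything", definitionId: "untappdrecommendation" },
  body: { beer: { beer_name: "Subluminal" } }
}, good);

// ... and one carrying the wrong token.
const bad = fakeResponse();
handler({ query: { token: "wrong" }, body: {} }, bad);

console.log(good.statusCode, good.body);
console.log(bad.statusCode, bad.body);
console.log(calls.length);
```

Only the request with the matching token results in a call to the injected function; the other is rejected with a 403 before any workflow work is attempted.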

To keep things modular, the actual "heavy lifting", if you can call it that, is done in wfslib.js, which looks like this:

```javascript
const

  axios = require("axios"),

  wfsUrl = "https://bpmworkflowruntimewfs-USERTRIAL.hanaTRIAL.ondemand.com/workflow-service/rest",
  tokenPath = "/v1/xsrf-token",
  workflowInstancesPath = "/v1/workflow-instances",

  /**
   * opts:
   * - user: SCP user e.g. p481810
   * - pass: SCP password
   * - prod: SCP production (boolean, default false)
   * - definitionId: ID of workflow definition
   * - context: context to pass when starting the workflow instance
   */
  initiate = opts => {

    const
      client = axios.create({
        baseURL : wfsUrl
          .replace(/USER/, opts.user)
          .replace(/TRIAL/g, opts.prod ? "" : "trial"),
        auth : {
          username : opts.user,
          password : opts.pass
        }
      });

    return client
      .get(tokenPath, {
        headers : {
          "X-CSRF-Token" : "Fetch"
        }
      })
      .then(res => {
        // Return the inner promise so that the promise returned by
        // initiate settles only once the POST has completed.
        return client
          .post(workflowInstancesPath, {
            definitionId : opts.definitionId,
            context : opts.context
          },
          {
            headers : {
              "X-CSRF-Token" : res.headers["x-csrf-token"],
              "Cookie" : res.headers["set-cookie"].join("; ")
            }
          })
          .then(res => res.data)
          .catch(err => err.status);
      });

  };

exports.initiate = initiate;
```

Here there's a single function defined and exported - initiate - which takes a series of parameters in a map (opts) and determines the Workflow API root endpoint based on the username and whether it's a trial account or not.
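To make the endpoint substitution concrete, here is the same pair of replace calls pulled out into a standalone function. The name workflowApiRoot is hypothetical; the template string and regular expressions are taken from wfslib.js, and the hosts printed are simply what that substitution produces.

```javascript
// Same substitution as in wfslib.js, pulled out into a hypothetical
// standalone function so the result is easy to inspect.
const wfsUrl = "https://bpmworkflowruntimewfs-USERTRIAL.hanaTRIAL.ondemand.com/workflow-service/rest";

const workflowApiRoot = (user, prod) => wfsUrl
  .replace(/USER/, user)                    // first (only) USER placeholder
  .replace(/TRIAL/g, prod ? "" : "trial");  // both TRIAL placeholders

console.log(workflowApiRoot("p481810", false));
// https://bpmworkflowruntimewfs-p481810trial.hanatrial.ondemand.com/workflow-service/rest

console.log(workflowApiRoot("p481810", true));
// https://bpmworkflowruntimewfs-p481810.hana.ondemand.com/workflow-service/rest
```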

I'm using the Promise-based HTTP library axios to manage my sequential HTTP requests, to avoid callbacks. First there's the GET request to /v1/xsrf-token to request a token, and then there's the POST request to /v1/workflow-instances to initiate a new instance. Inside the second call, I'm taking the XSRF token that was returned from the first call from the headers of the first response (res.headers["x-csrf-token"]).
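The two-step sequence can also be written with async/await, which may read more linearly than a nested then. This is a sketch rather than the code in wfslib.js: initiateWith and stubClient are hypothetical names, the client is passed in so that a stub can stand in for the axios instance, and the stubbed token, cookie and response data are made up for illustration.

```javascript
// Sketch of the token-then-POST sequence using async/await. The client is
// injected; anything with axios-style get/post methods will do.
const initiateWith = async (client, definitionId, context) => {
  // Step 1: ask for an XSRF token.
  const tokenRes = await client.get("/v1/xsrf-token", {
    headers: { "X-CSRF-Token": "Fetch" }
  });
  // Step 2: start the instance, passing the token and cookies back.
  const postRes = await client.post(
    "/v1/workflow-instances",
    { definitionId, context },
    {
      headers: {
        "X-CSRF-Token": tokenRes.headers["x-csrf-token"],
        "Cookie": tokenRes.headers["set-cookie"].join("; ")
      }
    }
  );
  return postRes.data;
};

// Stub mimicking the shape of axios responses (headers, data). It records
// the requests it receives; the values are invented for this example.
const requests = [];
const stubClient = {
  get: async (path) => {
    requests.push(["GET", path]);
    return {
      headers: { "x-csrf-token": "TOKEN123", "set-cookie": ["a=1", "b=2"] },
      data: ""
    };
  },
  post: async (path, body, config) => {
    requests.push(["POST", path, config.headers["X-CSRF-Token"], config.headers["Cookie"]]);
    return { headers: {}, data: { definitionId: body.definitionId } };
  }
};

const result = initiateWith(stubClient, "untappdrecommendation", { beer: {} });
result.then(data => console.log(requests, data));
```

The stub makes the ordering visible: the GET always completes before the POST is issued, and the POST carries the token and cookies from the first response.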

Note here that, this being a more low-level HTTP client library, there's no automatic cookie handling of the kind that happens in AJAX requests or in Postman (see Discovering SCP Workflow - Instance Initiation and Discovering SCP Workflow - Using Postman). We have to exert a little bit of manual effort - joining together any cookies returned from the first response, using semi-colons, and sending them in the second request in a Cookie header.
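The join in wfslib.js sends each Set-Cookie value back whole, attributes and all. A slightly stricter variation, sketched here as an assumption rather than something the proxy needs, keeps only the name=value part of each cookie, which is the form a Cookie request header is defined to carry. The helper name toCookieHeader and the cookie values are hypothetical.

```javascript
// Hypothetical helper: turn an array of Set-Cookie header values into a
// single Cookie request header, keeping only each cookie's name=value pair
// and dropping attributes such as Path, Secure and HttpOnly.
const toCookieHeader = setCookies => (setCookies || [])
  .map(cookie => cookie.split(";")[0].trim())
  .join("; ");

// Made-up cookie values, for illustration only.
const example = [
  "JSESSIONID=abc123; Path=/workflow-service; Secure; HttpOnly",
  "BIGipServer=xyz789; path=/"
];

console.log(toCookieHeader(example));
// JSESSIONID=abc123; BIGipServer=xyz789
```

The `|| []` guard also covers a response with no Set-Cookie header at all, in which case the result is an empty string instead of an exception.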

That's pretty much it. I have a nice simple function, running serverless in the cloud, which I can call with minimal effort to kick off a new workflow on the SAP Cloud Platform. Now I have that, I can go to town on the important stuff - making sure that my workflow definition fits the requirements and that the UI for the user task (making a choice from the recommended beers) works well and is available to handle the detail of the workflow item in the My Inbox app in the SAP Fiori Launchpad.

Next post in this series: Discovering SCP Workflow - Workflow Definition.


1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

5 -

schedule

1 -

Script Operator

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

Self Transformation

1 -

Self-Transformation

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

Slow loading

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

14 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

2 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transformation Flow

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

3 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Visual Studio Code

1 -

VSCode

2 -

VSCode extenions

1 -

Vulnerabilities

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1
