Using The SAP Cloud Platform And SAP Search API To...

Robert_Russell


05-19-2017

6:25 PM

Background

Setup SAP Cloud Platform: SAP Search API & Base Twitter API setup

Collecting Community Data and Tweeting from the SAP Cloud Platform

Using Web IDE with the Collected Answers.sap.com data as an OData Service

Analysis Of The Answers.sap.com Collected Data With SAP Lumira

Links to Individual Detail sections

- Setup HANA Table & Project files

- SQL Connection Configuration

- Twitter API OAuth Signature & tokens

- Setup The Secure Store

- XS Destinations

- Main XS Classic Code to Collect and Tweet About SAP Community Data

- XS Jobs

Background


I have a history of collecting SAP Community data and blogging about it here, mainly driven by my general interest in SAP technology. My favourite of these community-based blogs was the Thematic mapping of SDN Points and asking community members to register their location on a

One good thing about the new SAP Community platform is its constant updates (maybe it needs even more updates to fix all the issues), but the screenshot above does not reflect the current state of the site. The magnifying-glass icon has changed, and maybe we have all "started" by now, as the "Get Started" icon is no more ;).

I do not use that search page a great deal (my searching is mostly Google driven), but from it I discovered the documentation for the SAP Search API: https://api-onedx.find.sap.com/api/v1/docs/index.html. I have found the search API a lot more flexible than the main onedx.find.sap.com search page. One example is changing the default sort order of the results: OneDX sorts by "relevance" by default, but I wanted to sort by the last update timestamp, which I could do with search API calls rather than the limited options on the OneDX page. The search API is JSON based and needs very specific formatting (as per the documentation).

I found that with my SAP Community user and password I could control the process from my trial SAP Cloud Platform account, using a combination of HANA capabilities on the SAP Cloud Platform. I have created some XS Classic code to collect the SAP Community data, expose it as an OData service and send out tweets via the Twitter API.

The objective of this blog was to use the search API to look for SAP Community information of interest and alert me via tweets. The example code and configuration below monitor all the latest questions on the answers.sap.com forums and alert me to any questions with the SAPUI5 primary tag. I chose the SAPUI5 primary tag just as an example for this blog; I could choose any of the site's primary tags. I could also alter the API calls to search for any topic, but my main aim is to get the most recently updated information from the site. Therefore my example XS code is based on the search API's updated timestamp field for each question.

To show the whole process in one screenshot, I just checked answers.sap.com for the most recently updated question on the site. It was in the primary tag "BW (SAP Business Warehouse)", so I altered my code to tweet about this tag, as shown in the image below.

In the screenshot above the numbers represent the following

- Shows the answers.sap.com latest questions

- First I ran my XS job to collect the latest data. Then, via Web IDE and a UI5-based table reading an XS OData service in my SCP account, I can view these recently updated questions

- Finally, the 3rd image shows the tweet generated by my XS code after checking for updated questions in the primary tag BW (SAP Business Warehouse)

(As to the question in the tweet? I have no idea if you should convert real time infocubes to HANA optimized ones, so I am switching the primary tag to something else now 😉 )
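The tweet text itself is assembled in checkAnswer() in the main code further down: the title is cut to 58 characters and the author to 14, followed by the question URL. Below is a minimal JavaScript sketch of that composition with a rough length check. It assumes Twitter counts any link as a 23-character t.co URL and measures the rest with JavaScript string length, which only approximates Twitter's own counting; composeTweet and weightedLength are hypothetical helper names, and the URL and title are placeholders.

```javascript
// Hypothetical helpers mirroring the string building in checkAnswer().
// Assumption: Twitter counts every link as a 23-character t.co URL.
const TCO_URL_LENGTH = 23;

function composeTweet(mention, title, author, url) {
  // Same truncation as the blog code: 58 chars of title, 14 chars of author
  return mention + " " + title.substring(0, 58) + ".. by " +
    author.substring(0, 14) + "..| " + url;
}

// Approximate Twitter length: swap the real URL length for the t.co length.
// String length counts UTF-16 code units, so emoji etc. are only approximated.
function weightedLength(tweet, url) {
  return tweet.length - url.length + TCO_URL_LENGTH;
}

const url = "https://answers.sap.com/questions/123/index.html"; // placeholder URL
const tweet = composeTweet("@rjruss", "An example question title", "Example Author", url);
console.log(tweet);
console.log(weightedLength(tweet, url)); // never more than 113 given the truncation limits
```

With a one-word mention, the fixed truncation keeps the worst case at 8 + 58 + 6 + 14 + 4 + 23 = 113 characters, comfortably inside the 140-character limit of the time.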

I cover another example of collecting data from this site in my other blog here, where I analyse the Answers.sap.com forums from Oct 2016 to Apr 2017.

https://blogs.sap.com/2017/05/19/unofficial-analysis-of-the-answers.sap.com-question-forums/

The collection of source data for that blog was adapted from the base code and the technical details in this blog.

Setup SAP Cloud Platform: SAP Search API & Base Twitter API setup


General Details

First, some information about the SAP Cloud Platform trial accounts. It is worth noting that the HANA MDC systems in any SAP Cloud Platform trial account are shut down on a regular 12-hour schedule. I have no problem with that, and my example is not meant to run 24/7. It has always been, and will remain, a way for me to learn and practise using SAP technology. I do appreciate the fantastic options and solutions available on the SAP Cloud Platform. The fact that they are available for free in the trial accounts is a BIG bonus for me, as I can't make the investment in any Express version right now. Speaking of investment, the trial accounts must be an even BIGGER investment by SAP! However, I still do not like the fact that trial accounts are deleted after a period of time. It seems a shame to invest a lot of time into something that could be deleted by events out of my control. Back to my blog....

I have left the code and configuration below tied to the setup in my trial account. I have a schema and user called NEOGEODB, and I set up a package for my XSc project called sapcomrjr. If you follow any of the code, any references to these will obviously have to be adapted.

The NEOGEODB user has super-power 🙂 authorisations, with extra authorisations added as required. I used an anonymous connection throughout; with such a powerful user, I should review the authorisations and the anonymous setup at some point in the future.

I use Twitter quite a bit (mostly reading tweets rather than actually tweeting), but I did not want to use my main account to tweet. So I set up a tweeting bot called @inXSc_rjruss, as in the screenshot above. For that Twitter account I followed the link below to get the Twitter tokens required to tweet using XS Classic (XSc).

Twitter Bot setup - http://dghubble.com/blog/posts/twitter-app-write-access-and-bots/

I used the same Twitter bot approach, but with SQL Anywhere, when I analysed the blogs.sap.com RSS feeds and blogged about it here

The SAP Search API is flexible, and I mainly used Chrome DevTools to see how the requests were formatted when I used the https://onedx.find.sap.com/landing page.

The documentation can be found here.

https://api-onedx.find.sap.com/api/v1/docs/search/overview/index.html

Using some of that information, I tested the calls in Postman before moving on to SAP CP, to make sure I was getting what I asked for 🙂
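The request format I ended up with (see readAnswer() in the main code below) sorts on UPDATED_TIMESTAMP instead of the default relevance. As a minimal sketch, the JSON body can be built by a small JavaScript function before trying it in Postman. The structure, the "srh" repository and the TYPE filter come from the blog's code; buildSearchBody, its option names and the trimmed outputFields list are my own illustrative assumptions, not part of the documented API.

```javascript
// Hypothetical helper: builds the JSON body for POST /api/v1/search.
// Structure and field names follow readAnswer() in this blog; the option
// names and the trimmed outputFields list are illustrative assumptions.
function buildSearchBody(options) {
  const opts = Object.assign(
    { page: 0, size: 10, sortField: "UPDATED_TIMESTAMP", order: "desc", types: ["question"] },
    options || {}
  );
  return {
    returnResults: {
      page: { number: opts.page, size: opts.size },
      // Explicit sort instead of the default "relevance" ordering
      sort: [{ field: opts.sortField, order: opts.order }],
      outputFields: ["ID", "TITLE", "USERURL", "AUTHOR", "KEYWORDS",
        "CREATED_TIMESTAMP", "UPDATED_TIMESTAMP", "TYPE"]
    },
    repository: "srh",
    type: "content",
    // Restrict results by TYPE (questions by default)
    filters: [{ field: "TYPE", values: opts.types }]
  };
}

// Body for the 5 most recently updated questions, e.g. to paste into Postman
console.log(JSON.stringify(buildSearchBody({ size: 5 }), null, 2));
```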

Throwing SAP Search API A Curve Ball


Collecting Community Data and Tweeting from the SAP Cloud Platform


SAP HANA Web-based Development Workbench


I used the web-based workbench for my setup. If you need an introduction to it, it may be better to start with this developer tutorial (using Option A on SCP): SAP HANA XS Classic, Develop your first SAP HANA XSC Application

HANA Table

I created a main table to collect questions, with an extra column "TWEETED" to control the actual tweeting.

Table Name TWEET_SAP_Q

CREATE COLUMN TABLE "NEOGEODB"."TWEET_SAP_Q"(

"ID" INTEGER CS_INT NOT NULL,

"CLIENT" NVARCHAR(50),

"MODIFICATION_TIMESTAMP" LONGDATE CS_LONGDATE,

"EXTENT" NVARCHAR(500),

"TITLE" NVARCHAR(500),

"USERURL" NVARCHAR(1000),

"SOURCE" NVARCHAR(50),

"KEYWORDS" NVARCHAR(2500),

"LANGUAGE" NVARCHAR(100),

"AUTHOR" NVARCHAR(100),

"SM_TECH_IDS" NVARCHAR(500),

"CREATED_TIMESTAMP" LONGDATE CS_LONGDATE,

"UPDATED_TIMESTAMP" LONGDATE CS_LONGDATE,

"AUTHOR_ID" NVARCHAR(100),

"TYPE" NVARCHAR(50),

"LANGUAGE_VARIANT_IDS" NVARCHAR(50),

"TYPE_IDS" NVARCHAR(100),

"PRODUCT_FUNCTION_IDS" NVARCHAR(500),

"CONTENT" CLOB MEMORY THRESHOLD 1000,

"PRIMTAG" NVARCHAR(300),

"TWEETED" NVARCHAR(10)

) UNLOAD PRIORITY 5 AUTO MERGE;
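Each column of TWEET_SAP_Q lines up with a Search API output field, plus PRIMTAG and TWEETED. As an illustrative sketch (toUpsertRow is a hypothetical helper), the mapping that the readAnswer() loop further down performs for one result can be written as a function returning the 21 bind values of its UPSERT: 20 for the VALUES list and CREATED_TIMESTAMP again for the WHERE clause. Unlike the original loop, this sketch assumes a result may lack KEYWORDS and falls back to an empty array instead of throwing.

```javascript
// Hypothetical helper mirroring the readAnswer() loop: one Search API result
// becomes the 21 bind values for
// UPSERT "NEOGEODB"."TWEET_SAP_Q" (20 columns) VALUES (20 placeholders) WHERE CREATED_TIMESTAMP = ?
function toUpsertRow(r) {
  // Assumption: KEYWORDS may be missing; the original code would throw here
  const keywords = Array.isArray(r.KEYWORDS) ? r.KEYWORDS : [];
  return [
    r.ID, r.CLIENT, r.MODIFICATION_TIMESTAMP, r.EXTENT, r.TITLE, r.USERURL,
    r.SOURCE, JSON.stringify(keywords), r.LANGUAGE, r.AUTHOR,
    JSON.stringify(r.SM_TECH_IDS), r.CREATED_TIMESTAMP, r.UPDATED_TIMESTAMP,
    r.AUTHOR_ID, r.TYPE, r.LANGUAGE_VARIANT_IDS, r.TYPE_IDS,
    JSON.stringify(r.PRODUCT_FUNCTION_IDS), r.CONTENT,
    keywords.length > 0 ? keywords[0] : null, // PRIMTAG: first keyword
    r.CREATED_TIMESTAMP                        // value for the WHERE clause
  ];
}

const example = toUpsertRow({ ID: 1, KEYWORDS: ["SAPUI5"], CREATED_TIMESTAMP: "2017-05-19T18:25:00Z" });
console.log(example.length); // 21
```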

New Project files

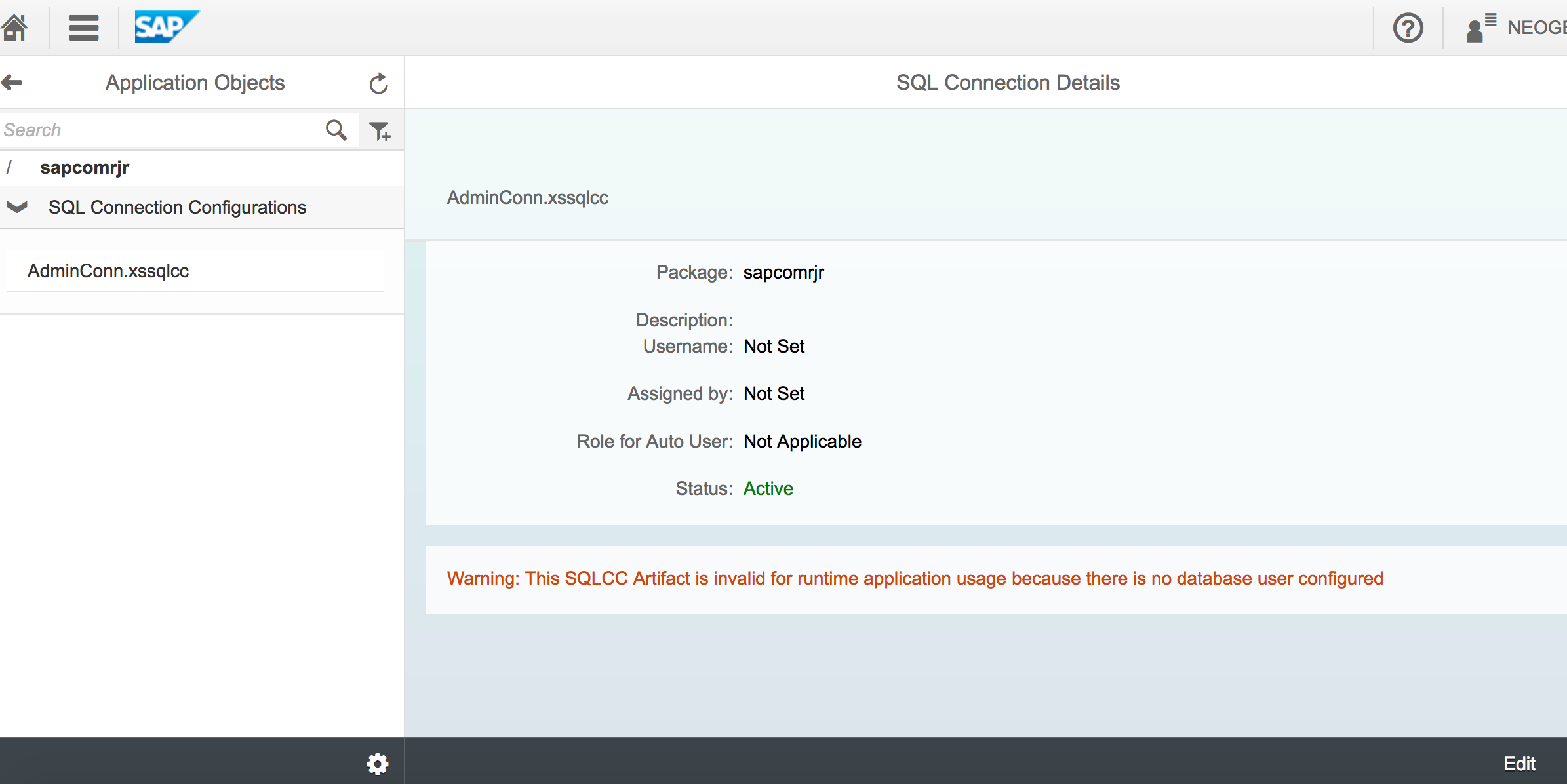

An SQLCC file to be used for connecting to HANA

AdminConn.xssqlcc

{}

The access file removes authentication and links to the SQL connection used.

.xsaccess file

{
"exposed" : true,
"authentication" : null,
"default_connection" : "sapcomrjr::AdminConn"
}

.xs file

{}

SQL Connection Configuration


I found that the SQL connections can be set up via the XS admin screens, at a URL like:

https://{DBNAME}{TRIALACCOUNT}.hanatrial.ondemand.com/sap/hana/xs/admin

Navigate to the XS artifacts (for me this was sapcomrjr); the AdminConn.xssqlcc configuration is shown below.

I could then edit the configuration to set the user to my NEOGEODB account, as shown below.

Twitter API OAuth Signature & tokens


The Twitter OAuth 1.0a tokens are vital for tweeting with my XS code below. I followed the Twitter developer page below to create a signature with XS code. (I had already created the OAuth tokens for my Twitter bot, as mentioned earlier in this blog.)

https://dev.twitter.com/oauth/overview/creating-signatures

My XS code is set up specifically to tweet (send a status update) and is tightly coupled to the signature process detailed in the Twitter help pages above. If extra parameters are added or the URL of the Twitter API call changes, the signature process in my XS code has to change too. The process is detailed in the Twitter documentation, and any additional parameters in the API call would require a review of the XS code.
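To make the signature steps concrete, here is a minimal sketch of the same OAuth 1.0a HMAC-SHA1 process in Node.js, using its built-in crypto module in place of $.security.crypto and $.util.codec. It follows the steps on the Twitter page above: percent-encode and sort the parameters, build the METHOD&URL&PARAMS base string, and sign it with the consumer secret and token secret. oauthSignature and percentEncode are hypothetical names and the credentials are placeholders. One detail worth checking in any port: OAuth percent-encoding follows RFC 3986, while JavaScript's encodeURIComponent leaves ! ' ( ) * unescaped, so this sketch encodes those characters explicitly.

```javascript
const crypto = require("crypto");

// RFC 3986 percent-encoding as OAuth 1.0a requires. encodeURIComponent leaves
// ! ' ( ) * unescaped, so those characters are encoded explicitly.
function percentEncode(value) {
  return encodeURIComponent(value).replace(/[!'()*]/g,
    (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());
}

// Returns the signature base string and the base64 HMAC-SHA1 signature.
// params holds every oauth_* value (except oauth_signature) plus the request
// parameters, e.g. status for statuses/update.
function oauthSignature(method, baseUrl, params, consumerSecret, tokenSecret) {
  // 1. Encode keys and values, sort by encoded key, join as key=value&...
  //    (a plain object cannot hold duplicate keys, so no secondary value sort)
  const paramString = Object.keys(params)
    .map((k) => [percentEncode(k), percentEncode(String(params[k]))])
    .sort((a, b) => (a[0] < b[0] ? -1 : a[0] > b[0] ? 1 : 0))
    .map(([k, v]) => k + "=" + v)
    .join("&");
  // 2. METHOD & encoded URL & encoded parameter string
  const baseString = [method.toUpperCase(), percentEncode(baseUrl), percentEncode(paramString)].join("&");
  // 3. Signing key: encoded consumer secret & encoded token secret
  const signingKey = percentEncode(consumerSecret) + "&" + percentEncode(tokenSecret);
  const signature = crypto.createHmac("sha1", signingKey).update(baseString).digest("base64");
  return { baseString, signature };
}

// Placeholder credentials; nonce and timestamp fixed so the output is repeatable
const result = oauthSignature("POST", "https://api.twitter.com/1.1/statuses/update.json", {
  status: "Hello (SAP) Community!",
  oauth_consumer_key: "CONSUMERKEY",
  oauth_nonce: "abc123",
  oauth_signature_method: "HMAC-SHA1",
  oauth_timestamp: "1495218300",
  oauth_token: "ACCESSTOKEN",
  oauth_version: "1.0"
}, "CONSUMERSECURITY", "ACCESSSECURITY");
console.log(result.baseString);
console.log(result.signature);
```

Because the nonce and timestamp are passed in, the function is deterministic and easy to test; in real use the nonce would be random and the timestamp the current epoch seconds.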

Setup The Secure Store


I called my secure store XSJS script secureStoreTest.xsjs. The secure store separates the tokens from the source code, so they are not in plain view in the code, and the tokens are automatically encrypted in the store. It is possible to skip the secure store and simply keep the tokens as variables. However, as a one-off process I add the tokens to the XS code to encrypt them in the secure store, and then delete them from the code once I have confirmed they were stored successfully. The secure store XS commands are then used to retrieve the tokens every time they are required.

My setup is the following

localStore1.xssecurestore

{}

I based my approach and code on Thomas Jung's blog, which covers the secure store here: https://blogs.sap.com/2014/12/02/sap-hana-sps-09-new-developer-features-new-core-xsjs-apis/

secureStoreTest.xsjs file

//I found it best to delete localstore before storing new values

function store() {

var config = {

name: "foo",

value: "[ \"{consumekey}\", \"{consumesec}\", \"{accesstok}\", \"{accesssec}\" ]"

};

var aStore = new $.security.Store("localStore1.xssecurestore");

aStore.store(config);

}

function read() {

var config = {

name: "foo"

};

try {

var store = new $.security.Store("localStore1.xssecurestore");

var value = store.read(config);

var stJS = JSON.parse(value);

var outv = stJS[3];

$.response.contentType = "text/plain";

$.response.setBody(outv);

}

catch(ex) {

//do some error handling

}

}

var aCmd = $.request.parameters.get('cmd');

switch (aCmd) {

case "store":

store();

break;

case "read":

read();

break;

default:

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody('Invalid Command');

}

There are two options for setting up the important Twitter tokens. I followed option 1 and used the secure store, or you could simply follow option 2 and hard-code the values in the XS source code.

1 Run secureStoreTest.xsjs with the parameter ?cmd=store in a web browser. This stores the tokens safely in the secure store, and after a successful run of that URL in my browser I could delete the actual values from the code.

or

2 Hard-code the variables in the main XS job below

Change the following section in the main q_scanswers.xsjs code to the appropriate Twitter token values.

// var consumekey = "CONSUMERKEY";

var consumekey = read(0);

// var consumesec = "CONSUMERSECURITY";

var consumesec = read(1);

// var accesstok = "ACCESSTOKEN";

var accesstok = read(2);

// var accesssec = "ACCESSSECURITY";

var accesssec = read(3);
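The read(0) to read(3) calls depend on the order in which store() wrote the tokens. A hedged sketch of an alternative: parse the stored JSON array once and name each entry, failing loudly if it does not hold four non-empty strings. parseTwitterTokens is a hypothetical helper, and the example value is the placeholder string from secureStoreTest.xsjs above.

```javascript
// Order in which store() writes the tokens into the JSON array
const TOKEN_NAMES = ["consumekey", "consumesec", "accesstok", "accesssec"];

// Hypothetical helper: turn the secure store value into named tokens
function parseTwitterTokens(storedValue) {
  const arr = JSON.parse(storedValue);
  if (!Array.isArray(arr) || arr.length !== TOKEN_NAMES.length) {
    throw new Error("expected a JSON array of " + TOKEN_NAMES.length + " tokens");
  }
  const tokens = {};
  TOKEN_NAMES.forEach((name, i) => {
    if (typeof arr[i] !== "string" || arr[i].length === 0) {
      throw new Error("missing token: " + name);
    }
    tokens[name] = arr[i];
  });
  return tokens;
}

// Placeholder value in the same format as secureStoreTest.xsjs stores it
const stored = '[ "{consumekey}", "{consumesec}", "{accesstok}", "{accesssec}" ]';
console.log(parseTwitterTokens(stored).accesssec); // prints {accesssec}
```

In the XS code this would also mean opening the secure store once per tweet instead of four times.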

XS Destinations


SAP Search API Destination

FileName scs.xshttpdest

host = "onedx.find.sap.com";

port = 443;

proxyType = http;

proxyHost = "proxy-trial";

proxyPort = 8080;

authType = basic;

useSSL = true;

timeout = 30000;

sslHostCheck = false;

sslAuth = anonymous;

Twitter API Destination

FileName twitter.xshttpdest

host = "api.twitter.com";

port = 443;

proxyType = http;

proxyHost = "proxy-trial";

proxyPort = 8080;

useSSL = true;

timeout = 30000;

sslHostCheck = false;

sslAuth = anonymous;

Destination Configuration in XS Admin Screen

For the SAP Community Search destination I needed to configure my login details in the XS Admin screen, as shown below. The highlighted User field (in the red box) needs my login details for this site.

At this stage there is no need to change twitter.xshttpdest. In my experience the SSL trust store can be left as the default, as the default SCP certificate list already includes the certificates needed to trust Twitter.

Main XS Classic Code to Collect and Tweet About SAP Community Data


I called the main XSJS script "q_scanswers.xsjs"

It comes with some caveats: originally the code was in separate sections, e.g. the collection of Search API community data and the tweeting process were two XS programs, which I then combined into the final working code below. I leave the judgement on error checking and the overall validity of this XS code to you. I am happy to answer questions on any part of the process, and I do know it works as intended.

I already knew how to use the Twitter API OAuth process, having covered it with another great SAP product, SQL Anywhere.

I analysed the SCN @SCNblogs timeline using SQL Anywhere with the help of UI5. The crypto and base64 utilities that come with XS make it possible to replicate my SQL Anywhere routines on HANA in SAP CP.

At this point I would like to thank thomas.jung for his contributions on the SAP Community site, which helped me solve some of the issues I had along the way. Any errors or misinterpretations of Thomas's blogs/answers are obviously mine. I reference the links at the end of my blog. If you think any part could be changed, let me know; I would be happy to hear about it.

In its current form the code is not set up to collect all questions. If followed exactly, it collects the 10 most recently updated questions (page size 10 in the search API JSON) every 15 minutes via the XS job schedule. It will most likely not read all questions into HANA unless the search API JSON section of the code is adapted.
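One way to adapt it, sketched below under assumptions: keep requesting further pages (incrementing returnResults.page.number) while the results, sorted by UPDATED_TIMESTAMP descending, are newer than the latest timestamp already stored in HANA. fetchPage is a hypothetical stand-in for the POST to /api/v1/search that returns the array found at result.results.results, and the sketch assumes UPDATED_TIMESTAMP values can be parsed by Date.parse. Since questions can be updated between page requests, duplicates are possible; the UPSERT in the main code would absorb those.

```javascript
// Sketch: incremental collection from a search sorted by UPDATED_TIMESTAMP desc.
// fetchPage(n) is a hypothetical function returning the result array for page n.
function collectSince(fetchPage, lastSeenTimestamp, maxPages) {
  const cutoff = lastSeenTimestamp ? Date.parse(lastSeenTimestamp) : null;
  const collected = [];
  for (let page = 0; page < maxPages; page++) {
    const results = fetchPage(page);
    if (results.length === 0) break; // no more data
    for (const r of results) {
      // Results are newest first, so the first already-seen item ends the scan
      if (cutoff !== null && Date.parse(r.UPDATED_TIMESTAMP) <= cutoff) {
        return collected;
      }
      collected.push(r);
    }
  }
  return collected;
}
```

In the XS job, lastSeenTimestamp could come from a SELECT MAX(UPDATED_TIMESTAMP) on TWEET_SAP_Q, and maxPages caps the number of API calls per 15-minute run.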

function read(v) {

var config = {

name: "foo"

};

try {

var store = new $.security.Store("localStore1.xssecurestore");

var value = store.read(config);

var stJS = JSON.parse(value);

var outv = stJS[v];

return outv;

} catch (ex) {

//do some error handling

}

}

function tweet1(text2tweet) {

var destination_package = "sapcomrjr";

var destination_name = "twitter";

//https://www.thepolyglotdeveloper.com/2015/03/create-a-random-nonce-string-using-javascript/

var randomString = function(length) {

var textr = "";

var possible = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789";

for (var i = 0; i < length; i++) {

textr += possible.charAt(Math.floor(Math.random() * possible.length));

}

return textr;

};

try {

var text = encodeURIComponent('@rjruss Still Working?');

var epn1 = new Date().getTime();

var ep2 = epn1.toString();

var epn = ep2.substring(0, 10);

var nonsense = randomString(6);

// text = encodeURIComponent('Q:' + epn);

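// Twitter expects RFC 3986 encoding; encodeURIComponent leaves ! ' ( ) * unescaped,
// which can make the signature mismatch for titles containing them, so encode those too.
text = encodeURIComponent(text2tweet).replace(/[!'()*]/g, function (c) {
return '%' + c.charCodeAt(0).toString(16).toUpperCase();
});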

var call = '/1.1/statuses/update.json?status=' + text;

var dest = $.net.http.readDestination(destination_package, destination_name);

var client = new $.net.http.Client();

var req = new $.web.WebRequest($.net.http.POST, call);

// var consumekey = "CONSUMERKEY";

var consumekey = read(0);

// var consumesec = "CONSUMERSECURITY";

var consumesec = read(1);

// var accesstok = "ACCESSTOKEN";

var accesstok = read(2);

// var accesssec = "ACCESSSECURITY";

var accesssec = read(3);

var t2 = 'oauth_consumer_key=' + consumekey;

var t3 = '&oauth_nonce=' + nonsense;

var t4 = '&oauth_signature_method=HMAC-SHA1';

var t5 = '&oauth_timestamp=' + epn;

var t6 = '&oauth_token=' + accesstok;

var t7 = '&oauth_version=1.0';

var t8 = '&status=' + text;

var tall = t2 + t3 + t4 + t5 + t6 + t7 + t8;

var tper = encodeURIComponent(tall);

var s1 = 'POST&https%3A%2F%2Fapi.twitter.com%2F1.1%2Fstatuses%2Fupdate.json&';

var out = s1 + tper;

var sk1 = encodeURIComponent(consumesec);

var sk2 = encodeURIComponent(accesssec);

var skey = sk1 + '&' + sk2;

var skeyhmac = $.security.crypto.sha1(out, skey);

var tsignature = encodeURIComponent($.util.codec.encodeBase64(skeyhmac));

var h1 = 'OAuth oauth_consumer_key="' + consumekey + '"';

var h2 = ',oauth_token="' + accesstok + '"';

var h3 = ',oauth_signature_method="HMAC-SHA1"';

var h4 = ',oauth_timestamp="' + epn + '"';

var h5 = ',oauth_nonce="' + nonsense + '"';

var h6 = ',oauth_version="1.0"';

var h7 = ',oauth_signature="' + tsignature + '"';

var twithead = h1 + h2 + h3 + h4 + h5 + h6 + h7;

req.headers.set('Authorization', twithead);

client.request(req, dest);

var response = client.getResponse();

//https://dev.twitter.com/overview/api/response-codes

var rettw = response.status;

if (rettw === 200) {

return rettw;

} else {

var ebody = response.body.asString();

var errM = JSON.parse(ebody);

return errM.errors[0].code;

//$.response.setBody(JSON.stringify(errM.errors[0].message));

}

} catch (e) {

//////$.response.contentType = "text/plain";

//////$.response.setBody(e.message);

return 'err';

}

}

function readAnswer() {

var destination_package = "sapcomrjr";

var destination_name = "scs";

var call = "/api/v1/search";

try {

var dest = $.net.http.readDestination(destination_package, destination_name);

var client = new $.net.http.Client();

var req = new $.net.http.Request($.net.http.POST, call);

req.headers.set('Content-Type', 'application/json; charset=UTF-8');

req.setBody(JSON.stringify({

"returnResults": {

"page": {

"number": 0,

"size": 10

},

"sort": [{

"field": "UPDATED_TIMESTAMP",

"order": "desc"

}],

"outputFields": ["ID", "CLIENT", "MODIFICATION_TIMESTAMP", "EXTENT", "TITLE", "USERURL", "CONTENT", "SOURCE", "KEYWORDS", "LANGUAGE",

"AUTHOR", "SM_TECH_IDS", "CREATED_TIMESTAMP", "UPDATED_TIMESTAMP", "AUTHOR_ID", "TYPE", "PRODUCT_FUNCTION", "LANGUAGE_VARIANT_IDS",

"TYPE_IDS", "PRODUCT_FUNCTION_IDS"]

},

"repository": "srh",

"type": "content",

"filters": [{

"field": "TYPE",

"values": ["question"]

}]

}));

var response = client.request(req, dest).getResponse();

var connection = $.hdb.getConnection({

"isolationLevel": $.hdb.isolation.REPEATABLE_READ,

"sapcomrjr.AdminConn": "package::sapcomrjr"

});

var list = [];

var body = response.body.asString();

var obj = JSON.parse(body);

var lp1 = obj.result.results.results;

for (var i = 0; i < lp1.length; i++) {

list.push(lp1[i]);

}

var vals = [];

for (var i = 0; i < lp1.length; i++) {

var valueToPush = [];

// vals.push(lp1[i].TITLE);

// vals.push(lp1[i].SCORE);

valueToPush[0] = lp1[i].ID;

valueToPush[1] = lp1[i].CLIENT;

valueToPush[2] = lp1[i].MODIFICATION_TIMESTAMP;

valueToPush[3] = lp1[i].EXTENT;

valueToPush[4] = lp1[i].TITLE;

valueToPush[5] = lp1[i].USERURL;

valueToPush[6] = lp1[i].SOURCE;

valueToPush[7] = JSON.stringify(lp1[i].KEYWORDS);

valueToPush[8] = lp1[i].LANGUAGE;

valueToPush[9] = lp1[i].AUTHOR;

valueToPush[10] = JSON.stringify(lp1[i].SM_TECH_IDS);

valueToPush[11] = lp1[i].CREATED_TIMESTAMP;

valueToPush[12] = lp1[i].UPDATED_TIMESTAMP;

valueToPush[13] = lp1[i].AUTHOR_ID;

valueToPush[14] = lp1[i].TYPE;

valueToPush[15] = lp1[i].LANGUAGE_VARIANT_IDS;

valueToPush[16] = lp1[i].TYPE_IDS;

valueToPush[17] = JSON.stringify(lp1[i].PRODUCT_FUNCTION_IDS);

valueToPush[18] = lp1[i].CONTENT;

valueToPush[19] = lp1[i].KEYWORDS[0];

//UPSERT repeat for where clause

valueToPush[20] = lp1[i].CREATED_TIMESTAMP;

vals.push(valueToPush);

}

//"ID","CLIENT","MODIFICATION_TIMESTAMP","EXTENT","TITLE","USERURL","SOURCE","KEYWORDS","LANGUAGE","AUTHOR","SM_TECH_IDS","CREATED_TIMESTAMP","UPDATED_TIMESTAMP","AUTHOR_ID","TYPE","LANGUAGE_VARIANT_IDS","TYPE_IDS","PRODUCT_FUNCTION_IDS","CONTENT"

connection.executeUpdate(

'UPSERT "NEOGEODB"."TWEET_SAP_Q" ("ID","CLIENT","MODIFICATION_TIMESTAMP","EXTENT","TITLE","USERURL","SOURCE","KEYWORDS","LANGUAGE","AUTHOR","SM_TECH_IDS","CREATED_TIMESTAMP","UPDATED_TIMESTAMP","AUTHOR_ID","TYPE","LANGUAGE_VARIANT_IDS","TYPE_IDS","PRODUCT_FUNCTION_IDS","CONTENT","PRIMTAG") VALUES (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?) where CREATED_TIMESTAMP = ?',

vals);

connection.commit();

} catch (e) {

// ////$.response.contentType = "text/plain";

// ////$.response.setBody(e.message);

}

}

function checkAnswer() {

var connection = $.hdb.getConnection({

"isolationLevel": $.hdb.isolation.REPEATABLE_READ,

"sapcomrjr.AdminConn": "package::sapcomrjr"

});

var sqlc =

'select TOP 3 TITLE,USERURL, AUTHOR, ID, UPDATED_TIMESTAMP from "NEOGEODB"."TWEET_SAP_Q" where TWEETED is NULL AND PRIMTAG = \'ABAP Development\'';

//select TWEETED,UPDATED_TIMESTAMP,USERURL,TITLE,PRIMTAG from "NEOGEODB"."TWEET_SAP_Q" where TWEETED is NULL AND PRIMTAG = 'ABAP Development'

//https://blogs.sap.com/2014/12/02/sap-hana-sps-09-new-developer-features-new-xsjs-database-interface/

var rs = connection.executeQuery(sqlc);

var vals = [];

for (var i = 0; i < rs.length; i++) {

var body = '@rjruss ';

var ti = rs[i]["TITLE"].substring(0, 58);

var us = rs[i]["USERURL"];

var au = rs[i]["AUTHOR"].substring(0, 14);

var it = rs[i]["ID"];

var ud = rs[i]["UPDATED_TIMESTAMP"];

var valueToPush = [];

body += ti + '.. by ' + au + '..| ' + us;

valueToPush[0] = ud;

valueToPush[1] = tweet1(body);

valueToPush[2] = it;

valueToPush[3] = it;

vals.push(valueToPush);

// body += ' link: ' + us + ' by ' + au + ' id ' + it;

}

var outb = JSON.stringify(vals);

try {

//upsert "NEOGEODB"."TWEET_SAP_Q"("TWEETED","ID") VALUES('sent','13847790') where ID = '13847790'

connection.executeUpdate(

'UPSERT "NEOGEODB"."TWEET_SAP_Q" ("UPDATED_TIMESTAMP", "TWEETED", "ID") VALUES ( ?, ?, ?) where ID = ?', vals);

//UPSERT "NEOGEODB"."TESTU" (CLIENT) VALUES ('tes3t') where CLIENT = 'tes2t'

connection.commit();

//////$.response.setBody(body);

} catch (e) {

////$.response.contentType = "text/plain";

////$.response.setBody(e.message);

}

////$.response.setBody(outb);

//////$.response.status = $.net.http.OK;

}

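// control() is the XS job entry point (see scan.xsjob). Top-level calls would also
// run when the job loads this file, so readAnswer()/checkAnswer() would run twice.
// Uncomment the two lines below only to test the script directly in a browser.
// readAnswer();
// checkAnswer();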

function control() {

readAnswer();

checkAnswer();

}

XS Job


I use the HANA / SAP Cloud Platform XS job functionality to regularly run the main XSc function.

This required the following to set it up.

scan.xsjob: used to define the job and call the XSc code

{

"description": "Update SAP answers",

"action": "hihanaxs.sapcomrjr:q_scanswers.xsjs::control",

"schedules": [

{

"description": "Update SAP answers",

"xscron": "* * * * * */15 30"

}

]

}

My workflow for XS jobs on SCP is to save/activate the xsjob file, then visit the XS admin page to activate both the job and the overall schedule.
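As I understand the xscron syntax, its seven fields are year, month, day, day of week, hour, minute and second, so "* * * * * */15 30" fires every 15 minutes at 30 seconds past the minute. The small JavaScript sketch below checks that reading for a given time. It only understands *, */n and plain numbers, is not SAP's scheduler, and parseXscron and firesAt are hypothetical names.

```javascript
// Assumed xscron field order: year month day dayOfWeek hour minute second
const XSCRON_FIELDS = ["year", "month", "day", "dayOfWeek", "hour", "minute", "second"];

function parseXscron(expr) {
  const parts = expr.trim().split(/\s+/);
  if (parts.length !== XSCRON_FIELDS.length) {
    throw new Error("expected 7 xscron fields, got " + parts.length);
  }
  const fields = {};
  XSCRON_FIELDS.forEach((name, i) => { fields[name] = parts[i]; });
  return fields;
}

// Only "*", "*/n" and plain integers are handled in this sketch. The step is
// treated as value % n === 0, which matches cron for 0-based fields
// (hour, minute, second) but not for 1-based ones such as day or month.
function fieldMatches(pattern, value) {
  if (pattern === "*") return true;
  const step = /^\*\/(\d+)$/.exec(pattern);
  if (step) return value % Number(step[1]) === 0;
  if (/^\d+$/.test(pattern)) return value === Number(pattern);
  throw new Error("pattern not supported in this sketch: " + pattern);
}

// True if the schedule would fire at the given local date/time (to the second)
function firesAt(expr, date) {
  const f = parseXscron(expr);
  if (f.dayOfWeek !== "*") throw new Error("dayOfWeek patterns not supported in this sketch");
  return fieldMatches(f.year, date.getFullYear()) &&
    fieldMatches(f.month, date.getMonth() + 1) &&
    fieldMatches(f.day, date.getDate()) &&
    fieldMatches(f.hour, date.getHours()) &&
    fieldMatches(f.minute, date.getMinutes()) &&
    fieldMatches(f.second, date.getSeconds());
}

console.log(firesAt("* * * * * */15 30", new Date(2017, 4, 19, 18, 45, 30))); // true
```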

Activate XS Job

I selected "Active" and entered my user/password for the job.

Activate XS Schedule

The Job will not run unless the Scheduler is Enabled (top right of the above screenshot)

The Result

Once the job is running, the tweets are sent to my notifications timeline.

OData Service


I used a simple xsodata service statement to create an OData feed as follows.

service {"NEOGEODB"."TWEET_SAP_Q" as "SAF" keys generate local "GENERATED_ID" ;}

As there is no primary key on my TWEET_SAP_Q table, I use the xsodata functionality to generate one called GENERATED_ID. I could test this service in the workbench, and once I confirmed it was working I could use the OData feed in Web IDE as follows.

SCP Destination

I added the following destination in my SCP cockpit to point back to my own SCP HANA xsodata service.

The URL is blanked out in the screenshot but is my trial account link.

Web IDE Config

I tested a simple table via a service URL call to my OData service.

For me the key step in the table definition was the following OData bindRows section

questions.bindRows({

path: "/SAF",

parameters: {

// operationMode: sap.ui.model.odata.OperationMode.Client

operationMode: sap.ui.model.odata.OperationMode.Server

}

});

This allows me to monitor the most recently updated questions in my table and filter for successful Twitter status codes (status 200 indicates a successful call to Twitter).
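With OperationMode.Server, sorting and filtering in the table are handed to the xsodata service as OData query options rather than applied in the browser. As an illustrative sketch, a request for successfully tweeted questions, newest first, could look like the URL built below. TWEETED is presumably stored as the string '200' in its NVARCHAR column. The service path tweets.xsodata is hypothetical, since the blog does not show the xsodata file name, and buildSafQuery is my own helper.

```javascript
// Hypothetical helper: builds an OData V2 query URL for the "SAF" entity set
// exposed by the xsodata service. serviceRoot is a placeholder path.
function buildSafQuery(serviceRoot, opts) {
  const params = [];
  if (opts.filter) params.push("$filter=" + encodeURIComponent(opts.filter));
  if (opts.orderby) params.push("$orderby=" + encodeURIComponent(opts.orderby));
  if (opts.top !== undefined) params.push("$top=" + String(opts.top));
  params.push("$format=json");
  return serviceRoot.replace(/\/$/, "") + "/SAF?" + params.join("&");
}

// Successfully tweeted questions, most recently updated first
console.log(buildSafQuery("/sapcomrjr/tweets.xsodata", {
  filter: "TWEETED eq '200'",
  orderby: "UPDATED_TIMESTAMP desc",
  top: 20
}));
```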

HANA Calculation View and SAP Lumira Setup


To analyse the data I had collected, I used SAP Lumira as mentioned in the opening of this blog. I set up a couple of calculation views based on a collection table. The date range is from 10 Oct 2016 to 30 April 2017.

From the SAP Search API data I added counter-based calculated columns for QUESTIONS and AUTHORS, and derived DATE (YYYY-MM-DD format), HOUR and DAYNAME columns from CALCULATED_TIMESTAMP. I used these columns to analyse the data as described at the start of this blog.
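To show what those calculated columns amount to, here is a JavaScript sketch that derives DATE, HOUR and DAYNAME from a timestamp and counts questions and distinct authors per day. It is not the calculation view itself: it assumes UTC, uses CREATED_TIMESTAMP as the source column (an assumption), and returns upper-case English day names in the style of HANA's DAYNAME function. deriveColumns and summarizeByDay are hypothetical names.

```javascript
const DAY_NAMES = ["SUNDAY", "MONDAY", "TUESDAY", "WEDNESDAY", "THURSDAY", "FRIDAY", "SATURDAY"];

// DATE (YYYY-MM-DD), HOUR and DAYNAME for one timestamp, evaluated in UTC
function deriveColumns(isoTimestamp) {
  const d = new Date(isoTimestamp);
  const pad = (n) => String(n).padStart(2, "0");
  return {
    DATE: d.getUTCFullYear() + "-" + pad(d.getUTCMonth() + 1) + "-" + pad(d.getUTCDate()),
    HOUR: d.getUTCHours(),
    DAYNAME: DAY_NAMES[d.getUTCDay()]
  };
}

// Per day: number of questions and number of distinct AUTHOR_IDs
function summarizeByDay(rows) {
  const byDay = new Map();
  for (const row of rows) {
    const { DATE } = deriveColumns(row.CREATED_TIMESTAMP);
    if (!byDay.has(DATE)) byDay.set(DATE, { QUESTIONS: 0, authors: new Set() });
    const entry = byDay.get(DATE);
    entry.QUESTIONS += 1;
    entry.authors.add(row.AUTHOR_ID);
  }
  const out = {};
  for (const [date, e] of byDay) out[date] = { QUESTIONS: e.QUESTIONS, AUTHORS: e.authors.size };
  return out;
}

console.log(deriveColumns("2017-05-19T18:25:00Z")); // { DATE: '2017-05-19', HOUR: 18, DAYNAME: 'FRIDAY' }
```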

***Accessing the SAPCP from Lumira works again after the fix noted in the question/answer below. My thanks to Jin for the answer to my question.

****THE Following Section for Lumira i

https://answers.sap.com/questions/198954/index.html

To connect Lumira to my trial SCP account I followed the approach I described in this blog, via an SCP tunnel. With the latest MDC SCP accounts any database user can be used, so I use my NEOGEODB user, whose details I can save in Lumira. However, the "SAP Cloud Platform Console Client" tunnel needs to be open to connect successfully.

Example chart I was able to produce analysing the SAP Community with Lumira connected to my SAPCP trial account is below.

That completes my blog and thanks for reading.

Best Regards

Robert Russell

The following links are to reference pages that I found useful for the complete setup.

SAP HANA XS JavaScript API Reference

https://help.sap.com/http.svc/rc/3de842783af24336b6305a3c0223a369/1.0.12/en-US/index.html

XSc Security API

https://help.sap.com/viewer/d89d4595fae647eabc14002c0340a999/1.0.12/en-US/c6bbca35b7734168ac585c0aef...

XSc Namespace: crypto

https://help.sap.com/http.svc/rc/3de842783af24336b6305a3c0223a369/2.0.00/en-US/$.security.crypto.htm...

Batch Insert

https://archive.sap.com/discussions/thread/3544443

SQLCC config

https://archive.sap.com/discussions/thread/3739973

http://help-legacy.sap.com/saphelp_hanaplatform/helpdata/en/74/0f8789a73340c2879246ebbaff6d5d/conten...


- SAP Managed Tags:

- SAP HANA,

- SAP Community,

- SAP Business Technology Platform


1 -

iot

1 -

Java

1 -

job

1 -

Job Information Changes

1 -

Job-Related Events

1 -

Job_Event_Information

1 -

joule

4 -

Journal Entries

1 -

Just Ask

1 -

Kerberos for ABAP

8 -

Kerberos for JAVA

8 -

KNN

1 -

Launch Wizard

1 -

Learning Content

2 -

Life at SAP

5 -

lightning

1 -

Linear Regression SAP HANA Cloud

1 -

Loading Indicator

1 -

local tax regulations

1 -

LP

1 -

Machine Learning

2 -

Marketing

1 -

Master Data

3 -

Master Data Management

14 -

Maxdb

2 -

MDG

1 -

MDGM

1 -

MDM

1 -

Message box.

1 -

Messages on RF Device

1 -

Microservices Architecture

1 -

Microsoft Universal Print

1 -

Middleware Solutions

1 -

Migration

5 -

ML Model Development

1 -

Modeling in SAP HANA Cloud

8 -

Monitoring

3 -

MTA

1 -

Multi-Record Scenarios

1 -

Multiple Event Triggers

1 -

Myself Transformation

1 -

Neo

1 -

New Event Creation

1 -

New Feature

1 -

Newcomer

1 -

NodeJS

2 -

ODATA

2 -

OData APIs

1 -

odatav2

1 -

ODATAV4

1 -

ODBC

1 -

ODBC Connection

1 -

Onpremise

1 -

open source

2 -

OpenAI API

1 -

Oracle

1 -

PaPM

1 -

PaPM Dynamic Data Copy through Writer function

1 -

PaPM Remote Call

1 -

PAS-C01

1 -

Pay Component Management

1 -

PGP

1 -

Pickle

1 -

PLANNING ARCHITECTURE

1 -

Popup in Sap analytical cloud

1 -

PostgrSQL

1 -

POSTMAN

1 -

Process Automation

2 -

Product Updates

4 -

PSM

1 -

Public Cloud

1 -

Python

4 -

Qlik

1 -

Qualtrics

1 -

RAP

3 -

RAP BO

2 -

Record Deletion

1 -

Recovery

1 -

recurring payments

1 -

redeply

1 -

Release

1 -

Remote Consumption Model

1 -

Replication Flows

1 -

research

1 -

Resilience

1 -

REST

1 -

REST API

2 -

Retagging Required

1 -

Risk

1 -

Rolling Kernel Switch

1 -

route

1 -

rules

1 -

S4 HANA

1 -

S4 HANA Cloud

1 -

S4 HANA On-Premise

1 -

S4HANA

3 -

S4HANA_OP_2023

2 -

SAC

10 -

SAC PLANNING

9 -

SAP

4 -

SAP ABAP

1 -

SAP Advanced Event Mesh

1 -

SAP AI Core

8 -

SAP AI Launchpad

8 -

SAP Analytic Cloud Compass

1 -

Sap Analytical Cloud

1 -

SAP Analytics Cloud

4 -

SAP Analytics Cloud for Consolidation

3 -

SAP Analytics Cloud Story

1 -

SAP analytics clouds

1 -

SAP BAS

1 -

SAP Basis

6 -

SAP BODS

1 -

SAP BODS certification.

1 -

SAP BTP

21 -

SAP BTP Build Work Zone

2 -

SAP BTP Cloud Foundry

6 -

SAP BTP Costing

1 -

SAP BTP CTMS

1 -

SAP BTP Innovation

1 -

SAP BTP Migration Tool

1 -

SAP BTP SDK IOS

1 -

SAP Build

11 -

SAP Build App

1 -

SAP Build apps

1 -

SAP Build CodeJam

1 -

SAP Build Process Automation

3 -

SAP Build work zone

10 -

SAP Business Objects Platform

1 -

SAP Business Technology

2 -

SAP Business Technology Platform (XP)

1 -

sap bw

1 -

SAP CAP

2 -

SAP CDC

1 -

SAP CDP

1 -

SAP CDS VIEW

1 -

SAP Certification

1 -

SAP Cloud ALM

4 -

SAP Cloud Application Programming Model

1 -

SAP Cloud Integration for Data Services

1 -

SAP cloud platform

8 -

SAP Companion

1 -

SAP CPI

3 -

SAP CPI (Cloud Platform Integration)

2 -

SAP CPI Discover tab

1 -

sap credential store

1 -

SAP Customer Data Cloud

1 -

SAP Customer Data Platform

1 -

SAP Data Intelligence

1 -

SAP Data Migration in Retail Industry

1 -

SAP Data Services

1 -

SAP DATABASE

1 -

SAP Dataspher to Non SAP BI tools

1 -

SAP Datasphere

9 -

SAP DRC

1 -

SAP EWM

1 -

SAP Fiori

3 -

SAP Fiori App Embedding

1 -

Sap Fiori Extension Project Using BAS

1 -

SAP GRC

1 -

SAP HANA

1 -

SAP HCM (Human Capital Management)

1 -

SAP HR Solutions

1 -

SAP IDM

1 -

SAP Integration Suite

9 -

SAP Integrations

4 -

SAP iRPA

2 -

SAP LAGGING AND SLOW

1 -

SAP Learning Class

1 -

SAP Learning Hub

1 -

SAP Master Data

1 -

SAP Odata

2 -

SAP on Azure

1 -

SAP PartnerEdge

1 -

sap partners

1 -

SAP Password Reset

1 -

SAP PO Migration

1 -

SAP Prepackaged Content

1 -

SAP Process Automation

2 -

SAP Process Integration

2 -

SAP Process Orchestration

1 -

SAP S4HANA

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Cloud for Finance

1 -

SAP S4HANA Cloud private edition

1 -

SAP Sandbox

1 -

SAP STMS

1 -

SAP successfactors

3 -

SAP SuccessFactors HXM Core

1 -

SAP Time

1 -

SAP TM

2 -

SAP Trading Partner Management

1 -

SAP UI5

1 -

SAP Upgrade

1 -

SAP Utilities

1 -

SAP-GUI

8 -

SAP_COM_0276

1 -

SAPBTP

1 -

SAPCPI

1 -

SAPEWM

1 -

sapmentors

1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

5 -

schedule

1 -

Script Operator

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

Self Transformation

1 -

Self-Transformation

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

Slow loading

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

14 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

2 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transformation Flow

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

2 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Visual Studio Code

1 -

VSCode

1 -

Vulnerabilities

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1


