
Microservices, Containers, Images, Cluster ... and...


04-17-2017

3:56 PM

Unless you have been living under a rock for the last two years, you have certainly heard these buzzwords. I have been developing software for a decade, and getting a simple Hello World or a database select running never tested my patience before: all it took was installing some software and copying a few lines of code from a book or the internet. This time, it took me a while to understand each of these technology stacks and run some basic programs. A basic program is not a typical scenario for these technologies, but it is a good start for understanding each of them on the way to building a complex scenario.

Disclaimer:

I am not a Linux guy; I develop applications on Windows, and at first it was tough for me to understand and remember even simple commands. For this blog, I did all of the hands-on work on my local Windows 10 machine, using Hyper-V virtual machines.

If you are a beginner looking to learn these technologies, this blog may help. Its intention is not to dive deep into each of them but to give you an end-to-end experience. Where I can, I point to references for learning more.

Let me start with some terminology.

Microservices is an approach to developing a single application as a suite of small services, each running in its own process and communicating with lightweight mechanisms, often an HTTP resource API. These services are built around business capabilities and independently deployable by fully automated deployment machinery. There is a bare minimum of centralized management of these services, which may be written in different programming languages and use different data storage technologies.

To read more in detail, see https://martinfowler.com/articles/microservices.html
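To make the definition concrete, here is a minimal sketch (not from the original post) of two hypothetical services. A price service and an order service communicate only through a small HTTP resource API, using nothing but Node.js built-in modules. The service names, routes and prices are invented for illustration. In a real deployment each service would run in its own process or container; here they share one process only so the example stays self-contained.

```javascript
'use strict';
const http = require('http');

// Hypothetical price service: owns its own data and exposes it as GET /prices/<sku>.
function createPriceService() {
  const prices = new Map([['apple', 0.5], ['pear', 0.75]]); // illustrative in-memory store
  return http.createServer((req, res) => {
    const match = /^\/prices\/([\w-]+)$/.exec(req.url);
    const found = req.method === 'GET' && match && prices.has(match[1]);
    res.writeHead(found ? 200 : 404, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify(found ? { sku: match[1], price: prices.get(match[1]) } : { error: 'not found' }));
  });
}

// Minimal HTTP client: GET a URL and parse the JSON response body.
function getJson(url) {
  return new Promise((resolve, reject) => {
    http.get(url, { agent: false }, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        try {
          resolve({ status: res.statusCode, data: JSON.parse(body) });
        } catch (err) {
          reject(err);
        }
      });
    }).on('error', reject);
  });
}

// Hypothetical order service: knows only the price service's URL, not its data or code.
function createOrderService(priceServiceUrl) {
  return http.createServer(async (req, res) => {
    const send = (status, payload) => {
      res.writeHead(status, { 'Content-Type': 'application/json' });
      res.end(JSON.stringify(payload));
    };
    const match = /^\/orders\/([\w-]+)\/(\d+)$/.exec(req.url); // GET /orders/<sku>/<quantity>
    if (req.method !== 'GET' || !match) return send(404, { error: 'not found' });
    try {
      const { status, data } = await getJson(`${priceServiceUrl}/prices/${match[1]}`);
      if (status !== 200) return send(status, data); // pass the price service's answer through
      const quantity = Number(match[2]);
      send(200, { sku: data.sku, quantity, total: data.price * quantity });
    } catch (err) {
      send(502, { error: 'price service unavailable' }); // the dependency failed, not this service
    }
  });
}

// Start a server on the given port (0 = any free port) and resolve with its base URL.
function listen(server, port = 0) {
  return new Promise((resolve) => {
    server.listen(port, '127.0.0.1', () => resolve(`http://127.0.0.1:${server.address().port}`));
  });
}
```

Here is a usage example:

1. Start the price service with listen(createPriceService(), 3001).
2. Start the order service with listen(createOrderService('http://127.0.0.1:3001'), 3002).
3. Open http://127.0.0.1:3002/orders/apple/4. The response should report a total of 2.

The order service never touches the price data directly. Either service can therefore be rebuilt and redeployed independently, as long as the HTTP contract stays the same.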

Containers are a method of operating system virtualization that allows you to run an application and its dependencies in resource-isolated processes. Containers let you easily package an application's code, configurations and dependencies into easy-to-use building blocks that deliver environmental consistency, operational efficiency, developer productivity and version control. Containers can help ensure that applications deploy quickly, reliably and consistently regardless of the deployment environment. Containers also give you more granular control over resources, which improves the efficiency of your infrastructure.

A container image is a lightweight, stand-alone, executable package of a piece of software that includes everything needed to run it: code, runtime, system tools, system libraries and settings. Available for both Linux- and Windows-based apps, containerized software runs the same way regardless of the environment. Containers isolate software from its surroundings, for example from differences between development and staging environments, and help reduce conflicts between teams running different software on the same infrastructure.

A cluster is a group of servers and other resources that act like a single system and enable high availability and, in some cases, load balancing and parallel processing.

Now let's look at the technologies.

Docker is the world’s leading software container platform. Developers use Docker to eliminate “works on my machine” problems when collaborating on code with co-workers. Operators use Docker to run and manage apps side-by-side in isolated containers to get better compute density. Enterprises use Docker to build agile software delivery pipelines to ship new features faster, more securely and with confidence for both Linux and Windows Server apps.

To read more in detail, see https://www.docker.com/

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery. Kubernetes builds upon 15 years of experience of running production workloads at Google, combined with best-of-breed ideas and practices from the community.

To read more in detail, see https://kubernetes.io/

To develop day-to-day on your local machine, you need a few software packages. Here they are:

Docker for Windows

An integrated, easy-to-deploy development environment for building, debugging and testing Docker apps on a Windows PC. Docker for Windows is a native Windows app deeply integrated with Hyper-V virtualization, networking and file system, making it the fastest and most reliable Docker environment for Windows.

Get it from https://store.docker.com/editions/community/docker-ce-desktop-windows

Kubernetes on Windows

I am not going to use the Kubernetes tool 'minikube' in this blog, as I chose another platform. If you plan to work with Google Cloud Platform, you can start with minikube; see https://kubernetes.io/docs/getting-started-guides/minikube/. Whatever local setup you develop on, however, you can run the containerized application in production anywhere, because the underlying technology is Docker.

OpenShift Origin

Origin is the upstream community project that powers OpenShift. Built around a core of Docker container packaging and Kubernetes container cluster management, Origin is also augmented by application lifecycle management functionality and DevOps tooling. Origin provides a complete open source container application platform.

Minishift is a tool that helps you run OpenShift locally by launching a single-node OpenShift cluster inside a virtual machine. With Minishift you can try out OpenShift or develop with it, day-to-day, on your local machine. You can run Minishift on Windows, Mac OS, and GNU/Linux operating systems. Minishift uses libmachine for provisioning virtual machines, and OpenShift Origin for running the cluster. See https://www.openshift.org/minishift/

If you have followed my older blogs, you know I have always been a fan of Red Hat's OpenShift because of its community, documentation and user experience. I chose Minishift over minikube here because of technical challenges I had running minikube on Hyper-V, which Docker for Windows requires. Minishift and Docker for Windows, on the other hand, both ran in parallel on Hyper-V with no issues.

Get it from https://github.com/minishift/minishift/releases

Enough of talking, let’s get started.

If Hyper-V is not enabled, you can enable it under Windows Features:

Search -> Windows Features -> Turn Windows Features on or off

Install Docker for Windows and make sure it is running.

Look at the VM it creates in Hyper-V Manager:

Search -> Hyper-V Manager

Before you install Minishift, add a virtual switch using Hyper-V Manager. Make sure that you pair the virtual switch with a network card (wired or wireless) that is connected to the network.

In Hyper-V Manager, select Virtual Switch Manager... from the 'Actions' menu on the right.

Under the 'Virtual Switches' section, select New virtual network switch.

Under 'What type of virtual switch do you want to create?', select External.

Select the Create Virtual Switch button.

Under ‘Virtual Switch Properties’, give the new switch a name such as External VM Switch.

Under ‘Connection Type’, ensure that External Network has been selected.

Select the physical network card to be paired with the new virtual switch. This is the network card that is physically connected to the network.

Select Apply to create the virtual switch. At this point you will most likely see the following message.

Click Yes to continue.

Select OK to close the Virtual Switch Manager Window.

Place minishift.exe in the root of your C: drive (C:\).

Open PowerShell as an Administrator and execute the following statement.

When the Minishift VM has been created successfully, the output shows the server URL and login details.

You can also see the new Minishift VM in Hyper-V Manager.

You can access the OpenShift server through the web console at the HTTPS URL.

By default, a project named 'My Project' is created; you can also create a new project.

Now let's start the real development. I want to build a simple Hello World Node.js app, containerize it, build the image and deploy it to OpenShift Origin.

Create a folder on your C: drive for all your apps. I created a folder named 'home' on the C: drive, with a subfolder named 'nodejs-docker-webapp' for this app.

I followed the example from the Node.js documentation, so I won't go into its details here; see https://nodejs.org/en/docs/guides/nodejs-docker-webapp/
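The guide linked above builds its app with Express. A dependency-free approximation is a server.js that does the same job with Node's built-in http module, so it runs without npm install. This is my assumption, not the guide's code.

Port 8080 and the 0.0.0.0 bind address are assumed to match the guide. Binding to all interfaces rather than localhost matters inside a container. Docker's port mapping forwards traffic to the container's network interface, not to its loopback address.

```javascript
'use strict';
const http = require('http');

// Assumed values: listen on 8080 (overridable via PORT) on all interfaces,
// so that a port mapping such as `docker run -p <host-port>:8080` can reach the app.
const PORT = Number(process.env.PORT) || 8080;
const HOST = '0.0.0.0';

const server = http.createServer((req, res) => {
  if (req.method === 'GET' && req.url === '/') {
    res.writeHead(200, { 'Content-Type': 'text/plain; charset=utf-8' });
    res.end('Hello world\n');
  } else {
    res.writeHead(404, { 'Content-Type': 'text/plain; charset=utf-8' });
    res.end('Not found\n');
  }
});

server.listen(PORT, HOST, () => {
  console.log(`Running on http://${HOST}:${PORT}`);
});
```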

For ease of development, I suggest using Docker for Windows first and switching to the Docker daemon on the Minishift VM later. Build your container image from the Dockerfile in the current folder.

Run your container and verify the output in your browser.
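Checking in the browser works, but a small script makes the same check repeatable, for example after rebuilding the image against the Minishift Docker daemon. This is a sketch with two assumptions:

- The URL you pass in points at the host port you mapped when starting the container. The Node.js guide uses a mapping like docker run -p 49160:8080 -d <image>, which would make it http://localhost:49160/.
- The expected text is the Hello World response.

```javascript
'use strict';
const http = require('http');

// Fetch `url` and report whether it answered HTTP 200 with `expectedText` in the body.
function smokeTest(url, expectedText = 'Hello world', timeoutMs = 5000) {
  return new Promise((resolve) => {
    const req = http.get(url, { agent: false }, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        resolve({ ok: res.statusCode === 200 && body.includes(expectedText), status: res.statusCode, body });
      });
    });
    // Give up if the container does not answer in time (e.g. wrong port mapping).
    req.setTimeout(timeoutMs, () => req.destroy(new Error(`timed out after ${timeoutMs} ms`)));
    // Connection refused, timeout, etc. are reported as a failed check, not thrown.
    req.on('error', (err) => resolve({ ok: false, status: null, body: '', error: err.message }));
  });
}

// Usage: node smoke-test.js http://localhost:49160/
const target = process.argv[2];
if (target) {
  smokeTest(target).then((result) => {
    console.log(result.ok ? 'OK' : 'FAILED', result);
    process.exitCode = result.ok ? 0 : 1;
  });
}
```

To use it, save the script under a name of your choice (smoke-test.js is just an example) and run it as node smoke-test.js http://localhost:49160/. The exit code is 0 on success and 1 otherwise, so the script can also be used in a build script.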

Go back to your PowerShell session running as Administrator.

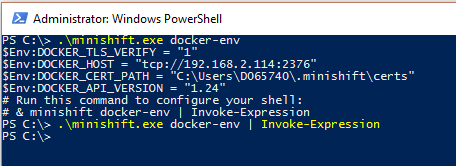

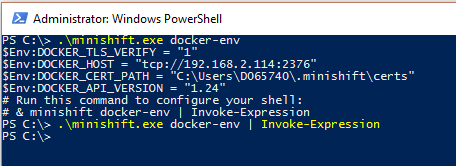

Run the following command to get the Docker environment of the Minishift VM and pass it to Invoke-Expression, so that the Docker client reuses the Docker daemon inside the VM:

PS C:\> & .\minishift.exe docker-env | Invoke-Expression

Now quickly repeat the same build and run steps against the Minishift Docker daemon and check the image.

OpenShift Origin provides an Integrated Container Registry that adds the ability to provision new image repositories on the fly. Whenever a new image is pushed to the integrated registry, the registry notifies OpenShift Origin about the new image, passing along all the information about it, such as the namespace, name, and image metadata.

Docker images must be tagged and pushed to the integrated registry so that they are available for deployment as an image stream in the web console.

The tag syntax is docker tag <imageid> <registryip:port>/<projectname>/<appname>

You can also combine this step with the build: docker build -t <registryip:port>/<projectname>/<appname> .

To log in to the integrated registry and push the image, you need a token from OpenShift Origin, which you can get with the OpenShift client command line (oc).

Run oc whoami -t to get the token, use it in the docker login command, and then push the image.
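The tag, login and push steps can also be scripted. The sketch below assembles the docker commands as argument lists and only executes them when called with --run, so you can inspect them first.

Several values are assumptions rather than details from the post:

- The registry address 172.30.1.1:5000. This is the default integrated-registry address in the Minishift versions I know of; check yours.
- The project name myproject, which is the usual id behind 'My Project'.
- The app name nodejs-docker-webapp.

The script also assumes your Docker client already points at the Minishift daemon via docker-env, since the internal registry address is normally only reachable from inside the VM. The token from oc whoami -t is passed to docker login on stdin with --password-stdin, which requires Docker 17.07 or later; older clients take it with -p instead.

```javascript
'use strict';
const { execFileSync } = require('child_process');

// Assumed values, not from the original post: adjust to your registry, project and app.
const REGISTRY = '172.30.1.1:5000';
const PROJECT = 'myproject';
const APP = 'nodejs-docker-webapp';

// Build <registryip:port>/<projectname>/<appname>:<tag>; Docker assumes :latest when no tag is given.
function imageRef(registry, project, app, tag = 'latest') {
  for (const [name, value] of Object.entries({ registry, project, app, tag })) {
    if (!value || /\s/.test(value)) throw new Error(`invalid ${name}: "${value}"`);
  }
  return `${registry}/${project}/${app}:${tag}`;
}

// The three registry steps as [command, args] pairs; args are passed without a shell.
function pushPlan(localImage, ref, user) {
  const registryHost = ref.split('/')[0];
  return [
    ['docker', ['tag', localImage, ref]],
    ['docker', ['login', '-u', user, '--password-stdin', registryHost]],
    ['docker', ['push', ref]],
  ];
}

// Usage: node push-image.js --run <local-image-id-or-name>
const runIndex = process.argv.indexOf('--run');
if (runIndex !== -1) {
  const localImage = process.argv[runIndex + 1] || APP;
  const ref = imageRef(REGISTRY, PROJECT, APP);
  const user = execFileSync('oc', ['whoami'], { encoding: 'utf8' }).trim();
  const token = execFileSync('oc', ['whoami', '-t'], { encoding: 'utf8' }).trim(); // session token
  for (const [cmd, args] of pushPlan(localImage, ref, user)) {
    console.log('>', cmd, args.join(' '));
    // The token goes to `docker login` on stdin rather than on the command line.
    execFileSync(cmd, args, {
      input: args[0] === 'login' ? token : '',
      stdio: ['pipe', 'inherit', 'inherit'],
    });
  }
}
```

Without --run the script only defines the helpers, which makes it easy to check the generated command lines before touching the registry.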

With the image pushed, let's deploy the application from the web console. You can also open the web console with this command:

PS C:\> .\minishift.exe console

In the web console, navigate to your project and click 'Add to Project' -> Deploy Image.

Under Image Stream Tag, select the project, app and version.

Leave all other properties unchanged and click Create.

Navigate to the overview page.

Next, create a default route to access the app by clicking the Create Route link.

Click Create. The route then appears on the overview page, and you can access the application through it.

You can scale your application up and down by increasing or decreasing the number of pods on the overview page. A pod is the basic building block of Kubernetes: the smallest and simplest unit in the Kubernetes object model that you create or deploy. A pod represents a running process on your cluster. When a connection is made to a service, OpenShift automatically routes it to one of the pods associated with that service.
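As a conceptual illustration only, here is a round-robin picker that spreads connections over the pods behind a service and shows how scaling changes the pool. This is not how OpenShift implements routing; its service proxy and router have their own balancing strategies. The pod names are made up.

```javascript
'use strict';

// Conceptual model: a service hands each new connection to the next pod in turn.
class RoundRobinService {
  constructor(pods) {
    this.pods = [...pods];
    this.next = 0;
  }

  // Scaling up or down replaces the set of pods the service can route to.
  scale(pods) {
    this.pods = [...pods];
    this.next = 0;
  }

  route() {
    if (this.pods.length === 0) throw new Error('no pods available');
    const pod = this.pods[this.next];
    this.next = (this.next + 1) % this.pods.length;
    return pod;
  }
}

const service = new RoundRobinService(['web-1-abcde']);
console.log([1, 2, 3].map(() => service.route()).join(', '));
// → web-1-abcde, web-1-abcde, web-1-abcde
service.scale(['web-1-abcde', 'web-1-fghij', 'web-1-klmno']);
console.log([1, 2, 3, 4].map(() => service.route()).join(', '));
// → web-1-abcde, web-1-fghij, web-1-klmno, web-1-abcde
```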

Let’s quickly recap what we did.


- We developed a simple Hello World Node.js application, then built, containerized and tested it locally using Docker for Windows.

- We built a local OpenShift cluster with Minishift and rebuilt the app image using the Minishift Docker daemon.

- We pushed the image to the integrated registry, deployed it from its image stream in the web console, and looked at the scale-up and scale-down options. You can also group two services together, depending on their dependencies.

You can also push your images to Docker Hub, or use the Google Cloud SDK to deploy them to Google Cloud or to any other cloud platform such as AWS or Azure.

As a developer, I never used to think about how DevOps works, how scaling happens, or how to keep maintenance and security efficient. With a microservices architecture, each microservice team is responsible for an entire business process. Technology is moving fast, and especially with SAP moving towards being a cloud company, we no longer talk about quarterly or monthly releases; we deliver services overnight or even hourly.

Feel free to share your comments and suggestions. I am still learning these concepts and technologies myself, and I am happy to learn together.

SAP Learning Class

1 -

SAP Learning Hub

1 -

SAP Odata

2 -

SAP on Azure

1 -

SAP PartnerEdge

1 -

sap partners

1 -

SAP Password Reset

1 -

SAP PO Migration

1 -

SAP Prepackaged Content

1 -

SAP Process Automation

2 -

SAP Process Integration

2 -

SAP Process Orchestration

1 -

SAP S4HANA

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Cloud for Finance

1 -

SAP S4HANA Cloud private edition

1 -

SAP Sandbox

1 -

SAP STMS

1 -

SAP successfactors

3 -

SAP SuccessFactors HXM Core

1 -

SAP Time

1 -

SAP TM

2 -

SAP Trading Partner Management

1 -

SAP UI5

1 -

SAP Upgrade

1 -

SAP Utilities

1 -

SAP-GUI

8 -

SAP_COM_0276

1 -

SAPBTP

1 -

SAPCPI

1 -

SAPEWM

1 -

sapmentors

1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

4 -

schedule

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

14 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

1 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

2 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Visual Studio Code

1 -

VSCode

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1
