SAP Hybris - Load IDOC to Hybris


former_member29 (Explorer)


03-22-2017 11:10 AM

This blog is a continuation of SAP Hybris - Customizing Data Hub.

Load IDOC to Hybris

- An IDoc is an SAP object that carries the data of a business transaction from one system to another in the form of an electronic message.

- IDoc is an acronym for Intermediate Document.

- The purpose of an IDoc is to transfer data or information from SAP to other systems and vice versa.

- Transfers from SAP to non-SAP systems are done via EDI (Electronic Data Interchange) subsystems, whereas transfers between two SAP systems use ALE (Application Link Enabling).

Step-by-step procedure.

Step 1: Make sure the customproduct extension from the previous blog has been loaded successfully.

Step 2: Create the XML file (spring.xml).

Note: The following sample IDoc is for illustration only; try it with your own IDoc.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<ZCRMXIF_PRODUCT_MATERIAL>
    <IDOC BEGIN="1">
        <EDI_DC40 SEGMENT="1">
            <IDOCTYP>ZCRMXIF_PRODUCT_MATERIAL</IDOCTYP>
            <MESTYP>CRMXIF_PRODUCT_MATERIAL_SAVE</MESTYP>
        </EDI_DC40>
        <E101COMXIF_PRODUCT_MATERIAL SEGMENT="1">
            <PRODUCT_ID>EOS-30D-1623432_V1</PRODUCT_ID>
        </E101COMXIF_PRODUCT_MATERIAL>
    </IDOC>
</ZCRMXIF_PRODUCT_MATERIAL>
```

Step 3:

Now add the custom raw data. Based on the IDoc, add custom raw items to "customproduct-raw-datahub-extension.xml", and also update "customproduct-raw-datahub-extension-spring.xml".

- customproduct-raw-datahub-extension.xml

```xml
<extension xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://www.hybris.com/schema/"
           xsi:schemaLocation="http://www.hybris.com/schema/ http://www.hybris.com/schema/datahub-metadata-schema-1.3.0.xsd"
           name="customproduct-raw">
    <dependencies>
        <!-- one <dependency> element per extension this extension depends on -->
        <dependency>
            <extension>customproduct-canonical</extension>
        </dependency>
        <dependency>
            <extension>saperpproduct-canonical</extension>
        </dependency>
    </dependencies>
    <rawItems>
        <item>
            <type>RawCustomProduct</type>
            <description>Raw representation of a sample raw item</description>
            <attributes>
                <attribute>
                    <name>E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID</name>
                </attribute>
            </attributes>
        </item>
    </rawItems>
    <canonicalItems>
        <item>
            <type>CanonicalCustomProduct</type>
            <attributes>
                <attribute>
                    <name>productId</name>
                    <transformations>
                        <transformation>
                            <rawSource>RawCustomProduct</rawSource>
                            <expression>E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID</expression>
                        </transformation>
                    </transformations>
                </attribute>
            </attributes>
        </item>
    </canonicalItems>
</extension>
```
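The raw attribute name `E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID` joins the IDoc segment name and the field name with a hyphen. As a rough illustration of that naming, the Python sketch below flattens the data segments of the sample IDoc into `SEGMENT-FIELD` keys. It is an assumption-based model only: `flatten_idoc` is a hypothetical helper, not part of the SAP IDoc integration, and it handles just one level of fields per segment, with later occurrences of a key overwriting earlier ones.

```python
import xml.etree.ElementTree as ET

SAMPLE_IDOC = """<?xml version="1.0" encoding="UTF-8"?>
<ZCRMXIF_PRODUCT_MATERIAL>
  <IDOC BEGIN="1">
    <EDI_DC40 SEGMENT="1">
      <IDOCTYP>ZCRMXIF_PRODUCT_MATERIAL</IDOCTYP>
      <MESTYP>CRMXIF_PRODUCT_MATERIAL_SAVE</MESTYP>
    </EDI_DC40>
    <E101COMXIF_PRODUCT_MATERIAL SEGMENT="1">
      <PRODUCT_ID>EOS-30D-1623432_V1</PRODUCT_ID>
    </E101COMXIF_PRODUCT_MATERIAL>
  </IDOC>
</ZCRMXIF_PRODUCT_MATERIAL>"""


def flatten_idoc(idoc_xml: str) -> dict[str, str]:
    """Hypothetical helper: map each data-segment field to a 'SEGMENT-FIELD' key."""
    # Parse bytes so the UTF-8 encoding declaration is honoured by the parser
    root = ET.fromstring(idoc_xml.encode("utf-8"))
    fields: dict[str, str] = {}
    for idoc in root.findall("IDOC"):
        for segment in idoc:
            if segment.tag == "EDI_DC40":
                continue  # control record: routing metadata, not business data
            for field in segment:
                fields[f"{segment.tag}-{field.tag}"] = (field.text or "").strip()
    return fields


if __name__ == "__main__":
    print(flatten_idoc(SAMPLE_IDOC))
```

For the sample IDoc this yields a single key, `E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID`, which matches the raw attribute declared above.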

- customproduct-raw-datahub-extension-spring.xml

```xml
<beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:context="http://www.springframework.org/schema/context" xmlns:int="http://www.springframework.org/schema/integration"
       xmlns:int-xml="http://www.springframework.org/schema/integration/xml"
       xmlns:util="http://www.springframework.org/schema/util"
       xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
           http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd
           http://www.springframework.org/schema/integration/xml http://www.springframework.org/schema/integration/xml/spring-integration-xml.xsd
           http://www.springframework.org/schema/integration http://www.springframework.org/schema/integration/spring-integration.xsd
           http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util.xsd">

    <!-- ========================== -->
    <!-- Spring-Integration Content -->
    <!-- ========================== -->

    <int:channel id="idocXmlInboundChannel">
        <int:interceptors>
            <int:wire-tap channel="logger" />
        </int:interceptors>
    </int:channel>

    <int:logging-channel-adapter log-full-message="true" id="logger" level="DEBUG" />

    <bean id="idocInboundService" class="com.hybris.datahub.sapidocintegration.spring.HttpInboundService">
        <property name="idocXmlInboundChannel" ref="idocXmlInboundChannel" />
    </bean>

    <!-- Data Hub input channel for raw data -->
    <int:channel id="rawFragmentDataInputChannel" />

    <!-- Maps received IDOCs by value of header attribute: "IDOCTYP" to corresponding mapping service -->
    <int:header-value-router input-channel="idocXmlInboundChannel" header-name="IDOCTYP">
        <int:mapping value="ZCRMXIF_PRODUCT_MATERIAL" channel="ZCRMMATMAS" />
    </int:header-value-router>

    <!-- sap crm product -->
    <int:service-activator input-channel="ZCRMMATMAS" output-channel="rawFragmentDataInputChannel" ref="customproductCRMMappingService" method="map" />

    <!-- Dummy implementations of mapping services implemented elsewhere -->
    <bean id="customproductCRMMappingService" class="com.hybris.datahub.sapidocintegration.IDOCMappingService">
        <property name="rawFragmentDataExtensionSource" value="customproduct" />
        <property name="rawFragmentDataType" value="RawCustomProduct" />
    </bean>

</beans>
```
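The header-value router and the service activator above dispatch each IDoc by its IDOCTYP header to the mapping service that produces `RawCustomProduct` fragments. The Python sketch below models that dispatch with plain dictionaries, assuming the header value is taken from the IDOCTYP field of the EDI_DC40 control record. `ROUTES`, `MAPPING_SERVICES`, `read_idoctyp`, and `route` are hypothetical names for illustration; in the Data Hub the routing is done by Spring Integration, not by code like this.

```python
import xml.etree.ElementTree as ET

# Mirrors <int:mapping value="..." channel="..."/> in the header-value-router
ROUTES = {"ZCRMXIF_PRODUCT_MATERIAL": "ZCRMMATMAS"}

# Mirrors the properties of the mapping-service bean wired to each channel
MAPPING_SERVICES = {
    "ZCRMMATMAS": {
        "rawFragmentDataExtensionSource": "customproduct",
        "rawFragmentDataType": "RawCustomProduct",
    },
}


def read_idoctyp(idoc_xml: str) -> str:
    """Read IDOCTYP from the EDI_DC40 control record (assumed header source)."""
    root = ET.fromstring(idoc_xml.encode("utf-8"))
    idoctyp = root.findtext("IDOC/EDI_DC40/IDOCTYP")
    if idoctyp is None:
        raise ValueError("control record EDI_DC40 has no IDOCTYP")
    return idoctyp.strip()


def route(idoc_xml: str) -> tuple[str, dict[str, str]]:
    """Return the target channel and mapping-service settings for an IDoc."""
    idoctyp = read_idoctyp(idoc_xml)
    try:
        channel = ROUTES[idoctyp]
    except KeyError:
        # An IDoc type without a <int:mapping> entry has nowhere to go
        raise LookupError(f"no route configured for IDOCTYP {idoctyp!r}") from None
    return channel, MAPPING_SERVICES[channel]
```

The design point the sketch highlights: adding support for another IDoc type means adding one router mapping and one mapping-service bean, without touching the inbound service.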

Step 4:

Now add custom canonical data to "customproduct-canonical-datahub-extension.xml"

- customproduct-canonical-datahub-extension.xml

```xml
<extension xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://www.hybris.com/schema/"
           xsi:schemaLocation="http://www.hybris.com/schema/ http://www.hybris.com/schema/datahub-metadata-schema-1.3.0.xsd"
           name="customproduct-canonical">
    <canonicalItems>
        <item>
            <type>CanonicalCustomProduct</type>
            <description>Canonical representation of sample item</description>
            <status>ACTIVE</status>
            <attributes>
                <attribute>
                    <name>productId</name>
                    <model>
                        <localizable>false</localizable>
                        <collection>false</collection>
                        <type>String</type>
                        <primaryKey>true</primaryKey>
                    </model>
                </attribute>
            </attributes>
        </item>
    </canonicalItems>
</extension>
```

Step 5:

Now add custom target data to "customproduct-target-datahub-extension.xml"

- customproduct-target-datahub-extension.xml

```xml
<extension xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://www.hybris.com/schema/"
           xsi:schemaLocation="http://www.hybris.com/schema/ http://www.hybris.com/schema/datahub-metadata-schema-1.3.0.xsd"
           name="customproduct-target">
    <dependencies>
        <dependency>
            <extension>customproduct-canonical</extension>
        </dependency>
    </dependencies>
    <targetSystems>
        <targetSystem>
            <name>HybrisCore</name>
            <type>HybrisCore</type>
            <exportURL>${datahub.extension.exportURL}</exportURL>
            <userName>${datahub.extension.username}</userName>
            <password>${datahub.extension.password}</password>
            <exportCodes>
            </exportCodes>
            <targetItems>
                <item>
                    <type>TargetCustomProduct</type>
                    <exportCode>Product</exportCode>
                    <description>Hybris Platform representation of Product</description>
                    <updatable>true</updatable>
                    <canonicalItemSource>CanonicalCustomProduct</canonicalItemSource>
                    <status>ACTIVE</status>
                    <attributes>
                        <attribute>
                            <name>identifier</name>
                            <localizable>false</localizable>
                            <collection>false</collection>
                            <transformationExpression>productId</transformationExpression>
                            <exportCode>code[unique=true]</exportCode>
                            <mandatoryInHeader>true</mandatoryInHeader>
                        </attribute>
                    </attributes>
                </item>
            </targetItems>
        </targetSystem>
    </targetSystems>
</extension>
```

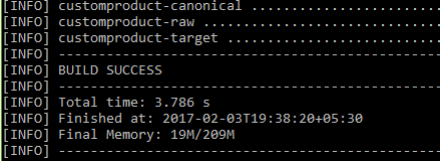

Step 6: Run the command `mvn clean install`.

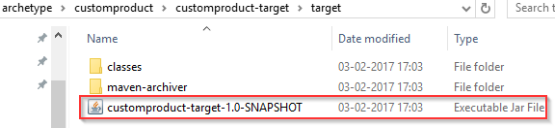

Step 7: Go to the following folder:

`<YOURPATH>\datahub6.2\archetype\customproduct\customproduct-canonical\target`

Step 8: Copy the highlighted jar file to the following folder:

`<YOURPATH>\datahub6.2`

Follow the same procedure for the raw and target extensions as well.

Step 9: Go to `<YOURPATH>\datahub6.2\archetype\customproduct\customproduct-raw\target` and copy the jar file into the crm folder.

Step 10: Go to `<YOURPATH>\datahub6.2\archetype\customproduct\customproduct-target\target` and copy the jar file into the crm folder.

Note: If you make any changes to the customproduct raw, canonical, or target extensions, repeat Step 5 to Step 10 and restart the Tomcat server.

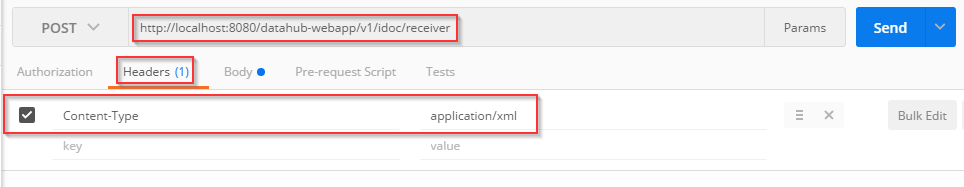
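Steps 7 to 10 can also be scripted. The Python sketch below copies every jar built in each module's target folder into a destination folder that you pass in (the Data Hub folder or the crm folder, depending on your setup). `copy_extension_jars` is a hypothetical helper; it assumes the module folder names used in this blog and that `mvn clean install` has already run.

```python
import shutil
from pathlib import Path

# Module folders under <YOURPATH>\datahub6.2\archetype\customproduct
MODULES = ("customproduct-canonical", "customproduct-raw", "customproduct-target")


def copy_extension_jars(archetype_dir: Path, destination: Path) -> list[Path]:
    """Hypothetical helper: copy each module's built jar(s) into destination."""
    destination.mkdir(parents=True, exist_ok=True)
    copied: list[Path] = []
    for module in MODULES:
        jars = sorted((archetype_dir / module / "target").glob("*.jar"))
        if not jars:
            raise FileNotFoundError(f"no jar in {module}/target; run mvn clean install first")
        for jar in jars:
            # copy2 keeps file timestamps and returns the destination path
            copied.append(Path(shutil.copy2(jar, destination)))
    return copied
```

If Maven also produces extra artifacts such as sources jars in the target folders, narrow the glob pattern so only the extension jars are copied.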

Step 11: In Chrome, open the Postman app page in the Chrome Web Store: https://chrome.google.com/webstore/detail/postman/fhbjgbiflinjbdggehcddcbncdddomop?hl=en

Step 12: Click the Launch App button.

Step 13: Open the Postman app and enter the following request details:

- Method: POST

- Headers: Content-Type: application/xml

- URL: http://localhost:8080/datahub-webapp/v1/idoc/receiver

Step 14: Go to Body > raw, paste the IDoc, and click Send.

- A 200 response means the Data Hub received the IDoc successfully.

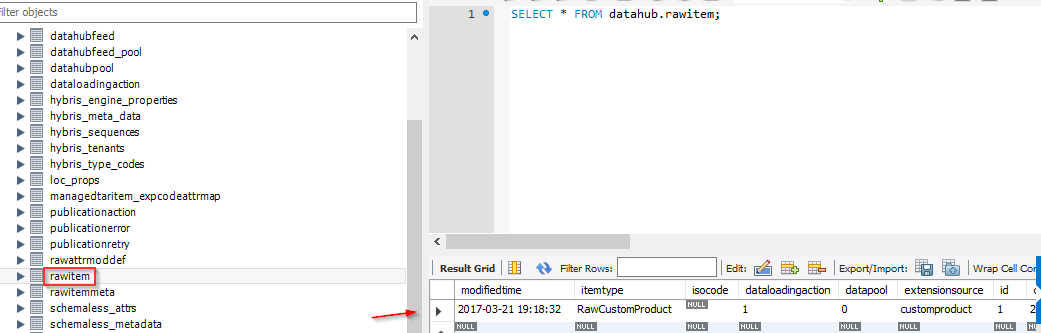
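If you prefer a script to Postman, the same request can be built with Python's standard `urllib.request`. This is a minimal sketch that mirrors the Postman settings above (POST, `Content-Type: application/xml`, the `/idoc/receiver` URL); `build_idoc_request` and `send_idoc` are hypothetical names, and it assumes the endpoint needs no extra authentication, as in the Postman steps.

```python
import urllib.request

DATAHUB_BASE_URL = "http://localhost:8080/datahub-webapp/v1"


def build_idoc_request(idoc_xml: str, base_url: str = DATAHUB_BASE_URL) -> urllib.request.Request:
    """Build the same request as the Postman steps: POST the IDoc XML to the receiver."""
    return urllib.request.Request(
        url=f"{base_url}/idoc/receiver",
        data=idoc_xml.encode("utf-8"),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )


def send_idoc(idoc_xml: str) -> int:
    """Send the IDoc and return the HTTP status; urlopen raises HTTPError on 4xx/5xx."""
    with urllib.request.urlopen(build_idoc_request(idoc_xml), timeout=30) as response:
        return response.status
```

For example, reading the sample IDoc from a file and passing its contents to `send_idoc` should return 200 when the Data Hub accepts it, matching the Postman result.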

Step 15: Open MySQL Workbench and check the data in the "rawitem" table.

DATA COMPOSITION

Data composition means the transfer of data from raw items to canonical items.
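As a simplified model of composition in this example: each `RawCustomProduct` value of `E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID` becomes `productId` (the transformation expression in the raw extension), and because `productId` is the canonical primary key, raw items with the same value end up in a single `CanonicalCustomProduct`. The `compose` function below is a hypothetical illustration of that grouping only, not Data Hub's actual composition engine.

```python
RAW_ATTRIBUTE = "E101COMXIF_PRODUCT_MATERIAL-PRODUCT_ID"


def compose(raw_items: list[dict[str, str]]) -> list[dict[str, str]]:
    """Hypothetical model: group raw items into canonical items keyed by productId."""
    canonical: dict[str, dict[str, str]] = {}
    for raw in raw_items:
        # Transformation expression from the raw extension: productId <- RAW_ATTRIBUTE
        product_id = raw.get(RAW_ATTRIBUTE, "").strip()
        if not product_id:
            continue  # without a primary key value the item cannot be composed
        # productId is the primary key, so duplicates collapse into one item
        canonical.setdefault(product_id, {"productId": product_id})
    return list(canonical.values())
```

Sending the same IDoc twice would therefore still produce one canonical product in this model.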

Step 16: Send a POST request to the following URL:

http://localhost:8080/datahub-webapp/v1/pools/GLOBAL/compositions
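The composition trigger can also be sent from Python. This sketch assumes, as the step above implies, that the endpoint accepts a POST with an empty body for the GLOBAL pool; the `Content-Type: application/json` header is an assumption rather than something stated in this blog, and `build_composition_request` is a hypothetical name.

```python
import urllib.request

DATAHUB_BASE_URL = "http://localhost:8080/datahub-webapp/v1"


def build_composition_request(pool: str = "GLOBAL",
                              base_url: str = DATAHUB_BASE_URL) -> urllib.request.Request:
    """Build a POST that triggers composition for the given data pool."""
    return urllib.request.Request(
        url=f"{base_url}/pools/{pool}/compositions",
        data=b"",  # empty body; urllib then sends Content-Length: 0
        headers={"Content-Type": "application/json"},  # assumed header
        method="POST",
    )
```

Pass the request to `urllib.request.urlopen` to send it, then check the "canonicalitem" table as in the next step.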

Step 17: Open MySQL Workbench and check the data in the "canonicalitem" table.

DATA PUBLICATION

Data publication means the transfer of data from canonical items to target items.
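To make the target mapping more concrete: `TargetCustomProduct` has the export code `Product`, and its `identifier` attribute takes `productId` and exports it as `code[unique=true]`. Assuming the HybrisCore target turns this into an ImpEx `INSERT_UPDATE` block (a simplification; the real payload sent to the datahubadapter may contain more headers and columns), the hypothetical `to_impex` helper below shows the general shape of the result.

```python
def to_impex(canonical_items: list[dict[str, str]]) -> str:
    """Hypothetical sketch: render canonical items as an ImpEx block for Product."""
    # Header from the target item's exportCode and the attribute's exportCode
    lines = ["INSERT_UPDATE Product;code[unique=true]"]
    for item in canonical_items:
        # ImpEx value lines leave the first column empty, hence the leading ';'.
        # Values containing ';' would need quoting, which this sketch omits.
        lines.append(f";{item['productId']}")
    return "\n".join(lines)
```

For the sample IDoc the block would contain one value line, `;EOS-30D-1623432_V1`.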

Step 18: Send a POST request to the following URL:

http://localhost:8080/datahub-webapp/v1/pools/GLOBAL/publications
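A scripted version of the publication trigger might look like the sketch below. The JSON body shape (a `targetSystemPublications` list naming the target system) is an assumption to verify against your Data Hub version's REST documentation; `HybrisCore` is the target system name from the target extension above, and `build_publication_request` is a hypothetical name.

```python
import json
import urllib.request

DATAHUB_BASE_URL = "http://localhost:8080/datahub-webapp/v1"


def build_publication_request(target_system: str = "HybrisCore", pool: str = "GLOBAL",
                              base_url: str = DATAHUB_BASE_URL) -> urllib.request.Request:
    """Build a POST that publishes the pool's canonical items to a target system."""
    # Assumed body shape: one entry per target system to publish to
    body = {"targetSystemPublications": [{"targetSystemName": target_system}]}
    return urllib.request.Request(
        url=f"{base_url}/pools/{pool}/publications",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Send it with `urllib.request.urlopen`, then check the "targetitem" table as in the next step.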

Step 19: Open MySQL Workbench and check the data in the "targetitem" table.

Step 20: Start the Hybris server with the recipe "sap_aom_som_b2b_b2c".

If datahubadapter is not listed in the localextensions.xml file in the config folder, add it manually:

`<extension name="datahubadapter" />`

Step 21: In the HMC, go to Catalog > Products, enter the product ID, and click Search.

- The product sent via the IDoc is now visible in the HMC.

Thanks for reading 🙂
