- How safe is cycling in London? Spatial analysis wi...

If you've ever visited London you'll no doubt have seen those courier cyclists weaving in and out of traffic. You might have seen some of them hurtling through red lights, mounting the pavement (or sidewalk for American readers) and frightening pedestrians. Cycling in general is being encouraged by the Mayor of London. Being a risk-averse scaredy-cat myself, I got to wondering how safe it is to cycle in London. It certainly doesn't look very safe, so I set about attempting to quantify how safe, or otherwise, it is using HANA's spatial capabilities in SP8.

The UK government now publish road traffic accident data in a very convenient form. They provide spreadsheets containing 1.4 million accidents spanning 13 years. I used some of this data previously to allow accidents near your location to be viewed as heatmaps on your mobile phone. Back then the accident data needed a license and additional work was necessary to get locations, so the data is much easier to use now.

This article will show you how to get the necessary accident data, tidy it up using Linux tools, then perform some spatial analysis using SQL, all in an attempt to measure how safe cycling is in London.

For the impatient, I can reveal that the data shows no increase in average accident severity if you're involved in a cycling accident in London vs the rest of the UK. Good news for cyclists, then. Perhaps this is because average speeds in the capital are lower, or perhaps my amateur statistics analysis is flawed. But I'm getting ahead of myself. Let's take this step by step:

1) Define the question we want to answer

2) Source the Data and Understand the Data Model

3) Do the Extract, Transform and Load (ETL) into HANA

4) Analyse Data using HANA SP8 Spatial capabilities

1) Define the question we want to answer

Ideally we'd like to know "how safe is cycling in London?". A reasonable way to answer that would be to estimate the probability of a cycling journey ending in an accident. That would mean knowing how many journeys there are, including those that were incident free, and more about their nature (distance, weather, lighting). Data about accident-free journeys is not so easy to get. Since we're not working to anyone's spec, let's redefine the question that we will answer to suit the data available. How about:

If you cycle in London and are in an accident, is it likely to be more serious than in the rest of the UK?

The above is much easier to answer with the available data.

2) Source the Data and Understand the Data Model

The source data is available here: http://data.gov.uk/dataset/road-accidents-safety-data. The data model is very simple, as shown below:

The tables of interest are Accidents and Vehicles. The Accidents table holds the worst-case severity for an accident and its location. The Vehicles table holds all vehicles involved, and the field Vehicle_Type = 1 identifies cycles. In fact, that is all that we need. This is enough to let us filter on cycles only and average the severity over "in London" and "not in London" buckets based on the accident location.

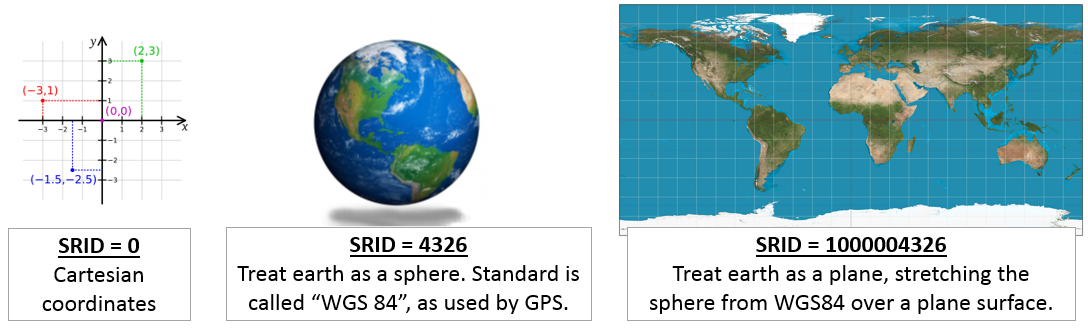
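The filter-and-average idea described above can be sketched in a few lines of Python. This is only an illustration of the logic, not the HANA implementation used later: the rows, the field names (`accref`, `severity`, `in_london`, `vehtype`) and the non-cycle code 9 are made up for the example, and the `in_london` flag stands in for the spatial test.

```python
from statistics import mean

# Hypothetical miniature rows; the values are invented for illustration.
accidents = [
    {"accref": "A1", "severity": 3, "in_london": True},
    {"accref": "A2", "severity": 2, "in_london": False},
    {"accref": "A3", "severity": 3, "in_london": False},
    {"accref": "A4", "severity": 1, "in_london": True},
]
vehicles = [
    {"accref": "A1", "vehtype": 1},  # 1 = cycle, as in the source data
    {"accref": "A1", "vehtype": 9},  # 9 = placeholder for any non-cycle vehicle
    {"accref": "A2", "vehtype": 1},
    {"accref": "A3", "vehtype": 1},
    {"accref": "A4", "vehtype": 9},  # no cycle involved, so A4 is ignored
]


def mean_cycle_severity(accidents, vehicles):
    """Average severity of accidents involving a cycle, per location bucket."""
    # Accident references that have at least one cycle among their vehicles.
    cycle_refs = {v["accref"] for v in vehicles if v["vehtype"] == 1}
    buckets = {"in London": [], "not in London": []}
    for acc in accidents:
        if acc["accref"] in cycle_refs:
            key = "in London" if acc["in_london"] else "not in London"
            buckets[key].append(acc["severity"])
    # Skip empty buckets so mean() is never called on an empty list.
    return {key: mean(vals) for key, vals in buckets.items() if vals}


result = mean_cycle_severity(accidents, vehicles)
```

Note that this sketch counts each accident once, even if several cycles were involved.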

The last thing to consider here is what sort of spatial model we want to use in HANA. HANA offers 3 spatial reference systems (SRS) in SP8, each identified by a Spatial Reference ID (SRID):

Most of the articles you see use SRID 4326 (aka the "WGS84" system), which is the system used by GPS and which models the earth as round rather than flat. However, in HANA SP8, SRID 4326 does not allow tests of points being contained by shapes. So in this article we're going to use SRID 1000004326. This effectively takes the round earth from SRID 4326 and stretches it out onto a flat planar surface. Distances are distorted (look at Antarctica in the south of the above diagram) but tests of "does A contain B" are possible.
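To see why a flat surface makes "does A contain B" straightforward, here is a minimal ray-casting sketch in Python. It is not how HANA implements ST_Contains; it is a textbook planar point-in-polygon test, and the function name and the unit-square example are assumptions made for illustration.

```python
def polygon_contains(polygon, point):
    """Planar ray-casting test.

    polygon: list of (x, y) vertices in order (closing vertex not repeated).
    point:   (x, y) tuple.
    Returns True if the point lies inside the polygon.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            # Count crossings to the right of the point; odd means inside.
            if x < x_cross:
                inside = not inside
    return inside


unit_square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
centre_inside = polygon_contains(unit_square, (0.5, 0.5))
far_outside = polygon_contains(unit_square, (2.0, 0.5))
```

On a round-earth model the "edges" are arcs rather than straight lines, so this simple crossing count no longer applies directly.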

Suitable HANA tables to hold the data can be found in this GitHub repository, see the RoadF.hdbdd file.

Ok, now we have understood the data model, we're ready to get some data into the system.

3) Do the Extract, Transform and Load (ETL) into HANA

The data is supplied as three tables, one spreadsheet per table. The data will make a journey through process steps a) to e) as shown below:

a) Use PSCP to Upload Files

First download the files from http://data.gov.uk/dataset/road-accidents-safety-data to your local PC. Next we need to get the files onto the HANA box. One method for this that I'd not seen till recently is a tool called PSCP. The HANA system for this demo was a CAL instance, and if you use a CAL instance then you may already use PuTTY to connect to the backend Linux host. When you install PuTTY on a Windows PC you also get a secure copy (SCP) client called PSCP. PSCP can read configurations you've set up in PuTTY, so it is quite convenient for copying files.

Let's use PSCP from a Windows command prompt to list some files on the Linux host. Run PSCP like this:

C:\Program Files (x86)\PuTTY>pscp -ls "HANAonBW":/usr/sap/HDB/home/

In the above, -ls is the PSCP option that lists files on the remote host, "HANAonBW" is a saved PuTTY config that lets us log in, and /usr/sap/HDB/home/ is the directory on the Linux box. The PuTTY configs are those you see under "Saved Sessions" in PuTTY here:

Now we are familiar with PSCP, it is easy to do the file transfer. The syntax is like this: pscp <source file from Windows> <PuTTY config>:<target directory on Linux>:

C:\Program Files (x86)\PuTTY>pscp c:\temp\RTA\AccidentsF.csv "HANAonBW":/usr/sap/HDB/home/

b) Use AWK to convert date format

The data provided contains a date in a format that is not compatible with HANA. We need to change the format of the date from 14/01/2005 to the HANA format 2005-01-14. To do this, we're going to use a tool that comes with Linux called AWK. We use PuTTY to connect to the Linux backend, then run this series of Linux commands:

This runs pretty quickly, around 8 seconds to convert 1.4 million rows. Taking each line in turn:

# line count of source file for checking

wc -l < AccidentsF.csv

# change field 10 to be a HANA-style date

awk -F"," '{OFS=","; $10=substr($10,7,4)"-"substr($10,4,2)"-"substr($10,1,2); print $0}' AccidentsF.csv > AccidentsFT.csv

# line count of target file for checking (this returned 1494276)

wc -l < AccidentsFT.csv

The AWK command is quite long and deserves a bit more elaboration:

-F"," - to use a field separator of comma

{ - begin actions

OFS=","; - Output Field Separator, so that the output fields are also separated by a ,

$10= - field number 10 will equal...

substr($10,7,4)"-"substr($10,4,2)"-"substr($10,1,2); - some strings manipulated to make new date

print $0; - to print out the whole line to the target file.

} - end actions

AccidentsF.csv - the input file

> - is sent to

AccidentsFT.csv - the T transformed file

The result of the above is a new file called "AccidentsFT.csv", with the date formatted as 2005-01-14.
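For readers without AWK to hand, the same transformation can be sketched in Python. This is an equivalent under assumptions, not the method used for the article: the function names are made up, and Python's csv module parses quoted fields, whereas the AWK one-liner splits on every comma.

```python
import csv
import io


def to_iso_date(uk_date):
    """Turn 'dd/mm/yyyy' into 'yyyy-mm-dd', mirroring the three substr() calls."""
    return uk_date[6:10] + "-" + uk_date[3:5] + "-" + uk_date[0:2]


def convert_rows(src, dst, date_field=10):
    """Rewrite the date in the given 1-based field of every CSV row.

    src and dst are open text file objects; returns the number of rows written.
    """
    writer = csv.writer(dst, lineterminator="\n")
    count = 0
    for row in csv.reader(src):
        row[date_field - 1] = to_iso_date(row[date_field - 1])
        writer.writerow(row)
        count += 1
    return count


# Tiny in-memory demonstration with one made-up row of 11 fields.
sample = io.StringIO("r1,b,c,d,e,f,g,h,i,14/01/2005,k\n")
out = io.StringIO()
rows_written = convert_rows(sample, out)
```

Returning the row count plays the same role as the two wc -l checks: the input and output should have the same number of lines.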

c) Upload via CTL files

This is covered already on SCN, and so following the same principles: use PuTTY, move to directory /usr/sap/HDB/home/ and type:

cat > roadaccF.ctl

import data

into table "ROAD"."roadsafety.data::RoadF.AccF"

from '/usr/sap/HDB/home/AccidentsFT.csv'

record delimited by '\n'

field delimited by ','

optionally enclosed by '"'

error log '/usr/sap/HDB/home/AccidentFTErr.txt'

Use [CTRL+D] to end creating the CTL file. Then upload the data using SQL from inside HANA Studio (the necessary target table definitions are in GitHub here):

IMPORT FROM '/usr/sap/HDB/home/roadaccF.ctl';

d) Data Cleansing

Now we need a tiny bit of data cleansing. The data contains a few records without location data, and these need to be removed:

delete from "ROAD"."roadsafety.data::RoadF.AccFT" where LAT is null and LON is null;

e) Add Spatial Field & Fill it

We cannot yet add the spatial field to our Accidents table in the .hdbdd file in HANA Studio; instead we have to add the field manually with SQL. To do this I followed the article by rafael.babar and copied the data from the existing table to a new table, populating the spatial point in the process. The SQL to do this is in GitHub.

4) Analyse Data using HANA SP8 Spatial capabilities

I spent a lot of time trying to get HANA models and SQLScript calculation views to work, but ran into various errors. The recommendation seems to be to wait for SP9 for fuller integration with HANA models. Therefore I used pure SQL to do the analysis of the data.

Before doing any SQL, we need to define what "in London" and "outside London" means. For this I followed jon-paul.boyd's excellent blog and used Google Earth to draw a polygon around the area I was interested in:

That polygon is then exported as a series of coordinates which is used in the SQL statement below. Finally we're ready for some analysis! This SQL returns the mean severity and variance of all accidents in London that involved a bicycle:

-- Just bicycles in London

select

AVG (T0."SEVERITY") "Avg Severity",

VAR (T0."SEVERITY") "Avg Severity Variance",

SUM (T0."VEHCOUNT"),

SUM (T0."CASCOUNT")

from

ROAD."roadsafety.data::RoadF.AccFT" T0

left outer join "ROAD"."roadsafety.data::RoadF.VehF" T1

on T0."ACCREF" = T1."ACCREF"

where T1."VEHTYPE" = 1 --vehtype 1 is bicycle

and NEW ST_Polygon('Polygon((

51.69021545178133 -0.1000795593465265,

51.68262625218747 -0.1640894678953375,

51.62673844314873 -0.5003652550731252,

51.4687978441449 -0.5003020713080952,

51.37537638345922 -0.2604447782463681,

51.29248664506417 -0.1217913673590465,

51.3298782058117 -0.02055237147410183,

51.32142023464126 0.0993682688423303,

51.34618151800474 0.1346959279977478,

51.46491093248794 0.2133695972971839,

51.54192930978628 0.3296565877570212,

51.62542509952219 0.228648947683745,

51.60811732442631 0.0851277551187013,

51.67901853300752 -0.01341248134237749,

51.69021545178133 -0.1000795593465265

))').ST_Contains("LATLON") > 0; -- use = 0 for outside London

The results are, for cycling accidents inside and outside London:

Results

| Location | Severity Mean | Severity Variance |
|---|---|---|
| Inside London | 2.86076 | 0.12851 |
| Outside London | 2.81853 | 0.16485 |

Remember that lower severity is more serious. Severity is measured as an integer where 1 = fatal, 2 = serious and 3 = minor. The results suggest it is better to be involved in an accident inside London because the average severity value is higher (less serious). Perhaps this is because car speeds are slower.

The next question is: are the above results statistically significant? Could the difference be by chance alone, or does it reveal a pattern in the underlying data? This is beyond my (very) amateur statistics knowledge, and although there are plenty of samples online about "comparing the mean of two populations", they all focus on taking samples from large populations where the variance is not known, whereas here we know every data point. If anyone with statistics knowledge reads this, I'd be interested to know how to go about comparing these means.
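As one possible starting point, and not a definitive answer, Welch's t statistic is a commonly used way to compare two means when the variances differ. The sketch below assumes that approach is acceptable here. The means and variances are the ones reported above, but the accident counts per bucket are not given in this article, so `N_LONDON` and `N_ELSEWHERE` are hypothetical placeholders that would need replacing with the real row counts.

```python
import math


def welch_t(mean1, var1, n1, mean2, var2, n2):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    se1 = var1 / n1  # squared standard error of the first mean
    se2 = var2 / n2  # squared standard error of the second mean
    t = (mean1 - mean2) / math.sqrt(se1 + se2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    dof = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, dof


# HYPOTHETICAL counts: replace with the real number of rows in each bucket.
N_LONDON = 1000
N_ELSEWHERE = 1000

t_stat, dof = welch_t(2.86076, 0.12851, N_LONDON,
                      2.81853, 0.16485, N_ELSEWHERE)
```

The larger the absolute value of the statistic for the real counts, the less plausible it is that the difference arose by chance. Because severity is a three-level category rather than a true measurement, a test on the counts per severity level may be a better fit than a comparison of means.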
