- Implementation Guide of Running SAP System on SLES...

Recently, I studied how to build an SAP system with high availability (HA). Before starting this project, I knew next to nothing about the topic. All I knew was that such a system is robust: when the main machine fails, another machine takes over the SAP instance without end users noticing.

The road to building this system was tough, and there are not many useful, specific materials online, so I wrote this quick guide for beginners once I had finished my HA implementation. I hope it helps anyone who finds this blog : )

NOTE: The main purpose of this guide is to help beginners understand basic SAP high availability concepts. Many of the implementation details are not rigorous. A complete HA solution for SAP systems would be more complicated and more robust, depending on customer requirements.

Now let's begin.

System Architecture

switchover cluster


Basically, the ASCS instance should be running in a switchover cluster infrastructure.

Implement Steps

Build up cluster

First of all, build a switchover cluster.

The example here runs on SLES SP3.

Start Software Management in YaST2 and check whether the High Availability Extension is installed. If not, install it.

To build a cluster, make sure each host in the cluster has two network adapters (one for in-cluster communication and one for public communication) and that the hosts can ping each other.

Maintain /etc/hosts on all hosts.
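A quick way to confirm that /etc/hosts is complete on a node is to look up every name the cluster relies on. The sketch below is only an illustration: missing_hosts is a hypothetical helper, and the names host1, host2 and ascsha1 are the ones used in this guide.

```shell
# Print every expected host name that has no entry in a hosts file.
# Usage: missing_hosts <hosts_file> <name>...
missing_hosts() (
    file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name among the fields after the IP.
        if ! sed 's/#.*//' "$file" | awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit found ? 0 : 1 }'; then
            echo "$name"
        fi
    done
)
```

Running missing_hosts /etc/hosts host1 host2 ascsha1 on each node should print nothing when all entries are in place.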

Build the cluster as described in the SUSE Linux Enterprise High Availability Extension High Availability Guide.

Choose 10.68.228.0 as the Bind Network Address in this scenario, because it is the subnet I use for cluster unicast.
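The Bind Network Address is the network address of the cluster interface, that is, the interface IP ANDed with its netmask. The sketch below shows the arithmetic. The helper name network_address, the host address 10.68.228.17 and the netmask 255.255.255.0 are assumptions for illustration only; use the values of your own cluster interface.

```shell
# Print the network address for an IPv4 address and a netmask (dotted quads).
# Usage: network_address <ip> <netmask>
network_address() (
    IFS=.
    # Split both arguments on dots: $1..$4 are the IP octets, $5..$8 the mask octets.
    set -- $1 $2
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
)
```

For example, network_address 10.68.228.17 255.255.255.0 prints 10.68.228.0.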

Manually add the two hosts.

Csync2 is used to replicate configuration files to all nodes automatically.

Following the SLES HA guide, use the YaST cluster module to configure the user-space conntrackd. It needs a dedicated network interface that is not used for other communication channels.

In our scenario, the interface on both host1 and host2 is set to eth0, because eth0 on host1 (IP 10.68.231.30) and eth0 on host2 (IP 10.68.231.127) are in the same subnet.

Start openais to bring the cluster online.

To check whether the cluster is online, use the Pacemaker GUI.

To connect to the cluster, select Connection > Login. By default, the Server field shows the localhost's IP address and the User Name field shows hacluster. Enter the user's password to continue.

If you build the cluster using Automatic Cluster Setup (sleha-bootstrap), the default password is shown in the terminal. In this case, because we built the cluster manually, set the password with the command "passwd hacluster" in a terminal.

Now you can see that the cluster is online and contains two nodes. If you see only one node in the cluster, redo the cluster-build steps and recheck the parameters you entered earlier.
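If you prefer the terminal to the GUI, the same check can be sketched with the one-shot output of crm_mon. The helper count_online_nodes is hypothetical, and it assumes the output contains a line of the form "Online: [ host1 host2 ]"; the exact layout may differ between Pacemaker versions.

```shell
# Count the node names listed on the "Online: [ ... ]" line(s) of
# `crm_mon -1` output read from stdin.
count_online_nodes() {
    awk '/Online: \[/ {
             sub(/.*Online: \[ */, "")   # drop everything up to the opening bracket
             sub(/ *\].*/, "")           # drop the closing bracket and the rest
             n += split($0, names, / +/) # remaining fields are node names
         }
         END { print n + 0 }'
}
```

On a cluster node, crm_mon -1 | count_online_nodes should print 2 for the two-node setup in this guide.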

Install SAP system

After building the cluster, we are ready to install the SAP system.

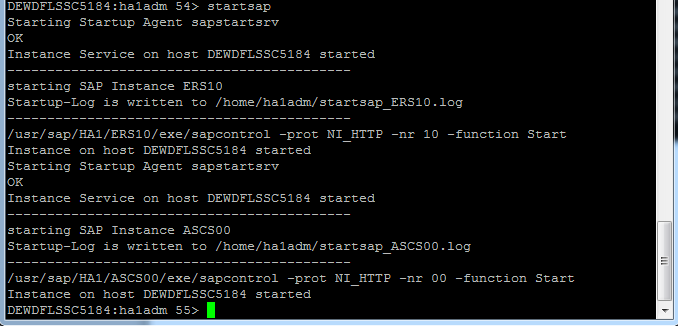

The ASCS instance should be installed first. On host1, start sapinst with the virtual hostname:

DEWDFLSSC5184:~/root/SWPM # ./sapinst SAPINST_USE_HOSTNAME=ascsha1

After the ASCS instance is installed on host1, the next step is to install the Enqueue Replication Server instance (ERS) on all nodes. Because the ERS installation differs slightly between host1 and host2, the steps are shown separately.

On host1:

The ERS should be installed using the local hostname, so start sapinst without any parameters.

This installation is quite simple, so the detailed screenshots are skipped.

On host2:

The ERS instance should also be installed on host2. Because host2 cannot access the SAP profile on host1, we set up an NFS share of the /sapmnt folder between host1 and host2.

This guide skips the detailed NFS configuration steps.

Once NFS is set up, make sure /sapmnt from host1 is mounted at the same path on host2.
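One way to verify the mount on host2 is to look for /sapmnt in the kernel's mount table. This is a minimal sketch, assuming a Linux /proc/mounts layout (device, mount point, file system type, ...); is_nfs_mounted is a hypothetical helper, and the optional second argument exists only so the check can be tried against a sample file.

```shell
# Succeed if <mount_point> is listed as an NFS mount in a mounts table.
# Usage: is_nfs_mounted <mount_point> [mounts_file]   (default: /proc/mounts)
is_nfs_mounted() {
    awk -v mp="$1" '
        $2 == mp && $3 ~ /^nfs/ { found = 1 }   # matches nfs, nfs4, ...
        END { exit found ? 0 : 1 }' "${2:-/proc/mounts}"
}
```

For example, is_nfs_mounted /sapmnt || echo "/sapmnt is not an NFS mount" can be run on host2 before starting sapinst.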

Start sapinst on host2, enter /sapmnt/<SID>/profile as the Profile Directory, and finish the installation of the ERS instance.

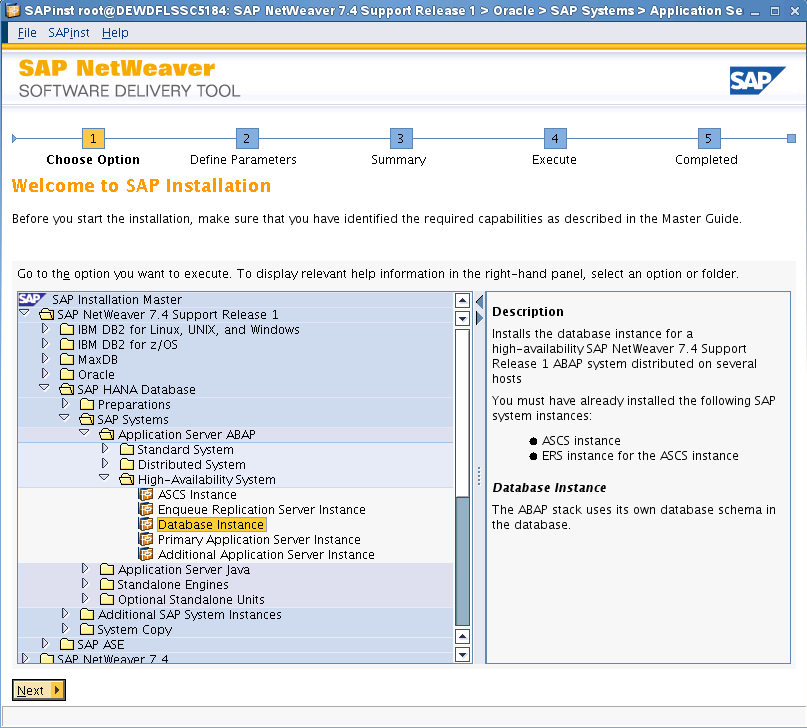

After the ERS instance is installed on both nodes, the next step is to install the Database instance.

In this scenario, the Database Instance is installed on host1, and HANA runs on a separate server outside the cluster.

After the Database Instance is installed, the next step is to install the Primary Application Server instance.

The Primary Application Server Instance (PAS) serves as the communication interface between the SAP system and user clients. In this scenario, PAS is installed on host2.

After all the installation steps are done, a distributed SAP system is in place. Log on to the system to check that it works.

(Figures: HANA DB at DEWDFLHANA1182; Host1; Host2)

Configure High Availability

First of all, configure a virtual IP address for the switchover cluster. The cluster uses this IP for public communication. You can add the resource in the Pacemaker GUI or with the crm command:

crm configure

crm(live)configure# primitive res_virtual_ip ocf:heartbeat:IPaddr params ip=10.68.231.252

crm(live)configure# commit

In this scenario we chose 10.68.231.252 as the virtual IP because no machine in the current subnet uses it.
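A typo in the address is easy to make, so it can help to validate the IP before handing it to the cluster. The sketch below checks the format and prints the one-line form of the command above without running it. The helper names is_ipv4 and vip_primitive_cmd are hypothetical; the resource name and resource agent are the ones used in this guide.

```shell
# Succeed if the argument is a dotted-quad IPv4 address with octets 0-255.
is_ipv4() (
    case $1 in
        *[!0-9.]* | .* | *. | *..* | "") exit 1 ;;
    esac
    IFS=.
    set -- $1
    [ $# -eq 4 ] || exit 1
    for octet in "$@"; do
        [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || exit 1
    done
)

# Print (do not run) the crm command that defines the virtual IP resource.
# Usage: vip_primitive_cmd <resource_name> <ip>
vip_primitive_cmd() {
    is_ipv4 "$2" || { echo "invalid IPv4 address: $2" >&2; return 1; }
    echo "crm configure primitive $1 ocf:heartbeat:IPaddr params ip=$2"
}
```

Calling vip_primitive_cmd res_virtual_ip 10.68.231.252 prints the command for review. Note that this only checks the format; it does not tell you whether another machine already uses the address.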

After the virtual IP is added, you can see that the resource is running (ignore z_sap_group and the other resource; they are discussed later).

To make the ASCS instance available on host2 so that it can take over when host1 is down, switch the virtual IP to host2 and register ASCS on host2.

In Pacemaker, select host1 and put the node into standby. The virtual IP is now running on host2.
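The same switch can be done from the terminal with the crm shell. The sketch below wraps the two node commands in a dry-run helper so you can see what would be executed first. The functions run, standby_node and online_node and the DRY_RUN variable are assumptions made for this example; crm node standby and crm node online are the underlying crm shell commands.

```shell
# Echo the command by default; execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# Put a node into standby so that its resources move to the other node.
standby_node() { run crm node standby "$1"; }

# Bring the node back online so that it can host resources again.
online_node() { run crm node online "$1"; }
```

With the default dry run, standby_node host1 only prints "+ crm node standby host1". Setting DRY_RUN=0 on a cluster node executes the command.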

Then set up an NFS share for the ASCS instance folder (in this case, /usr/sap/HA1/ASCS00 on host1), and make sure the ASCS instance folder from host1 is mounted at the same path on host2.

On host2, register the ASCS instance with the command:

/usr/sap/hostctrl/exe/saphostctrl -function RegisterInstanceService -sid HA1 -nr 00 -saplocalhost ascsha1

Now add the ASCS instance as a resource in the cluster with the command:

crm configure primitive rsc_sapinst_HA1_ASCS00_sap ocf:heartbeat:SAPInstance params InstanceName="HA1_ASCS00_ascsha1" AUTOMATIC_RECOVER="true" START_PROFILE="/sapmnt/HA1/profile/HA1_ASCS00_ascsha1" op monitor interval="120s" timeout="60s" start_delay="120s" op start interval="0" timeout="120s" op stop interval="0" timeout="180s" on_fail="block"

(Adjust the parameters in the command to match your own system.)

As stated in Running SAP NetWeaver on SUSE Linux Enterprise Server with High Availability Simple Stack, for SAPInstance ASCS00 (the ABAP central services instance) you should at least adjust the resource names and the parameters InstanceName, AUTOMATIC_RECOVER and START_PROFILE. The START_PROFILE must be specified with its full path.
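In the command above, InstanceName and START_PROFILE follow one pattern: SID, instance name and virtual hostname joined by underscores, with the profile stored under /sapmnt/<SID>/profile. The sketch below derives both values for another system. The helper sapinstance_params is hypothetical, and the pattern is inferred from the example in this guide, so check it against the profile that actually exists on your system.

```shell
# Derive the SAPInstance parameters from SID, instance name and virtual hostname.
# Prints: <InstanceName> <START_PROFILE>
# Usage: sapinstance_params <SID> <instance> <virtual_hostname>
sapinstance_params() (
    sid=$1
    inst=$2
    vhost=$3
    name="${sid}_${inst}_${vhost}"
    echo "$name /sapmnt/$sid/profile/$name"
)
```

For the system in this guide, sapinstance_params HA1 ASCS00 ascsha1 prints HA1_ASCS00_ascsha1 followed by /sapmnt/HA1/profile/HA1_ASCS00_ascsha1.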

You can check in the Pacemaker GUI whether the resource was added.

Now group the two resources with the command:

crm configure group <group_name> <resource_1> <resource_2> ...

Now you can see the two resources are combined into a group.
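For the resources in this guide, the group command could be filled in as sketched below. Members of a Pacemaker group are started in the order listed and stopped in reverse, so the sketch lists the virtual IP before the ASCS instance; that ordering is my assumption of what fits this setup. The helper group_cmd is hypothetical and only prints the command.

```shell
# Print (do not run) the crm command that groups resources.
# Members start in the order given and stop in reverse order.
# Usage: group_cmd <group_name> <resource_1> <resource_2>...
group_cmd() {
    [ $# -ge 3 ] || { echo "usage: group_cmd <group> <res1> <res2>..." >&2; return 1; }
    echo "crm configure group $*"
}
```

Calling group_cmd z_sap_group res_virtual_ip rsc_sapinst_HA1_ASCS00_sap prints the full command so you can review it before running it on a cluster node.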

A simple HA system is now built. You can bring both nodes online again and move the ASCS instance back to host1.

Enjoy : )
