Data Quality Cockpit using SAP Information Steward...

Overview

There has been a lot of advancement in the area of information management in the last couple of years. Companies have invested millions of dollars and implemented many tools and processes to improve the way they manage their information assets. To meet this demand, vendors have expanded their portfolios to cater to business requirements: some have developed solutions themselves, while others have acquired specialists with proven tools to address the market quickly. SAP is no different; it has continuously innovated while also pursuing acquisitions to provide tools that meet customers' demands. Two such solutions from SAP are SAP Information Steward and SAP Data Services. Together with SAP Master Data Management, these two solutions are primarily aimed at solving the puzzle of master data quality.

Business Data Quality Problem

Since SAP introduced its master data management solution, many customers have adopted it to tackle the problem of bad and redundant data. It is a well-known fact that master data problems are more often talked about than dealt with. The tangible benefits of good-quality master data are not easily quantifiable and hence receive less focus from the business. In the recent past, customers have implemented MDM solutions hoping they would solve their master data quality issues. It is often believed that MDM solves all master data problems and that implementing MDM is sufficient to manage data quality. While MDM has solved the problem to some extent in managing and governing master data, it has not solved it completely in the area of data quality. The reason is that tools designed for managing master data are not well suited to cleansing and enriching it.

Some common complaints are:

- Poor governance and stewardship lead to bad data quality

- Rules are insufficient to identify bad and duplicate data

- No holistic view of data quality in the productive database

- Data in the productive database has degraded over time

- Periodic data quality checks on the productive database are not possible due to tool limitations

- Mergers and acquisitions lead to unclean data due to poor stewardship

Data Quality Cockpit

MDM as a tool is meant to consolidate, centralize and harmonize master data across connected systems. However, it lacks in-depth data cleansing, transformation, enrichment and ongoing data quality assessment capabilities. The crux of the problem is enabling the business to control, monitor and maintain data quality on a continual basis while leveraging the existing master data setup.

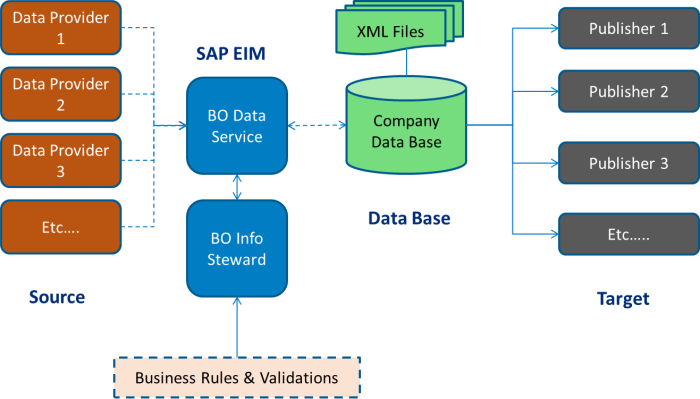

The answer to this problem is a combination of SAP Information Steward and SAP Data Services, which can be deployed in a client's existing landscape with minimal disruption to established business processes. These two tools can complement the master data solution or work independently to provide a comprehensive data quality management solution. SAP Information Steward provides the right tools to understand where the fundamental problems lie, and therefore where to focus the solution. Combined with the ETL capabilities of SAP Data Services, this provides a complete solution. Together they form the key elements of the data quality cockpit for information management.

What can SAP Information Steward do?

SAP Information Steward (IS) has key capabilities that enable the business to assess, analyze and quantify the data quality problem. Using SAP IS for financial impact analysis can be the very first step toward solving the data quality (DQ) problem, and the DQ scorecard fulfills this to a large extent.

SAP IS also has capabilities that support fixing bad data. Basic and advanced profiling, a business-user-friendly rule-building mechanism, metadata management, impact analysis, data lineage and the Cleansing Package Builder are key features for analyzing data cleansing and standardization requirements. The resulting rules can then be exported to SAP Data Services for reuse and for the actual cleansing.

What can SAP Data Services do?

SAP Data Services (DS) complements SAP IS by providing the required support for the actual cleansing, transformation, standardization and de-duplication of data. SAP DS can be used either independently or in conjunction with SAP IS: rules can be imported from SAP IS, and additional rules can be set up within SAP DS. SAP DS has its own set of transforms, directories (such as address and company name), validations and matching rules. The data flow functionality allows data to be cleansed and enriched step by step: once data is fed into a data flow, it can be taken through various steps in which it is cleansed, standardized, transformed and enriched to improve its quality. The clean data can then be passed to the Match transform to identify the unique records and, among multiple matches, the survivor record.
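The step-by-step idea behind a data flow can be illustrated in plain Python. This is not Data Services code (DS data flows are built in the Data Services Designer); the step functions, the legal-form mapping and the tiny postal-code "directory" below are hypothetical stand-ins for cleansing, standardization and enrichment transforms, chained so that each record passes through every step in order.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]
Step = Callable[[Record], Record]


def trim_whitespace(rec: Record) -> Record:
    # Cleansing: strip leading/trailing blanks and collapse internal runs of spaces
    return {k: " ".join(v.split()) for k, v in rec.items()}


def standardize_company_suffix(rec: Record) -> Record:
    # Standardization: upper-case the name and map legal-form variants to one
    # canonical form (hypothetical mapping, for illustration only)
    suffixes = {"INCORPORATED": "INC", "INC.": "INC", "LIMITED": "LTD", "LTD.": "LTD"}
    words = rec.get("name", "").upper().split()
    if words and words[-1] in suffixes:
        words[-1] = suffixes[words[-1]]
    return {**rec, "name": " ".join(words)}


def enrich_country(rec: Record) -> Record:
    # Enrichment: fill a missing country from a postal-code lookup
    # (toy "directory", hypothetical; real address directories are far richer)
    directory = {"10115": "DE", "75001": "FR"}
    if not rec.get("country"):
        return {**rec, "country": directory.get(rec.get("postcode", ""), "")}
    return rec


def run_data_flow(records: List[Record], steps: List[Step]) -> List[Record]:
    # Each record passes through the steps in order, like transforms wired in a data flow
    out = []
    for rec in records:
        for step in steps:
            rec = step(rec)
        out.append(rec)
    return out


if __name__ == "__main__":
    raw = [{"name": "  Acme   Incorporated ", "postcode": "10115", "country": ""}]
    print(run_data_flow(raw, [trim_whitespace, standardize_company_suffix, enrich_country]))
```

Keeping each step as a small, independent function mirrors how transforms can be added, reordered or reused without touching the others.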

Using SAP IS + SAP DS – A typical business application scenario

In a typical business scenario, data resides in ERP or master data applications. The SAP DQ tools can readily integrate with source and destination systems via database, application or file-based connections for data exchange.

Once data is extracted to SAP IS and formatted, the business can run various kinds of profiling to assess data quality. The next step is to understand how much a single instance of bad data costs the business, and to use SAP IS to perform a financial impact analysis and build a business case for solving the data quality problem.
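Information Steward performs profiling through its own user interface. As a rough illustration of what column profiling measures (completeness, distinct values and value patterns), here is a hypothetical Python sketch; the function names and the "A"/"9" pattern notation are assumptions chosen for illustration, not IS output formats.

```python
import re
from collections import Counter
from typing import Dict, List, Optional


def to_pattern(value: str) -> str:
    # Pattern profiling: ASCII letters -> 'A', digits -> '9', other characters kept as-is
    return re.sub(r"[0-9]", "9", re.sub(r"[A-Za-z]", "A", value))


def profile_column(values: List[Optional[str]]) -> Dict[str, object]:
    # Treat None and whitespace-only strings as missing values
    non_blank = [v for v in values if v is not None and v.strip() != ""]
    return {
        "rows": len(values),
        "completeness": len(non_blank) / len(values) if values else 0.0,
        "distinct": len(set(non_blank)),
        # Most frequent patterns first; rare patterns often point at bad data
        "patterns": Counter(to_pattern(v) for v in non_blank).most_common(),
    }
```

For a postal-code column such as `["10115", "1011", None, "", "75001"]`, the sketch reports 60% completeness and two patterns, `99999` (twice) and `9999` (once); the four-digit value is the kind of outlier profiling is meant to surface.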

SAP IS and SAP DS together can then be used to solve the data quality problem: SAP IS provides the governance and analytical capabilities, and SAP DS does the actual cleansing and enrichment. “Data is only as good as the underlying rules that govern the data.” Building a cleansing package with business input on the validations and rules governing the data provides the platform for ensuring that data entering the system is good. Additionally, industry-standard transforms such as Address, Company Name and Standardization can further cleanse and enrich the data. Matching transforms can then be applied to the cleansed and enriched data to identify duplicates and retain only unique records in the system.
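Matching and survivorship can be sketched as two decisions: which records belong together, and which one survives. The DS Match transform uses configurable, typically fuzzy, comparison rules; the sketch below deliberately swaps in a much simpler technique (exact comparison on a normalized name plus postcode) and picks the most complete record as survivor. The match key, field names and survivorship criterion are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

Record = Dict[str, str]


def match_key(rec: Record) -> str:
    # Hypothetical match rule: alphanumeric, upper-cased name and postcode must agree exactly
    name = "".join(ch for ch in rec["name"].upper() if ch.isalnum())
    return f"{name}|{rec['postcode']}"


def completeness(rec: Record) -> int:
    # Number of populated fields; used here as the survivorship criterion
    return sum(1 for v in rec.values() if v)


def deduplicate(records: List[Record]) -> List[Record]:
    # Group records into match groups by key
    groups: Dict[str, List[Record]] = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)
    # Survivor = most complete record in each group (max() keeps the first on ties)
    return [max(group, key=completeness) for group in groups.values()]
```

With "Acme Inc" and "ACME, INC." at the same postcode, both records fall into one group, and the one that also carries a phone number survives.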

The cleansing package should be updated regularly with new or revised rules and validations so that it does not become outdated, because data changes and matures over time. More, and more complex, rules can be built to ensure that bad or redundant data is filtered out and only a golden copy of each record is stored in the productive instance. The Data Quality Advisor, recently introduced in SAP IS, uses statistical analysis and content types to guide data stewards in rapidly developing cleansing and matching rules to improve the quality of their data assets.

There is also the flexibility to build the cleansing package in SAP IS and transport it to SAP DS for reuse. The rules can be packaged as services so that other processes that enter data into the system can use them. Data quality can thus be governed not just once but continually: at the point of data creation, at data import, and during periodic extraction and review of data from the productive instance.
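The point of defining rules once and reusing them is that the same logic guards both data entry and periodic reviews. One way to picture this is a single rule set shared by an entry-time check and a batch review. This is a plain Python sketch, not IS/DS rule syntax or their web-service interface; the rule names and record fields are hypothetical.

```python
import re
from typing import Callable, Dict, List

Record = Dict[str, str]
Rule = Callable[[Record], bool]

# Hypothetical rule set; in practice rules would be maintained by data stewards
RULES: Dict[str, Rule] = {
    "name_not_blank": lambda r: bool(r.get("name", "").strip()),
    "postcode_5_digits": lambda r: re.fullmatch(r"\d{5}", r.get("postcode", "")) is not None,
}


def validate(record: Record, rules: Dict[str, Rule] = RULES) -> List[str]:
    """Return the names of failed rules (an empty list means the record passes)."""
    return [name for name, rule in rules.items() if not rule(record)]


def accept_at_entry(record: Record) -> bool:
    # Point of creation: reject the record before it reaches the productive system
    return not validate(record)


def batch_review(records: List[Record]) -> Dict[str, int]:
    # Periodic review: count failures per rule across an extract of existing data
    counts = {name: 0 for name in RULES}
    for rec in records:
        for name in validate(rec):
            counts[name] += 1
    return counts
```

Because both entry points call the same `validate`, adding or revising a rule in one place changes the behavior of every consumer.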

What is the ROI for business?

To understand the return on investing in the tools and additional processes, the impact analysis feature of IS can be leveraged. Identifying the key attributes that define the data, determining the cost of each bad attribute value and its effect on the record, analyzing the impact of bad data on the business, and extrapolating this to the full data set gives a sense of the magnitude of the impact bad data can have on the overall business. Translated into potential savings and presented in terms the business understands, this answers questions about ROI.
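The impact analysis described above boils down to simple arithmetic: for each key attribute, the failure rate observed in a sample is multiplied by the size of the full data set and by an estimated cost per bad value, and the results are summed. The sketch below is not how IS computes its impact analysis; the attribute names, costs and the ROI definition (net benefit divided by investment) are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AttributeImpact:
    name: str
    failed_in_sample: int    # sample records failing the rule for this attribute
    cost_per_failure: float  # estimated business cost of one bad value (assumption)


def estimated_cost(impacts: List[AttributeImpact], sample_size: int, population: int) -> float:
    total = 0.0
    for a in impacts:
        failure_rate = a.failed_in_sample / sample_size
        # Extrapolate the sample failure rate linearly to the full record population
        total += failure_rate * population * a.cost_per_failure
    return total


def simple_roi(annual_savings: float, investment: float) -> float:
    # ROI = (benefit - cost) / cost
    return (annual_savings - investment) / investment
```

For example, 50 bad addresses and 10 bad tax IDs in a 1,000-record sample, extrapolated to 200,000 records at hypothetical costs of 12 and 40 per bad value, give an estimated cost of 200,000. Against a hypothetical investment of 150,000, that corresponds to an ROI of about 33%, assuming all of that cost is avoided.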

Some Challenges

To make good use of the DQ cockpit, a proper data quality or governance organization is required. Data stewards and owners need to engage continuously with business users to understand changing data and validation needs, and to build new rules or keep existing ones up to date. As data matures and expires over time, the same rules may not always hold, so the rules and validations that govern the data need to change and be refined continuously as the business demands. Analysts can run daily, weekly or other periodic jobs to generate reports that help the business and information stewards understand the state of data quality and take ongoing measures to keep data clean and reliable.
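Periodic jobs like these typically roll individual rule results up into a single score that can be tracked over time. A minimal sketch of that roll-up, assuming per-dimension pass rates in percent and business-agreed weights; the dimension names, weights and traffic-light thresholds are hypothetical, and this is not the exact IS scorecard formula.

```python
from typing import Dict


def dq_score(pass_rates: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-dimension pass rates (0..100)."""
    total_weight = sum(weights[d] for d in pass_rates)
    return sum(pass_rates[d] * weights[d] for d in pass_rates) / total_weight


def status(score: float, green: float = 90.0, amber: float = 75.0) -> str:
    # Traffic-light thresholds are hypothetical and would be agreed with the business
    if score >= green:
        return "green"
    if score >= amber:
        return "amber"
    return "red"
```

With pass rates of 95 (completeness, weight 2), 80 (validity, weight 1) and 98 (uniqueness, weight 1), the weighted score is 92, which this sketch reports as "green".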

Key Benefits

Below are some key benefits of the tools:

- An easy plug-in solution that can extract in-process data at a particular stage of a business process, cleanse/enrich it and put it back

- Rules that data admins/stewards can easily configure for data validation and reuse interchangeably in IS and DS

- Use of external services for enriching data, such as address directory services for different countries

- Periodic health checks and a dashboard view to ensure data standards and quality are maintained at appropriate levels

- Identification of duplicates, determination of survivors and maintenance of history for merged records

- Financial impact analysis and ROI calculation

Conclusion

SAP Information Steward and SAP Data Services are important tools for data stewards, analysts, information governance experts and business users to conduct regular health checks on data quality and take timely corrective action. They give a clear picture of where data quality stands and what to fix to get the maximum benefit. Data quality management must be a continuous exercise, not a one-time one. Continuous improvement in data quality, enabled by SAP IS and SAP DS, provides the foundational elements that enable governance and improve trust in the organization's data infrastructure.
