A tale of transaction management in ABAP


"A transaction management? Why do we need some framework doing that"?

I had to explain to my management why we wanted to go for BOPF when we started designing our custom application.

Although I knew it would be quite tricky to convey the benefits of an application framework to non-developers (sometimes it's even hard to discuss this with developers :wink: ), I could not just tell them "because we need it", as I had to convince them to upgrade the landscape to at least EhP5.

So I listed some of the benefits. I focused on the features supporting the development process:

- "Living models" in the system

- Enhanced modularization

- Reuse

- Common consumer interface

- Adapter for web-based user interfaces (FBI)

But I also mentioned some more technical ones (as I thought it might be a good idea to sound knowledgeable :wink: ):

- Database abstraction

- Buffering

- Transaction management

This was not the best idea I had. Actually, the audience knew close to nothing about what it takes to implement a full-fledged transactional application, but some of them had attended BC400 some decades ago. And they had a good memory:

"SAP has an excellent database abstraction, buffering and an integrated transaction management". And they could not resist adding a "Don't you know that?" after their speech.

What they were referring to were the features of OpenSQL (as an abstraction of the vendor-specific database dialects), the database buffer and the logical unit of work. And of course this is true.

So I had to argue why these well-known concepts are not state of the art and where their limitations are.

While this was possible for database abstraction (as not all information is persisted), promoting the benefits of a buffer which does not directly flush into the database was more difficult. In the end, I resorted to an aspect which I considered a minor one, but which finally convinced them.

Implicit commits, buffering and a transaction manager

"How do you handle implicit commits when calling a remote function module using RFC?", I asked. They did not understand.

Of course, as with most systems, our application should connect to other SAP systems via RFC. But to my surprise, no one knew that we might have an architectural challenge when not using proper buffer and transaction management.

I implemented a short report illustrating the need for transaction management and application-server-memory-based buffering with BOPF.

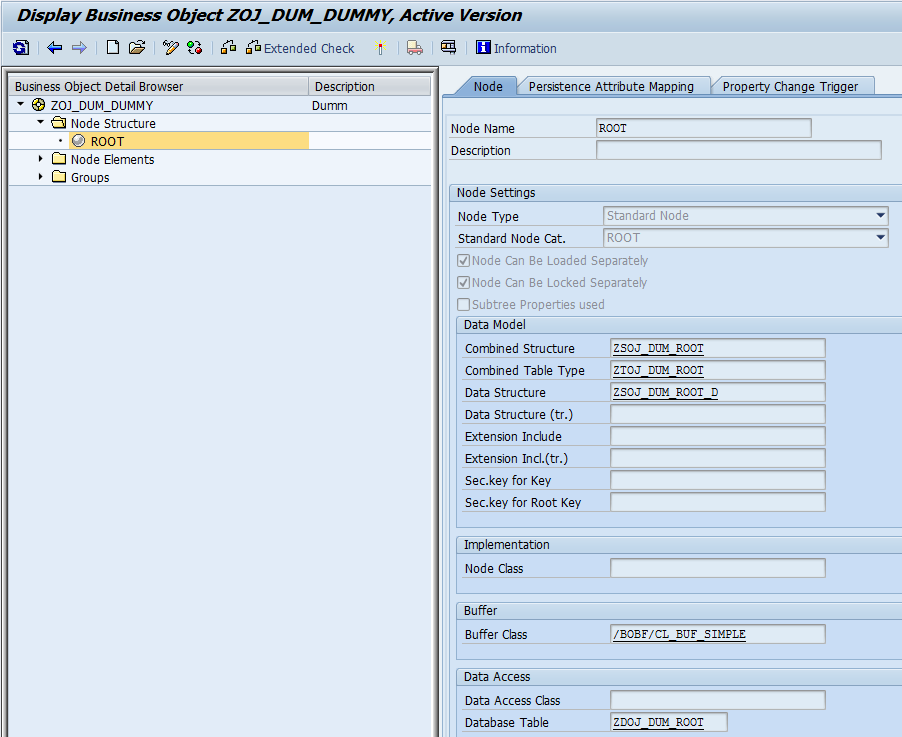

The model

For the sake of having a persistence to address, I modeled a very simple dummy business object with only one node and one attribute and generated the DB table. The attribute is considered to be unique.

The sample transaction

For a short showcase, I implemented a sample consumer accessing the object. It creates a new instance, rolls back the transaction, and tries to create the same instance again. As a variation, it also calls a remote function module within the sequence.

As the technical key of the instance is unique on the DB, subsequent creates / INSERTs are expected to fail unless there is a rollback in between.

Alternative implementations:

Classical OpenSQL-based transaction

In the "classical" approach I did not introduce a transaction layer but did it the way ABAPers (used to) do: simply read and update the DB table directly. I call this the DB-interface approach, as it treats the database table as a first-class citizen of the model and interacts with it like a programming interface. Buffering is taken care of by the application server's database buffer. This also means that no other buffers (internal tables) should be used within the application, so that an OpenSQL SELECT can always get the current state. For managing the transaction, the well-known COMMIT WORK and ROLLBACK WORK statements were as good as ever.

We all love OpenSQL; any ABAPer who has never done it like that in their career may throw the first stone at me :wink:
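The original report is only available as a picture, so the following is a minimal sketch of what the classical sequence might look like, not the author's code. It assumes a hypothetical transparent table `ZDUMMY` with the key fields `MANDT` and `ID` standing in for the generated DB table.

```abap
REPORT zdummy_classic_txn.

* Assumption: ZDUMMY is a hypothetical transparent table with the
* key fields MANDT and ID; it stands in for the generated DB table.
DATA ls_dummy TYPE zdummy.

ls_dummy-id = 'A1'.

* First INSERT: sy-subrc is 0 if no row with this key exists yet.
INSERT zdummy FROM ls_dummy.
WRITE: / 'First INSERT, sy-subrc:', sy-subrc.

* Undo all changes of the current database LUW.
ROLLBACK WORK.

* Second INSERT of the same key: it can only succeed because of the
* rollback; without it, sy-subrc would be 4 (duplicate key).
INSERT zdummy FROM ls_dummy.
WRITE: / 'Second INSERT, sy-subrc:', sy-subrc.

* Leave the table unchanged after the demo.
ROLLBACK WORK.
```

The table is both the model and the buffer here, which is why the sketch needs no additional data structures.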

BOPF-based transaction

With BOPF, I used the public interface of the service manager to access the dummy business object. Via the framework, a generic buffer using internal (member) tables is instantiated which takes care of returning the requested state (in this sample the current one) to the consumer reading the data. All transaction-related operations were done - well - using the BOPF transaction manager.
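As a rough sketch of the same create-and-rollback step through the BOPF consumer API (again not the original report): `ZIF_DUMMY_C` and `ZS_DUMMY_ROOT` are hypothetical names for the generated constants interface and the combined structure of the dummy business object, and the attribute name `ID` is an assumption as well.

```abap
REPORT zdummy_bopf_txn.

* Assumptions: ZIF_DUMMY_C is the (hypothetical) generated constants
* interface of the dummy BO, ZS_DUMMY_ROOT its combined structure
* with the single attribute ID.
DATA: lo_txn_mgr TYPE REF TO /bobf/if_tra_transaction_mgr,
      lo_srv_mgr TYPE REF TO /bobf/if_tra_service_manager,
      lo_message TYPE REF TO /bobf/if_frw_message,
      lr_root    TYPE REF TO zs_dummy_root,
      ls_mod     TYPE /bobf/s_frw_modification,
      lt_mod     TYPE /bobf/t_frw_modification.

lo_txn_mgr = /bobf/cl_tra_trans_mgr_factory=>get_transaction_manager( ).
lo_srv_mgr = /bobf/cl_tra_serv_mgr_factory=>get_service_manager(
               zif_dummy_c=>sc_bo_key ).

* Describe the new root instance.
CREATE DATA lr_root.
lr_root->key = /bobf/cl_frw_factory=>get_new_key( ).
lr_root->id  = 'A1'.

ls_mod-node        = zif_dummy_c=>sc_node-root.
ls_mod-change_mode = /bobf/if_frw_c=>sc_modify_create.
ls_mod-key         = lr_root->key.
ls_mod-data        = lr_root.
APPEND ls_mod TO lt_mod.

* The create only reaches the BOPF buffer in application server
* memory; nothing is written to the database table yet.
lo_srv_mgr->modify(
  EXPORTING it_modification = lt_mod
  IMPORTING eo_message      = lo_message ).

* Rollback: discard the buffered changes of this transaction.
lo_txn_mgr->cleanup( ).
```

Calling `save( )` on the transaction manager instead of `cleanup( )` would be the point at which the buffered instance is written to the database.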

The result

As the code shows, direct DB interaction in ABAP is very efficient with respect to lines of code. Updating data using the BOPF interface methods in particular is much less convenient and takes much more space on the screen. But that was not what counted.

When executing the transactions, the same controller printed the intermediate results to the screen as an execution log.

Execution log of the "classical" implementation

Execution log of the BOPF based implementation

We can clearly see that the transactions behave very similarly. As expected, after each INSERT of the classical transaction the result is persisted on the database and thus globally visible. As BOPF only inserts into internal tables, a SELECT can't find them.

But after the RFC-based function module reading some remote data is called, the logs vary:

Although the transaction is rolled back afterwards, the changed data was persisted in the "classical" implementation. The reason is that a remote function call triggers an implicit database commit in the calling system. With the application-server-memory-based buffering of BOPF, this DB COMMIT is not an issue. Everything has been properly undone.
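The effect can be reproduced with a few lines. This is a minimal sketch under assumptions, not the original report: `ZDUMMY` is a hypothetical table with the key fields `MANDT` and `ID`, and `ZREMOTE_DEST` is a hypothetical RFC destination. `RFC_PING` is used only because it is a standard function module without parameters.

```abap
REPORT zdummy_rfc_commit.

* Assumptions: ZDUMMY is a hypothetical table with key fields MANDT
* and ID; ZREMOTE_DEST is a hypothetical RFC destination (SM59).
DATA: ls_dummy TYPE zdummy,
      lv_id    TYPE zdummy-id,
      lv_msg   TYPE c LENGTH 255.

ls_dummy-id = 'A1'.
INSERT zdummy FROM ls_dummy.

* A synchronous RFC triggers an implicit database commit in the
* calling system, so the INSERT above is committed at this point.
CALL FUNCTION 'RFC_PING'
  DESTINATION 'ZREMOTE_DEST'
  EXCEPTIONS
    system_failure        = 1 MESSAGE lv_msg
    communication_failure = 2 MESSAGE lv_msg
    OTHERS                = 3.

* Too late: the rollback only covers changes made after that commit.
ROLLBACK WORK.

SELECT SINGLE id FROM zdummy INTO lv_id WHERE id = ls_dummy-id.
IF sy-subrc = 0.
  WRITE: / 'Row survived the ROLLBACK WORK.'.
ELSE.
  WRITE: / 'Row was rolled back.'.
ENDIF.
```

With a buffer in application server memory there is nothing on the database for the implicit commit to make permanent, which is the design point the comparison is meant to show.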

I showed this to some of my fellow developers and was surprised to hear that almost nobody knew about this benefit.

But this just shows the real value-add: with a well-engineered framework which takes care of all the technology handling, developers simply don't have to know about many of those details and can concentrate on what it actually takes: implementing business logic.

What do you think? How do you handle transactions and buffering in your ABAP applications?

I'd be most interested to read your thoughts in the comments!

Oliver
