How to improve the initial load


- Introduction

- Access Plan BTC Work Process Requirements

- Which Process Should You Use To Load Large Tables?

- What to know and do before you start

- ECC Source System Preparation

- NetWeaver-Based SAP System Landscape Transformation (SLT) Systems

- Load Monitoring

- Process Summary Checklist

- Post Load Considerations

• Complex table partitioning models for the larger tables, for example, partitioning on a substring (the last character) of a column value.

• Oracle database statistics that are ‘frozen’ to remove Oracle CBO operations from the data access model.

• The source ECC system was also consuming system-crashing volumes of PSAPTEMP.

• The statistics freeze prevented us from creating indexes for optimized parallel SLT loads.

Each access plan job requires one SAP BTC work process on both the SLT and ECC systems.

Assume that your SLT server has 15 BTC work processes. In SLT transaction LTR, you have configured a total work process allocation of 10, with 8 of those 10 allocated to initial loads. This leaves 5 BTC work processes (15 - 10) available.
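The arithmetic in this example can be sketched as a small planning helper. This is not an SAP tool: the class and field names are hypothetical, and the 3 free BTC processes on ECC used below is an illustrative value. Because every access plan job needs one BTC work process on both SLT and ECC, the side with fewer free processes caps how many of these jobs can run at the same time.

```python
from dataclasses import dataclass


@dataclass
class SltBtcPlan:
    """Hypothetical model of the SLT-side BTC budget described above."""
    total_btc: int         # BTC work processes configured on the SLT server
    ltr_total: int         # total work process allocation set in transaction LTR
    ltr_initial_load: int  # part of ltr_total reserved for initial loads

    def free_btc(self) -> int:
        """SLT BTC work processes not claimed by the LTR allocation."""
        if self.ltr_initial_load > self.ltr_total:
            raise ValueError("initial-load allocation cannot exceed the LTR total")
        return self.total_btc - self.ltr_total

    def max_parallel_access_plans(self, free_btc_on_ecc: int) -> int:
        """Each access plan job needs one BTC process on SLT AND one on ECC,
        so the scarcer side limits how many can run concurrently."""
        return max(0, min(self.free_btc(), free_btc_on_ecc))


plan = SltBtcPlan(total_btc=15, ltr_total=10, ltr_initial_load=8)
print(plan.free_btc())                    # 5
print(plan.max_parallel_access_plans(3))  # 3 (ECC has only 3 free BTC processes)
```

If ECC has more free BTC processes than SLT, the SLT figure (5 here) becomes the limit instead.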

• Additional SAP batch (BTC) work processes will be needed on the source and SLT servers to run the multiple access plan jobs.

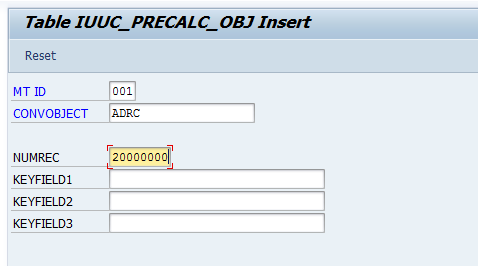

• Table data will be divided into separate access plans by record count (NUMREC in IUUC_PRECALC_OBJ) of the physical cluster table (RFBLG, for example), not the logical cluster table (BSEG, for example).

• Be careful not to create too many access plans, because each of them must be processed by yet another batch job in the sender system. Use at most 8 access plans.

• Requires use of table DMC_INDXCL on source ECC system

• Simple configuration

• Normally not needed, unless you want not only to parallelize the processing but also to restrict the set of data processed, for example, by filtering on a specific organizational unit or fiscal year.

• Requires use of table DMC_INDXCL on source ECC system

• More complex configuration steps – contact SAP support

• Table data will be divided into separate access plans by record count (NUMREC in IUUC_PRECALC_OBJ). For each access plan, another batch job runs in the sender system, and each of these jobs performs a full table scan.

• Requires use of table DMC_INDXCL on source ECC system

• Simple configuration
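As a rough sizing aid, the split can be estimated arithmetically. This sketch assumes that NUMREC is the maximum number of records assigned to each access plan, so the plan count is the record count divided by NUMREC, rounded up; the function names and the 120,000,000-row figure are hypothetical. For cluster tables, pass the row count of the physical cluster (RFBLG), not of the logical table (BSEG).

```python
MAX_ACCESS_PLANS = 8  # recommended upper bound from the text


def access_plan_count(physical_rows: int, numrec: int) -> int:
    """Access plans produced if each covers at most `numrec` records (assumption).

    For cluster tables, `physical_rows` is the row count of the physical
    cluster (e.g. RFBLG), not of the logical table (e.g. BSEG).
    """
    if physical_rows < 0 or numrec <= 0:
        raise ValueError("physical_rows must be >= 0 and numrec > 0")
    return max(1, -(-physical_rows // numrec))  # integer ceiling division


def smallest_numrec(physical_rows: int, max_plans: int = MAX_ACCESS_PLANS) -> int:
    """Smallest NUMREC that keeps the split at or below `max_plans` access plans."""
    if physical_rows < 0 or max_plans <= 0:
        raise ValueError("physical_rows must be >= 0 and max_plans > 0")
    return max(1, -(-physical_rows // max_plans))


rows = 120_000_000  # hypothetical RFBLG row count
numrec = smallest_numrec(rows)
print(numrec, access_plan_count(rows, numrec))  # 15000000 8
```

Integer ceiling division is used instead of floating-point division so that very large row counts are not rounded incorrectly.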

- You may need to request storage and tablespace from the customer.

- Add the security profile S_BTCH_ADMIN to your SLT CPIC user on the source ECC system. Depending on the Basis SP, the user may not automatically be able to release batch jobs, in which case your SLT access plan jobs will sit waiting to be released manually. Therefore, make sure that you obtain the latest version of role SAP_IUUC_REPL_REMOTE and assign it to the user of the RFC destination pointing from the SLT system to the source system. You can get this role from note 1817026.

- Oracle and ASSM issues: Oracle 10.2.0.4 has issues with LOB tables that cause inserts into table DMC_INDXCL to hang. See note 1166242 for the workaround: alter table SAPR3.DMC_INDXCL modify lob (CLUSTD) (pctversion 10);

- Minimum DMIS SP: DMIS 2010 SP07 or DMIS 2011 SP02. Using the most recent DMIS SP is much better.

- For DMIS 2010 SP07 or DMIS 2011 SP02, ensure that note 1745666 is applied via SNOTE.

Note: The corrections of this note are included in DMIS 2010 SP08 and DMIS 2011 SP03.

- For DMIS 2010 SP07 or DMIS 2011 SP02, ensure that note 1751368 is applied via SNOTE.

- SLT: Minimum DMIS SP: DMIS 2010 SP07 or DMIS 2011 SP02.

- For DMIS 2010 SP07 or DMIS 2011 SP02: Ensure that notes 1745666 and 1751368 are applied via SNOTE.

- For DMIS 2010 SP08 or DMIS 2011 SP03: Ensure that all notes listed in Note 1759156 - Installation/Upgrade SLT - DMIS 2011 SP3 / 2010 SP8 are applied and current.
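The DMIS prerequisites above can be encoded as a small lookup, for example to check several systems in one pass. This is a hypothetical helper, not an SAP tool; it covers only the SP levels named in this section and returns no actions for later SPs, whose note requirements this guide does not list.

```python
from typing import List

# Minimum supported SP per DMIS release, as listed above.
MIN_SP = {"2010": 7, "2011": 2}
# SP levels that need individual corrections applied via SNOTE (ECC and SLT).
SNOTE_NOTES = {("2010", 7): ["1745666", "1751368"], ("2011", 2): ["1745666", "1751368"]}
# SP levels where, on the SLT system, all notes listed in note 1759156 apply.
COLLECTIVE_NOTE_SPS = {("2010", 8), ("2011", 3)}


def dmis_prerequisites(release: str, sp: int, system: str) -> List[str]:
    """Return the note-related actions for one system ("ECC" or "SLT")."""
    if release not in MIN_SP:
        raise ValueError(f"unknown DMIS release {release!r}")
    if system not in ("ECC", "SLT"):
        raise ValueError("system must be 'ECC' or 'SLT'")
    if sp < MIN_SP[release]:
        raise ValueError(f"DMIS {release} SP{sp:02d} is below minimum SP{MIN_SP[release]:02d}")
    actions = [f"apply note {n} via SNOTE" for n in SNOTE_NOTES.get((release, sp), [])]
    if system == "SLT" and (release, sp) in COLLECTIVE_NOTE_SPS:
        actions.append("apply all notes listed in note 1759156")
    return actions


print(dmis_prerequisites("2011", 2, "ECC"))
print(dmis_prerequisites("2010", 8, "SLT"))
```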

- SLT: Add entry to table IUUC_PRECALC_OBJ

- SLT: Add entry to table IUUC_PERF_OPTION

• Transparent Table: Type 5 -> “INDX CLUSTER with FULL TABLE SCAN”

- HANA: Select table for REPLICATION in Data Provisioning

- ECC: Review Transaction SM37

- SLT: Review Transaction SM37

- Job ACC_PLAN_CALC_001_01 is running (starting with DMIS 2011 SP5, the job name is /1LT/MWB_CALCCACP_<mass transfer ID>).

- SLT: Standard MWBMON processes

- ECC: Check the system log (SM21), ABAP short dumps (ST22), and the global work process overview (SM66).

- ECC: Review Transaction SM37

- Review the job log of MWBACPLCALC_Z_<table_name>_<mt_id> to monitor the record count and progress.
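When many jobs are running, it can help to filter an exported SM37 job list by the job names mentioned above. The following is a minimal sketch using Python's fnmatch module; the table name KONV and mass transfer ID 001 are hypothetical example values. The text only states the name change for DMIS 2011 SP5, so treating every DMIS 2010 SP as still using the older ACC* names is an assumption.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List


def sm37_patterns(dmis_release: str, dmis_sp: int, table: str, mt_id: str) -> Dict[str, List[str]]:
    """Job name patterns to watch in SM37 on each system during the load."""
    if dmis_release == "2011" and dmis_sp >= 5:
        slt_calc = f"/1LT/MWB_CALCCACP_{mt_id}"
    else:
        slt_calc = "ACC*"  # assumption: older naming for all DMIS 2010 SPs
    return {
        "SLT": [slt_calc],
        "ECC": [f"DMC_DL_{table}", f"MWBACPLCALC_Z_{table}_{mt_id}"],
    }


def matching_jobs(job_names: Iterable[str], patterns: List[str]) -> List[str]:
    """Keep job names matching any pattern (case-sensitive, shell-style wildcards)."""
    return [job for job in job_names if any(fnmatchcase(job, p) for p in patterns)]


patterns = sm37_patterns("2011", 5, "KONV", "001")
ecc_jobs = ["DMC_DL_KONV", "MWBACPLCALC_Z_KONV_001", "SAP_REORG_JOBS"]
print(matching_jobs(ecc_jobs, patterns["ECC"]))  # ['DMC_DL_KONV', 'MWBACPLCALC_Z_KONV_001']
```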

Process Summary Checklist

- SLT: Created entry in IUUC_PRECALC_OBJ

- SLT: Created entry in IUUC_PERF_OPTION

- SLT: SLT batch jobs are running.

- HANA: Table submitted from HANA Data Provisioning

- ECC: Job DMC_DL_<table_name> is running

- SLT: ACC* batch job is running

- ECC: Job DMC* started/finished and job MWBACPLCALC_Z_<table_name>_<mt_id> started

- SLT: All ACC* batch jobs completed

- ECC: All MWB* batch jobs completed

- SLT: Table loading (MWBMON)

- HANA: Table loaded and in replication
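Assuming the checklist is worked through in the order listed, progress can be tracked as a sequence in which the first unfinished item is the one to investigate. The following is a minimal Python sketch; the step texts are copied from the list above, and the helper name first_open_step is hypothetical.

```python
from typing import Optional, Set, Tuple

# (system, step) in the order given in the checklist above.
CHECKLIST = [
    ("SLT", "Created entry in IUUC_PRECALC_OBJ"),
    ("SLT", "Created entry in IUUC_PERF_OPTION"),
    ("SLT", "SLT batch jobs are running"),
    ("HANA", "Table submitted from HANA Data Provisioning"),
    ("ECC", "Job DMC_DL_<table_name> is running"),
    ("SLT", "ACC* batch job is running"),
    ("ECC", "Job DMC* started/finished and job MWBACPLCALC_Z_<table_name>_<mt_id> started"),
    ("SLT", "All ACC* batch jobs completed"),
    ("ECC", "All MWB* batch jobs completed"),
    ("SLT", "Table loading (MWBMON)"),
    ("HANA", "Table loaded and in replication"),
]


def first_open_step(done: Set[int]) -> Optional[Tuple[str, str]]:
    """Return the earliest step whose index is not in `done`, or None if all are done."""
    for index, step in enumerate(CHECKLIST):
        if index not in done:
            return step
    return None


print(first_open_step({0, 1, 2, 3}))  # ('ECC', 'Job DMC_DL_<table_name> is running')
```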

Post Load Considerations

After the load has completed, it is good practice to clean up DMC_INDXCL on the ECC system and remove the table data. Starting with DMIS 2011 SP4 or DMIS 2010 SP9, this is done automatically; with older SPs, you should do it manually.

On the source system:

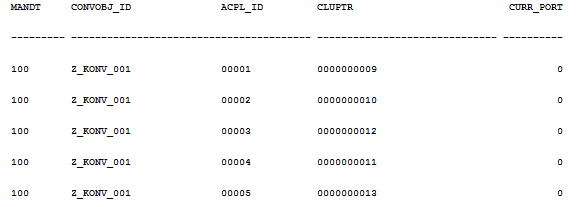

Review your data in the DMC cluster table:

select * from <schema>.dmc_cluptr where convobj_id = 'Z_KONV_001' order by 1, 2, 3

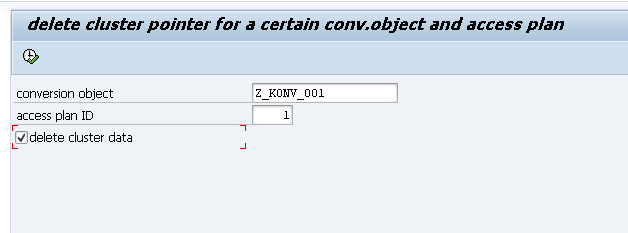

Run report DMC_DELETE_CLUSTER_POINTER in transaction SE38:

- The conversion object is the value listed in field IDENT of table DMC_COBJ on the SLT server.

- The access plan ID is returned by the select statement above. Run the report for each access plan ID, 00001 through 00005.

- Select 'delete cluster data'.

- The report produces no output. If it does not fail, the deletion worked.
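The cleanup decision and the report runs can be sketched as follows. This is a hypothetical helper, not an SAP tool: it encodes the SP thresholds from this section and produces one DMC_DELETE_CLUSTER_POINTER run per access plan ID, using the conversion object Z_KONV_001 and the five access plans from the example above.

```python
from typing import List, Tuple

# First SP per DMIS release that cleans up DMC_INDXCL automatically.
AUTO_CLEANUP_FROM_SP = {"2010": 9, "2011": 4}


def manual_cleanup_needed(dmis_release: str, dmis_sp: int) -> bool:
    """True if DMC_INDXCL must be cleaned up manually after the load."""
    if dmis_release not in AUTO_CLEANUP_FROM_SP:
        raise ValueError(f"unknown DMIS release {dmis_release!r}")
    return dmis_sp < AUTO_CLEANUP_FROM_SP[dmis_release]


def cleanup_runs(convobj_id: str, plan_count: int) -> List[Tuple[str, str]]:
    """One (conversion object, access plan ID) pair per report run: 00001, 00002, ..."""
    return [(convobj_id, f"{i:05d}") for i in range(1, plan_count + 1)]


if manual_cleanup_needed("2011", 3):
    for convobj, plan_id in cleanup_runs("Z_KONV_001", 5):
        print(f"SE38 -> DMC_DELETE_CLUSTER_POINTER: {convobj}, access plan {plan_id}")
```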

This is a technical expert guide provided by development. Please feel free to share your feelings and feedback.

Best,

Tobias
