How to load data from archives into HANA DB


Hi,

The load from archives is available as of DMIS 2010 SP7 or DMIS 2011 SP2.

A prerequisite for an archive load is that the tables whose data should be transferred from the archive into HANA have already been added to the replication. This is therefore the very first step.

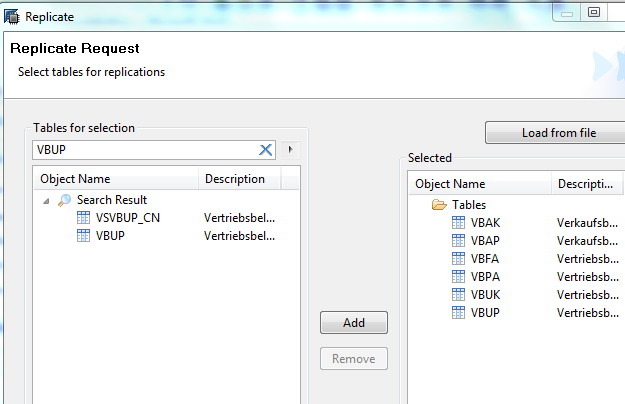

The archive object SD_VBAK used in this example involves a large number of tables. To keep things simple, only a small subset of them is selected. As usual, this is done in the data provisioning UI provided by the HANA studio:

Only once those tables are included in the normal initial load and replication processing is it possible to use report IUUC_CREATE_ARCHIVE_OBJECT to define a migration object that loads data from the archive.

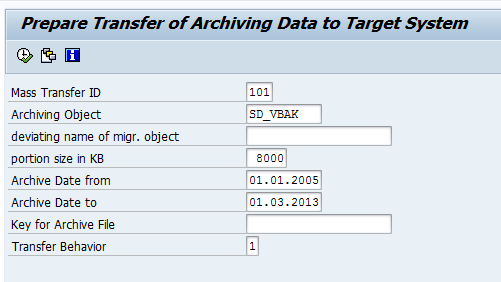

Usage of report IUUC_CREATE_ARCHIVE_OBJECT is explained in its online documentation. In our example, the parameters would be supplied as shown below:

The mass transfer ID to be specified here is the one assigned to the respective HANA replication configuration. It is shown in the WebDynpro started with transaction LTR.

Transfer behavior 1 means that the data is transferred to HANA. If the data should be deleted instead, set the transfer behavior to 8.

Pressing the “execute” button for this report displays a selection screen listing all tables that are both being replicated and part of the archive object. You can select some or all of these tables to be loaded from the archive:

After choosing the relevant tables, press ENTER to create the corresponding migration object and generate its runtime objects. The new object then appears in the “data transfer monitor” tab of transaction LTRC. Unless specified otherwise, its name is exactly the name of the archive object.

Currently, the step “Calculate Access Plan” still has to be started manually from the “Steps” tab of transaction MWBMON. Only the two highlighted fields need to be filled in manually:

After that, the data from the archive is transferred automatically.

Necessary authorizations

Please note that the RFC user assigned to the RFC destination pointing from the SLT system to the sender system requires an additional authorization, which is currently not part of role SAP_IUUC_REPL_REMOTE. The authorization check is:

    AUTHORITY-CHECK OBJECT 'S_ARCHIVE'
      ID 'ACTVT'    FIELD '03'
      ID 'APPLIC'   FIELD l_applic
      ID 'ARCH_OBJ' FIELD object.

This means the RFC user needs an authorization for object S_ARCHIVE with activity 03 (display), the respective archive object, and the application to which the archive object belongs.
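To verify up front whether the RFC user already holds this authorization, a small test report in the sender system can repeat the same check. The following is only a minimal sketch and not part of DMIS: the report name, the parameter names, and the field lengths (2 characters for the application, 10 for the archive object) are assumptions made for illustration.

```abap
REPORT z_check_archive_auth.

* Hypothetical helper report (not delivered with DMIS).
* Repeats the S_ARCHIVE check from above for a given user so that a
* missing authorization is noticed before the archive load is started.
PARAMETERS: p_user   TYPE sy-uname OBLIGATORY,            " RFC user to check
            p_applic TYPE c LENGTH 2 OBLIGATORY,          " application (assumed length)
            p_object TYPE c LENGTH 10 OBLIGATORY
                     DEFAULT 'SD_VBAK'.                   " archive object (assumed length)

AUTHORITY-CHECK OBJECT 'S_ARCHIVE' FOR USER p_user
  ID 'ACTVT'    FIELD '03'
  ID 'APPLIC'   FIELD p_applic
  ID 'ARCH_OBJ' FIELD p_object.

IF sy-subrc = 0.
  WRITE: / 'User', p_user, 'is authorized to read archive object', p_object.
ELSE.
  " Any non-zero return code means the check failed for this user
  WRITE: / 'Authorization missing for user', p_user, '- sy-subrc =', sy-subrc.
ENDIF.
```

Run the report in the sender system, since that is where the check is executed during the archive load. The `FOR USER` addition lets you test the RFC user while logged on with your own dialog user.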

Filter or mapping rules not available

Note that the automatic assignment of filter or mapping rules, which is done for other migration objects (initial load and replication) by means of table IUUC_ASS_RUL_MAP, does not work for the archive load objects. If any filtering and/or mapping is required for such objects, the corresponding rules need to be assigned to the migration object manually.

Relevant Notes

- Note 1880224 - Replication of archived data: TABLE_INVALID_INDEX https://service.sap.com/sap/support/notes/1864525

Hope this helps to understand the load from archives much better. Special thanks to Günter for these details.

Best,

Tobias
