The ABAP Detective And The Degraded Upgrade - PART...


What do the little grey cells say? They tell me this upgrade will be just another dusty manila-jacketed case file in a few weeks, just one more notch on the barrel of a never-ending line of patches, large and small, to one or the other of the house of punch cards that support the glass towers in the silos of industry. Back when ERP 6 was the bee's knees, all the shills said "you'll never patch in this town again." I'm planning to stick around long enough to prove them wrong.

In the last episode of The ABAP Detective (Part 1 of this saga), I penned the story from the viewpoint of the source code system, the development box, the thinker's statue. This time, days before the production downtime, I'll look at the volume testing and code base of the production copy. But before that, what's the mystery here? Do I even have a case, or am I dreaming?

Figure 1

The heavy hitters in a transaction system are the reports that run in parallel, like 18-wheelers jolting down a New York side street with a load of flowers, or the sanitation trucks that take away the same vegetation a few days later. Or they're the unnecessary buzz of the crowd outside a dance hall, magpies chattering about nothing. Or just the strident jolt of a stack of plates in a diner when the shifts change. In Figure 1, it's a SQL statement, seen in all its glory via ST04.

What does this show about an ECC upgrade? In my case book, it's the same line as before, a buffer that runneth over. Harmless by itself, but a portent of a tuning opportunity. I'd need to know how often it runs, how much it costs, and whether it's worth redemption.

Figure 2a

Figure 2b

Two rows down from the table buffer overrun (which ran 600 times and pulled in 25 million rows...) is a statement against CDHDR. So, yeah, the new system has the same guts as the old system: change documents, a header table that indexes into a cluster table, and lots and lots of data.
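To put a number on "how often it runs, how much it costs", here is plain arithmetic on the two figures quoted above (600 executions, 25 million rows). It is a minimal Python sketch, not an SAP tool, and the function name is made up for illustration.

```python
# Average rows fetched per execution for the buffer-overrun statement.
# Inputs are the totals quoted in the text: 600 executions, 25 million rows.

def rows_per_execution(total_rows: int, executions: int) -> float:
    """Average number of rows each execution pulls from the database."""
    if executions <= 0:
        raise ValueError("executions must be positive")
    return total_rows / executions

avg = rows_per_execution(25_000_000, 600)
print(f"{avg:,.0f} rows per execution")
```

Roughly forty thousand rows per trip is a lot to haul into a buffer that is already running over, which is why the statement earns a second look.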

```sql
SELECT * FROM "CDHDR" WHERE "MANDANT"=:A0 AND "OBJECTCLAS"=:A1 AND "OBJECTID"=:A2
  AND ("UDATE"=:A3 AND "UTIME">=:A4 OR "UDATE">:A5 )
  AND ("UDATE"=:A6 AND "UTIME"<=:A7 OR "UDATE"<:A8 )
```

So, good statement, or bad statement? It's got a few of the key fields, and a date/time range that would work best if the user or the code put the screws to the limits. If not, and the range is left wide open, maybe bad. We'd need to see the literals, not this bound version. What does the optimizer do?
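The two OR-groups in that WHERE clause are the classic way to express a window over a (date, time) pair. This minimal Python sketch assumes :A3 and :A5 carry the same "from" date and :A6 and :A8 the same "to" date; under that assumption the bound predicate is equivalent to a lexicographic comparison of (UDATE, UTIME) pairs, and a wide-open window filters nothing. Values are written as the 8- and 6-character strings SAP stores in DATS and TIMS fields; the function names and sample windows are hypothetical.

```python
# Semantics of the CDHDR date/time window predicate.
# Assumption: :A3 = :A5 = from_date and :A6 = :A8 = to_date.
# DATS 'YYYYMMDD' and TIMS 'HHMMSS' strings sort correctly as plain text.

def in_window_as_bound(udate, utime, from_date, from_time, to_date, to_time):
    """Literal translation of the bound WHERE clause (AND binds tighter than OR, as in SQL)."""
    lower_ok = (udate == from_date and utime >= from_time) or udate > from_date
    upper_ok = (udate == to_date and utime <= to_time) or udate < to_date
    return lower_ok and upper_ok

def in_window_as_tuple(udate, utime, from_date, from_time, to_date, to_time):
    """Equivalent form: compare (date, time) pairs lexicographically."""
    return (from_date, from_time) <= (udate, utime) <= (to_date, to_time)

# Hypothetical window: one evening's worth of changes.
window = ("20121219", "170000", "20121219", "235959")
print(in_window_as_tuple("20121219", "172949", *window))  # True
print(in_window_as_tuple("20121218", "235959", *window))  # False

# A wide-open window accepts everything, leaving only the key fields to filter.
wide_open = ("00000000", "000000", "99991231", "235959")
print(in_window_as_tuple("20050101", "120000", *wide_open))  # True
```

The tuple form makes the point of the paragraph visible: the date/time range only helps the access path when its limits are tight.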

```
SELECT STATEMENT ( Estimated Costs = 2 , Estimated #Rows = 0 )
  2 TABLE ACCESS BY INDEX ROWID CDHDR
      ( Estim. Costs = 1 , Estim. #Rows = 1 )
      Estim. CPU-Costs = 7,368 Estim. IO-Costs = 1
      Filter Predicates
    1 INDEX RANGE SCAN CDHDR~0
        ( Estim. Costs = 1 , Estim. #Rows = 1 )
        Search Columns: 3
        Estim. CPU-Costs = 5,777 Estim. IO-Costs = 1
        Access Predicates
```

Primary key, hoping for the best. There aren't a lot of suspects to choose from in this field.


Back-of-the-envelope math (I'd need a frequency analysis via DB05 for more dirt): almost 300 million records, the leading key fields limit the selection to about 1 percent of that, and with OBJECTID specified, maybe we only get a hundred or so rows to sift through. Maybe.
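The envelope math can be written down so its assumptions are visible. Starting from the figures in the text (about 300 million rows, about 1 percent left after the leading key fields), this Python sketch asks how many distinct OBJECTID values, spread evenly, it would take to get down to roughly a hundred rows. The uniform distribution and the hundred-row target are assumptions of the sketch, which is exactly what a DB05 frequency analysis would confirm or refute; the function names are hypothetical.

```python
# Back-of-the-envelope selectivity for the CDHDR lookup.
# Assumes key values are spread evenly; real data is usually skewed.

def rows_after_percent(total_rows: int, percent: float) -> float:
    """Rows left after a filter that keeps `percent` percent of the table."""
    return total_rows * percent / 100

def distinct_values_needed(candidate_rows: float, target_rows: float) -> float:
    """Distinct values a column needs (uniformly spread) to cut candidate_rows to target_rows."""
    return candidate_rows / target_rows

total_rows = 300_000_000                           # "almost 300 million records", rounded up
candidates = rows_after_percent(total_rows, 1)     # "about 1 percent" after the leading key fields
needed = distinct_values_needed(candidates, 100)   # assumed target: about a hundred rows

print(f"{candidates:,.0f} candidate rows")
print(f"OBJECTID would need about {needed:,.0f} distinct values in that slice")
```

If DB05 shows far fewer distinct OBJECTID values than that, or a few values holding most of the rows, the "maybe" in the estimate turns into a problem.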

Figure 3

Figure 4

Flipping ahead a few days, I turned to the system logs rather than the shared SQL cursor area. My notebook shows the trash cans and recycle bins overflowed, causing a bit of havoc as the stat files could not be updated. A minor transgression, but once again, the underlying data structures are pretty much the same as in the last several generations of this code.

Looking at the case from a different angle, the operator view of the system logs:

```
dev_w11
M Wed Dec 19 17:29:49 2012
M ***LOG R4F=> PfWriteIntoFile, PfWrStatFile failed ( 0028) [pfxxstat.c 5445]
M ***LOG R2A=> PfWriteIntoFile, PfWrStatFile failed (/usr/sap/???/DVEBMGS02/data/stat) [pfxxstat.c 5450]
M
M Wed Dec 19 17:33:59 2012
M ***LOG R4F=> PfWriteIntoFile, PfWrStatFile failed ( 0028) [pfxxstat.c 5445]
M ***LOG R2A=> PfWriteIntoFile, PfWrStatFile failed (/usr/sap/???/DVEBMGS02/data/stat) [pfxxstat.c 5450]
```
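Counting these failures in a developer trace is easy to script. This Python sketch assumes, without confirmation from the trace itself, that the number in `( 0028)` is the operating system errno; on common UNIX platforms errno 28 is ENOSPC ("No space left on device"), which would fit a full filesystem. The regular expression and function names are hypothetical, and the sample lines are copied from the trace excerpt above.

```python
import errno
import os
import re

# Matches e.g. "PfWrStatFile failed ( 0028)"; the path variant "(/usr/...)" does not match.
STAT_FAIL = re.compile(r"PfWrStatFile failed \(\s*(\d+)\)")

def count_stat_failures(lines):
    """Return {numeric code: occurrences} for PfWrStatFile failures in trace lines."""
    counts = {}
    for line in lines:
        match = STAT_FAIL.search(line)
        if match:
            code = int(match.group(1))
            counts[code] = counts.get(code, 0) + 1
    return counts

def describe_errno(code):
    """Name and message for `code` on the local OS, assuming it is an errno value."""
    return f"{code} {errno.errorcode.get(code, 'UNKNOWN')}: {os.strerror(code)}"

trace = [
    "M Wed Dec 19 17:29:49 2012",
    "M ***LOG R4F=> PfWriteIntoFile, PfWrStatFile failed ( 0028) [pfxxstat.c 5445]",
    "M ***LOG R2A=> PfWriteIntoFile, PfWrStatFile failed (/usr/sap/???/DVEBMGS02/data/stat) [pfxxstat.c 5450]",
    "M Wed Dec 19 17:33:59 2012",
    "M ***LOG R4F=> PfWriteIntoFile, PfWrStatFile failed ( 0028) [pfxxstat.c 5445]",
]

for code, n in count_stat_failures(trace).items():
    print(n, "x", describe_errno(code))
```

The errno lookup runs on the machine executing the script, so it only says something about the SAP host if both share the same errno numbering.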

Yup, stat file: nolo contendere. Here's another view, from the UNIX operating system level:

```
$ df -g /usr/sap/???/DVEBMGS02/data/stat
Filesystem          GB blocks   Free  %Used   Iused  %Iused  Mounted on
/dev/???sap???01lv       2.00   0.00   100%   26719     99%  /usr/sap/???
```
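The df output shows two separate limits, blocks (100%) and inodes (99%), and either one can stop a write. Below is a minimal Python sketch of a watchdog that checks both through `os.statvfs` (UNIX only). The threshold, function names and the path checked are assumptions, and it is no substitute for the monitoring the landscape already runs.

```python
import os

def fill_levels(path):
    """Return (percent of blocks used, percent of inodes used) for the filesystem holding path.

    Uses os.statvfs, so UNIX only. The block figure is measured against space available
    to ordinary users, roughly the way GNU df computes %Used.
    """
    st = os.statvfs(path)
    used_blocks = st.f_blocks - st.f_bfree
    usable_blocks = used_blocks + st.f_bavail
    blocks_pct = 100.0 * used_blocks / usable_blocks if usable_blocks else 0.0
    inodes_pct = 100.0 * (st.f_files - st.f_ffree) / st.f_files if st.f_files else 0.0
    return blocks_pct, inodes_pct

def fill_alerts(path, threshold=90.0):
    """Warnings for every limit (blocks or inodes) at or above threshold percent."""
    blocks_pct, inodes_pct = fill_levels(path)
    warnings = []
    if blocks_pct >= threshold:
        warnings.append(f"{path}: {blocks_pct:.0f}% of blocks used")
    if inodes_pct >= threshold:
        warnings.append(f"{path}: {inodes_pct:.0f}% of inodes used")
    return warnings

# Hypothetical target; on the box in question this would be the stat file's directory.
print(fill_alerts("/") or "no alerts")
```

Checking inodes as well as blocks matters here: a filesystem can refuse new files with free space left if its inodes are exhausted.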

Figure 5

Figure 5 shows more system log noise, with an enqueue_read (sorry, enque_read) that failed with a bad user id. SCN shows 4 hits for this error, with no clear resolution (at least to this ABAP Detective). Hope it gets better soon.

http://search.sap.com/ui/scn#query=enque_read+error+password&startindex=1&filter=scm_a_site%28scm_v_...

Figure 6

Back to some basic database/application server interoperability checks: table buffers. Drab stuff, but users like fast buffers. Oh, that's right, keep the data tables in memory. Sound familiar? Where did I hear that before?

This view is sorted by invalidations; usual suspects at the top. The rogues to be fixed are hiding a little farther down.

Figure 7

Generic key buffer - my favorite. Okay, the quality system isn't as big as production. We'd want more than 30 MB available for common data on each application server.

Figure 8

A cross-town taxi ride to SM66, Global Work Process Overview, shows the busy traffic you'd expect in an enterprise system. Mostly direct reads (meaning waiting for the database to respond), and interactive sessions doing communications. Not bad, but those 10,000-second reports need pruning eventually.

Figure 9

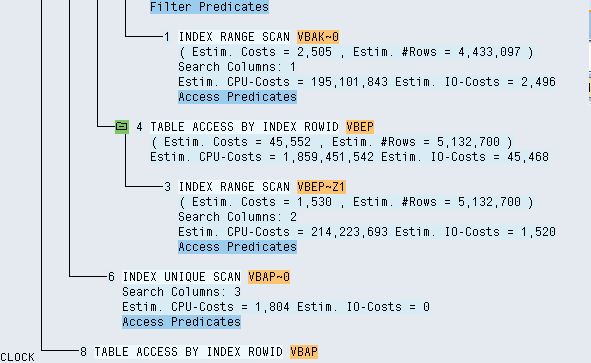

Flipping back to ST04, database cache SQL tuning, I've opened a background check on the VBEP table. What is it doing for 18K seconds in a Z program?

Figure 10

One execution, a million disk reads, 45 rows returned. Great. A lot of noise from such a pretty little gun.
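The ratio of disk reads to rows delivered is a quick way to size up how much noise that little gun makes. A minimal Python sketch using the figures in the text; the function name is hypothetical, and "a million" is taken at face value.

```python
def reads_per_row(disk_reads: int, rows_returned: int) -> float:
    """Disk reads spent per row delivered; a high ratio often points at a poor access path."""
    if rows_returned == 0:
        return float("inf")   # all work, no result
    return disk_reads / rows_returned

ratio = reads_per_row(1_000_000, 45)   # "one execution, a million disk reads, 45 rows"
print(f"about {ratio:,.0f} disk reads per returned row")
```

Over twenty thousand physical reads for every row that comes back suggests the database is scanning far more of the join than the result needs.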

Figure 11

Urm, why did VBAK show up here?

Ah, there's VBEP. Along with VBAP and VBAK. A three-way join of major tables, a few million rows in each. It's Z code. You know.
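To picture what that statement asks of the database, here is a small in-memory hash join in Python over the key fields that connect the three tables: VBAK (sales document header) to VBAP (item) on VBELN, and VBAP to VBEP (schedule line) on VBELN plus POSNR. MANDT is left out for brevity, the sample rows are invented, and this illustrates the shape of the join, not the Z program's actual code.

```python
from collections import defaultdict

def join_sales(vbak, vbap, vbep):
    """Inner join VBAK -> VBAP -> VBEP; one output row per matching schedule line."""
    headers = {h["VBELN"]: h for h in vbak}          # hash the header table on its key
    schedules = defaultdict(list)
    for s in vbep:                                    # group schedule lines by item key
        schedules[(s["VBELN"], s["POSNR"])].append(s)
    rows = []
    for item in vbap:                                 # probe with each item
        header = headers.get(item["VBELN"])
        if header is None:
            continue                                  # no header: the inner join drops the item
        for sched in schedules.get((item["VBELN"], item["POSNR"]), []):
            rows.append({**header, **item, **sched})  # shared key fields carry equal values
    return rows

# Invented sample data: one order, two items, three schedule lines.
vbak = [{"VBELN": "0000012345", "AUART": "OR"}]
vbap = [{"VBELN": "0000012345", "POSNR": "000010", "MATNR": "M-01"},
        {"VBELN": "0000012345", "POSNR": "000020", "MATNR": "M-02"}]
vbep = [{"VBELN": "0000012345", "POSNR": "000010", "ETENR": "0001", "EDATU": "20121220"},
        {"VBELN": "0000012345", "POSNR": "000010", "ETENR": "0002", "EDATU": "20130105"},
        {"VBELN": "0000012345", "POSNR": "000020", "ETENR": "0001", "EDATU": "20121221"}]

for row in join_sales(vbak, vbap, vbep):
    print(row["VBELN"], row["POSNR"], row["ETENR"], row["MATNR"], row["EDATU"])
```

Every output row is one schedule line, so unless the WHERE clause is selective, the result set grows with VBEP itself, a few million rows in this case.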

Figure 12

Last shot, then we'll put this news rag to bed. Z program, joining VBEP, VBAK and VBAP, just like it says. Into an internal table - classic ABAP styling. All it needs is double-breasted brass buttons and a feather in the hat band.

There's a database hint embedded - not for the faint of heart. Whether the hint still makes sense in the current database version is left as an exercise for the student. That's you. What do you think?

PART 1 - The ABAP Detective And The Degraded Upgrade - PART 1

PART 3 - The ABAP Detective And The Degraded Upgrade - PART 3
