SEM-BCS - Refresh your test system with productive...


Introduction

The initial need was to refresh the SEM-BCS (Business Consolidation System) Infoproviders in the Quality system automatically and periodically with Production data. The purpose was to enable business users to run their test scenarios in Quality with up-to-date data records.

At that time, two solutions were discussed:

- Perform a system copy

- Use a datamart interface

The first solution is rather heavy: it generally implies copying both the data and the customizing (which might have side effects) and requires assistance from an administrator. The second solution is much more flexible because it allows copying only the data (with filters on characteristic values, if needed) and can easily be executed by a business user.

How does it work?

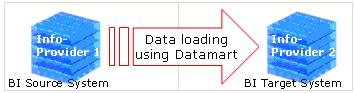

A datamart interface makes it possible to load data from an Infoprovider in a BI source system (MBP, Production) into another Infoprovider in a BI target system (MBQ, Quality).

Steps to follow to create a datamart interface:

In the source system (MBP)

- Generate the export datasource (its name is "8" followed by the name of the Infoprovider)

In the target system (MBQ)

- Assign the MBP source system to MBQ

- Replicate the export datasource

- Create an Infopackage to load the PSA of the replicated export datasource

- Create a transformation/DTP to load the target infoprovider

SEM-BCS data model

The SEM-BCS component requires several data targets:

- A Real Time Infocube used to collect/store the totals records

- A Virtual Infocube to retrieve the totals records with the consolidation logic

- Direct update DSOs to collect/store the documents and additional data

- Virtual DSOs to retrieve the documents and additional data with the consolidation logic

- A Reporting Infocube and a Multicube (both optional) to archive the historical data and improve reporting performance

List of the data targets involved in our SEM-BCS system:

Regarding the datamart we want to implement here, SEM-BCS has two specific features that need to be taken into consideration:

- The BCS Real Time Infocube differs from a Basic Infocube: it lets the user collect small quantities of data on the fly, simply by executing tasks in the consolidation monitor. It can also be loaded like a Basic Infocube, with a DTP. This Infocube can therefore work in two modes: plan mode (data collection on the fly) or load mode (DTP execution). In our scenario, it has to be switched to load mode before it can be updated through the datamart interface.

- Direct update DSOs differ from standard DSOs in how data is processed: a standard DSO stores data in different versions (active, delta, modified), whereas a DSO for direct update contains data in a single version (active data only). A DSO for direct update cannot be the target of a loading process (this is not supported by the BI system); in other words, it is not possible to execute a transformation between the export datasource and the DSO. However, a DSO for direct update can be used to fill other Infoproviders. That is why, in our datamart scenario, a "source" BCS DSO can only be used to load a PSA in the target system. Then, to update the "target" DSO, a specific program has to be executed (ZBCS_DM_DSO_UPDATE_MBQ). This program reads the PSA and writes the data directly into the active data table of the target DSO.

Solution architecture

Here is the picture of the target solution:

Details about the code

The program that loads the data from a PSA into a target DSO can be built as follows:

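```abap
REPORT ZBCS_DM_DSO_UPDATE_MBQ.

* Copies the records of the PSA table of the replicated export
* datasource into the active data table of the direct-update DSO.
DATA: S_PSA_1E TYPE /BIC/B0000150000,                   " PSA record
      S_DSO_1E TYPE /BIC/AMA_BCO1E00,                   " DSO active-table record
      I_PSA_1E TYPE STANDARD TABLE OF /BIC/B0000150000,
      I_DSO_1E TYPE STANDARD TABLE OF /BIC/AMA_BCO1E00.

START-OF-SELECTION.
  " Read the complete PSA content (the loads run in Full mode)
  SELECT * FROM /BIC/B0000150000 INTO TABLE I_PSA_1E.

  IF SY-SUBRC = 0.
    " Copy fields with identical names from the PSA to the DSO structure
    LOOP AT I_PSA_1E INTO S_PSA_1E.
      CLEAR S_DSO_1E.
      MOVE-CORRESPONDING S_PSA_1E TO S_DSO_1E.
      APPEND S_DSO_1E TO I_DSO_1E.
    ENDLOOP.

    " Insert new keys / overwrite existing keys in the active data table
    MODIFY /BIC/AMA_BCO1E00 FROM TABLE I_DSO_1E.
    COMMIT WORK.
  ELSE.
    " Optional: raise an error when the PSA is empty
*   MESSAGE E001(ZBCS_1).
  ENDIF.
```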
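When more than one DSO has to be refreshed, writing one such program per PSA/DSO pair quickly becomes repetitive. The following ABAP sketch shows one possible generic variant, based on our own assumptions rather than on the program described above: the report name ZBCS_DM_DSO_UPDATE_GEN and the parameters P_PSA and P_DSO are hypothetical, and the PSA table name (such as /BIC/B0000150000) and the DSO active table name (such as /BIC/AMA_BCO1E00) still have to be looked up in the target system. The internal tables are typed at runtime, and the same MOVE-CORRESPONDING mapping is used.

```abap
REPORT zbcs_dm_dso_update_gen.

* Hypothetical generic variant of the PSA-to-DSO copy program.
* P_PSA: PSA table of the replicated export datasource
* P_DSO: active data table of the target direct-update DSO
PARAMETERS: p_psa TYPE tabname OBLIGATORY,
            p_dso TYPE tabname OBLIGATORY.

DATA: lr_psa_tab TYPE REF TO data,
      lr_dso_tab TYPE REF TO data,
      lr_dso_rec TYPE REF TO data.

FIELD-SYMBOLS: <lt_psa> TYPE STANDARD TABLE,
               <lt_dso> TYPE STANDARD TABLE,
               <ls_psa> TYPE any,
               <ls_dso> TYPE any.

START-OF-SELECTION.
  " Create internal tables and a work area typed after the DDIC tables
  CREATE DATA lr_psa_tab TYPE STANDARD TABLE OF (p_psa).
  CREATE DATA lr_dso_tab TYPE STANDARD TABLE OF (p_dso).
  CREATE DATA lr_dso_rec TYPE (p_dso).
  ASSIGN lr_psa_tab->* TO <lt_psa>.
  ASSIGN lr_dso_tab->* TO <lt_dso>.
  ASSIGN lr_dso_rec->* TO <ls_dso>.

  " Read the complete PSA content (dynamic table name)
  SELECT * FROM (p_psa) INTO TABLE <lt_psa>.
  IF sy-subrc <> 0.
    MESSAGE 'No records found in the PSA table' TYPE 'I'.
    RETURN.
  ENDIF.

  " Copy fields with identical names from the PSA to the DSO structure
  LOOP AT <lt_psa> ASSIGNING <ls_psa>.
    CLEAR <ls_dso>.
    MOVE-CORRESPONDING <ls_psa> TO <ls_dso>.
    APPEND <ls_dso> TO <lt_dso>.
  ENDLOOP.

  " Insert new keys / overwrite existing keys in the active data table
  MODIFY (p_dso) FROM TABLE <lt_dso>.
  COMMIT WORK.
```

Such a report could be added once per DSO to the local process chain, each time with a different program variant. Because the table names are entered freely, a real implementation should at least validate them and protect the report with an authorization check; the sketch leaves this out.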

Loading process sequence

The loading process follows a logical and chronological sequence, implemented as two local process chains: one for the DSOs and one for the Totals Infocube. The user only has to execute a Metachain that includes these two local process chains. This Metachain is executed in the target system (MBQ).

DSOs loading process sequence:

- Deletion of PSAs content

- Deletion of DSOs content

- Execution of the Infopackages (PSA loading)

- Execution of the ZBCS_DM_DSO_UPDATE_MBQ program

Infocube loading process sequence:

- Deletion of PSA content

- Switch the Infocube to "load mode"

- Deletion of Infocube content

- Execution of the Infopackage (PSA loading)

- Execution of the transformation (DTP)

- Switch the Infocube to "plan mode"
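In MBQ the Metachain is normally started from the process chain maintenance (transaction RSPC). If business users should trigger the refresh without going through RSPC, the chain could also be started from a small ABAP report that calls the function module RSPC_API_CHAIN_START, as sketched below. The report name ZBCS_DM_START_METACHAIN and the chain name ZBCS_DM_META are hypothetical; to our understanding, the start process of a chain launched this way has to be set to "Start Using Meta Chain or API".

```abap
REPORT zbcs_dm_start_metachain.

* Hypothetical technical name of the Metachain that contains the
* two local process chains (DSOs and Totals Infocube)
PARAMETERS p_chain TYPE rspc_chain DEFAULT 'ZBCS_DM_META'.

DATA: lv_logid TYPE rspc_logid,
      lv_text  TYPE string.

START-OF-SELECTION.
  " Start the Metachain through the process chain API
  CALL FUNCTION 'RSPC_API_CHAIN_START'
    EXPORTING
      i_chain = p_chain
    IMPORTING
      e_logid = lv_logid
    EXCEPTIONS
      OTHERS  = 1.

  IF sy-subrc <> 0.
    MESSAGE 'The Metachain could not be started' TYPE 'E'.
  ELSE.
    " The log ID identifies this run in the process chain log (RSPC)
    CONCATENATE 'Metachain started, log ID' lv_logid
      INTO lv_text SEPARATED BY space.
    MESSAGE lv_text TYPE 'S'.
  ENDIF.
```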

Additional remarks

Please pay attention to the following additional remarks:

- The solution explained in this document only copies the data records: detailed logs, such as the task status, are not updated in the target system

- Don’t forget to also switch the source Real Time Infocube (in MBP) to load mode if you want to copy all of the latest productive data, including the records still contained in the open request

- For data consistency reasons and to avoid any risk of error, the loading process is executed in Full mode

- If you need to copy only a part of the source data records, it is possible to set filters in the infopackages
