Not all benchmarks are equal

We have been on our current enterprise hardware platform for about 2 years, so my current goal is to chart any necessary midcourse corrections as applications go live, are upgraded, or otherwise outgrow their original home. Within the next year we'll be reviewing the entire data center as major leases expire. The challenge is to hit a moving target as vendors release new gear and promise the next version will be even better, while minimizing the variations that cause side effects such as too many spare parts, extra administrator adjustments, and inconsistent end-user service levels.

My job is to determine what effect changing hardware will have on the company's bottom line; in other words, will users notice a difference in their SAP experience? Assume our service level is a 1-second response time, which we achieve with DB = 400 ms, CPU = 400 ms and misc = 200 ms. If we doubled our CPU throughput (not clock speed), a 100% improvement, we might cut CPU time to 200 ms, but response time would only drop from 1,000 ms to 800 ms, a 20% improvement. Smaller gains in CPU throughput have correspondingly less impact on the user experience.
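The arithmetic above is an instance of Amdahl's law: speeding up one component only shrinks that component's share of the total. Here is a minimal Python sketch, assuming response time is simply the sum of independent DB, CPU and misc components (real SAP response times overlap and queue, so treat this as an approximation). The function name `response_time_ms` is hypothetical.

```python
def response_time_ms(components_ms, speedups):
    """Return total response time after applying per-component speedups.

    components_ms: component name -> current time in ms (e.g. DB, CPU, misc)
    speedups: component name -> throughput factor (2.0 = twice as fast);
              components not listed keep their current time.
    """
    return sum(t / speedups.get(name, 1.0) for name, t in components_ms.items())


# The service-level breakdown from the text: DB, CPU and misc time in ms.
baseline = {"DB": 400.0, "CPU": 400.0, "misc": 200.0}
before = response_time_ms(baseline, {})
after = response_time_ms(baseline, {"CPU": 2.0})  # double CPU throughput only
improvement = (before - after) / before * 100
print(f"{before:.0f} ms -> {after:.0f} ms ({improvement:.0f}% better)")
# prints "1000 ms -> 800 ms (20% better)"
```

Under the same assumptions, even an infinitely fast CPU would leave 600 ms of DB and misc time, so the best possible improvement from CPU alone is 40%.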

More than two years ago, when we were looking to replace our DEC Alpha systems, I ran a number of benchmark tests, ranging from basic UNIX shell, C and Perl programs to database metrics. I also reviewed the published literature and contacted vendors for their SAP benchmark (SAPS) results.

Before that, I had compared each Alpha system we bought over 6 years or so, which left us with a mix of CPU steppings, clock speeds, backplanes and memory capacities. Along the way I found we had deployed an ABAP program that implemented a delay by counting to a very high number. In retrospect this was poor programming practice, since the code consumed CPU the whole time it ran, but it turned out to be a useful benchmark because it exercises the ABAP stack, not just the CPU and UNIX kernel. I've found that each successive CPU generation counts just a bit faster than the one before.
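The counting-loop idea can be reproduced outside ABAP. This is a hypothetical Python analogue, not the original ABAP program: it times a plain counting loop, so it exercises the Python interpreter the way the ABAP loop exercised the ABAP stack. The names `count_to` and `time_count`, and the loop size, are illustrative assumptions.

```python
import time


def count_to(n):
    """Busy-wait by counting from 0 to n (burns CPU for the whole run)."""
    i = 0
    while i < n:
        i += 1
    return i


def time_count(n, repeats=3):
    """Return the best wall-clock time in seconds over several runs of count_to(n).

    Taking the minimum filters out interference from other work on the machine.
    """
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        count_to(n)
        best = min(best, time.perf_counter() - start)
    return best


if __name__ == "__main__":
    n = 5_000_000
    secs = time_count(n)
    print(f"counted to {n:,} in {secs:.3f} s ({n / secs / 1e6:.1f} M iterations/s)")
```

Comparing the iterations-per-second figure across machines gives the same kind of generation-to-generation comparison described above, but only for the runtime actually being timed.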

Another test I used, when I could set up a database but not the whole SAP Basis layer, was to export the BW tables used in some of our long-running queries and then rerun the SQL that SAP had generated for them.
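Replaying captured SQL against exported tables needs little more than a timing harness. This sketch assumes Python's built-in `sqlite3` module as a stand-in for the real database; the table `fact_sales` and its query are hypothetical placeholders for the exported BW tables and the SQL SAP generated. The harness uses only Python DB-API (PEP 249) cursor methods, so another database driver could in principle be swapped in.

```python
import sqlite3
import time


def replay(conn, statements, repeats=3):
    """Run each captured SQL statement `repeats` times; return the best time per statement.

    Uses only DB-API 2.0 cursor methods: cursor(), execute(), fetchall().
    """
    results = {}
    cur = conn.cursor()
    for sql in statements:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            cur.execute(sql)
            cur.fetchall()  # fetch every row so the full query cost is measured
            best = min(best, time.perf_counter() - start)
        results[sql] = best
    return results


if __name__ == "__main__":
    # Hypothetical stand-in for an exported BW table, held in memory.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE fact_sales (region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?)",
        [(f"R{i % 10}", float(i)) for i in range(100_000)],
    )
    # Hypothetical placeholder for SQL captured from SAP.
    captured = ["SELECT region, SUM(amount) FROM fact_sales GROUP BY region"]
    for sql, secs in replay(conn, captured).items():
        print(f"{secs * 1000:.1f} ms  {sql}")
```

Because the best of several runs is reported, the result reflects a warm cache; time the first run separately if cold-cache behavior matters.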

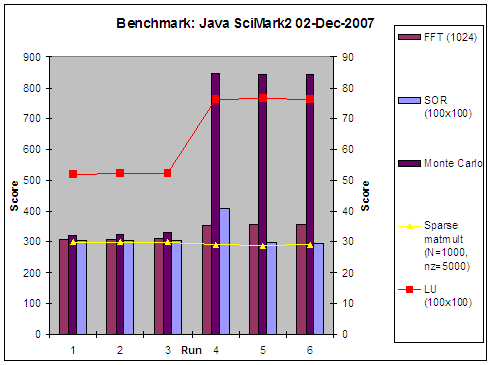

This time, in addition to basic OS-level tests, I decided to look at Java performance. After a brief literature review (read: I Googled it), I found a few candidate benchmark programs and suites. I ran them on test UNIX workstations to produce baseline results for comparison with the big iron. We have 2 technical support windows scheduled in December, so I was able to work on production systems without interfering with anyone else. I'll use the preliminary results from this past Sunday to decide what to test next Sunday.

One set of interesting results came from trying 2 different Java implementations on the same system. We've used Java 1.4.2 since we moved to this hardware, but only for a few incidental applications, not a full-scale SAP Java stack. We'll likely depend on Java more over time, so we need to know where the weak spots are (besides memory dumps). I ran the same tests on our stock 1.4.2 and on a recent Java 1.5 SDK I found, expecting slight improvements on some tests.

Java 1.4.2 vs 1.5

The results surprised me: most tests were about the same, but some were dramatically better. Only one degraded, and by less than 4%.
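Comparing two runtimes test by test comes down to a percent change per benchmark plus a noise threshold. This is a minimal sketch, assuming scores where higher is better (SciMark 2.0, for example, reports approximate Mflops); the 5% tolerance used to call a result "about the same" is an arbitrary assumption, and the function names are hypothetical.

```python
def compare(baseline, candidate, higher_is_better=True):
    """Percent change per test from baseline to candidate; positive means better.

    Both dicts map test name -> score. Pass higher_is_better=False for
    metrics such as elapsed time, where a smaller number is an improvement.
    """
    changes = {}
    for test, old in baseline.items():
        if test not in candidate:
            continue  # skip tests that were not run on both JVMs
        pct = (candidate[test] - old) / old * 100
        changes[test] = pct if higher_is_better else -pct
    return changes


def summarize(changes, tolerance_pct=5.0):
    """Split tests into (about the same, better, worse) using a noise tolerance."""
    same = [t for t, p in changes.items() if abs(p) <= tolerance_pct]
    better = [t for t, p in changes.items() if p > tolerance_pct]
    worse = [t for t, p in changes.items() if p < -tolerance_pct]
    return same, better, worse
```

With a 5% tolerance, a drop of under 4% lands in the "about the same" bucket; lower the tolerance if every regression should be flagged.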

STREAM - CPU / memory tests

I also ran the STREAM benchmark, which measures sustainable memory bandwidth, i.e. how fast the CPU can move data to and from memory. Since I have a catalog of prior results, I expected to see continued incremental improvements. If you google for "STREAM benchmark" you're likely to find some hot (read: flaming) blogs. I found that changes in compiler techniques allow much higher results. I won't go into the flags, libraries, etc. that I used, but the next chart shows my results on one hardware model with different executables.
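STREAM reports a rate by dividing the bytes each kernel moves by the time it takes, counting 1 MB as 10^6 bytes. The sketch below shows that accounting for the Triad kernel (a[i] = b[i] + scalar * c[i]) in Python. It illustrates how the figure is computed and is not a substitute for the benchmark: a pure-Python loop is dominated by interpreter overhead, so its MB/s number says little about memory bandwidth. The real STREAM is compiled C or Fortran, which is exactly why compiler options change its results so much. The helper names are hypothetical.

```python
import time
from array import array

# Bytes moved per loop iteration for 8-byte doubles, as STREAM counts them:
# Copy and Scale read one array and write one; Add and Triad read two and write one.
BYTES_PER_ITER = {"copy": 16, "scale": 16, "add": 24, "triad": 24}


def triad(a, b, c, scalar):
    """STREAM Triad kernel: a[i] = b[i] + scalar * c[i]."""
    for i in range(len(a)):
        a[i] = b[i] + scalar * c[i]


def rate_mb_s(kernel, n, seconds):
    """STREAM-style rate in MB/s (1 MB = 1e6 bytes) for n loop iterations."""
    return BYTES_PER_ITER[kernel] * n / seconds / 1e6


if __name__ == "__main__":
    n = 1_000_000
    a = array("d", [0.0]) * n
    b = array("d", [1.0]) * n
    c = array("d", [2.0]) * n
    start = time.perf_counter()
    triad(a, b, c, 3.0)
    elapsed = time.perf_counter() - start
    # Interpreter-bound: useful for the accounting, not as a bandwidth figure.
    print(f"triad: {rate_mb_s('triad', n, elapsed):.1f} MB/s")
```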

The first 3 runs used classic C compiler optimization ("-O"), while the others added further options. I don't expect this to help our SAP performance directly, since I doubt the SAP kernel uses the libraries I needed (indeed, they aren't on our production systems), but I'm working with our non-SAP application teams to see whether it benefits their code.

Conclusions?

The preparation for benchmarking is 90% of the work.

Running the tests takes 10% of the time.

Documenting the results is the other 90%.

Then analyzing the results takes the final 90%.

Management conclusions and decisions should take under 1 minute if the analysis is clear.

References

- Java SciMark 2.0 - http://math.nist.gov/scimark2/

- STREAM - http://www.cs.virginia.edu/stream/

- SAPS certifications - http://www.sap.com/benchmark
