This might turn into a real weblog, i.e. one written over several weeks as the investigation goes on. So let's start.

[A few weeks ago]

In our team I am also responsible for BW Statistics on the customer's landscape. The data is loaded weekly (on Sundays), and my only duty is to check that the loads went OK last Sunday. It takes a really marginal fraction of my time. Still, it happened quite regularly that one load (0BWTC_C02 - BW Statistics - OLAP) failed. I did not really have time for any deep investigation, so the only thing I did at that time was to reschedule this particular load to run after 5 others (0BWTC_C04, 0BWTC_C05, 0BWTC_C09, 0BWTC_C11, 0BWTC_C03).

This helped (no more regular failures), but...

[2007-01-14]

...the load still takes a very long time. This surprised me, and I decided to finally look into it and learn something from it.

Here are the statistics for the two loads:

| Cube | Records | Load time |
|---|---|---|
| 0BWTC_C02 | 343067 | 3h 6m 52s |
| 0BWTC_C03 | 729661 | 29m 1s |
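To compare the two loads on equal terms, the figures in the table can be turned into throughput (records per second). The snippet below is just a quick sketch of that arithmetic; the helper names `to_seconds` and `throughput` are my own and have nothing to do with BW itself.

```python
def to_seconds(hours, minutes, seconds):
    """Convert a duration given as h/m/s into plain seconds."""
    return hours * 3600 + minutes * 60 + seconds


def throughput(records, seconds):
    """Records loaded per second."""
    return records / seconds


# Figures copied from the load statistics table: (records, load time in seconds)
loads = {
    "0BWTC_C02": (343067, to_seconds(3, 6, 52)),
    "0BWTC_C03": (729661, to_seconds(0, 29, 1)),
}

rates = {cube: throughput(records, secs) for cube, (records, secs) in loads.items()}

for cube, rate in rates.items():
    print(f"{cube}: {rate:.1f} records/s")

# How many times slower 0BWTC_C02 is than 0BWTC_C03, per record
slowdown = rates["0BWTC_C03"] / rates["0BWTC_C02"]
print(f"0BWTC_C02 is about {slowdown:.1f}x slower per record")
```

Per record, the difference comes out even bigger than the raw load times suggest: roughly 31 records per second for 0BWTC_C02 against roughly 419 for 0BWTC_C03.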

A load that is about half the size in number of records takes more than 6 times as long! Something is wrong, and I decided to investigate.

[2007-01-21]

My basic assumption was that it is the extractor that takes so long, and that the problem might therefore be in its logic or in the tables (or their indexes) the data is read from.

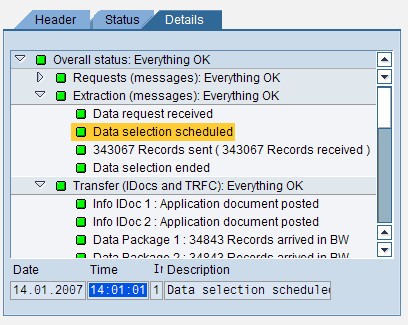

So I went to the Details tab of the last load to check how long it takes to extract the data. To my big surprise, I found that there are only 5 minutes between Data Selection and the return of the first Data Package.

So the problem should not be in extracting the data, but somewhere in the next steps. And indeed, I found that the longest step is Update (writing to the cube tables), which took 43 minutes for one Data Package.

So in the next episode I will need to figure out the reason for the slow updates (indexes, cube tables, buffering, etc.?) and how to resolve it. For the moment, I just increased the DataPackage max kB size a bit to see what this will do.