
former_member18


11-06-2006, 7:15 AM

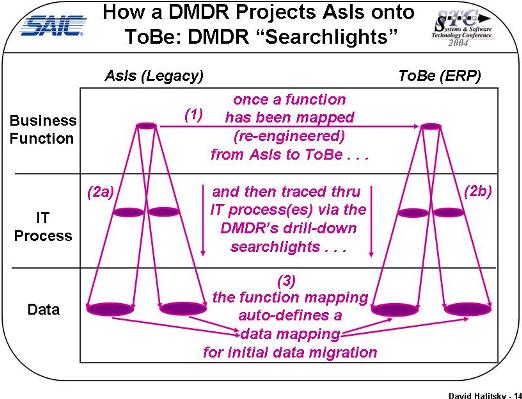

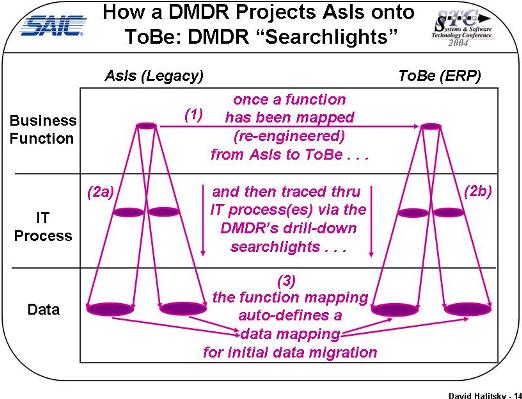

In the last installment of this blog (Part 8): The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... The Diachronic Metadata Repository as a Tool for Risk Reduction via Conflict-Prevention During Legac... I presented Figures 14 and 15 in order to try and show diagrammatically how and why a Diachronic Metadata Repository (DMDR) facilitates data mapping in a Legacy -> ERP conversion (LERCON) by auto-computing accurate solution sets from which final data mappings will be selected for initial data migraton, permanent external interfaces, and temporary internal interfaces. Figure 14  Figure 15

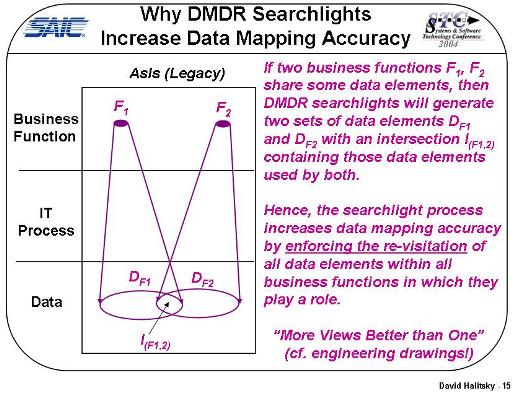

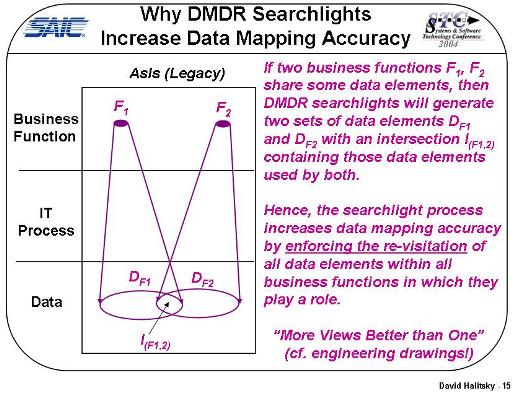

Figure 15

Although Figure 14 presents an oversimplified case, in which one AsIs Legacy Business Function has been mapped to one ToBe ERP Business Function, it nonetheless makes the basic point that needs to be made. If: a) AsIs Legacy Business Functions have been horizontally mapped to one or more ToBe ERP Business Functions (such as TBF1); b) AsIs Legacy Business Functions have been vertically mapped to AsIs Legacy IT Processes; and c) ToBe ERP Business Functions have also been vertically mapped to ToBe IT Processes (ideally in the ERP software vendor's own metadata repository), then a DMDR can use the horizontal mapping in (a) and the two vertical mappings in (b) and (c) to compute the "solution sets" from which analysts can select the final data mappings needed to support the mapping of each AsIs Legacy Business Function to its corresponding ToBe ERP Business Functions.

As Figure 15 shows, this iterative (function-by-function) approach to data mapping differs radically from traditional, non-iterative approaches, which simply try to map AsIs Legacy data elements onto ToBe ERP data elements using nothing more than the logical definitions of these elements found in the AsIs and ToBe data dictionaries. For this reason, most traditional business analysts, business process re-engineers, and business process experts will scoff at the proposed iterative approach as a waste of time. They will do so because this approach insists that each AsIs data element and each ToBe data element be revisited multiple times: each AsIs data element must be revisited in the context of each AsIs Business Function it supports, and each ToBe data element must be revisited in the context of each ToBe Business Function it supports. To most traditionalists, this repeated revisiting of data elements will seem pointless.
But in fact, nothing could be further from the truth. The iterative function-by-function approach to data mapping is actually the only way to guarantee that nothing important is overlooked during the data mapping process of a LERCON. To put the point another way: if you don't know how a given AsIs data element is used in each AsIs Business Function it supports, and how a given ToBe data element is used in each ToBe Business Function it supports, how can you claim to know how to map AsIs data elements to ToBe data elements effectively and accurately?

But that's OK: just keep doing it the way you've always done it. You know the drill: i) find an AsIs domain expert and try to get a day of his or her time; ii) find the corresponding ToBe domain expert and try to get the same day of his or her time; iii) have them cross-walk just "their" portions of the AsIs and ToBe data dictionaries, e.g. if they're vendor experts, have them walk just the vendor portions of the data dictionaries; if they're parts experts, have them walk just the parts portions, and so on. Yeah, we all know how very effective (i) through (iii) can be as the basic protocol for LERCON data mappings.
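To make the function-by-function idea more concrete, here is a minimal Python sketch. It is a hypothetical model, not the DMDR's actual implementation. It assumes that each IT process is linked to the data elements it uses, which is what lets the vertical mappings in (b) and (c) reach data elements at all. It treats a "solution set" simply as the cross product of the AsIs and ToBe elements reachable from one horizontally mapped pair of functions. All names (`Side`, `solution_sets`, `unreviewed_contexts`, AF1, AP1, VENDOR_NO, SUPPLIER_ID, etc.) are made up for illustration. The sketch also inverts the function-to-element mapping to show, for each data element, the function contexts in which it still has to be revisited.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import product

# All function, process, and element names below are hypothetical examples.


@dataclass
class Side:
    """One side (AsIs legacy or ToBe ERP) of a simplified, hypothetical DMDR model."""

    # Vertical mapping: business function -> IT processes that implement it.
    function_processes: dict[str, set[str]]
    # Assumed link (not described in the post): IT process -> data elements it uses.
    process_elements: dict[str, set[str]]

    def elements_for(self, function: str) -> set[str]:
        """Data elements reachable from a business function via its IT processes."""
        elements: set[str] = set()
        for process in self.function_processes.get(function, set()):
            elements |= self.process_elements.get(process, set())
        return elements


def solution_sets(
    horizontal: dict[str, set[str]], asis: Side, tobe: Side
) -> dict[tuple[str, str], set[tuple[str, str]]]:
    """For each horizontally mapped (AsIs function, ToBe function) pair, return the
    candidate (AsIs element, ToBe element) pairs that analysts select mappings from."""
    result: dict[tuple[str, str], set[tuple[str, str]]] = {}
    for asis_fn, tobe_fns in horizontal.items():
        asis_elems = asis.elements_for(asis_fn)
        for tobe_fn in tobe_fns:
            result[(asis_fn, tobe_fn)] = set(product(asis_elems, tobe.elements_for(tobe_fn)))
    return result


def unreviewed_contexts(side: Side, reviewed: set[tuple[str, str]]) -> dict[str, set[str]]:
    """Invert function -> elements into element -> functions, then report, per element,
    the business functions in whose context it has not yet been reviewed."""
    contexts: dict[str, set[str]] = defaultdict(set)
    for function in side.function_processes:
        for element in side.elements_for(function):
            contexts[element].add(function)
    gaps: dict[str, set[str]] = {}
    for element, functions in contexts.items():
        missing = {f for f in functions if (element, f) not in reviewed}
        if missing:
            gaps[element] = missing
    return gaps


# Made-up data: AsIs function AF1 mapped to TBF1 (loosely mirroring Figure 14's
# one-to-one case), plus a second AsIs function AF2 that reuses VENDOR_NO.
asis = Side(
    function_processes={"AF1": {"AP1", "AP2"}, "AF2": {"AP3"}},
    process_elements={
        "AP1": {"VENDOR_NO"},
        "AP2": {"VENDOR_NAME"},
        "AP3": {"VENDOR_NO", "PART_NO"},
    },
)
tobe = Side(
    function_processes={"TBF1": {"TP1"}},
    process_elements={"TP1": {"SUPPLIER_ID", "SUPPLIER_NAME"}},
)

candidates = solution_sets({"AF1": {"TBF1"}}, asis, tobe)
print(len(candidates[("AF1", "TBF1")]))  # 2 AsIs x 2 ToBe elements -> 4 candidate pairs

# A vendor-only dictionary crosswalk that looked at the AF1 context alone:
reviewed = {("VENDOR_NO", "AF1"), ("VENDOR_NAME", "AF1")}
print(unreviewed_contexts(asis, reviewed))  # VENDOR_NO and PART_NO still need review in AF2
```

The cross product is deliberately crude; whatever rules a real DMDR would use to narrow these candidates further are not described here. The second call illustrates the coverage argument: a crosswalk that reviewed VENDOR_NO only in the context of AF1 leaves its use in AF2 unexamined.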

