SAP Community: Technology Blogs by Members


tomas-krojzl


11-19-2017

4:49 PM

Be Prepared for Using Pacemaker Cluster for SAP HANA – Main Part

Introduction

I am probably stating the obvious when I say that every infrastructure deployment option needs to be properly tested before it can host productive workloads. This is even more important for High Availability clusters, as a poorly implemented cluster can cause more downtime than not using any clustering at all. The worst situation, which must not happen under any circumstances, is a cluster that causes data inconsistency or data loss.

Typical High Availability cluster testing starts with situations that can happen during normal operation, such as:

- Cluster stability

- Graceful failover

- Crash of primary application or server

- Crash of secondary application or server

- Patching and maintenance, etc.

These scenarios must behave as expected; otherwise, you had better not use the cluster at all.

Next, you should focus on testing more advanced scenarios, which typically include multi-level failures. These happen very rarely, and the goal here is not to ensure that the cluster protects against such failures, but to ensure that the cluster does not misbehave and does not damage the database.

The highest level of testing is to consider what a human System Administrator will do when he is called in the middle of the night to fix a failed cluster. Can he accidentally cause data loss and/or inconsistency in the SAP HANA database? What protections prevent him from inadvertently damaging the SAP HANA database?

This blog is about scenarios in which the System Administrator must be extremely careful, because otherwise he can accidentally cause data loss and inconsistency in the SAP HANA database.

Before we jump to the scenarios themselves, we need to set the stage and explain some basics.

Big thanks to Fabian Herschel and Peter Schinagl from SUSE for proofreading the blog.

For better readability, the whole blog is divided into the following parts:

- Be Prepared for Using Pacemaker Cluster for SAP HANA – Main Part (this blog)

- Be Prepared for Using Pacemaker Cluster for SAP HANA – Part 1: Basics (this blog)

- Be Prepared for Using Pacemaker Cluster for SAP HANA – Part 2: Failure of Both Nodes

Be Prepared for Using Pacemaker Cluster for SAP HANA – Part 1: Basics

How Pacemaker Cluster works with SAP HANA System Replication

In collaboration with SAP, SUSE developed the SAPHanaSR solution and released it as part of SLES for SAP Applications. The solution is based on Pacemaker Cluster, which automates failover between two SAP HANA databases that mirror each other. It was later adopted by Red Hat and is now jointly developed by both companies. Therefore, this whole blog applies equally to both operating systems.

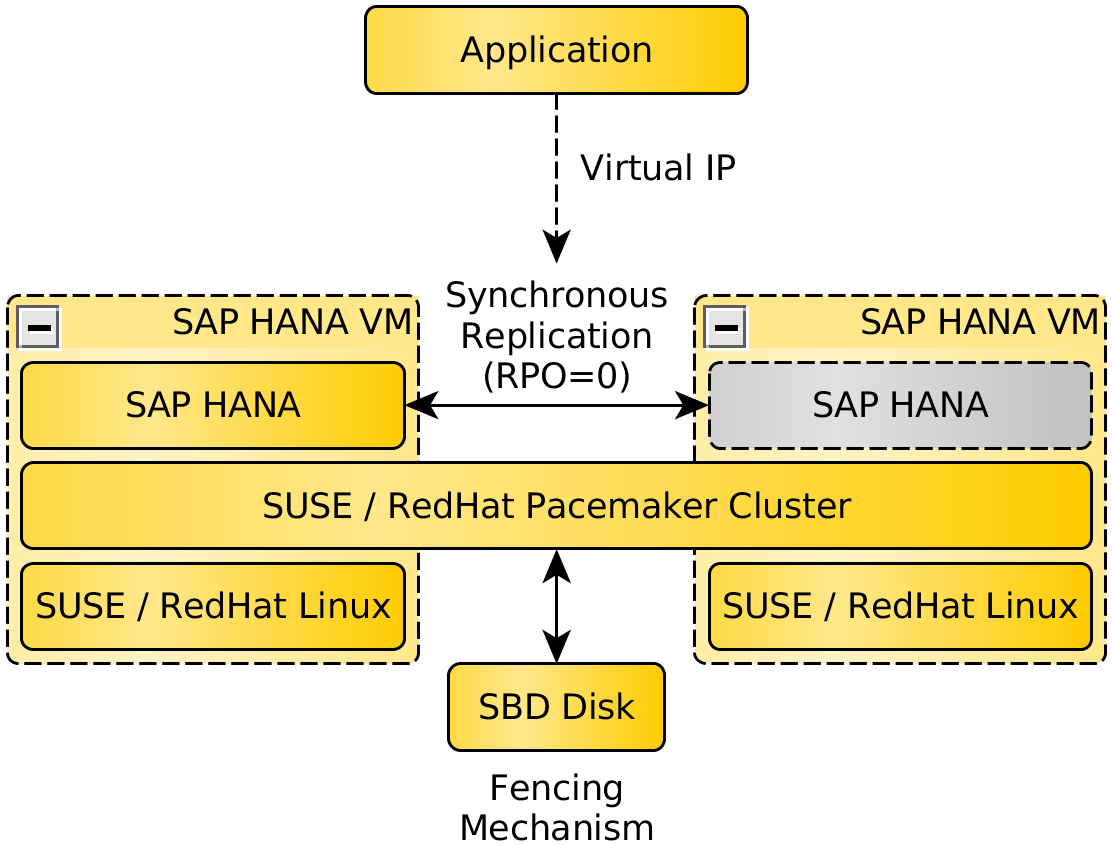

Pacemaker Cluster with SAP HANA System Replication as visualized below is based on two identical servers (VMs) each having one SAP HANA database. Both servers are bundled together by SUSE Pacemaker Cluster.

Figure 1 - Pacemaker Cluster for SAP HANA Architecture

The SAP HANA database on the primary server replicates its data to the SAP HANA database running on the secondary server. Replication is based on synchronous SAP HANA System Replication to ensure that no data is lost during failover. Both databases are running at the same time; however, only the primary database can serve customer workloads. The secondary database is either completely passive or, since SAP HANA 2.0, can be active in read-only mode.
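To make the difference between synchronous and asynchronous replication concrete, here is a toy Python model. It is a sketch, not how SAP HANA implements replication, and the class and method names are hypothetical. In `sync` mode the primary acknowledges a commit only after the secondary has received the change, so every acknowledged transaction survives a failover; in `async` mode the primary acknowledges first and ships later, so transactions still in flight are lost if the primary crashes.

```python
from dataclasses import dataclass, field


@dataclass
class ToyReplicatedDB:
    """Toy model of a primary/secondary pair; not SAP HANA internals."""
    mode: str  # "sync" or "async" (labels used only in this sketch)
    primary_log: list = field(default_factory=list)
    secondary_log: list = field(default_factory=list)
    pending: list = field(default_factory=list)  # shipped later in async mode
    acknowledged: list = field(default_factory=list)

    def commit(self, txn: str) -> None:
        self.primary_log.append(txn)
        if self.mode == "sync":
            # Sync: the secondary has the change before the client is told "OK".
            self.secondary_log.append(txn)
        else:
            # Async: acknowledge immediately, replicate in the background.
            self.pending.append(txn)
        self.acknowledged.append(txn)

    def ship_pending(self) -> None:
        """Background replication step (only relevant in async mode)."""
        self.secondary_log.extend(self.pending)
        self.pending.clear()

    def crash_primary_and_fail_over(self) -> list:
        """Return acknowledged transactions missing on the new primary."""
        self.pending.clear()  # in-flight changes die with the primary
        return [t for t in self.acknowledged if t not in self.secondary_log]


for mode in ("sync", "async"):
    db = ToyReplicatedDB(mode=mode)
    db.commit("T1")
    if mode == "async":
        db.ship_pending()  # T1 reached the secondary before the crash
    db.commit("T2")        # in async mode T2 is still in flight at the crash
    print(mode, "lost:", db.crash_primary_and_fail_over())
```

Running it prints `sync lost: []` and `async lost: ['T2']`: the client was told T2 was committed, yet it does not exist on the new primary.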

A failure of the primary SAP HANA database is automatically detected by Pacemaker Cluster. The cluster automatically shuts down the primary database (if it is still partially running) and activates the secondary database. It also relocates the virtual IP address so that all applications using the database can reconnect to the new primary SAP HANA database. Since all the data is already preloaded in the memory of the new primary database, this failover is very fast.

More details here:

https://www.suse.com/products/sles-for-sap/resource-library/sap-best-practices

https://access.redhat.com/articles/1466063
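The failover sequence described at the start of this section can be sketched as an ordered list of actions. The Python below is a simplified illustration with hypothetical names (`Node`, `fail_over`, a dictionary standing in for the virtual IP). In a real cluster these steps are carried out by the cluster's resource agents (for example the SAPHana agent seen in the attribute names later in this blog), not by custom code.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    hana_running: bool
    role: str  # "primary", "secondary" or "down"


def fail_over(old_primary: Node, secondary: Node, vip_owner: dict) -> list:
    """Simplified order of failover actions; returns a log of the steps."""
    steps = []
    # 1. Make sure the failed primary is really down (it may be half-alive).
    if old_primary.hana_running:
        old_primary.hana_running = False
        steps.append(f"stop HANA on {old_primary.name}")
    old_primary.role = "down"
    # 2. Promote the secondary; its data is already loaded in memory.
    secondary.role = "primary"
    steps.append(f"takeover on {secondary.name}")
    # 3. Move the virtual IP so applications reconnect to the new primary.
    vip_owner["vip"] = secondary.name
    steps.append(f"move virtual IP to {secondary.name}")
    return steps


vip = {"vip": "hana43"}
log = fail_over(Node("hana43", True, "primary"),
                Node("hana44", True, "secondary"), vip)
print(log)
```

The order matters: the old primary is stopped before the secondary is promoted, so there is never a moment in which two primaries accept writes.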

Importance of fencing

Under normal operation, the fencing mechanism is not actively used. The cluster nodes communicate over the network (corosync), and both sides of the cluster constantly update each other on the health status of the SAP HANA database on the given node.

The problem starts when one of the nodes stops responding. Let's assume the secondary server is suddenly unable to connect to the primary server. In such a case, the cluster on the secondary server has no way of knowing what happened; generally, two explanations are possible:

- Primary server is not responding because it crashed or is frozen

- Primary server is healthy, but it is not reachable due to a network issue

The problem is that in the first case the cluster should consider executing a failover to restore the service, while in the second case the failover must not happen, as otherwise SAP HANA would be active on both servers.

This situation is called split-brain (or dual primary) and is extremely dangerous. It is even more dangerous in this scenario because we are working with two independent SAP HANA databases, both of which can easily be active at the same time.

The business impact would be fatal: imagine that you write some transactions to the database running on the primary server and later other transactions to the database running on the secondary server, which is not aware of the changes written to the first database.

Now think about the other systems in the landscape: CRM having records that do not exist in ERP, etc. This would result in logical inconsistency cascading across all systems in the customer landscape. I believe it is now obvious that fixing such a situation would be very difficult and would have a huge impact on the business.

It is good to be paranoid when it comes to split-brain situations.

Pacemaker Cluster addresses this with a fencing technique called STONITH (Shoot The Other Node In The Head). This mechanism does exactly what the name suggests: if the nodes suddenly cannot communicate, one of them kills the other to ensure that both nodes are not active at the same time. The surviving node then serves the customer workloads.

More details here:

http://linux-ha.org/wiki/STONITH
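The core rule behind STONITH fits in a few lines: a node that loses contact with its peer may take over only after it has confirmed that the peer is powered off. The Python below is a toy model with hypothetical names (`decide`, `fence_ok`); real Pacemaker decisions also involve quorum, fencing delays and resource constraints, which are omitted here.

```python
def decide(peer_reachable: bool, fence_ok: bool) -> str:
    """Toy decision of the surviving node when its peer goes silent."""
    if peer_reachable:
        # Normal operation: corosync communication works, nothing to do.
        return "keep running"
    if not fence_ok:
        # We cannot prove the peer is dead; promoting now could create
        # a second primary (split-brain), so stay passive and retry fencing.
        return "wait, do not promote"
    # The peer is confirmed down, so it no longer matters whether it had
    # crashed or was only cut off by a network issue.
    return "promote local database"


for reachable, fenced in [(True, False), (False, False), (False, True)]:
    print(reachable, fenced, "->", decide(reachable, fenced))
```

This is why fencing resolves the ambiguity between the two cases listed earlier: the surviving node never has to guess why the peer is silent, because successful fencing makes both cases equivalent.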

Fencing can be implemented in multiple ways; however, the following two techniques are the most common:

- Node shutdown via IPMI (for most Intel-based servers), HMC (for IBM Power servers), or vCenter/VMware plugins (for VMware VMs)

- In case of an issue, the surviving node powers down the other node to ensure that it is not active. The main drawback is that the implementation depends on the hardware or VMware configuration in use. In some cases, this approach might be considered insecure because a password is stored unencrypted in the cluster configuration.

- SBD (Storage-Based Death) disk fencing is based on shared disk(s) provided from external source(s). Obviously, multiple SBD disks should not share the same single point of failure, and if they are provided over the network, then not over the cluster communication network (corosync).

- In case of an issue, the surviving node writes a “poison pill” to the disk, instructing the other node (if active) to commit suicide. The advantage of this approach is that it is generic and can be applied equally across different scenarios, including bare-metal and virtual solutions.

More details here:

http://clusterlabs.org/doc/crm_fencing.html

http://www.linux-ha.org/wiki/SBD_Fencing

https://www.suse.com/documentation/sle-ha-12/book_sleha/data/sec_ha_fencing_nodes.html
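The SBD “poison pill” can be pictured as a shared disk with one message slot per node. In the toy Python model below (a sketch with hypothetical classes, not the SBD on-disk format or the behavior of the `sbd` tool), the surviving node writes a `reset` message into the victim's slot, and the victim, which keeps polling its own slot, terminates itself when it reads the message. Real deployments additionally rely on a watchdog, so that a node too hung to read its slot is still reset.

```python
class ToySbdDisk:
    """Shared disk with one message slot per node (toy model)."""

    def __init__(self, nodes: list):
        self.slots = {name: "clear" for name in nodes}

    def write(self, target: str, message: str) -> None:
        self.slots[target] = message

    def read(self, node: str) -> str:
        return self.slots[node]


class ToyNode:
    def __init__(self, name: str, disk: ToySbdDisk):
        self.name = name
        self.disk = disk
        self.alive = True

    def fence(self, victim: str) -> None:
        # The surviving node never talks to the victim over the network;
        # it only leaves the poison pill on the shared disk.
        self.disk.write(victim, "reset")

    def poll(self) -> None:
        # Each node periodically checks its own slot.
        if self.alive and self.disk.read(self.name) == "reset":
            self.alive = False  # the "suicide" step


disk = ToySbdDisk(["hana43", "hana44"])
n43, n44 = ToyNode("hana43", disk), ToyNode("hana44", disk)
n44.fence("hana43")  # hana44 decides that hana43 must go
n43.poll()           # hana43 reads the pill on its next poll
print(n43.alive, n44.alive)  # False True
```

Because the message travels through storage rather than the cluster network, it still reaches the victim when corosync communication is broken, which is exactly the situation fencing has to handle.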

How does the cluster know it is safe to fail over

At this point, we need to dive deeper into how Pacemaker Cluster works internally with SAP HANA System Replication.

There are two SAP HANA cluster packages that automate SAP HANA System Replication failover:

- SAPHanaSR – automates failover for the following two SAP HANA single-node scenarios:

- SAP HANA SR performance-optimized infrastructure – where the secondary node is dedicated to fulfilling the High Availability function

- SAP HANA SR cost-optimized infrastructure – where the secondary node hosts an additional non-productive SAP HANA database

- SAPHanaSR-ScaleOut – automates failover for the SAP HANA scale-out scenario (at the time of writing, available only on SUSE Linux Enterprise Server for SAP Applications)

For the sake of simplicity, we will focus on the single-node package (SAPHanaSR) only. This package is designed to monitor and locally record multiple attributes for each node:

- Cluster Resource State (hana_<sid>_clone_state)

- Valid values: PROMOTED, DEMOTED, WAITING, UNDEFINED

This attribute describes the actual status of the local SAP HANA cluster resource.

- Remote Node Hostname (hana_<sid>_remoteHost)

- Hostname of remote server (“the other node”).

- SAP HANA Roles (hana_<sid>_roles)

- String describing the health status of the local SAP HANA database. It includes:

- Return Code from landscapeHostConfiguration.py

- HANA role – primary/secondary

- Nameserver role

- Index server roles

- SAP HANA Site Name (hana_<sid>_site)

- Alias of the local SAP HANA database (as registered when replication was configured).

- SAP HANA System Replication mode (hana_<sid>_srmode)

- Valid values: sync, syncmem

Configured SAP HANA replication mode. Replication mode async should not be used in a High Availability scenario, as it can lead to data loss during failover.

- SAP HANA System Replication status (hana_<sid>_sync_state)

- Valid values: PRIM, SOK, SFAIL

Failover to the secondary cluster node can happen only if the replication status on the secondary node is SOK. Any other status means that replication was not operational when the primary crashed, so the data in the secondary database is not in sync with the primary database; in this case, failover will not happen.

- Local Node Hostname (hana_<sid>_vhost)

- Hostname used during SAP HANA installation; this can be either the local hostname or any other "virtual" hostname.

- Last Primary Timestamp – LPT value (lpa_<sid>_lpt)

- Value is either the timestamp at which the SAP HANA database was last seen as primary, or a low “static” value indicating that the database is not primary.

This attribute prevents a dual primary situation. If the cluster node attributes on both nodes show the last state of the SAP HANA database as primary (this can happen after a multi-level failure; see the next part for details), the higher LPT value is used to determine which SAP HANA database was the “last primary”. This database is started, while the other database is kept down.

- Resource Weight (master-rsc_SAPHana_<SID>_HDB<SN>)

- Internal technical cluster attribute used to control the failover process. The node with the highest weight is promoted to become primary. The weight is calculated from the other attributes.

These attributes are updated at regular intervals and stored locally as part of the cluster node status.
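To illustrate how these attributes feed into the failover decision, the Python sketch below encodes two of the rules described above: a secondary may be promoted only if its `sync_state` is `SOK` and its `srmode` is `sync` or `syncmem`, and if both nodes claim to have been primary, the node with the higher LPT wins. This is a deliberately simplified model with hypothetical function names; the real SAPHana resource agent evaluates more attributes (such as roles and resource weights) and additional timing rules.

```python
def may_promote_secondary(attrs: dict, sid: str = "hac") -> bool:
    """Simplified gate: only an in-sync, synchronously replicated secondary."""
    sync_state = attrs.get(f"hana_{sid}_sync_state")
    srmode = attrs.get(f"hana_{sid}_srmode")
    # SFAIL (or a missing value) means the secondary may be missing data.
    return sync_state == "SOK" and srmode in ("sync", "syncmem")


def last_primary(a_name: str, a_lpt: int, b_name: str, b_lpt: int) -> str:
    """Resolve a dual-primary claim: the higher LPT was primary last."""
    if a_lpt == b_lpt:
        # This toy model refuses to guess; a human must decide.
        raise ValueError("equal LPT values, manual check required")
    return a_name if a_lpt > b_lpt else b_name


secondary = {"hana_hac_sync_state": "SOK", "hana_hac_srmode": "sync"}
print(may_promote_secondary(secondary))  # True
print(may_promote_secondary({**secondary, "hana_hac_sync_state": "SFAIL"}))  # False
# Hypothetical dual-primary case: both nodes carry real timestamps.
print(last_primary("hana43", 1439227830, "hana44", 1439227900))  # hana44
```

The SFAIL case is the important one for the administrator: the cluster refuses the takeover precisely because promoting that database would silently discard data that was committed on the old primary.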

Example of internal cluster states during normal operation (hana43 being primary):

```
Node Attributes:
* Node hana43-hb:
    + hana_hac_clone_state : PROMOTED
    + hana_hac_remoteHost : hana44
    + hana_hac_roles : 4:P:master1:master:worker:master
    + hana_hac_site : TOR-HAC-00-NODE1
    + hana_hac_srmode : sync
    + hana_hac_sync_state : PRIM
    + hana_hac_vhost : hana43
    + lpa_hac_lpt : 1439227830
    + master-rsc_SAPHana_HAC_HDB00 : 150
* Node hana44-hb:
    + hana_hac_clone_state : DEMOTED
    + hana_hac_remoteHost : hana43
    + hana_hac_roles : 4:S:master1:master:worker:master
    + hana_hac_site : TOR-HAC-00-NODE2
    + hana_hac_srmode : sync
    + hana_hac_sync_state : SOK
    + hana_hac_vhost : hana44
    + lpa_hac_lpt : 30
    + master-rsc_SAPHana_HAC_HDB00 : 100
```

All these attributes are used to determine the actual cluster health and are considered before a failover decision is taken.
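When checking these values by hand (for example in the middle of the night), it can help to turn the node attribute listing into structured data. The Python sketch below (Python 3.8+) parses text in the layout of the example above into one dictionary per node and splits the `roles` string into its leading fields. The `SAMPLE` text is a shortened copy of that example; the parser and helper names are hypothetical and have only been checked against this layout, not against every version of the cluster status output.

```python
import re

SAMPLE = """\
Node Attributes:
* Node hana43-hb:
    + hana_hac_roles : 4:P:master1:master:worker:master
    + hana_hac_sync_state : PRIM
    + lpa_hac_lpt : 1439227830
* Node hana44-hb:
    + hana_hac_roles : 4:S:master1:master:worker:master
    + hana_hac_sync_state : SOK
    + lpa_hac_lpt : 30
"""

NODE_RE = re.compile(r"^\s*\*\s+Node\s+(\S+?):\s*$")
ATTR_RE = re.compile(r"^\s*\+\s+(\S+)\s*:\s*(\S+)")


def parse_node_attributes(text: str) -> dict:
    """Return {node: {attribute: value}} from a node-attribute listing."""
    nodes, current = {}, None
    for line in text.splitlines():
        if m := NODE_RE.match(line):
            current = nodes.setdefault(m.group(1), {})
        elif current is not None and (m := ATTR_RE.match(line)):
            current[m.group(1)] = m.group(2)
    return nodes


def split_roles(roles: str) -> dict:
    """First field: landscapeHostConfiguration.py return code; second: P/S."""
    rc, sr_role, *rest = roles.split(":")
    # The remaining fields carry the nameserver and index server roles.
    return {"rc": int(rc), "sr_role": sr_role, "server_roles": rest}


attrs = parse_node_attributes(SAMPLE)
for node, values in attrs.items():
    roles = split_roles(values["hana_hac_roles"])
    print(node, roles["sr_role"], values["hana_hac_sync_state"],
          values["lpa_hac_lpt"])
```

For the sample this prints one line per node (`hana43-hb P PRIM 1439227830` and `hana44-hb S SOK 30`), which makes it easy to spot at a glance whether the secondary is in sync and which node holds the higher LPT.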
